Abstract

Statistical graphics play an important role in exploratory data analysis, model checking and diagnosis. With high dimensional data, this often means plotting low-dimensional projections, for example, in classification tasks projection pursuit is used to find low-dimensional projections that reveal differences between labelled groups. In many contemporary data sets the number of observations is relatively small compared to the number of variables, which is known as a high dimension low sample size (HDLSS) problem. This paper explores the use of visual inference on understanding low-dimensional pictures of HDLSS data. Visual inference helps to quantify the significance of findings made from graphics. This approach may be helpful to broaden the understanding of issues related to HDLSS data in the data analysis community. Methods are illustrated using data from a published paper, which erroneously found real separation in microarray data, and with a simulation study conducted using Amazon’s Mechanical Turk.

Similar content being viewed by others

References

Amazon (2010) Mechanical Turk. http://aws.amazon.com/mturk/

Buja A, Wolgang R (2005) Calibration for simultaneity: (re)sampling methods for simultaneous inference with applications to functional estimation and functional data. Tech. rep. http://stat.wharton.upenn.edu/buja/PAPERS/paper-sim.pdf

Buja A, Cook D, Hofmann H, Lawrence M, Lee E, Swayne D, Wickham H (2009) Statistical inference for exploratory data analysis and model diagnostics. R Soc Philoso Trans A 367(1906):4361–4383

Comon P (1994) Independent component analysis: a new concept? Sig Process 36(3):287–314

Donoho D, Jin J (2008) Higher criticism thresholding: optimal feature selection when useful features are rare and weak. Proc Natl Acad Sci U S A 105:14,790–14,795

Donoho D, Jin J (2009) Feature selection by higher criticism thresholding achieves the optimal phase diagram. Philos Trans R Soc A 367:4449–4470

Dudoit S, Fridlyand J, Speed T (2002) Comparison of discrimination methods for the classification of tumors using gene expression data. J Am Stat Assoc 97(457):77–87

Friedman JH, Tukey JW (1974) A projection pursuit algorithm for exploratory data analysis. IEEE Trans Comput c–23:881–890

Hall P, Marron J, Neeman A (2005) Geometric representation of high dimension, low sample size data. J R Stat Soc B 67:427–444

Hennig C (2014) fpc: Flexible procedures for clustering. http://CRAN.R-project.org/package=fpc. R package version 2.1-7

Huber PJ (1985) Projection pursuit. Ann Stat 13:435–475

Johnson RA, Wichern DW (2002) Applied multivariate statistical analysis, 5th edn. Prentice-Hall, Englewood Cliffs

Jung S, Sen A, Marron JS (2012) Boundary behavior in high dimension, low sample size asymptotics of PCA. J Multivar Anal 109:190–203

Lee EK, Cook D (2010) A projection pursuit index for large p small n data. Stat Comput 20(3):381–392

Majumder M, Hofmann H, Cook D (2013) Validation of visual statistical inference, applied to linear models. J Am Stat Assoc 108(503):942–956

Marron JS, Todd MJ, Ahn J (2007) Distance weighted discrimination. J Am Stat Assoc 480:1267–1271

R Core Team (2013) R: A language and environment for statistical computing. R Foundation for Statistical Computing, Vienna. http://www.R-project.org/

Ripley BD (1996) Pattern recognition and neural networks. Cambridge University Press, New York

Roweis S, Saul L (2000) Nonlinear dimensionality reduction by locally linear embedding. Science 290:2323–2326

Roy Chowdhury N, Cook D, Hofmann H, Majumder M (2012) Where’s Waldo: looking closely at a lineup. Tech. Rep. 2, Iowa State University, Department of Statistics. http://www.stat.iastate.edu/preprint/articles/2012-02.pdf

Toth A, Varala K, Newman T, Miguez F, Hutchison S, Willoughby D, Simons J, Egholm M, Hunt J, Hudson M, Robinson G (2007) Wasp gene expression supports an evolutionary link between maternal behavior and eusociality. Science 318:441–444

Toth A, Varala K, Henshaw M, Rodriguez-Zas S, Hudson M, Robinson G (2010) Brain transcriptomic analysis in paper wasps identifies genes associated with behaviour across social insect lineages. Proc R Soc Biol Sci B 277:2139–2148

Wickham H (2009) ggplot2: Elegant graphics for data analysis. Springer, New York. http://had.co.nz/ggplot2/book

Wickham H, Cook D, Hofmann H, Buja A (2011) tourr: An R package for exploring multivariate data with projections. J Stat Softw 40(2):1–18. http://www.jstatsoft.org/v40/i02/

Witten D, Tibshirani R (2011) Penalized classification using Fisher’s linear discriminant. J R Stat Soc Ser B (Stat Methodol) 73(5):753–772

Yata K, Aoshima M (2011) Effective PCA for high dimension, low sample size data with noise reduction via geometric representations. J Multivar Anal 105:193–215

Acknowledgments

This work was funded by National Science Foundation grant DMS 1007697. All figures were made using the R (R Core Team 2013) package ggplot2 (Wickham 2009). The authors extend their sincere thanks to the associate editor and two reviewers for their helpful comments that resulted in an improved final version.

Author information

Authors and Affiliations

Corresponding author

Appendix

Appendix

1.1 Solution

-

The solution to the lineup at Fig. 2 is Plot 16.

-

The solution to the lineup at Fig. 3 is Plot 8.

-

The solution to the lineup at Fig. 6 is Plot 17.

-

The solution to the lineup at Fig. 7 is Plot 20.

1.2 Choice of dimensions

The experiment is set up with the three factors separation, dimension and projection dimension. To decide on the levels of dimension to use, we considered the distribution of the absolute difference of the sample group means, for data with two groups, no separation and projection dimension \(d=1\). The same levels are used for data with 3 groups, \(d=2\), and for data with separation.

Let \({\mathbf{X}}_{ij}\) denote the \(j\)th observation in the \(i\)th group where \(j = 1, \dots , n; i=1, \ldots , g\). The \({\mathbf{X}}_{ij}\)’s are random noise, generated by drawing samples from a standard normal distribution. For this experiment, \(g = 2\) and \(n = 15\). The difference between the group means is given by \(\overline{\text{ X }}_{1.} - \overline{\text{ X }}_{2.}\) and

Let \(\text{ U } = |\overline{\text{ X }} _{1.} - \overline{\text{ X }}_{2.}|\) where \(\text{ U } \sim \hbox {Half Normal}\) with scale parameter \( \sigma = \sqrt{2/15}\). The expectation and the variance of \(\text{ U }\) are \(E(\text{ U } ) = \sigma \sqrt{2/\pi }\) and \(Var(\text{ U }) = \sigma ^2 (1 - 2/\pi )\), respectively.

For \(p\) dimensions, consider \(p\) independent samples from the same distribution, denoted as

where \({\mathbf{X}} _{mij}\) is the \(j\)th observation in the \(i\)th group for the \(m\)th dimension. The difference between the two group means projected into one dimension, is the sum over \(p\) dimensions of the absolute difference between the means:

and by independence it follows that

Thus we expect to find this amount of separation between the projected sample means, for data sampled from populations with the same means.

Now consider data where there is some separation (equal to \(2c)\) between the population means:

giving \(\overline{\text{ Z }}_{1.} - \overline{\text{ Z }}_{2.} \sim \hbox {Normal}(2c, 2/15)\). Then define \(\text{ Z } = |\overline{\text{ Z }}_{1.} - \overline{\text{ Z }}_{2.}|\) where \({\text{ Z }} \sim \hbox {Folded Normal Distribution}\) with scale parameter \( \sigma = \sqrt{2/15}\). The expectation and the variance of \(\text{ Z }\) can be calculated to be:

Suppose that only one of the \(p\) dimensions is simulated from this distribution, and all of the rest are simulated from populations having identical means. Define \(\text{ V }\) as the sum of the absolute differences of the mean with one dimension of real separation as

Then, by independence, it follows that:

In this experiment, \(c = 3\) and \(\sigma ^2 = 2/15\). Therefore,

Hence,

As dimension \(p\) increases for a fixed \(n\), the spread of both U and V increases by a factor of \(p\). The means of U and V also increase with a factor of \(p\) but the expected value of the difference between U and V stays constant and is independent of dimension (\(p\)).

Two \(p\)-dimensional datasets are generated with 30 observations in each dimension. The datasets are then divided into two groups with 15 observations in each group. For one set, data is obtained from random noise and hence there is no real separation between the two groups. But for the other set, one dimension among these \(p\) is adjusted so that the data have some real separation between the groups in that dimension. The absolute difference of the means for each group in each of these \(p\) dimensions is considered for both datasets. The absolute difference is considered as we are concerned with projections. These absolute differences between the groups are then summed over all the dimensions to obtain the absolute difference of means for the data. This process is repeated 1,000 times. These 1,000 sum of absolute differences are then plotted for the different values of \(p\).

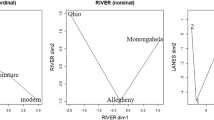

Figure 12 shows the distribution of sum of absolute difference of means for data with and without separation for different dimensions. The distributions of data with and without separation are shown in brown and green respectively. The area of the distribution of pure noise which is above the 5th percentile of the distribution of data with separation is shown in dark purple. Hence a 5 % error is allowed and let the area of the distribution of U greater than the 5th percentile of V be denoted by \(\delta \). Mathematically,

where \(\text{ V }_{\alpha }\) is the \(100\alpha \)th percentile of V, where \(\alpha = 0.05\). It can be seen that the dark purple region increases with dimension (\(p\)). This indicates that as dimension increases, the distributions of data with or without separation gets closer. Hence it gets harder to detect real differences with higher dimensions. Fixing the area of the dark purple region (\(\delta \)) and calculating the dimensions to obtain the required region provides the choice of levels of dimension used in the experiment.

Plot showing the distribution of the sum of absolute difference of means for data with and without separation for different dimensions. The distributions of data with real separation (V) and purely noise data (U) are shown in brown and green, respectively with the dark purple line showing the 5th percentile of V. The dark purple area shows the area of U which is greater than the 5th percentile of V. The dark purple region (\(\delta \)) increases as dimension (\(p\)) increases (color figure online)

The various values of \(\delta \) are chosen such that the distributions has no separation (\(\delta \approx 0\)) or has 1, 5, 10 and 20 % common region. For each value of \(\delta \), the procedure is repeated 100 times and Table 5 shows the summaries of the dimension (\(p\)) for each value of \(\delta \).

Rights and permissions

About this article

Cite this article

Roy Chowdhury, N., Cook, D., Hofmann, H. et al. Using visual statistical inference to better understand random class separations in high dimension, low sample size data. Comput Stat 30, 293–316 (2015). https://doi.org/10.1007/s00180-014-0534-x

Received:

Accepted:

Published:

Issue Date:

DOI: https://doi.org/10.1007/s00180-014-0534-x