Abstract

This paper discusses approximations of continuous and mixed-integer non-linear optimization problems via piecewise linear functions. Various variants of circle cutting problems are considered, where the non-overlap of circles impose a non-convex feasible region. While the paper is written in an “easy-to-understand” and “hands-on” style which should be accessible to graduate students, also new ideas are presented. Specifically, piecewise linear functions are employed to yield mixed-integer linear programming problems which provide lower and upper bounds on the original problem, the circle cutting problem. The piecewise linear functions are modeled by five different formulations, containing the incremental and logarithmic formulations. Another variant of the cutting problem involves the assignment of circles to pre-defined rectangles. We introduce a new global optimization algorithm, based on piecewise linear function approximations, which converges in finitely many iterations to a globally optimal solution. The discussed formulations are implemented in GAMS. All GAMS-files are available for download in the Electronic supplementary material. Extensive computational results are presented with various illustrations.

Similar content being viewed by others

Notes

The GAMS-file cutting_NLP.gms, used to compute Table 2, is available in the Electronic supplementary material of this article.

Tightest in the sense of minimizing the maximal distance, cf. Sect. 7 and “Appendix 1”.

“Refining the approximation” refers to the addition of breakpoints while the previous breakpoints remain untouched.

The GAMS-file cutting_piecewise.gms, used to compute Table 6, is available in the Electronic supplementary material of this article.

The GAMS-file cutting_piecewise.gms, used to compute Table 7, is available in the Electronic supplementary material of this article.

The GAMS-file cutting_assignment.gms, used to compute Table 9, is available in the Electronic supplementary material of this article.

The GAMS-file cutting_assignment_MILP.gms, used to compute Table 10, is available in the Electronic supplementary material of this article.

The GAMS-file cutting_discrete.gms, used to compute Table 11, is available in the Electronic supplementary material of this article.

References

Beale ELM (1963) Two transportation problems. In: Proceedings of the third international conference on operational research 1963, Paris and English Universities Press, London, Dunod, pp 780–788

Beale ELM, Tomlin JA (1970) Special facilities in a general mathematical programming system for nonconvex problem using ordered sets of variables. In: Lawrence J (ed) Proceedings of the fifth international conference on operational research 1969, Tavistock Publishing, London, pp 447–454

Beale EML, Forrest JJH (1976) Global optimization using special ordered sets. Math Program 10:52–69

Cerisola S, Latorre JM, Ramos A (2012) Stochastic dual dynamic programming applied to nonconvex hydrothermal models. Eur J Oper Res 218(3):687–697

Correa-Posada CM, Sanchez-Martin P (2014) Gas network optimization: a comparison of piecewise linear models. Optimization. http://www.optimization-online.org/DB_HTML/2014/10/4580.html

Frank S, Rebennack S (2012) A primer on optimal power flow: theory, formulation, and practical examples. CSM working paper

Frank SM, Rebennack S (2015) Optimal design of mixed AC-DC distribution systems for commercial buildings: a nonconvex generalized Benders decomposition approach. Eur J Oper Res 242(3):710–729

Geißler B (2011) Towards globally optimal solutions for MINLPs by discretization techniques with applications in gas network optimization. Dissertation, Friedrich-Alexander-Universität Erlangen-Nürnberg, Erlangen-Nürnberg, Germany

Geißler B, Kolb O, Lang J, Leugering G, Martin A, Morsi A (2011) Mixed integer linear models for the optimization of dynamical transport networks. Math Methods Oper Res 73:339–362

Hettich R, Kortanek KO (1993) Semi-infinite programming. SIAM Rev 35:380–429

Horst R, Pardalos PM, Thoai NV (2000) Introduction to global optimization, 2nd edn. Kluwer, London

Kallrath J (2009) Cutting circles and polygons from area-minimizing rectangles. J Global Optim 43:299–328

Kallrath J, Rebennack S (2014a) Computing area-tight piecewise linear overestimators, underestimators and tubes for univariate functions. In: Butenko S, Floudas CA, Rassias TM (eds) Optimization in science and engineering. Springer, Berlin, pp 273–292

Kallrath J, Rebennack S (2014b) Cutting ellipses from area-minimizing rectangles. J Global Optim 59(2–3):405–437

Keha AB, de Farias IR, Nemhauser GL Jr (2004) Models for representing piecewise linear cost functions. Oper Res Lett 32:44–48

Keha AB, de Farias IR, Nemhauser GL Jr (2006) A branch-and-cut algorithm without binary variables for nonconvex piecewise linear optimization. Oper Res 54(5):847–858

Koch T, Achterberg T, Andersen E, Bastert O, Berthold T, Bixby RE, Danna E, Gamrath G, Gleixner AM, Heinz S, Lodi A, Mittelmann H, Ralphs T, Salvagnin D, Steffy DE, Wolter K (2011) MIPLIB 2010. Math Program Comput 3(2):103–163

Li X, Tomasgard A, Barton PI (2011) Nonconvex generalized Benders decomposition for stochastic separable mixed-integer nonlinear programs. J Optim Theory Appl 151(3):425–454

Li X, Chen Y, Barton PI (2012a) Nonconvex generalized Benders decomposition with piecewise convex relaxations for global optimization of integrated process design and operation problems. Ind Eng Chem Res 51(21):7287–7299

Li X, Tomasgard A, Barton PI (2012b) Decomposition strategy for the stochastic pooling problem. J Global Optim 4(4):765–790

Lodi A, Tramontani A (2013) Performance variability in mixed-integer programming, chapter 2, pp 1–12. INFORMS

Misener R, Floudas CA (2014) Antigone: Algorithms for continuous/integer global optimization of nonlinear equations. J Global Optim 59(2–3):503–526

Padberg M (2000) Approximating separable nonlinear functions via mixed zero–one programs. Oper Res Lett 27:1–5

Pardalos PM, Rosen JB (1987) Constrained global optimization: algorithms and applications. Lecture notes in computer science. Springer, Berlin

Pereira MVF, Pinto LMVG (1991) Multi-stage stochastic optimization applied to energy planning. Math Program 52:359–375

Rebennack S (2016) Combining sampling-based and scenario-based nested benders decomposition methods: application to stochastic dual dynamic programming. Math Program 156(1):343–389

Rebennack S, Kallrath J (2015a) Continuous piecewise linear delta-approximations for bivariate and multivariate functions. J Optim Theory Appl 167(1):102–117

Rebennack S, Kallrath J (2015b) Continuous piecewise linear delta-approximations for univariate functions: computing minimal breakpoint systems. J Optim Theory Appl 167(2):617–643

Rebennack S, Kallrath J, Pardalos PM (2009) Column enumeration based decomposition techniques for a class of non-convex MINLP problems. J Global Optim 43(2–3):277–297

Rosen JB, Pardalos PM (1986) Global minimization of large-scale constrained concave quadratic problems by separable programming. Math Program 34:163–174

Sherali HD (2001) On mixed-integer zero–one representations for separable lower-semicontinuous piecewise-linear functions. Oper Res Lett 28:155–160

Tomlin JA (1988) Special ordered sets and an application to gas supply operating planning. Math Program 45:69–84

Vielma JP, Nemhauser G (2011) Modeling disjunctive constraints with a logarithmic number of binary variables and constraints. Math Program 128:49–72

Vielma JP, Ahmed S, Nemhauser G (2010) Mixed-integer models for nonseparable piecewise-linear optimization: unifying framework and extensions. Oper Res 58(2):303–315

Acknowledgments

The author thanks Ed Klotz (IBM CPLEX) and two anonymous reviewers for their valuable suggestions.

Author information

Authors and Affiliations

Corresponding author

Electronic supplementary material

Below is the link to the electronic supplementary material.

Appendices

Appendix 1: A general properties of approximator systems

In this appendix, we provide some definitions for terminology and we generalize some of the concepts used in Sects. 3–7.

We are interested in the following non-linear optimization problem:

with continuous functions \(f\,{:}\,\mathbb {D}\rightarrow \mathbb {R}, g\,{:}\,\mathbb {D} \rightarrow \mathbb {R}^{m_{1}}\) and \(h\,{:}\,\mathbb {D}\rightarrow \mathbb {R}^{m_{2}}\). We also allow for special cases when the number of equality constraints, \(m_1\), is zero, or the number of inequality constraints, \(m_2\), is zero. We are particularly interested in those cases where problem (54)–(57) is a non-convex optimization problem, for example, f(x) is a non-convex function in x, and/or constraint set (55)–(56) defines a non-convex feasible region.

Notice that integrality on the decision variable x can be enforced via special non-convex constructs (Horst et al. 2000). Thus, (NOP) models the general class of mixed-integer non-linear programming problems where lower and upper bounds on all decision variables are present. With that, the continuous NLP cutting problems \((\mathcal {C}^\mathrm{length})\) and \((\mathcal {C}^\mathrm{area})\) as well as the MINLP assignment–cutting problem \((\mathcal {A})\) are special cases of (NOP).

We are not interested in solving problem (54)–(57) to global optimality. In fact, we assume that there are global solvers available, which can solve this optimization problem (or variations of it) to global optimality. Rather, we are interested in finding linear \( \varepsilon \)-approximations of the optimization problem (54)–(57) in the following sense:

Definition 1

(\(\varepsilon \)-approximated problem) Let \(x^*\) be a globally optimal solution to (54)–(57) and \(| \cdot |\) denote the absolute value function. We call an optimization problem an \(\varepsilon \) -approximated problem, iff for any optimal solution \(y^*\) of the \(\varepsilon \)-approximated problem, \(y^*\) satisfies the following properties:

Linear \(\varepsilon \)-approximations satisfying properties \(P_{1}\)–\(P_{3}\) often require non-convex and non-concave piecewise linear functions, which can be expressed via linear functions and breakpoints. Thus, one obtains an MILP, approximating the non-linear optimization problem (54)–(57). The size of the resulting MILP depends crucially on the number of breakpoints and one can expect that the MILP has significantly more variables than the original (MI)NLP (54)–(57).

The definition of the \(\varepsilon \)-approximated problem assumes that both the original non-linear optimization problem as well as the approximated problem are feasible. However, to safely detect infeasibility of the non-linear optimization problem (54)–(57) is an interesting problem by itself. Particularly, for an \(\varepsilon \)-approximated problem, one might demand the additional property

If an optimization problem is infeasible, then we use the convention for minimization problems that the “optimal” objective function value is infinity, e.g., \(f(x^{*})=\infty \). We note that feasible solutions to the \(\varepsilon \)-approximated problem might not be feasible to the (NOP). This relaxation enables us to obtain valid lower bounds on the optimal objective function value of (NOP).

Let us recall the definition of a \(\delta \)-approximator (in n-dimensions)

Definition 2

(\(\delta \)-approximator, Rebennack and Kallrath 2015a) Let \(f{:}\, \mathbb {D} \rightarrow \mathbb {R}\) be a function on the compactum \(\mathbb {D}\) and let scalar \(\delta > 0\). A piecewise linear, continuous function \(\ell \,{:}\,\mathbb {D} \rightarrow \mathbb {R}\) is called a \(\delta \) -approximator for f, iff the following property holds

as well as a \(\delta \)-underestimator and a \(\delta \)-overestimator

Definition 3

(\(\delta \)-underestimator/\(\delta \)-overestimator, Rebennack and Kallrath 2015a) Let scalar \(\delta > 0\). We call function \(\ell \,{:}\, \mathbb {D} = D_1 \rightarrow \mathbb {R}\) a \(\delta \) -underestimator of function \(f\,{:}\,\mathbb {D} \rightarrow \mathbb {R}\), iff condition (58) is satisfied along with

We call function \(\ell \) a \(\delta \) -overestimator of function f, iff \(-\ell \) is a \(\delta \)-underestimator of \(-f\).

The approximation quality of the \(\delta \)-approximators, \(\delta \)-underestimators and \(\delta \)-overestimators are defined through the maximal absolute difference between the function and the approximators over the given compactum \(\mathbb {D}\). Later in this appendix, we see how that is useful when approximating (NOP). However, other quality measures for approximators exist, for instance, the area between the approximator and the function (Kallrath and Rebennack 2014a).

We call a \(\delta \)-approximator, \(\delta \)-underestimator and \(\delta \)-overestimator tight, if there is no approximator, under- or overestimator with the same number of breakpoints having a maximal absolute function deviation smaller than \(\delta \).

Next, we apply \(\delta \)-approximator functions towards NLP (54)–(57). We obtain

Theorem 1

Given is problem (54)–(57). For a \(\delta \)-approximator \(\ell \) over \(\mathbb {D}\) for objective function f with \(\delta := \frac{\varepsilon }{2}\), any optimal solution of

satisfies properties \(P_1\)–\(P_3\). Furthermore, (60)–(63) satisfies \(P_4\). Replacing \(\ell \) with an \(\varepsilon \)-underestimator for f yields the same properties.

Proof

Assume that (60)–(63) is infeasible. As the feasible region of (61)–(63) is identical to the feasible region of (55)–(57), one obtains \(P_4\).

Now, assume that (60)–(63) is feasible. For \(P_3\), let \(y^*\) be an optimal solution to (60)–(63) and \(x^*\) be an optimal solution to (54)–(57). Assume that

where the closed ball is defined as

By (58), we obtain

By assumption, \(\overline{B}_\delta \big ( f(y^*) \big ) \cap \overline{B}_\delta \big ( f(x^*) \big ) = \emptyset \) and because \(f(x^*) \le f(y^*)\) due to the optimality of \(x^*\), we have that \(\ell (x^*) < \ell (y^*)\) which contradicts optimality of \(y^*\).

We have that \(f(y^*) - \ell (y^*) \le \varepsilon \) which implies \(f(y^*) - f(x^*) \le \epsilon \). \(\square \)

One might expect that \(x^* = y^*\) in most cases. However, note that \(x^*\) does not even have to be “close” to \(y^*\) in general.

Theorem 1 shows that if the “difficult” non-linear relations only occur in the objective function, then linear \(\varepsilon \)-approximations work fine to approximate the original non-linear optimization problem. However, if non-linear terms occur in difficult to handle constraints, such as equality constraints, we rather resort to under- and overestimators.

By relaxing the feasible region (outer approximation), lower bounds on the optimal objective function of the original problem can be calculated. This is formalized in the next

Theorem 2

(Outer approximation) Given is problem (54)–(57). With functions \(\ell _-, g_{i-}, h_{j-}\) being \(\varepsilon \)-underestimators for \(f, g_i\) and \(h_i\), respectively, and \(g_{i+}\) being a \(\varepsilon \)-overestimator for \(g_{i}\), any optimal solution \(y^*\) of

satisfies properties \(P_1, P_2\) and \(\ell _-(y^*) \le f(x^*)\). Furthermore, (64)–(68) satisfies \(P_4\).

Proof

\(P_1\) and \(P_2\) hold by construction. The condition \(\ell _-(y^*) \le f(x^*)\) and \(P_4\) hold true because any feasible solution x to (54)–(57) is also feasible for (64)–(68). \(\square \)

By Theorem 2, any optimal solution of (64)–(68) defines a (valid) lower bound to the original problem (54)–(57). An upper bound can be obtained, e.g., by running a local solver (using the obtained solution \(y^*\)).

Note that Theorem 2 remains true when any of the exact functions f, g or h are used. With that, our MILP cutting problem \((\mathcal {P}^\mathrm{length})\) is a special case for (64)–(68) when the quadratic functions in the non-overlap constraints are overestimated. The non-overlap constraints are parts of the \(-h(x)\) function in (54)–(57). Similarly for \((\mathcal {P}^\mathrm{area})\) when in addition underestimators are used in the objective.

One may wonder, if it is possible to prove a stronger version of Theorem 2 along the lines of Theorem 1. To do so, consider the following one-dimensional example for any positive scalars M and \(\vartheta \le 1\):

with the (unique) optimal solution \(x_1^* = 1\) and optimal objective function value \(f(x_1^*) = M\). Let \(\ell _-\) be an \(\varepsilon \) -underestimator of \(h(x):= - x_1^2 + \vartheta \) with \(\varepsilon \ge \vartheta \). Thus, the optimization problem of the form

may allow \(y_1^* = 0\) as an optimal solution of (69)–(71) with objective function value 0. (The interested reader may construct an example over a continuous domain, by replacing the domain by [0, 1] and adding the term \(M \cdot x_1 \cdot (1-x_1)\) to the objective, and working with an \(\varepsilon \)-underestimator for the objective). For this example, the phenomenon of arbitrary large differences in the objective function is avoided by using an \(\varepsilon \)-underestimator for h(x) with \(\varepsilon < \vartheta \).

When shrinking the feasible region (inner approximation), one may obtain an upper bound on the objective function of the original problem if the approximated problem is feasible.

Theorem 3

(Inner approximation) Given is problem (54)–(57). With functions \(\ell _{+}\) and \(h_{j+}\) being \(\varepsilon \)-overestimators for f and \(h_j\), any optimal solution \(y^*\) of

satisfies properties \(P_1, P_2\) and \(\ell _+(y^*) \ge f(x^*)\).

Proof

\(P_1\) and \(P_2\) hold by construction. Because any feasible point to (72)–(75) is also feasible for (54)–(57), and function \(\ell _+\) is an overestimator for objective function \(f, \ell _+(y^*) \ge f(x^*)\) holds. \(\square \)

Note that \(P_4\) does not hold for the inner approximation (72)–(75).

Similar to the discussion on outer approximations, \((\mathcal {P}^\mathrm{length})\) and \((\mathcal {P}^\mathrm{area})\) define an inner approximation if the quadratic functions in the non-overlap constraints are underestimated.

Appendix 2: Discretization of rectangles

Instead of approximating the non-overlap constraints (1) of the circles via piecewise linear constructs, we restrict the placement of the circle centers on predefined grid points. Therefore, let \([0,S_1^+]\) be discretized at points \(\chi _l\) with

where set \(\mathbb {B}^\mathrm{rect}_1 = \{1, \ldots , K^\mathrm{R}_1\}\) collects all indices of the discretization points. Similarly, the width is discretized as

with set \(\mathbb {B}^\mathrm{rect}_2\). We label all grid points inside the rectangle accordingly by lw.

Binary variable \(x^\mathrm{b}_{ilw}\) then has value 1, if the circle center \(i \in \mathbb {I}\) is placed on the grid point lw and 0 otherwise. The non-overlap constraints (1) then simplify to the linear constraints

If circle center i is placed on grid point lw, then set \(O_{ijlw}\) contains all such grid points \(l'w'\) at which circle center j cannot be placed on. For i, j and lw, set \(O_{ijlw}\) contains grid point \(l'w'\), if

Each circle needs to be placed exactly once

To ensure that the circle fits inside the rectangle, we formulate conditions (2) and (3) in terms of the binary variable \(x^\mathrm{b}_{ilw}\). Therefore, we approximate \(x_{ id}\) through \(x^\mathrm{b}_{ilw}\). For the length, or dimension \(d=1\), we identify

and for the width, or \(d=2\),

Circle fitting constraints (2) are then enforced by fixing all decision variables \(x^\mathrm{b}_{ilw}\) to value 0 for which

Instead of adding constraints of the type “\(x^\mathrm{b}_{ilw}=0\)”, we can eliminate these decision variables from the model formulation in the pre-processing step.

Constraints (3) translate then to

We obtain the following MILP problem, which minimizes the design rectangle’s length over the discretized rectangle, as

In our second cutting problem \((\mathcal {C}^\mathrm{area})\), we minimize the area of the design rectangle. This leads to the bilinear term

Because both \(x_{1}^{\mathrm {P}}\) and \(x_{2}^{\mathrm {P}}\) are continuous decision variables, we cannot find an exact linear representation for (80). Thus, we use an approximation. The idea here is again to use a discretization of the length and the width, just like we did for the circle center coordinates in \((\mathcal {D}^\mathrm{length})\). Let \(\chi _m\) discretize the rectangle’s length in (80) (\(\mathbb {B}^\mathrm{obj}_1 = \{ 1 \ldots , K^\mathrm{O}_1 \}\))

and let \(\chi _o\) discretize its width (\(\mathbb {B}^\mathrm{obj}_2 = \{ 1 \ldots , K^\mathrm{O}_2 \}\))

We obtain the approximations

with the additional binary variables \(x_{1m}^\mathrm{P,b}\) and \(x_{2n}^\mathrm{P,b}\) respecting the constraints

Alternatively, we can use “\(\le \)” constraints in (81)—we expect slightly better computational performance when using “\(\le \)” instead of “\(=\)”.

The design rectangle’s area (80) is then approximated by

In contrast to (80), the bilinear terms in (82) are the product of two binary variables. This can be linearized exactly. For that we define for \(m \in \mathbb {B}^\mathrm{obj}_1\) and \(n \in \mathbb {B}^\mathrm{obj}_2\)

and observe that \(x^\mathrm{a}_{mn} = x_{1m}^\mathrm{P,b} \cdot x_{2n}^\mathrm{P,b}\).

It remains to substitute the approximated length and with into the fitting constraint (79) to yield

This allows us to formulate the following pure integer programming (IP) problem, which approximates \((\mathcal {C}^\mathrm{area})\),

By construction of the non-overlap constraints (76) via the set \(O_{ijlw}\), we are able to recover a feasible point for \((\mathcal {C}^\mathrm{length})\) and \((\mathcal {C}^\mathrm{area})\) from an optimal solution of \((\mathcal {D}^\mathrm{length})\) and \((\mathcal {D}^\mathrm{area})\), respectively, assuming that there is at least one. Thus, by solving \((\mathcal {D}^\mathrm{length})\) and \((\mathcal {D}^\mathrm{area})\), we obtain an upper bound on the minimal rectangle’s length and minimal area, i.e.,

for any discretization (assuming feasibility).

Because we are discretizing the bilinear objective function term via the discretization of the rectangle \([S_-^1,S_+^1] \times [S_-^2, S_+^2]\) and the binary decision variables \(x_{1m}^\mathrm{P,b}\) and \(x_{2n}^\mathrm{P,b}\), the area of the rectangle associated with the computed (feasible) placement of the circles is actually given by

where \(l^*\) and \(w^*\) is the optimal length and width of the design rectangle computed by \((\mathcal {D}^\mathrm{area})\).

Next, we briefly discuss symmetry. In the NLP formulation, we used constraints (5) to break the symmetry caused by axial reflection (both horizontal and vertical) of any placement of the circles. However, this symmetry is not likely to be present in the derived formulation \((\mathcal {D}^\mathrm{area})\) because the grid is likely to not be symmetric with respect to the constructed design rectangle. The same holds true for \((\mathcal {D}^\mathrm{length})\) for vertical reflection. Horizontal reflection, however, can be broken by eliminating decision variables \(x^\mathrm{b}_{1lw}\) with \(\chi _w > S_2^+/2\) in the preprocessing step from the problem formulation. Thus, the number of variables is reduced.

The quality of the upper bounds computed depends on the discretization chosen. Actually, if we refine the grid, i.e., we add additional grid points to an existing grid (and do not change the grid points on the existing grid), we obtain a sequence of monotone decreasing length and area. Intuitively, this sequence converges to \(L^*\) and \(A^*\), respectively. However, we cannot readily provide a formula which bounds the maximal deviation, dependent on the discretization.

Lower bounds on \(L^*\) and \(A^*\) can also be obtained via the solution of \((\mathcal {D}^\mathrm{length})\) and \((\mathcal {D}^\mathrm{area})\) by allowing “enough” overlap of the circles. For instance, we can define the members of the set \(O_{ijlw}\), for i, j and lw, if

where \(\varrho \) is the maximal diagonal of all rectangles imposed by the chosen discretization. In order to obtain valid bounds, the circles must respect the boundary conditions, i.e., they must fit inside the design triangle.

Further, we obtain the following bounds on the length and area

To illustrate the two formulations, computational resultsFootnote 8 are reported in Table 11 for the instances of Table 1. Consider now Table 11. Columns two to four report on the discretization choice and the resulting maximal diagonal value \(\varrho \). We have placed the grid equidistantly and the distance between two discretization points is equal in both dimensions. For formulation \((\mathcal {D}^\mathrm{area})\), we have chosen \(K^\mathrm{O}_1 = K^\mathrm{R}_1\) and \(K^\mathrm{O}_2 = K^\mathrm{R}_2\). Columns five to eight belong to formulation \((\mathcal {D}^\mathrm{length})\). \(\underline{L}\) is the computed lower bound on \(L^*\) while \(\bar{L}\) is an upper bound. Similarly, columns nine to twelve provide the lower and upper bounds on the area \(A^*\) as computed by \((\mathcal {D}^\mathrm{area})\). Computational times are given in seconds.

We observe that all bounds reported in Table 11 are correct but quite weak. The computational times are considerable, especially compared to global solvers, see Table 1. The reason for the weak bounds is the quite large discretization error, as measured in \(\varrho \), even for a grid with as many as 52 and 27 segments. This is particularly visible when tracking the lower bound on the rectangle’s length, \(\underline{L}\). We also notice that it is computationally more expensive to compute upper bounds (both for the length and the area) than lower bounds, though the formulations are identical. The reason is that problems computing the lower bounds contain much fewer constraints due to the less restrictive overlap conditions.

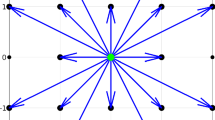

A few example plots in Fig. 15 illustrate the computed circle cuttings (upper bounds) as well as the resulting arrangements when allowing overlap of circles (lower bounds).

Rights and permissions

About this article

Cite this article

Rebennack, S. Computing tight bounds via piecewise linear functions through the example of circle cutting problems. Math Meth Oper Res 84, 3–57 (2016). https://doi.org/10.1007/s00186-016-0546-0

Received:

Accepted:

Published:

Issue Date:

DOI: https://doi.org/10.1007/s00186-016-0546-0