Abstract

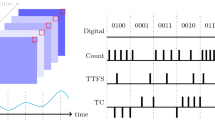

Spiking neural networks (SNNs) are the third generation of artificial neural networks (ANNs) and are based on a brain-inspired computational model. An SNN encodes and processes neural information through precisely timed spike trains. The evolving spiking neural network (eSNN) is an enhanced version of the SNN, motivated by the principles of the Evolving Connectionist System (ECoS), and is a relatively new classifier in the neural information processing area. The performance of eSNN is strongly influenced by three key hyperparameters: the modulation factor (mod), the threshold factor (c), and the similarity factor (sim). Automated tuning of these hyperparameters is more reliable than manual tuning. Therefore, this research presents an optimizer-based eSNN architecture that addresses the problem of selecting optimal hyperparameter values for eSNN. The proposed model, named eSNN-SSA, integrates the salp swarm algorithm (SSA), a metaheuristic optimization technique, with the eSNN architecture. The integration uses Thorpe's standard model of eSNN with population rate encoding. To evaluate eSNN-SSA, benchmark datasets from the UCR/UEA time-series classification repository are used. The experimental results indicate that SSA effectively improves the flexibility of eSNN. The proposed eSNN-SSA also addresses a drawback of standard eSNN, namely determining the best number of pre-synaptic neurons for time-series classification problems. eSNN-SSA achieved accuracies of 0.96, 0.97, 0.94, 1.0, and 0.94 on the Spoken Arabic Digits, Articulatory Word Recognition, Character Trajectories, Wafer, and GunPoint datasets, respectively. The proposed approach also outperformed standard eSNN in terms of time complexity.
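To make the roles of mod, c, and sim concrete, the following Python/NumPy sketch wires a minimal Thorpe-style eSNN into a salp swarm search over those three hyperparameters. It is an illustrative sketch under stated assumptions, not the authors' implementation: the Gaussian receptive-field layout (evenly spaced centres), the merge-by-averaging rule, the first-to-fire decision with a max-PSP fallback, the parameter bounds, the synthetic toy data, and all helper names (`grf_encode`, `train_esnn`, `predict`, `ssa`, `make_fitness`) are hypothetical. The SSA part follows the published leader/follower equations of Mirjalili et al. (2017), using the commonly adopted 0.5 threshold on the random coefficient c3.

```python
import numpy as np


def grf_encode(x, n_fields=5, beta=1.5):
    """Population encoding with Gaussian receptive fields (assumed: evenly
    spaced centres on [0, 1]). Returns the firing rank of each pre-synaptic
    input; the strongest response fires first (rank 0)."""
    centers = np.linspace(0.0, 1.0, n_fields)
    width = 1.0 / (beta * (n_fields - 2))
    resp = np.exp(-((x[:, None] - centers) ** 2) / (2 * width ** 2)).ravel()
    order = np.empty(resp.size, dtype=int)
    order[np.argsort(-resp, kind="stable")] = np.arange(resp.size)
    return order


def train_esnn(X, y, mod, c, sim, n_fields=5):
    """One-pass training: one candidate output neuron per sample, with Thorpe
    rank-order weights w_j = mod**order_j, merged into a close same-class neuron."""
    W, theta, count, labels = [], [], [], []
    for x, label in zip(X, y):
        order = grf_encode(x, n_fields)
        w = mod ** order
        th = c * np.sum(w * mod ** order)  # threshold = c * PSP_max
        same = [k for k, lab in enumerate(labels) if lab == label]
        if same:
            dists = [np.linalg.norm(w - W[k]) for k in same]
            k = same[int(np.argmin(dists))]
            if min(dists) < sim:  # hypothetical merge rule: running average
                n = count[k]
                W[k] = (w + n * W[k]) / (n + 1)
                theta[k] = (th + n * theta[k]) / (n + 1)
                count[k] += 1
                continue
        W.append(w)
        theta.append(th)
        count.append(1)
        labels.append(label)
    return np.array(W), np.array(theta), np.array(labels)


def predict(model, X, mod, n_fields=5):
    """The first output neuron whose accumulated PSP reaches its threshold wins;
    if none fires, fall back to the neuron with the largest final PSP."""
    W, theta, labels = model
    preds = []
    for x in X:
        idx = np.argsort(grf_encode(x, n_fields))  # inputs in firing order
        psp = np.cumsum(W[:, idx] * mod ** np.arange(idx.size), axis=1)
        fired = psp >= theta[:, None]
        if fired.any():
            first = np.where(fired.any(axis=1), fired.argmax(axis=1), idx.size)
            k = int(np.argmin(first))
        else:
            k = int(np.argmax(psp[:, -1]))
        preds.append(labels[k])
    return np.array(preds)


def ssa(fitness, lb, ub, n_salps=10, n_iter=20, seed=0):
    """Salp swarm algorithm (minimisation): salp 0 leads around the best
    position found so far (the food source); the others follow in a chain."""
    rng = np.random.default_rng(seed)
    lb, ub = np.asarray(lb, float), np.asarray(ub, float)
    X = lb + rng.random((n_salps, lb.size)) * (ub - lb)
    fit = np.array([fitness(p) for p in X])
    food, food_fit = X[fit.argmin()].copy(), fit.min()
    for t in range(1, n_iter + 1):
        c1 = 2 * np.exp(-((4 * t / n_iter) ** 2))  # exploration -> exploitation
        c2, c3 = rng.random(lb.size), rng.random(lb.size)
        step = c1 * ((ub - lb) * c2 + lb)
        X[0] = np.where(c3 >= 0.5, food + step, food - step)
        for i in range(1, n_salps):
            X[i] = (X[i] + X[i - 1]) / 2  # follower update
        X = np.clip(X, lb, ub)
        fit = np.array([fitness(p) for p in X])
        if fit.min() < food_fit:
            food, food_fit = X[fit.argmin()].copy(), fit.min()
    return food, food_fit


def make_fitness(X_tr, y_tr, X_val, y_val):
    """Fitness = validation error of an eSNN trained with (mod, c, sim)."""
    def fitness(params):
        mod, c, sim = params
        model = train_esnn(X_tr, y_tr, mod, c, sim)
        return 1.0 - np.mean(predict(model, X_val, mod) == y_val)
    return fitness


# Toy, synthetic two-class data (features already scaled to [0, 1]).
rng = np.random.default_rng(1)
X = np.clip(np.vstack([rng.normal(0.25, 0.05, (20, 4)),
                       rng.normal(0.75, 0.05, (20, 4))]), 0.0, 1.0)
y = np.array([0] * 20 + [1] * 20)
perm = rng.permutation(len(y))
X, y = X[perm], y[perm]
lb, ub = [0.5, 0.1, 0.01], [0.999, 0.99, 0.5]  # hypothetical (mod, c, sim) bounds
best, err = ssa(make_fitness(X[:30], y[:30], X[30:], y[30:]), lb, ub)
print(f"mod={best[0]:.3f} c={best[1]:.3f} sim={best[2]:.3f} val_error={err:.2f}")
```

Each salp is one candidate (mod, c, sim) triple, and its fitness is the validation error of an eSNN trained with that triple, so SSA replaces manual trial and error. With real time-series data, one plausible setup is to scale each series to [0, 1] and treat it as the feature vector `x`; the exact preprocessing used in the paper is not stated here.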

Availability of Data and Materials (Data Transparency)

Not applicable.

Acknowledgements

We are thankful to the Editor and the reviewers for their valuable suggestions and remarks, which significantly enhanced the quality of the manuscript.

Funding

This research was supported by Universiti Teknologi PETRONAS, under the Yayasan Universiti Teknologi PETRONAS (YUTP) Fundamental Research Grant Scheme (YUTPFRG/015LC0-308).

Ethics declarations

Conflict of interest

The authors declare no conflict of interest.

Additional information

Publisher's Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

About this article

Cite this article

Ibad, T., Abdulkadir, S.J., Aziz, N. et al. Hyperparameter Optimization of Evolving Spiking Neural Network for Time-Series Classification. New Gener. Comput. 40, 377–397 (2022). https://doi.org/10.1007/s00354-022-00165-3

DOI: https://doi.org/10.1007/s00354-022-00165-3