Abstract

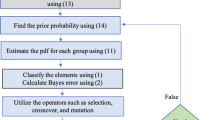

The goal of classification is to assign new objects to one of several known populations. A common problem with most existing classifiers is that they are highly sensitive to outliers. To overcome this problem, several authors have attempted to robustify classifiers, including Gaussian Bayes classifiers, by using robust estimates of mean vectors and covariance matrices. However, these types of robust classifiers work well only when the training datasets alone are contaminated by outliers. Like the traditional classifiers, they produce misleading results when the test data vectors are contaminated as well. Most of them also perform poorly when the number of variables in the dataset is gradually increased while the sample size is held fixed. To remedy these problems, we propose a highly robust Gaussian Bayes classifier based on the minimum β-divergence method. The performance of the proposed method depends on the value of the tuning parameter β, the initialization of the Gaussian parameters, the detection of outlying test vectors, and the detection of their variable-wise outlying components. We discuss some techniques in this paper to improve the performance of the proposed method by addressing these issues. The proposed classifier reduces to the MLE-based Gaussian Bayes classifier when β → 0. The performance of the proposed method is investigated using both synthetic and real datasets. We observe that the proposed method outperforms the traditional and other robust linear classifiers in the presence of outliers and performs equally well otherwise.
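To make the idea of minimum β-divergence estimation concrete, the following is a minimal Python sketch of the iterative weighted update commonly given for Gaussian parameters in the β-divergence literature cited in the references (e.g., Mollah et al. 2007). It is not the paper's own algorithm: the function names `beta_weights` and `min_beta_gaussian`, the median/MAD initialization, the default β = 0.2, and the stopping rule are all assumptions made for illustration, and the paper's procedures for choosing β and initializing the parameters are not reproduced here.

```python
import numpy as np

def beta_weights(X, mu, V, beta):
    """Return exp(-beta/2 * squared Mahalanobis distance) for each row of X."""
    diff = X - mu
    d2 = np.einsum("ij,ij->i", diff, np.linalg.solve(V, diff.T).T)
    return np.exp(-0.5 * beta * d2)

def min_beta_gaussian(X, beta=0.2, n_iter=200, tol=1e-10):
    """Iteratively reweighted estimates of a Gaussian mean and covariance.

    Hypothetical helper: initialization and stopping rule are assumptions.
    """
    X = np.asarray(X, dtype=float)
    # Assumed initialization: coordinate-wise median and MAD-based diagonal scatter.
    mu = np.median(X, axis=0)
    mad = 1.4826 * np.median(np.abs(X - mu), axis=0)
    V = np.diag(mad ** 2)
    for _ in range(n_iter):
        w = beta_weights(X, mu, V, beta)      # outlying rows get weights near 0
        mu_new = w @ X / w.sum()              # weighted mean
        diff = X - mu_new
        # The factor (1 + beta) offsets the shrinkage caused by down-weighting.
        V_new = (1.0 + beta) * (diff * w[:, None]).T @ diff / w.sum()
        converged = (np.abs(mu_new - mu).max() < tol
                     and np.abs(V_new - V).max() < tol)
        mu, V = mu_new, V_new
        if converged:
            break
    return mu, V
```

With β = 0 every weight equals 1, so the sketch returns the sample mean and the MLE covariance matrix, which mirrors the statement that the proposed classifier reduces to the MLE-based Gaussian Bayes classifier when β → 0. For β > 0, vectors far from the bulk of a class receive weights close to zero and contribute little to the estimates.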

References

Agostinelli, C., Leung, A., Yohai, V.J., Zamar, R.H. (2015). Robust estimation of multivariate location and scatter in the presence of cellwise and casewise contamination. TEST, 24, 441–461. https://doi.org/10.1007/s11749-015-0450-6.

Alqallaf, F.A. (2003). A new contamination model for robust estimation with large high dimensional data sets. PhD thesis, University of British Columbia.

Anderson, T.W. (2003). An introduction to multivariate statistical analysis. Wiley Interscience.

Basu, A., Harris, I.R., Hjort, N.L., Jones, M.C. (1998). Robust and efficient estimation by minimising a density power divergence. Biometrika, 85, 549–559.

Chork, C., & Rousseeuw, P.J. (1992). Integrating a high breakdown option into discriminant analysis in exploration geochemistry. Journal of Geochemical Exploration, 43, 191–203.

Croux, C., & Dehon, C. (2001). Robust linear discriminant analysis using S-estimators. The Canadian Journal of Statistics, 29, 473–492.

Donoho, D.L., & Huber, P.J. (1983). The notion of breakdown point. In Bickel, P.J., Doksum, K., Hodges, Jr. J.L. (Eds.) A Festschrift for Erich L. Lehmann (pp. 157–184). Belmont: Wadsworth.

Hawkins, D.M., & McLachlan, G. (1997). High-breakdown linear discriminant analysis. Journal of the American Statistical Association, 92, 136–143.

He, X., & Fung, W. (2000). High breakdown estimation for multiple populations with applications to discriminant analysis. Journal of Multivariate Analysis, 72, 151–162.

Hubert, M., & Van Driessen, K. (2004). Fast and robust discriminant analysis. Computational Statistics and Data Analysis, 45, 301–320.

Hubert, M., & Debruyne, M. (2010). Minimum covariance determinant. Advanced review (Vol. 2). Wiley.

Johnson, R.A., & Wichern, D.W. (2007). Applied multivariate statistical analysis, 6th edn. Prentice-Hall.

Lopuhaä, H.P., & Rousseeuw, P.J. (1991). Breakdown points of affine equivariant estimators of multivariate location and covariance matrices. The Annals of Statistics, 19, 229–248.

Maronna, R.A. (1976). Robust M-estimators of multivariate location and scatter. The Annals of Statistics, 4, 51–67.

Maronna, R., & Zamar, R. (2002). Robust estimation of location and dispersion for high-dimensional datasets. Technometrics, 44, 307–317.

Minami, M., & Eguchi, S. (2002). Robust blind source separation by β-divergence. Neural Computation, 14, 1859–1886.

Mollah, M.N.H., Minami, M., Eguchi, S. (2006). Exploring latent structure of mixture ICA models by the minimum β-divergence method. Neural Computation, 18, 166–190.

Mollah, M.N.H., Minami, M., Eguchi, S. (2007). Robust prewhitening for ICA by minimizing β-divergence and its application to FastICA. Neural Processing Letters, 25(2), 91–110.

Mollah, M.N.H., Sultana, N., Minami, M., Eguchi, S. (2010). Robust extraction of local structures by the minimum β-divergence method. Neural Networks, 23, 226–238.

Puranen, J. (2006). Fish catch data set. http://www.amstat.org/publications/jse/datasets/fishcatch.txt.

Randles, R.H., Broffitt, J.D., Ramberg, J.R., Hogg, R.V. (1978). Generalized linear and quadratic discriminant functions using robust estimates. Journal of the American Statistical Association, 73, 564–568.

Todorov, V.K. (2006). rrcov: Scalable robust estimators with high breakdown point. R package.

Todorov, V., & Pires, A.M. (2007). Comparative performance of several robust linear discriminant analysis methods. REVSTAT-Statistical Journal, 5, 63–83.

Todorov, V., Neykov, N., Neytchev, P. (1990). Robust selection of variables in the discriminant analysis based on MVE and MCD estimators. In Proceedings in computational statistics COMPSTAT. Heidelberg: Physica.

Todorov, V., Neykov, N., Neytchev, P. (1994). Robust two-group discrimination by bounded influence regression. Computational Statistics and Data Analysis, 17, 289–302.

Appendix


Figures 3a–b and 4a–b show the performance of the proposed Bayesian robust LDA and QDA under different conditions. Figure 3 plots the training (left) and test (right) MER against the common difference between the components of the two mean vectors, with equal covariance matrices (V1 = V2), for the data structure D1B with p = 15 variables: Fig. 3a in the absence of outliers and Fig. 3b in the presence of 15% outlying data vectors. In both cases the proposed LDA shows smaller test MER than the proposed QDA. Figure 4 shows the corresponding plots with unequal covariance matrices (V1 ≠ V2): Fig. 4a in the absence of outliers and Fig. 4b in the presence of 15% outlying data vectors. Here the proposed QDA shows smaller test MER than the proposed LDA in both cases. Thus the proposed LDA performs better than the proposed QDA when the covariance matrices are equal, while the proposed QDA performs better than the proposed LDA when they are unequal.
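This pattern is consistent with the standard form of the Gaussian Bayes discriminant score. As a reminder, written in textbook notation rather than reproduced from the paper's own equations (here \(\pi_k\), \(\mu_k\), and \(V_k\) are assumed to denote the prior probability, mean vector, and covariance matrix of class \(k\)):

```latex
% Quadratic (QDA) score: one covariance matrix per class
\[
d_k(x) = -\tfrac{1}{2}\log\lvert V_k\rvert
         - \tfrac{1}{2}(x-\mu_k)^{\top} V_k^{-1}(x-\mu_k)
         + \log \pi_k .
\]
% If V_1 = V_2 = V, the terms -\tfrac{1}{2}\log|V| and -\tfrac{1}{2}x^{\top}V^{-1}x
% are common to both classes and cancel, leaving the linear (LDA) score
\[
d_k(x) = \mu_k^{\top} V^{-1} x
         - \tfrac{1}{2}\mu_k^{\top} V^{-1}\mu_k
         + \log \pi_k .
\]
```

A plausible reading of the results is that, when V1 = V2 holds, the linear rule estimates a single covariance matrix and therefore fewer parameters, whereas when V1 ≠ V2 only the quadratic rule can represent the true class boundary.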

Fig. 3 Plot of misclassification error rate (MER) against the common differences between the mean components of two mean vectors with equal covariance matrices for the data structure D1B of p = 15 variables. a Training (left) and test (right) MER in the absence of outliers. b Training (left) and test (right) MER in the presence of 15% outlying vectors under THCM in both training and test datasets

Fig. 4 Plot of misclassification error rate (MER) against the common differences between the mean components of two mean vectors with unequal covariance matrices for the data structure D1B of p = 15 variables. a Training (left) and test (right) MER in the absence of outliers. b Training (left) and test (right) MER in the presence of 15% outlying vectors under THCM in both training and test datasets

However, it should be mentioned here that the performance of the proposed method also depends on the cutoff/threshold value (δ) of the βj-weights used for outlier detection, as discussed in Section 2.3.1. Figure 5 shows plots of the MER (top) and the ROC curve (bottom) comparing the proposed cutoff value (δ) of the βj-weight function with the cutoff value used by Mollah et al. (2010) for outlier detection, for the data structure D1B with p = 15 variables. We observe that the proposed classifiers produce smaller MER and larger TPR (true positive rate) with the proposed cutoff value.
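The role of the cutoff δ can be illustrated with a small Python sketch of weight-based outlier flagging. The decision rule used here (flag a test vector when its β-weight is below δ under every class) and the names `beta_weight` and `flag_outlying_vectors` are assumptions chosen for illustration; the cutoff proposed in Section 2.3.1 and the one used by Mollah et al. (2010) are not reproduced here.

```python
import numpy as np

def beta_weight(X, mu, V, beta):
    """Return exp(-beta/2 * squared Mahalanobis distance) for each row of X."""
    diff = np.atleast_2d(X) - mu
    d2 = np.einsum("ij,ij->i", diff, np.linalg.solve(V, diff.T).T)
    return np.exp(-0.5 * beta * d2)

def flag_outlying_vectors(X_test, class_params, beta, delta):
    """Flag test vectors whose weight is below delta for every class.

    class_params is a list of (mean vector, covariance matrix) pairs,
    one per class. The "below delta for all classes" rule is a
    hypothetical choice, not the paper's definition.
    """
    W = np.column_stack(
        [beta_weight(X_test, mu, V, beta) for mu, V in class_params]
    )
    return W.max(axis=1) < delta
```

A vector lying at a class mean has weight 1 under that class and is never flagged for δ < 1, while a vector far from all class means has weights near 0 and is flagged. Raising δ flags more vectors, which is the trade-off between true and false positive rates that an ROC curve traces.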

ROC curves with the average values of TPR and FPR based on 500 simulated datasets of types D1A and D1B in the absence/presence of outliers by the classical MLE, FSA, MCD-A, MCD-B, MCD-C, OGK, and proposed methods. a ROC curve with p = 5 (left) and p = 15 (right) variables in the absence of outliers. b ROC curve with p = 5 (left) and p = 15 (right) variables in the presence of 15% outlying vectors under THCM in the test datasets. c ROC curve with p = 5 (left) and p = 15 (right) variables in the presence of 15% outlying vectors under THCM in both training and test datasets

Plot of misclassification error rate (MER) against common mean vector differences for data structure D2B. a Training (left) and test (right) MER in the presence of 50% outlying vectors under THCM in the test datasets only. b Training (left) and test (right) MER in the presence of 50% outlying vectors under THCM in both training and test datasets

Some important results for the proposed method with the simulated data structure D2 = {D2A, D2B} and the real fish catch dataset. a Plot of the βj-weight for each data point of each group/class in the presence of outliers. b Selection of the tuning parameter βj by cross-validation (CV) using the measure defined in Eq. 17. (i) Box plot of all estimated βj over all simulated datasets in the absence (left) and presence (right) of outliers. (ii) Plot of \(D_{{\beta_{j}}_{0}}(\beta_{j})\) against βj (Eq. 17) based on CV results for βj selection from the real fish catch dataset. (iii) Plot of CV results for βj selection from the real fish catch dataset in the presence of 10% outlying vectors under THCM

Cite this article

Rahaman, M.M., Mollah, M.N.H. Robustification of Gaussian Bayes Classifier by the Minimum β-Divergence Method. J Classif 36, 113–139 (2019). https://doi.org/10.1007/s00357-019-9306-1

DOI: https://doi.org/10.1007/s00357-019-9306-1