Abstract

Linear mixed-effects models are commonly used when multiple correlated measurements are made on each unit of interest. Some inherent features of these data can make the analysis challenging, such as when the responses are collected repeatedly for each subject at irregular intervals over time, or when the data are subject to upper and/or lower detection limits of the experimental equipment. Moreover, if units are suspected of forming distinct clusters over time (i.e., heterogeneity), then the class of finite mixtures of linear mixed-effects models is required. This paper considers the problem of clustering heterogeneous longitudinal data in a mixture framework and proposes a finite mixture of multivariate normal linear mixed-effects models. This model accommodates more complex features of longitudinal data, such as measurements taken at irregular intervals over time and censored data. In addition, we consider a damped exponential correlation (DEC) structure for the random errors to deal with serial correlation among the within-subject errors. An efficient expectation-maximization (EM) algorithm is employed to compute the maximum likelihood estimates of the parameters. The algorithm has closed-form expressions at the E-step that rely on formulas for the mean and variance of the multivariate truncated normal distribution. A general information-based method to approximate the asymptotic covariance matrix is also presented. Results obtained from the analysis of both simulated and real HIV/AIDS datasets are reported to demonstrate the effectiveness of the proposed method.
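In a finite mixture fitted by EM, the cluster structure enters the E-step through the posterior membership probabilities \(\widehat{z}_{ij} = \pi_j L_{ij}/\sum_{l}\pi_l L_{il}\), where \(L_{ij}\) denotes the likelihood contribution of subject \(i\) under component \(j\), and the mixing proportions are then updated as \(\pi_j = N^{-1}\sum_i \widehat{z}_{ij}\). The Python sketch below illustrates only that generic step, under stated assumptions: the values \(\log L_{ij}\) are taken as already computed (for censored subjects they involve multivariate normal probabilities, which the sketch does not evaluate), and the names `responsibilities` and `update_weights` are hypothetical. It is not the authors' implementation.

```python
import math
from typing import List, Sequence


def responsibilities(log_lik: Sequence[Sequence[float]],
                     weights: Sequence[float]) -> List[List[float]]:
    """Posterior membership probabilities z_ij of a finite mixture (E-step).

    log_lik[i][j]: precomputed log-likelihood contribution of subject i under
    component j (assumed given; censored contributions are not computed here).
    weights[j]: current mixing proportion pi_j (must be > 0).
    """
    log_w = [math.log(w) for w in weights]
    z = []
    for row in log_lik:
        # log(pi_j) + log L_ij for every component j
        a = [lw + ll for lw, ll in zip(log_w, row)]
        # log-sum-exp: shift by the maximum so exp() does not underflow to 0
        m = max(a)
        log_denom = m + math.log(sum(math.exp(v - m) for v in a))
        z.append([math.exp(v - log_denom) for v in a])
    return z


def update_weights(z: Sequence[Sequence[float]]) -> List[float]:
    """Mixing-proportion update pi_j = (1/N) * sum_i z_ij."""
    n_subjects = len(z)
    n_components = len(z[0])
    return [sum(row[j] for row in z) / n_subjects for j in range(n_components)]


if __name__ == "__main__":
    # Three hypothetical subjects, two components; the third subject's
    # likelihoods would underflow if exponentiated directly.
    log_lik = [[-10.0, -12.0], [-15.0, -11.0], [-800.0, -801.0]]
    z = responsibilities(log_lik, [0.5, 0.5])
    print(z)
    print(update_weights(z))
```

The log-sum-exp shift matters in practice: a subject with log-likelihoods near -800 would give 0/0 if the likelihoods were exponentiated directly, whereas the shifted computation still returns well-defined probabilities.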


Data Availability

The data that support the findings of this study are available from the corresponding author upon request.

References

Acosta EP, Wu H, Hammer SM, Yu S, Kuritzkes DR, Walawander A, Eron JJ, Fichtenbaum CJ, Pettinelli C, Neath D, et al. (2004) Comparison of two indinavir/ritonavir regimens in the treatment of HIV-infected individuals. JAIDS Journal of Acquired Immune Deficiency Syndromes 37(3), 1358–1366.

Akaike H (1974) A new look at the statistical model identification. IEEE Transactions on Automatic Control 19(6), 716–723.

Bai X, Chen K, Yao W (2016) Mixture of linear mixed models using multivariate t distribution. Journal of Statistical Computation and Simulation 86(4), 771–787.

Basso RM, Lachos VH, Cabral CRB, Ghosh P (2010) Robust mixture modeling based on scale mixtures of skew-normal distributions. Computational Statistics & Data Analysis 54(12), 2926–2941.

Booth JG, Casella G, Hobert JP (2008) Clustering using objective functions and stochastic search. Journal of the Royal Statistical Society, Series B (Statistical Methodology) 70(1), 119–139.

Celeux G, Martin O, Lavergne C (2005) Mixture of linear mixed models for clustering gene expression profiles from repeated microarray experiments. Statistical Modelling 5(3), 243–267.

De la Cruz-Mesía R, Quintana FA, Marshall G (2008) Model-based clustering for longitudinal data. Computational Statistics & Data Analysis 52(3), 1441–1457.

Cuesta-Albertos JA, Gordaliza A, Matrán C (1997) Trimmed k-means: An attempt to robustify quantizers. The Annals of Statistics 25(2), 553–576.

Dempster A, Laird N, Rubin D (1977) Maximum likelihood from incomplete data via the EM algorithm. Journal of the Royal Statistical Society, Series B 39(1), 1–38.

Faria S, Soromenho G (2010) Fitting mixtures of linear regressions. Journal of Statistical Computation and Simulation 80(2), 201–225.

Fitzgerald AP, DeGruttola VG, Vaida F (2002) Modelling HIV viral rebound using non-linear mixed effects models. Statistics in Medicine 21(14), 2093–2108.

Gaffney S, Smyth P (2003) Curve clustering with random effects regression mixtures. In: AISTATS.

Gałecki AT, Burzykowski T (2013) Linear mixed-effects models using R: a step-by-step approach. New York: Springer.

Hathaway RJ (1985) A constrained formulation of maximum-likelihood estimation for normal mixture distributions. The Annals of Statistics 13(2), 795–800.

Hughes J (1999) Mixed effects models with censored data with application to HIV RNA levels. Biometrics 55, 625–629.

Karlsson M, Laitila T (2014) Finite mixture modeling of censored regression models. Statistical Papers 55(3), 627–642.

Kiefer NM (1978) Discrete parameter variation: Efficient estimation of a switching regression model. Econometrica 46, 427–434.

Lachos VH, Ghosh P, Arellano-Valle RB (2010) Likelihood based inference for skew-normal independent linear mixed models. Statistica Sinica 20, 303–322.

Lachos VH, Matos LA, Castro LM, Chen MH (2019) Flexible longitudinal linear mixed models for multiple censored responses data. Statistics in Medicine 38(6), 1074–1102.

Laird NM, Ware JH (1982) Random-effects models for longitudinal data. Biometrics 38(4), 963–974.

Lin TI (2010) Robust mixture modeling using multivariate skew t distributions. Statistics and Computing 20(3), 343–356.

Lin TI (2014) Learning from incomplete data via parameterized t mixture models through eigenvalue decomposition. Computational Statistics & Data Analysis 71, 183–195.

Lin TI, Wang WL (2013) Multivariate skew-normal linear mixed models for multi-outcome longitudinal data. Statistical Modelling 13(3), 199–221.

Lin TI, Wang WL (2020) Multivariate-t linear mixed models with censored responses, intermittent missing values and heavy tails. Statistical Methods in Medical Research 29(5), 1288–1304.

Lin TI, McLachlan GJ, Lee SX (2016) Extending mixtures of factor models using the restricted multivariate skew-normal distribution. Journal of Multivariate Analysis 143, 398–413.

Lin TI, Lachos VH, Wang WL (2018) Multivariate longitudinal data analysis with censored and intermittent missing responses. Statistics in Medicine 37(19), 2822–2835.

Louis TA (1982) Finding the observed information matrix when using the EM algorithm. Journal of the Royal Statistical Society, Series B (Methodological) 44(2), 226–233.

Matos LA, Lachos VH, Balakrishnan N, Labra FV (2013a) Influence diagnostics in linear and nonlinear mixed-effects models with censored data. Computational Statistics & Data Analysis 57(1), 450–464.

Matos LA, Prates MO, Chen MH, Lachos VH (2013b) Likelihood-based inference for mixed-effects models with censored response using the multivariate-t distribution. Statistica Sinica 23(3), 1323–1345.

Matos LA, Bandyopadhyay D, Castro LM, Lachos VH (2015) Influence assessment in censored mixed-effects models using the multivariate Student t distribution. Journal of Multivariate Analysis 141, 104–117.

Matos LA, Castro LM, Lachos VH (2016) Censored mixed-effects models for irregularly observed repeated measures with applications to HIV viral loads. TEST 25(4), 627–653.

McLachlan GJ, Peel D (2000) Finite mixture models. John Wiley & Sons.

McNicholas PD (2016) Model-based clustering. Journal of Classification 33(3), 331–373.

Meng XL, Rubin DB (1993) Maximum likelihood estimation via the ECM algorithm: A general framework. Biometrika 80(2), 267–278.

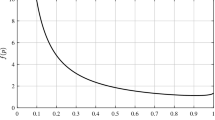

Muñoz A, Carey V, Schouten JP, Segal M, Rosner B (1992) A parametric family of correlation structures for the analysis of longitudinal data. Biometrics 48(3), 733–742.

Ng SK, McLachlan GJ, Wang K, Ben-Tovim Jones L, Ng SW (2006) A mixture model with random-effects components for clustering correlated gene-expression profiles. Bioinformatics 22(14), 1745–1752.

Rousseeuw PJ, Kaufman L (1990) Finding groups in data. Hoboken: Wiley.

Schwarz G (1978) Estimating the dimension of a model. The Annals of Statistics 6(2), 461–464.

Spiessens B, Verbeke G, Komárek A (2002) A SAS macro for the classification of longitudinal profiles using mixtures of normal distributions in nonlinear and generalised linear mixed models. Working paper, Biostatistical Centre, Katholieke Universiteit Leuven, Belgium.

Tzortzis G, Likas A (2014) The minmax k-means clustering algorithm. Pattern Recognition 47(7), 2505–2516.

Vaida F, Liu L (2009) Fast implementation for normal mixed effects models with censored response. Journal of Computational and Graphical Statistics 18(4), 797–817.

Vaida F, Fitzgerald A, DeGruttola V (2007) Efficient hybrid EM for linear and nonlinear mixed effects models with censored response. Computational Statistics & Data Analysis 51, 5718–5730.

Verbeke G, Lesaffre E (1996) A linear mixed-effects model with heterogeneity in the random-effects population. Journal of the American Statistical Association 91(433), 217–221.

Wang WL (2013) Multivariate t linear mixed models for irregularly observed multiple repeated measures with missing outcomes. Biometrical Journal 55(4), 554–571.

Wang WL (2017) Mixture of multivariate t linear mixed models for multi-outcome longitudinal data with heterogeneity. Statistica Sinica 27, 733–760.

Wang WL (2019) Mixture of multivariate t nonlinear mixed models for multiple longitudinal data with heterogeneity and missing values. TEST 28(1), 196–222.

Wang WL, Fan TH (2011) Estimation in multivariate t linear mixed models for multiple longitudinal data. Statistica Sinica 21, 1857–1880.

Wang WL, Lin TI (2015) Robust model-based clustering via mixtures of skew-t distributions with missing information. Advances in Data Analysis and Classification 9(4), 423–445.

Wu L (2009) Mixed effects models for complex data. Chapman and Hall/CRC.

Yang YC, Lin TI, Castro LM, Wang WL (2020) Extending finite mixtures of t linear mixed-effects models with concomitant covariates. Computational Statistics & Data Analysis 148, 106961.

Zeller CB, Cabral CR, Lachos VH (2016) Robust mixture regression modeling based on scale mixtures of skew-normal distributions. TEST 25(2), 375–396.

Zhang B (2003) Regression clustering. In: Third IEEE International Conference on Data Mining (ICDM 2003), IEEE, pp 451–458.

Acknowledgements

We thank the editor, associate editor and three anonymous referees for their important comments and suggestions, which led to an improvement of this paper.

Funding

This study was financed in part by the Coordenação de Aperfeiçoamento de Pessoal de Nível Superior - Brazil (CAPES) - Finance Code 001. Larissa A. Matos received support from FAPESP-Brazil (Grant 2020/16713-0).


Ethics declarations

Conflict of Interest

The authors declare no competing interests.

Additional information

Publisher’s Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Supplementary Information

Electronic supplementary material is available with the online version of this article.

Appendices

Appendix A. The derivatives of the expectations

Using the properties of conditional expectation, we obtain:

To compute the expectation terms above, it is important to note that,

where \(TN_{n_i}(\cdot ;\mathbb {A})\) denotes the \(n_i\)-variate normal distribution truncated to the region \(\mathbb {A}\), \(\varvec{\varphi }_{ij} = \frac{1}{\sigma ^2_j}\varvec{\Lambda }_{ij}\mathbf {U}_i^\top \mathbf {E}_{ij}^{-1}\) and \(\varvec{\Lambda }_{ij} = \left( \mathbf {D}_j^{-1} + \frac{1}{\sigma ^2_j}\mathbf {U}_i^\top \mathbf {E}_{ij}^{-1}\mathbf {U}_i\right) ^{-1}\). Hence, the expectation terms are given by
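As a worked sketch, assume the standard normal linear mixed-effects formulation within component \(j\): given \(Z_{ij}=1\), \(\mathbf{y}_i = \mathbf{X}_i\varvec{\beta}_j + \mathbf{U}_i\mathbf{b}_i + \varvec{\epsilon}_i\), with \(\mathbf{b}_i \sim N_q(\mathbf{0},\mathbf{D}_j)\) and \(\varvec{\epsilon}_i \sim N_{n_i}(\mathbf{0},\sigma^2_j\mathbf{E}_{ij})\) independent. The symbols \(\mathbf{X}_i\), \(\varvec{\beta}_j\) and \(\mathcal{O}_i\) (the observed values and censoring information for subject \(i\)) are assumed notation for illustration. Gaussian conditioning then gives the posterior-mean matrix \(\sigma_j^{-2}\varvec{\Lambda}_{ij}\mathbf{U}_i^\top\mathbf{E}_{ij}^{-1}\), written \(\varvec{\varphi}_{ij}\) below, and the law of iterated expectations reduces the random-effects terms to the truncated moments \(\widehat{\mathbf{y}}_{ij} = E[\mathbf{y}_i \mid \mathcal{O}_i, Z_{ij}=1]\) and \(\widehat{\mathbf{y}}^{2}_{ij} = E[\mathbf{y}_i\mathbf{y}_i^\top \mid \mathcal{O}_i, Z_{ij}=1]\), with \(\widehat{z}_{ij} = P(Z_{ij}=1 \mid \mathcal{O}_i)\):

```latex
\begin{aligned}
\mathbf{b}_i \mid \mathbf{y}_i, Z_{ij}=1 &\sim N_q\!\left(\varvec{\varphi}_{ij}(\mathbf{y}_i - \mathbf{X}_i\varvec{\beta}_j),\; \varvec{\Lambda}_{ij}\right),\\
\widehat{\mathbf{b}}_{ij} = E[\mathbf{b}_i \mid \mathcal{O}_i, Z_{ij}=1] &= \varvec{\varphi}_{ij}\left(\widehat{\mathbf{y}}_{ij} - \mathbf{X}_i\varvec{\beta}_j\right),\\
E[\mathbf{b}_i\mathbf{b}_i^\top \mid \mathcal{O}_i, Z_{ij}=1] &= \varvec{\Lambda}_{ij} + \varvec{\varphi}_{ij}\left(\widehat{\mathbf{y}}^{2}_{ij} - \widehat{\mathbf{y}}_{ij}\varvec{\beta}_j^\top\mathbf{X}_i^\top - \mathbf{X}_i\varvec{\beta}_j\widehat{\mathbf{y}}_{ij}^\top + \mathbf{X}_i\varvec{\beta}_j\varvec{\beta}_j^\top\mathbf{X}_i^\top\right)\varvec{\varphi}_{ij}^\top,\\
E[Z_{ij}\mathbf{b}_i \mid \mathcal{O}_i] &= \widehat{z}_{ij}\,\widehat{\mathbf{b}}_{ij}.
\end{aligned}
```

The second-moment line uses \(E[\mathbf{b}_i\mathbf{b}_i^\top \mid \mathbf{y}_i] = \varvec{\Lambda}_{ij} + \varvec{\varphi}_{ij}(\mathbf{y}_i - \mathbf{X}_i\varvec{\beta}_j)(\mathbf{y}_i - \mathbf{X}_i\varvec{\beta}_j)^\top\varvec{\varphi}_{ij}^\top\) and then averages over the truncated distribution of \(\mathbf{y}_i\), so only the truncated moments require non-Gaussian computation.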

As mentioned earlier, the E-step reduces to the computation of \(\widehat{\mathbf {y}}_{i}^{(k)}\) and \(\widehat{\mathbf {y}}^{2(k)}_{i}\), that is, the mean and the second moment of a multivariate truncated Gaussian distribution, both of which can be computed in closed form (Vaida and Liu, 2009; Matos et al., 2016).
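For intuition, when a subject has a single censored observation (\(n_i = 1\)) these moments reduce to the familiar univariate truncated normal formulas. The sketch below computes the mean and second moment of \(N(\mu, \sigma^2)\) truncated to \([a, b]\) using only the Python standard library. It illustrates that scalar special case only; it is not the multivariate closed-form computation cited above, and the name `truncnorm_moments` is hypothetical.

```python
import math


def _pdf(x: float) -> float:
    """Standard normal density; returns 0 at +/-inf."""
    if math.isinf(x):
        return 0.0
    return math.exp(-0.5 * x * x) / math.sqrt(2.0 * math.pi)


def _cdf(x: float) -> float:
    """Standard normal CDF via the error function (handles +/-inf)."""
    return 0.5 * (1.0 + math.erf(x / math.sqrt(2.0)))


def truncnorm_moments(mu: float, sigma: float, lower: float, upper: float):
    """Return (E[Y], E[Y^2]) for Y ~ N(mu, sigma^2) truncated to [lower, upper].

    Bounds may be -math.inf / math.inf; e.g. a value left-censored at a
    detection limit c corresponds to lower=-math.inf, upper=c.
    """
    a = (lower - mu) / sigma
    b = (upper - mu) / sigma
    mass = _cdf(b) - _cdf(a)
    if mass <= 0.0:
        raise ValueError("truncation region has (numerically) zero probability")
    pa, pb = _pdf(a), _pdf(b)
    # x * pdf(x) -> 0 as x -> +/-inf; set explicitly to avoid inf * 0 = nan
    apa = 0.0 if math.isinf(a) else a * pa
    bpb = 0.0 if math.isinf(b) else b * pb
    ratio = (pa - pb) / mass
    mean = mu + sigma * ratio
    var = sigma ** 2 * (1.0 + (apa - bpb) / mass - ratio ** 2)
    second = var + mean ** 2  # E[Y^2] = Var(Y) + E[Y]^2
    return mean, second


if __name__ == "__main__":
    # Hypothetical left-censored value: mean 2.0, sd 1.0, detection limit 1.7
    print(truncnorm_moments(2.0, 1.0, -math.inf, 1.7))
```

In the multivariate case the same two quantities are needed for the whole censored sub-vector, and the correlation between components (here, induced by the random effects and the DEC errors) is what makes the closed-form expressions more involved than this scalar version.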

Appendix B. Empirical information matrix

The elements of \(\widehat{s}_i\) in Equation (13) are given by

where \(\left. \dot{\mathbf {D}}_j(r) = \frac{\partial \mathbf {D}_j}{\partial \alpha _{jr}}\right| _{\alpha =\widehat{\alpha }}\), \(r = 1, 2, \ldots , \dim (\varvec{\alpha }_j)\); and \(\left. \dot{\mathbf {E}}_{ij}(s) = \frac{\partial \mathbf {E}_{ij}}{\partial \phi _{js}}\right| _{\phi =\widehat{\phi }}\), \(s = 1, 2\). For the DEC structure, the derivatives are given by
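As a sketch, assume the usual DEC parameterization of Muñoz et al. (1992), in which the \((k, l)\) entry of \(\mathbf{E}_{ij}\) is \(\phi_{j1}^{p}\) with \(p = |t_{ik} - t_{il}|^{\phi_{j2}}\), \(0 < \phi_{j1} < 1\), \(\phi_{j2} \ge 0\), and the diagonal equal to 1. Differentiating \(\phi_{j1}^{p}\) element-wise then gives \(\partial/\partial\phi_{j1} = p\,\phi_{j1}^{p-1}\) and \(\partial/\partial\phi_{j2} = \phi_{j1}^{p}\log(\phi_{j1})\,p\,\log|t_{ik} - t_{il}|\), both zero on the diagonal. The Python code below builds \(\mathbf{E}_{ij}\) and the two derivative matrices for a subject's irregular observation times; the function names are hypothetical, and the observation times within a subject are assumed distinct.

```python
import math
from typing import List, Sequence, Tuple

Matrix = List[List[float]]


def dec_matrix(times: Sequence[float], phi1: float, phi2: float) -> Matrix:
    """DEC correlation matrix with off-diagonal entries phi1 ** (|t_k - t_l| ** phi2).

    Assumes 0 < phi1 < 1 and phi2 >= 0; the diagonal is fixed at 1.
    """
    n = len(times)
    return [[1.0 if k == l else phi1 ** (abs(times[k] - times[l]) ** phi2)
             for l in range(n)] for k in range(n)]


def dec_derivatives(times: Sequence[float], phi1: float,
                    phi2: float) -> Tuple[Matrix, Matrix]:
    """Element-wise partial derivatives of the DEC matrix w.r.t. phi1 and phi2."""
    n = len(times)
    d1 = [[0.0] * n for _ in range(n)]
    d2 = [[0.0] * n for _ in range(n)]
    for k in range(n):
        for l in range(n):
            if k == l:
                continue  # diagonal is constant (= 1), so both derivatives vanish
            gap = abs(times[k] - times[l])
            if gap == 0.0:
                raise ValueError("observation times within a subject must be distinct")
            p = gap ** phi2                                   # |t_k - t_l| ** phi2
            d1[k][l] = p * phi1 ** (p - 1.0)                  # d/dphi1 of phi1 ** p
            d2[k][l] = phi1 ** p * math.log(phi1) * p * math.log(gap)  # d/dphi2
    return d1, d2


if __name__ == "__main__":
    # Hypothetical irregular visit times (e.g. in months) and DEC parameters
    t = [0.0, 0.5, 1.7, 4.0]
    print(dec_matrix(t, 0.6, 0.8))
    print(dec_derivatives(t, 0.6, 0.8))
```

A central finite difference of `dec_matrix` in either parameter should agree with the analytic matrices to within rounding error, which is a cheap check worth running before plugging the derivatives into the score vector \(\widehat{s}_i\).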


About this article

Cite this article

de Alencar, F.H.C., Matos, L.A. & Lachos, V.H. Finite Mixture of Censored Linear Mixed Models for Irregularly Observed Longitudinal Data. J Classif 39, 463–486 (2022). https://doi.org/10.1007/s00357-022-09415-x
