Abstract

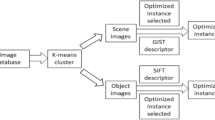

In this paper, we present a new object recognition model that learns from few instances by exploiting semantic distance. Learning object categories from many instances has been studied in computer vision for many years. In many cases, however, too few positive instances are available, especially for specialized categories, so we must take full advantage of all instances, including those that do not belong to the target category. The main insight is that, given a few positive instances of one category, we can treat other candidate instances as positive instances, according to their semantic distance to the category, when learning the model. Our model responds more strongly to instances that are semantically closer to the positive instances than to instances that are semantically farther away. We use a regularized kernel machine to train on images from the database. We demonstrate the superiority of our method over existing object recognition methods: experiments on an image database show that it not only reduces the number of training instances required but also maintains recognition accuracy.
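The core idea of the abstract, grading candidate instances by their semantic distance to the target category and fitting a regularized kernel machine whose response falls off with that distance, can be illustrated with a small sketch. It is not the authors' formulation. It assumes kernel ridge regression as the regularized kernel machine, an RBF kernel, and a hypothetical soft-target mapping `y = exp(-d / tau)`. The helper names (`graded_targets`, `fit_kernel_ridge`, `score`), the categories, the semantic distances, and the 2-D features are all made up for illustration.

```python
import math
from typing import Dict, List, Sequence

Vector = Sequence[float]


def rbf_kernel(a: Vector, b: Vector, gamma: float = 1.0) -> float:
    """Gaussian (RBF) kernel k(a, b) = exp(-gamma * ||a - b||^2)."""
    sq_dist = sum((x - y) ** 2 for x, y in zip(a, b))
    return math.exp(-gamma * sq_dist)


def graded_targets(categories: List[str], distance: Dict[str, float],
                   tau: float = 1.0) -> List[float]:
    """Hypothetical soft labels: target = exp(-d / tau).

    True positives (d = 0) get 1.0; semantically farther categories get
    smaller targets instead of a hard negative label.
    """
    return [math.exp(-distance[c] / tau) for c in categories]


def solve(a: List[List[float]], b: List[float]) -> List[float]:
    """Solve a @ x = b by Gaussian elimination with partial pivoting."""
    n = len(b)
    m = [list(row) + [rhs] for row, rhs in zip(a, b)]  # augmented matrix
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(m[r][col]))
        m[col], m[pivot] = m[pivot], m[col]
        for r in range(col + 1, n):
            factor = m[r][col] / m[col][col]
            for c in range(col, n + 1):
                m[r][c] -= factor * m[col][c]
    x = [0.0] * n
    for r in range(n - 1, -1, -1):  # back substitution
        s = sum(m[r][c] * x[c] for c in range(r + 1, n))
        x[r] = (m[r][n] - s) / m[r][r]
    return x


def fit_kernel_ridge(xs: List[Vector], ys: List[float],
                     lam: float = 0.1, gamma: float = 1.0) -> List[float]:
    """Kernel ridge regression (assumed learner): alpha = (K + lam * I)^-1 y."""
    n = len(xs)
    k = [[rbf_kernel(xs[i], xs[j], gamma) + (lam if i == j else 0.0)
          for j in range(n)] for i in range(n)]
    return solve(k, ys)


def score(x: Vector, xs: List[Vector], alpha: List[float],
          gamma: float = 1.0) -> float:
    """Decision value f(x) = sum_i alpha_i * k(x_i, x)."""
    return sum(a_i * rbf_kernel(x_i, x, gamma) for a_i, x_i in zip(alpha, xs))


# Illustrative data: a target category ("horse") with only two positives,
# plus candidates from a semantically close ("donkey") and a distant ("car")
# category. Features and distances are invented for this example.
train_x = [(0.0, 0.0), (0.2, 0.1), (1.0, 0.0), (1.1, 0.2), (4.0, 4.0), (4.2, 3.9)]
train_cat = ["horse", "horse", "donkey", "donkey", "car", "car"]
semantic_distance = {"horse": 0.0, "donkey": 1.0, "car": 4.0}

targets = graded_targets(train_cat, semantic_distance, tau=1.0)
alpha = fit_kernel_ridge(train_x, targets, lam=0.1, gamma=1.0)

queries = {"horse-like": (0.1, 0.05), "donkey-like": (1.05, 0.1), "car-like": (4.1, 3.95)}
scores = {name: score(q, train_x, alpha) for name, q in queries.items()}
for name, value in scores.items():
    print(f"{name}: {value:.3f}")
```

Because candidates receive graded targets rather than hard negative labels, a query in the semantically close "donkey" region scores between the "horse" and "car" regions, mirroring the abstract's claim that the model's response weakens with semantic distance. A real system would need an actual semantic distance between categories and real image features; the paper's own loss, kernel, and distance measure may differ from these assumptions.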

Acknowledgments

The authors would like to thank the anonymous reviewers for their valuable comments. This work is supported by the National Key Technology R&D Program of China (2012BAH01F03), the National Basic Research (973) Program of China (2011CB302203), and the research fund of the Tsinghua-Tencent Joint Laboratory for Internet Innovation Technology.

Cite this article

Wu, H., Miao, Z., Wang, Y. et al. Optimized recognition with few instances based on semantic distance. Vis Comput 31, 367–375 (2015). https://doi.org/10.1007/s00371-014-0931-8