Abstract

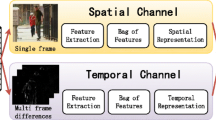

Deep learning approaches to action recognition have emphasized learning spatio-temporal features. Unlike previous works, we separate unified spatio-temporal feature learning into a spatial feature learning procedure and a spatial/temporal feature pooling procedure. Using an independent subspace analysis (ISA) network regularized by temporal slowness, we learn invariant spatial features from sampled video cubes. To make the network robust to cluttered backgrounds, we incorporate the denoising criterion into it. Local spatio-temporal features are then obtained by pooling the learned features along the spatial and temporal dimensions. The key points are that we learn spatial features from video cubes and pool features from sequences of spatial features. We evaluate the learned local spatio-temporal features on three benchmark action datasets, and extensive experiments demonstrate the effectiveness of the proposed feature learning architecture.
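The abstract describes the pipeline only at a high level. The following is a minimal pure-Python sketch, under our own assumptions, of the ingredients it names: an ISA layer (square root of the summed squared filter responses within each subspace), masking noise standing in for the denoising criterion, an L1 temporal slowness term between the features of consecutive frames of a cube, and spatial-then-temporal pooling. The function names, the subspace size, the choice of masking noise, the L1 penalty and its weight `lam`, and the max/average pooling operators are all illustrative assumptions, not the authors' exact formulation; the ISA orthonormality constraint on the filters is also omitted.

```python
import math
import random


def matvec(W, x):
    """Multiply a matrix stored as a list of rows by a vector."""
    return [sum(w * xi for w, xi in zip(row, x)) for row in W]


def isa_features(W, x, group_size=2):
    """ISA layer: square the linear filter responses, sum them within each
    subspace (consecutive groups of `group_size` filters), take the square root."""
    s = matvec(W, x)
    return [math.sqrt(sum(v * v for v in s[g:g + group_size]))
            for g in range(0, len(s), group_size)]


def mask_noise(x, drop_prob, rng):
    """Denoising-style corruption (assumed masking noise): zero each input
    value independently with probability drop_prob."""
    return [0.0 if rng.random() < drop_prob else xi for xi in x]


def slowness_objective(W, cube, lam=1.0, drop_prob=0.0, rng=None, group_size=2):
    """Hypothetical per-cube objective: ISA sparsity (sum of subspace responses)
    on corrupted frames plus lam times the L1 change of the features between
    consecutive frames (temporal slowness). Orthonormality of W is NOT enforced."""
    rng = rng or random.Random(0)
    feats = [isa_features(W, mask_noise(f, drop_prob, rng), group_size) for f in cube]
    sparsity = sum(sum(p) for p in feats)
    slowness = sum(sum(abs(a - b) for a, b in zip(p0, p1))
                   for p0, p1 in zip(feats, feats[1:]))
    return sparsity + lam * slowness


def pool_spatio_temporal(feature_maps):
    """feature_maps[t][pos] is the feature vector at spatial position pos of frame t.
    Max-pool over positions within each frame, then average the per-frame
    vectors over time to get one local spatio-temporal descriptor."""
    per_frame = [[max(col) for col in zip(*frame)] for frame in feature_maps]
    return [sum(col) / len(col) for col in zip(*per_frame)]
```

For example, `isa_features([[1.0, 0.0], [0.0, 1.0]], [3.0, 4.0])` returns `[5.0]`: two identity filters form a single subspace, whose response is the Euclidean norm of the input and is therefore unchanged by rotations within that subspace, which is the kind of invariance ISA is meant to provide.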

Acknowledgments

This work was supported in part by the National Natural Science Foundation of China (61375038).

Cite this article

Pei, L., Ye, M., Zhao, X. et al. Action recognition by learning temporal slowness invariant features. Vis Comput 32, 1395–1404 (2016). https://doi.org/10.1007/s00371-015-1090-2

DOI: https://doi.org/10.1007/s00371-015-1090-2