Abstract

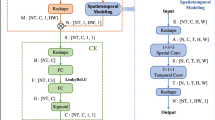

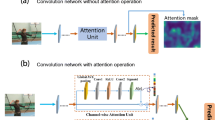

Inflated 3D ConvNet (I3D) uses 3D convolution to enrich the semantic information of features and forms a strong baseline for human action recognition. However, 3D convolution extracts features by mixing spatial, temporal and cross-channel information together, and therefore lacks the ability to emphasize meaningful features along specific dimensions. This is especially true for cross-channel information, which is crucial for recognizing fine-grained actions. In this paper, we propose a novel multi-view attention mechanism, named the channel–spatial–temporal attention (CSTA) block, to guide the network to pay more attention to clues that are useful for fine-grained action recognition. Specifically, CSTA consists of three branches: a channel–spatial branch, a channel–temporal branch and a spatial–temporal branch. By plugging these branches directly into I3D, we further explore how the insertion location and the number of blocks affect recognition accuracy. We also examine two different strategies for designing a mixture of multiple CSTA blocks. Extensive experiments demonstrate the effectiveness of CSTA. In particular, when only RGB frames are used to train the network, I3D equipped with CSTA (I3D–CSTA) achieves accuracies of 95.76% and 73.97% on UCF101 and HMDB51, respectively. These results are comparable to those of methods that use both RGB frames and optical flow. Moreover, with the assistance of optical flow, the recognition accuracies of I3D–CSTA rise to 98.2% on UCF101 and 82.9% on HMDB51, outperforming many state-of-the-art methods.
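The abstract does not spell out how each CSTA branch computes its attention. As a rough illustration only, the NumPy sketch below shows one way three branches could re-weight an I3D feature map of shape (N, C, T, H, W): each branch averages out the axis it does not attend over, squashes the result into a (0, 1) gate with a sigmoid, and scales the input. The names `csta_sketch` and `sigmoid`, the parameter-free gating (no learned layers) and the averaging of the three branches are all assumptions made for this example, loosely in the spirit of squeeze-and-excitation gating; this is not the CSTA block as defined in the paper.

```python
import numpy as np


def sigmoid(z):
    """Element-wise logistic function."""
    return 1.0 / (1.0 + np.exp(-z))


def csta_sketch(x):
    """Hypothetical, parameter-free three-branch attention.

    x: feature map of shape (N, C, T, H, W), e.g. the output of an I3D stage.
    Each branch averages over the axes it does not attend to, turns the
    result into a (0, 1) gate with a sigmoid, and re-weights x.
    Illustration only: NOT the CSTA block from the paper.
    """
    # channel-spatial branch: average over time -> gate of shape (N, C, 1, H, W)
    cs_gate = sigmoid(x.mean(axis=2, keepdims=True))
    # channel-temporal branch: average over space -> gate of shape (N, C, T, 1, 1)
    ct_gate = sigmoid(x.mean(axis=(3, 4), keepdims=True))
    # spatial-temporal branch: average over channels -> gate of shape (N, 1, T, H, W)
    st_gate = sigmoid(x.mean(axis=1, keepdims=True))
    # broadcasting expands every gate back to (N, C, T, H, W); the three
    # re-weighted maps are averaged (an assumed fusion rule)
    return x * (cs_gate + ct_gate + st_gate) / 3.0


if __name__ == "__main__":
    rng = np.random.default_rng(0)
    feats = rng.standard_normal((2, 64, 8, 14, 14))  # N, C, T, H, W
    out = csta_sketch(feats)
    print(out.shape)  # (2, 64, 8, 14, 14)
```

Because every gate is broadcast back to the full (N, C, T, H, W) shape, a block like this leaves tensor shapes unchanged, which is what makes plug-in attention convenient to insert at different depths of a backbone such as I3D.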

Acknowledgements

This work is supported in part by the National Natural Science Foundation of China (NSFC) under Grants 61622305 and 61502238, and in part by the Natural Science Foundation of Jiangsu Province of China (NSFJPC) under Grant BK20160040.

Ethics declarations

Conflict of interest

The authors declare that they have no conflict of interest.

About this article

Cite this article

Zhu, Y., Liu, G. Fine-grained action recognition using multi-view attentions. Vis Comput 36, 1771–1781 (2020). https://doi.org/10.1007/s00371-019-01770-y
