Abstract

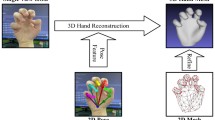

Most existing methods for 3D hand analysis from RGB images focus on estimating hand keypoints or poses, which cannot capture the geometric details of the 3D hand shape. In this work, we propose a novel method to reconstruct a 3D hand mesh from a single monocular RGB image. Unlike current parameter-based or pose-based methods, our method directly estimates the 3D hand mesh using a graph convolutional neural network (GCN). Our network consists of two modules: a hand localization and mask generation module, and a 3D hand mesh reconstruction module. The first module, a VGG16-based network, localizes the hand region in the input image and generates a binary mask of the hand. The second module takes the high-order features from the first and uses a GCN-based network to estimate the coordinates of each vertex of the hand mesh, from which the 3D hand shape is reconstructed. To achieve better accuracy, we propose a novel loss based on the differential properties of the discrete mesh. We also use professional software to create a large synthetic dataset that contains both ground-truth 3D hand meshes and poses for training. To handle real-world data, we use CycleGAN to translate real-world images into the domain of our synthetic dataset. We demonstrate that our method produces accurate 3D hand meshes and runs efficiently enough for real-time applications.
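To make the two central ideas of the abstract concrete, the following is a minimal NumPy sketch of (a) one graph convolution over mesh vertices and (b) a loss that compares a differential quantity of two meshes. It is an illustration under stated assumptions, not the network or the loss proposed in this paper: the convolution uses the common symmetric normalization of the adjacency matrix with self-loops, the differential quantity is the uniform Laplacian (each vertex minus the mean of its neighbours), and all function names and the tetrahedron example are hypothetical.

```python
import numpy as np


def adjacency(num_vertices, edges):
    """Dense symmetric adjacency matrix of an undirected mesh graph."""
    a = np.zeros((num_vertices, num_vertices))
    for i, j in edges:
        a[i, j] = 1.0
        a[j, i] = 1.0
    return a


def normalized_adjacency(num_vertices, edges):
    """Return D^{-1/2} (A + I) D^{-1/2}, with self-loops added (assumed scheme)."""
    a = adjacency(num_vertices, edges) + np.eye(num_vertices)
    d_inv_sqrt = 1.0 / np.sqrt(a.sum(axis=1))
    return a * d_inv_sqrt[:, None] * d_inv_sqrt[None, :]


def graph_conv(features, a_hat, weight, bias):
    """One graph convolution: average over neighbours, then a linear map."""
    return a_hat @ features @ weight + bias


def uniform_laplacian(vertices, edges):
    """Offset of each vertex from the mean of its neighbours."""
    a = adjacency(vertices.shape[0], edges)
    # Guard against isolated vertices (assumed not to occur in a hand mesh).
    deg = np.maximum(a.sum(axis=1, keepdims=True), 1.0)
    return vertices - (a @ vertices) / deg


def laplacian_loss(pred, target, edges):
    """Mean squared difference between the Laplacian coordinates of two meshes."""
    diff = uniform_laplacian(pred, edges) - uniform_laplacian(target, edges)
    return float(np.mean(np.sum(diff ** 2, axis=1)))


# Hypothetical toy mesh: a tetrahedron (4 vertices, every pair connected).
edges = [(0, 1), (0, 2), (0, 3), (1, 2), (1, 3), (2, 3)]
target = np.array([[0.0, 0.0, 0.0],
                   [1.0, 0.0, 0.0],
                   [0.0, 1.0, 0.0],
                   [0.0, 0.0, 1.0]])

rng = np.random.default_rng(0)
a_hat = normalized_adjacency(4, edges)
vertex_features = rng.normal(size=(4, 8))   # stand-in for image features per vertex
weight = rng.normal(size=(8, 3))            # maps 8 feature channels to xyz
bias = np.zeros(3)

pred = graph_conv(vertex_features, a_hat, weight, bias)
loss = laplacian_loss(pred, target, edges)
```

Because the uniform Laplacian only measures each vertex relative to its neighbours, this particular loss ignores a global translation of the mesh; in practice it would be combined with a direct vertex position term.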

Acknowledgements

The authors are grateful to Professor Yongjun Li (1968–2019) for the valuable discussion and his support for this work.

Funding

This work was supported by the Natural Science Foundation of Guangdong Province under Grant 2019A1515011793.

Ethics declarations

Conflict of interest

All authors declare that they have no conflict of interest.

About this article

Cite this article

Peng, H., Xian, C. & Zhang, Y. 3D hand mesh reconstruction from a monocular RGB image. Vis Comput 36, 2227–2239 (2020). https://doi.org/10.1007/s00371-020-01908-3

DOI: https://doi.org/10.1007/s00371-020-01908-3