Abstract

In this article we develop a vectorial kernel method—a powerful method which solves in a unified framework all the problems related to the enumeration of words generated by a pushdown automaton. We apply it for the enumeration of lattice paths that avoid a fixed word (a pattern), or for counting the occurrences of a given pattern. We unify results from numerous articles concerning patterns like peaks, valleys, humps, etc., in Dyck and Motzkin paths. This refines the study by Banderier and Flajolet from 2002 on enumeration and asymptotics of lattice paths: we extend here their results to pattern-avoiding walks/bridges/meanders/excursions. We show that the autocorrelation polynomial of this forbidden pattern, as introduced by Guibas and Odlyzko in 1981 in the context of rational languages, still plays a crucial role for our algebraic languages. En passant, our results give the enumeration of some classes of self-avoiding walks, and prove several conjectures from the On-Line Encyclopedia of Integer Sequences. Finally, we also give the trivariate generating function (length, final altitude, number of occurrences of the pattern p), and we prove that the number of occurrences is normally distributed and linear with respect to the length of the walk: this is what Flajolet and Sedgewick call an instance of Borges’s theorem.


1 Introduction

Combinatorial structures having a rational or an algebraic generating function play a key role in many fields: computer science (e.g. for the analysis of algorithms involving trees, lists, words), computational geometry (integer points in polytopes, maps, graph decomposition), bioinformatics (RNA structure, pattern matching), number theory (integer compositions, automatic sequences and modular properties, integer solutions of varieties), probability theory (Markov chains, directed random walks); see e.g. [8, 22, 42, 78]. Rational functions are often the trace of a structure encodable by an automaton, while algebraic generating functions are often the trace of a structure which has a tree-like recursive specification (typically, a context-free grammar), or which satisfies a functional equation solvable by variants of the kernel method.

One of the origins of this method goes back to 1968, when Knuth introduced it to enumerate permutations sortable by a stack; see the solution to Exercise 2.2.1–4 in The Art of Computer Programming [52, pp. 536–537], which presents a “new method for solving the ballot problem”. For this problem, the solution involves the root of a quadratic polynomial (the so-called kernel). The method was later extended to more general equations (see e.g. [9, 24, 39, 40]). We refer to [14] for more on the long history and the numerous evolutions of this method, which found many applications, e.g. for planar maps, permutations, lattice paths, directed animals, polymers, be it in combinatorics, statistical mechanics, computer algebra, or probability theory.

In this article, we show how a new extension of this method, which we call the vectorial kernel method, allows us to solve the enumeration of languages generated by any pushdown automaton. Since the seminal article by Chomsky and Schützenberger on the link between context-free grammars and algebraic functions [27], which also holds for pushdown automata [76], many articles tackled the enumeration of combinatorial structures via a formal language approach. See e.g. [36, 56, 61] for such an approach on the so-called generalized Dyck languages. The words generated by these languages are in bijection with directed lattice paths, and we show how these fundamental objects can be enumerated when they have the additional constraint to avoid a given pattern. Certainly, such a class of objects can be described as the intersection of a context-free language and a rational language; therefore, classical closure properties imply that they are directly generated by another (but huge and clumsy) context-free language. Unfortunately, although the algebraic system associated with the corresponding context-free grammar is in theory solvable by a resultant computation or by Gröbner bases, this leads in practice to equations which are so big that no current computer could handle them in memory, even for generalized Dyck languages with only 20 different letters.

Our vectorial kernel method offers a generic and efficient way to tackle such enumeration and bypass these intractable equations. Our approach thus generalizes the enumeration and asymptotics obtained by Banderier and Flajolet [9] for classical lattice paths to lattice paths avoiding a given pattern. This work continues the tradition of investigation of enumerative and asymptotic properties of lattice paths via analytic combinatorics [9, 10, 12, 14]. This allows us to unify the considerations of many articles which investigated natural patterns like peaks, valleys, humps, etc., in Dyck and Motzkin paths, corresponding patterns in trees, compositions, ...; see e.g. [5, 16, 25, 33, 38, 53, 57, 60, 62, 66, 69] and all the examples mentioned in our Sect. 8.

2 Definitions, Notations, Autocorrelation Polynomial

Let \({\mathcal {S}}\), the set of steps, be some finite subset of \({\mathbb {Z}}\) that contains at least one negative and at least one positive number. A lattice path with steps from \({\mathcal {S}}\) is a finite word \(w=[v_1, v_2, \ldots , v_n]\) in which all letters \(v_i\) belong to \({\mathcal {S}}\), visualized as a directed polygonal line in the plane, which starts at the origin and is formed by successive appending of vectors \((1, v_1), (1, v_2), \ldots , (1, v_n)\). The letters that form the path are referred to as its steps. The length of \(w=[v_1, v_2, \ldots , v_n]\), to be denoted by |w|, is the number of steps in w (that is, n).

The final altitude of w, to be denoted by \({\text {alt}}(w)\), is the sum of all steps in w (that is, \(v_1 + v_2 + \cdots + v_n\)). Visually, \((|w|, {\text {alt}}(w))\) is the point where w terminates.

Under this setting, it is usual to consider two possible restrictions: that the whole path be (weakly) above the x-axis and that the final altitude be 0 (that is, the path terminates at the x-axis). Consequently, one considers four classes of lattice paths (see Table 1 for an illustration):

- 1.

A walk is any path as described above.

The corresponding generating function is denoted by W(t, u).

Nota bene: in all our generating functions, the variable t encodes the length of the paths, and the variable u the final altitude.

- 2.

A bridge is a path that terminates at the x-axis.

The corresponding generating function is denoted by B(t). One has \(B(t)=[u^0]W(t,u)\).

- 3.

A meander is a path that stays (weakly) above the x-axis.

The corresponding generating function is denoted by M(t, u).

- 4.

An excursion is a path that stays (weakly) above the x-axis and also terminates at the x-axis. In other words, an excursion satisfies both restrictions.

The corresponding generating function is denoted by E(t). One has \(E(t)=M(t,0)\).
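The four classes can be made concrete with a few lines of Python. The following is only an illustrative sketch (the function names `altitudes`, `is_bridge`, `is_meander`, `is_excursion` and `count_classes` are hypothetical helpers, not notation from the article); paths are encoded as sequences of integer steps, and the counts are obtained by brute force, so this is only practical for short paths.

```python
from itertools import accumulate, product

def altitudes(path):
    """Successive altitudes of the path, starting from 0 at the origin."""
    return [0] + list(accumulate(path))

def is_bridge(path):
    """A bridge terminates at the x-axis."""
    return altitudes(path)[-1] == 0

def is_meander(path):
    """A meander stays (weakly) above the x-axis."""
    return min(altitudes(path)) >= 0

def is_excursion(path):
    """An excursion satisfies both restrictions."""
    return is_meander(path) and is_bridge(path)

def count_classes(steps, n):
    """Brute-force counts (walks, bridges, meanders, excursions) of length n."""
    paths = list(product(steps, repeat=n))
    return (len(paths),
            sum(is_bridge(w) for w in paths),
            sum(is_meander(w) for w in paths),
            sum(is_excursion(w) for w in paths))

# Dyck-type steps {-1, +1}, length 4
print(count_classes([-1, 1], 4))
```

For the step set \(\{-1,1\}\) and length 4, this enumerates the 16 walks and singles out the bridges, meanders and excursions among them.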

For each of these classes, Banderier and Flajolet [9] gave general expressions for the corresponding generating functions and the asymptotics of their coefficients. The step polynomial of the set of steps \({\mathcal {S}}\), denoted by P(u), is defined by

$$\begin{aligned} P(u)=\sum _{s\in {\mathcal {S}}} u^s. \end{aligned}$$(1)

The smallest (negative) number in \({\mathcal {S}}\) is denoted by \(-c\), and the largest (positive) number in \({\mathcal {S}}\) is denoted by d: that is, if one orders the terms of P(u) by the powers of u, one has \(P(u)=u^{-c}+\cdots +u^d\).

We extend the study of Banderier and Flajolet by considering lattice paths with step set \({\mathcal {S}}\) that avoid a certain pattern of length \(\ell \), that is, an a priori fixed path

$$\begin{aligned} p=[a_1, a_2, \ldots , a_\ell ], \quad a_i \in {\mathcal {S}}. \end{aligned}$$(2)

To be precise, we define an occurrence of p in a lattice path w as a (contiguous) substring which coincides with p. If there is no occurrence of p in w, we say that w avoids p. For example, the path \([1, 1, 1, 2, -3, 1, 2]\) has two occurrences of [1, 1], two occurrences of [1, 2], and it avoids [2, 1].
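The notion of occurrence can be checked mechanically. The sketch below (the helper name `occurrences` is an illustrative choice) lists the starting positions of all contiguous, possibly overlapping, occurrences of a pattern in a path, both given as Python lists of steps, and replays the example above.

```python
def occurrences(path, pattern):
    """Start indices of the (possibly overlapping) occurrences of pattern in path."""
    k = len(pattern)
    return [i for i in range(len(path) - k + 1) if path[i:i + k] == pattern]

w = [1, 1, 1, 2, -3, 1, 2]
print(len(occurrences(w, [1, 1])))   # occurrences of [1, 1]
print(len(occurrences(w, [1, 2])))   # occurrences of [1, 2]
print(occurrences(w, [2, 1]) == [])  # True exactly when w avoids [2, 1]
```

Note that the two occurrences of [1, 1] overlap: they start at consecutive positions.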

Before we state our results, we introduce some notations.

A presuffix of p is a non-empty string that occurs in p both as a prefix and as a suffix. In particular, the whole word p is a (trivial) presuffix of itself. If p has one or several non-trivial presuffixes, we say that p exhibits an autocorrelation phenomenon. For example, the pattern \(p=[1, 1, -2, 1, -2]\) has no autocorrelation. In contrast, the pattern \(p=[1,1,2,-3,1,1,2,-3,1,1]\) has three non-trivial presuffixes: [1], [1, 1], and \([1,1,2,-3,1,1]\), and thus in this case we have autocorrelation.

While analysing the Boyer–Moore string searching algorithm and properties of periodic words, Guibas and Odlyzko introduced in 1981 [45] what turns out to be one of the key characters of our article, the autocorrelation polynomial of the pattern p. For any given word p, let \({\mathcal {Q}}\) be the set of its presuffixes; the autocorrelation polynomial of p is

$$\begin{aligned} R(t,u)=\sum _{q\in {\mathcal {Q}}} t^{|{\bar{q}}|}\, u^{{\text {alt}}({\bar{q}})}, \end{aligned}$$(3)

where \({\bar{q}}\) denotes the complement of q in p (i.e., \(p=q \bar{q}\)). The choice of the letter R to denote this polynomial is a mnemonic of the fact that it encodes the relations of the pattern p.

For example, consider the pattern \(p=[1,1,2,3,1,1,2,3,1,1]\). Its four presuffixes produce four terms of R(t, u) as follows:

| Presuffix \(q\) | Length of its complement \(\lvert {\bar{q}}\rvert \) | Final altitude of its complement \({\text {alt}}({\bar{q}})\) |
|---|---|---|
| [1] | 9 | 15 |
| [1, 1] | 8 | 14 |
| [1, 1, 2, 3, 1, 1] | 4 | 7 |
| [1, 1, 2, 3, 1, 1, 2, 3, 1, 1] | 0 | 0 |

Therefore, for this p we have \(R(t,u) = 1 + t^4u^7 + t^8u^{14} + t^9u^{15}\).

Notice that if for some p no autocorrelation occurs, then we have \({\mathcal {Q}}=\{p\}\) and therefore \(R(t,u)=1\).
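The computation of R(t, u) from the presuffixes is easy to automate. The following sketch is one possible encoding, chosen here for illustration: patterns are Python lists, and a polynomial in t and u is stored as a dictionary mapping the exponent pair (i, j) of a monomial \(t^i u^j\) to its coefficient. The function names are hypothetical.

```python
def presuffixes(p):
    """Non-empty words that are both a prefix and a suffix of p (p itself included)."""
    return [p[:k] for k in range(1, len(p) + 1) if p[:k] == p[len(p) - k:]]

def autocorrelation(p):
    """R(t,u) as a dict {(i, j): coefficient} standing for the monomials t^i u^j."""
    R = {}
    for q in presuffixes(p):
        comp = p[len(q):]                 # complement of q in p, i.e. p = q . comp
        key = (len(comp), sum(comp))      # (length, final altitude) of the complement
        R[key] = R.get(key, 0) + 1
    return R

# The example pattern above: four presuffixes, hence four monomials
print(autocorrelation([1, 1, 2, 3, 1, 1, 2, 3, 1, 1]))
# A pattern without autocorrelation: only the constant monomial remains
print(autocorrelation([1, 1, -2, 1, -2]))
```

The trivial presuffix (the whole pattern) has an empty complement and therefore always contributes the constant term 1.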

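Finally, we define the kernel as the following Laurent polynomial:

$$\begin{aligned} K(t,u):=\bigl (1-tP(u)\bigr )R(t,u)+t^\ell u^{{\text {alt}}(p)}. \end{aligned}$$(4)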

It will be shown in Proposition 4.4 of Sect. 4.2 that each root u(t) of \(K(t,u)=0\) is either small (meaning \(\lim _{t \rightarrow 0} u(t)=0\)) or large (meaning \(\lim _{t \rightarrow 0} |u(t)|= \infty \)). Moreover, the number of small roots (to be denoted by e) is the absolute value of the lowest power of u, and the number of large roots (to be denoted by f) is the highest power of u in K(t, u). In particular, if \(R(t,u)=1\), then we have \(e=\max \{c, -{\text {alt}}(p)\}\), and \(f=\max \{d, {\text {alt}}(p)\}\).
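To experiment with the numbers e and f, one can expand the kernel explicitly. The sketch below assumes the expression \(K(t,u)=(1-tP(u))R(t,u)+t^\ell u^{{\text {alt}}(p)}\) recalled at the beginning of the proof of Proposition 4.4, with unweighted steps, and stores Laurent polynomials as dictionaries indexed by exponent pairs (i, j) for \(t^i u^j\). The function names are illustrative, not taken from the article.

```python
def autocorrelation(p):
    """R(t,u) as {(i, j): coeff}: one monomial per presuffix complement."""
    n = len(p)
    return {(n - k, sum(p[k:])): 1 for k in range(1, n + 1) if p[:k] == p[n - k:]}

def kernel(steps, p):
    """K(t,u) = (1 - t P(u)) R(t,u) + t^l u^alt(p) as {(i, j): coeff} for t^i u^j."""
    K = {}
    def add(i, j, c):
        K[(i, j)] = K.get((i, j), 0) + c
    for (i, j), c in autocorrelation(p).items():
        add(i, j, c)                      # contribution of R(t,u)
        for s in steps:
            add(i + 1, j + s, -c)         # contribution of -t u^s R(t,u)
    add(len(p), sum(p), 1)                # contribution of t^l u^alt(p)
    return {m: c for m, c in K.items() if c != 0}

def root_counts(K):
    """(e, f): numbers of small and large roots, read off the u-exponents of K."""
    exps = [j for (_, j) in K]
    return max(0, -min(exps)), max(0, max(exps))

K = kernel([-1, 3], [-1, -1])
print(K, root_counts(K))
```

For \({\mathcal {S}}=\{-1,3\}\) and \(p=[-1,-1]\), all negative powers of u cancel, which is why the lowest power of u has to be read off the expanded kernel rather than off P(u) alone.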

Equipped with these definitions, we can give a first illustration of some of our results in Table 1. From now on, we use the notations W / B / M / E for generating functions enumerating paths constrained to avoid a pattern p.

In the next section, we present in a more detailed way the generating functions of all these paths.

3 Lattice Paths with Forbidden Patterns

In this section, we state our main results for the generating functions W / B / M / E of walks, bridges, meanders, excursions, constrained to avoid a given pattern p. In all the theorems, we denote by \(\ell \) the length of the pattern p, and by \({\text {alt}}(p)\) its final altitude.

Theorem 3.1

(Generating function of walks, generic pattern) Let \({\mathcal {S}}\) be a set of steps, and let p be a pattern with steps from \({\mathcal {S}}\).

- 1.

The bivariate generating function for walks avoiding the pattern p is

$$\begin{aligned} W(t,u)=\frac{R(t,u)}{K(t,u)}. \end{aligned}$$(5)

If one does not keep track of the final altitude, this yields

$$\begin{aligned} W(t) = W(t,1)=\frac{1}{1- tP(1) + t^\ell /R(t,1)}. \end{aligned}$$(6)

- 2.

The generating function for bridges avoiding the pattern p is

$$\begin{aligned} B(t)= - \sum _{i=1}^e \frac{u_i'(t)}{u_i(t)} \frac{R(t,u_i)}{K_t(t,u_i)} \text{, } \end{aligned}$$(7)

where \(u_1(t), \ldots , u_e(t)\) are the small roots of the kernel K(t, u), as defined in (4).
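Formula (6) can be compared with a brute-force count on small cases. The sketch below assumes unweighted steps, so that \(P(1)=|{\mathcal {S}}|\), and expands \(W(t,1)=R(t,1)/K(t,1)\) as a power series with integer arithmetic; the function names are illustrative only, and the brute-force part is exponential in the length.

```python
from itertools import product

def walks_series(steps, p, N):
    """First N+1 coefficients of W(t,1) = R(t,1) / K(t,1), by power-series division."""
    l = len(p)
    R = [0] * (N + 1)
    for k in range(1, l + 1):             # presuffix of length k, complement of length l-k
        if p[:k] == p[l - k:] and l - k <= N:
            R[l - k] += 1
    # K(t,1) = (1 - |S| t) R(t,1) + t^l
    K = [R[n] - (len(steps) * R[n - 1] if n else 0) for n in range(N + 1)]
    if l <= N:
        K[l] += 1
    W = []
    for n in range(N + 1):                # K[0] = 1, so the division stays in the integers
        W.append(R[n] - sum(K[k] * W[n - k] for k in range(1, n + 1)))
    return W

def walks_brute_force(steps, p, N):
    """Number of p-avoiding walks of each length 0..N, by exhaustive enumeration."""
    k = len(p)
    def avoids(w):
        return all(list(w[i:i + k]) != p for i in range(len(w) - k + 1))
    return [sum(avoids(w) for w in product(steps, repeat=n)) for n in range(N + 1)]

print(walks_series([-1, 1], [1, 1], 6))
print(walks_brute_force([-1, 1], [1, 1], 6))
```

For \({\mathcal {S}}=\{-1,1\}\) and \(p=[1,1]\) both functions return the Fibonacci-type sequence counting binary words without two consecutive up-steps.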

In order to find the generating functions of meanders and excursions, we shall use a novel approach that we call the vectorial kernel method. With this method, we obtain formulas that generalize those from the Banderier–Flajolet study (in which they considered classical paths, without the additional pattern constraint).

Theorem 3.2

(Generating function of meanders and excursions, generic pattern) The bivariate generating function of meanders avoiding the pattern p is

$$\begin{aligned} M(t,u)=\frac{G(t,u)}{u^e \, K(t,u)} \prod _{i=1}^e \bigl (u-u_i(t)\bigr ), \end{aligned}$$(8)

where \(u_1(t), \ldots , u_e(t)\) are the small roots of \(K(t, u)=0\), and G(t, u) is a polynomial in u (and a formal power series in t) which will be characterized in (27).

For excursions, just take the above closed form for \(u\rightarrow 0\).

Although we present here this formula under the paradigm of patterns in lattice paths, it holds in fact much more generally for the enumeration of words generated by any pushdown automaton. This shall become transparent via the proof given in Sect. 5 and via the examples from Sect. 8.

We now introduce two classes of patterns for which the factor G(t, u) in Formula (8) has a very nice simple shape.

Definition 3.3

(Quasimeanders, reversed meanders) A quasimeander is a lattice path which does not cross the x-axis, except, possibly, at the last step. A reversed meander is a lattice path whose terminal point has a (strictly) smaller y-coordinate than all other points. (Notice that the empty path is both a quasimeander and a reversed meander.)

Theorem 3.4

(Generating function of meanders, quasimeander pattern subcase) Let p be a quasimeander.

- 1.

The bivariate generating function of meanders avoiding the pattern p is

$$\begin{aligned} M(t,u) = \frac{R(t,u)}{u^c \, K(t,u)} \prod _{i=1}^c \bigl (u-u_i(t)\bigr ), \end{aligned}$$(9)

where \(u_1(t), \ldots , u_c(t)\) are the small roots of \(K(t, u)=0\). If one does not keep track of the final altitude, this yields

$$\begin{aligned} M(t) = M(t,1)= \frac{R(t,1)}{K(t,1)} \prod _{i=1}^c \bigl (1-u_i(t)\bigr ). \end{aligned}$$(10)

- 2.

The generating function for excursions avoiding the pattern p is

$$\begin{aligned} E(t)=M(t,0)= {\left\{ \begin{array}{ll} \displaystyle {\frac{(-1)^{c}}{-t} \prod _{i=1}^c u_i(t)} &{} \text { if } {\text {alt}}(p)>-c\text{, } \\ &{} \\ \displaystyle {\frac{(-1)^{c}}{t^\ell -t} \prod _{i=1}^c u_i(t)} &{} \text { if } {\text {alt}}(p)=-c. \end{array}\right. } \end{aligned}$$(11)

Theorem 3.5

(Generating function of meanders, reversed meander pattern subcase) Let p be a reversed meander.

- 1.

The bivariate generating function for meanders avoiding the pattern p is

$$\begin{aligned} M(t,u) = \frac{1}{u^e \, K(t,u)} \prod _{i=1}^e \bigl (u-u_i(t)\bigr )\text{, } \end{aligned}$$(12)

where \(u_1(t), \ldots , u_e(t)\) are the small roots of \(K(t, u)=0\). If one does not keep track of the final altitude, this yields

$$\begin{aligned} M(t) = M(t,1)= \frac{1}{K(t,1)} \prod _{i=1}^e \bigl (1-u_i(t)\bigr )\text{. } \end{aligned}$$(13)

- 2.

The generating function for excursions avoiding the pattern p is

$$\begin{aligned} E(t)=M(t,0)= \displaystyle {\frac{(-1)^{e}}{D(t)} \prod _{i=1}^e u_i(t)}\text{, } \end{aligned}$$(14)

where \(D(t):=[u^0] u^e K(t,u)\) is either some power of t, or a difference of two powers of t (similarly to (11), but with more cases that will be specified in the proof in Sect. 5).

Remark 3.6

(Compatibility with the literature on classical lattice paths) Notice that for these four classes of lattice paths, if one forbids a pattern of length 1 or if one uses symbolic weights for each step, this recovers the formulas from Banderier and Flajolet [9].

The proof will involve the adjacency matrix of a finite automaton which encodes accumulating the prefixes of the forbidden pattern. This will be introduced in the next section.

4 Automaton, Adjacency Matrix A, and Kernel \(K=|I-tA|\)

4.1 The Automaton and Its Adjacency Matrix

In this section, we introduce an automaton which will allow us to tackle pattern avoidance. As explained in Sect. 2, any path is seen as a word (the steps are transformed into alphabet letters). Let \(p=[a_1, \ldots , a_\ell ]\) be the “forbidden” pattern. Sharing the spirit of the Knuth–Morris–Pratt algorithm, we build an automaton with \(\ell \) states, where each state corresponds to a proper prefix of p collected so far. We label these states \(X_1, \ldots , X_{\ell }\): the first state is labelled by the empty word (namely, \(X_1 = \epsilon \)) and the next states are labelled by proper prefixes of p (namely, \(X_{i} = [a_1, a_2, \ldots , a_{i-1}]\)). If the automaton reads a path w, then it ends in the state labelled by the longest prefix of p that coincides with a suffix of w. Note that the automaton is completely determined by P(u) and p.

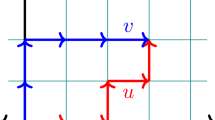

The automaton and the adjacency matrix A for \({\mathcal {S}}=\{-1, 1, 2\}\) and the pattern \(p=[1,2,1,2,-1]\). (The 0 entries of A are replaced by dots.) The powers of u in the superdiagonal (in red) correspond to the pattern. For each other diagonal (consisting of entries surrounded in colour), there is only one nonzero entry, according to a property illustrated in the bottom right part of the figure and explained hereafter (Color figure online)

Let us describe the transitions of this automaton more precisely. For \(i, j \in \{1, \ldots , \ell \}\), we have an arrow labelled \(\lambda \) from the state \(X_i\) to the state \(X_j\) if j is the maximum number such that \(X_j\) is a suffix of \(X_i \lambda \). Its adjacency matrix (also called transition or transfer matrix by some authors) will be denoted by A: it is an \(\ell \times \ell \) matrix, and its (i, j)-entry is the sum of all terms \(u^{\lambda }\) such that there is an arrow labelled \(\lambda \) from \(X_{i}\) to \(X_{j}\). See Fig. 1 for an example. The following general properties of A are an easy consequence of its combinatorial definition:

For all i, j such that \(j > i+1\), we have \(A_{i,j}=0\).

The superdiagonal entries are \(A_{i,i+1}=u^{a_i}\).

For each j such that \(2 \le j \le \ell \), every entry in the j-th column is either 0 or \(u^{a_{j-1}}\), depending on a procedure explained below.

The entries of the first column are such that every row of A sums to P(u), except for the last row, which sums to \(P(u)-u^{a_{\ell }}\) (because the transition with \(a_\ell \) is forbidden in this row, as it would create an occurrence of the pattern p).

The entries of A along the diagonals surrounded in colour can be determined by the following procedure, which is also illustrated in the bottom right of Fig. 1. For \(d=0, \ldots , \ell -2\):

- 1.

If \([a_1, a_2, \ldots , a_{\ell -d-1}]=[a_{d+2}, a_{d+3}, \ldots , a_{\ell }]\), then all the entries \(A_{i,j}\) with \(i-j=d\), \(j \ge 2\), are 0.

- 2.

Otherwise, if k is the smallest number such that \(a_{k} \ne a_{d+1+k}\), then \(A_{d+k+1, k+1} = u^{a_k}\), unless a smaller d yielded \(u^{a_k}\) in the same row, to the right of this position (if this happens, \(A_{d+k+1, k+1}=0\)).
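Instead of following the diagonal-by-diagonal procedure, one can also obtain A directly from the definition of the transitions (the arrow labelled \(\lambda \) from \(X_i\) goes to the longest proper prefix of p that is a suffix of \(X_i\lambda \)). The sketch below does this naively; it uses 0-based indices, so that index k stands for the state \(X_{k+1}\), and it stores each matrix entry as the list of exponents \(\lambda \) of its monomials \(u^\lambda \). The function name `adjacency` is illustrative.

```python
def adjacency(steps, p):
    """A[i][j] = list of steps lam with an arrow X_{i+1} --lam--> X_{j+1}.

    The entry A_{i+1,j+1} of the text is the sum of u**lam over that list.
    """
    l = len(p)
    A = [[[] for _ in range(l)] for _ in range(l)]
    for i in range(l):
        for lam in steps:
            word = p[:i] + [lam]
            if word == p:
                continue                  # this step would complete the pattern
            # longest proper prefix of p that is a suffix of word (k = 0: empty word)
            j = next(k for k in range(min(len(word), l - 1), -1, -1)
                     if word[len(word) - k:] == p[:k])
            A[i][j].append(lam)
    return A

# Step set {-1, 1, 2} and pattern [1, 2, 1, 2, -1], as in the figure of this section
for row in adjacency([-1, 1, 2], [1, 2, 1, 2, -1]):
    print(row)
```

Each printed row lists, column by column, the steps leading to the corresponding state; the superdiagonal carries the letters of the pattern, and the last row lacks the step \(a_\ell \).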

This more intimate knowledge of the structure of the adjacency matrix A will help us to compute some related determinants.

4.2 Algebraic Properties of the Kernel: Link with the Autocorrelation Polynomial

It is well known that the matrix \(1/(I-tA)= \mathrm {adj}(I-tA) / \det (I-tA)\) (where I is the identity matrix) plays an important role in the enumeration of walks. We will see that this adjoint and this determinant also play a fundamental role in the enumeration of meanders. In fact, the role of \(\det (I-tA)\) in our study is the analogue of the role played by \(1-tP(u)\) in the study of Banderier and Flajolet [9], but, as we shall see, the situation is more involved in our case.

Proposition 4.1

(Structure of the kernel) Let \({\mathcal {S}}\) be a set of steps, and let p be a pattern with steps from \({\mathcal {S}}\). Then the adjacency matrix A of the automaton satisfies

$$\begin{aligned} \det (I-tA)=K(t,u), \end{aligned}$$(15)

$$\begin{aligned} (1,0,\ldots ,0)\, \mathrm {adj}(I-tA)\, \vec {{\mathbf {1}}}=R(t,u), \end{aligned}$$(16)

where R(t, u) and K(t, u) are the autocorrelation polynomial and the kernel, as defined in (3) and (4). In particular, in the case without autocorrelation we have \(\det (I-tA) = 1-tP(u)+ t^{|p|}u^{{\text {alt}}(p)}\), and the sum of the entries in the first row of \(\mathrm {adj}(I-tA)\) is 1.

Proof

Equations (15) and (16) will be proven in the course of the proof of Theorem 3.1. \(\square \)
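The identity \(\det (I-tA)=K(t,u)\) stated in Proposition 4.1 can be tested at rational points. The sketch below is a numerical sanity check, not a proof: it builds \(I-tA\) from the transition rule of Sect. 4.1, evaluates it at exact rational values of t and u (Python's `fractions.Fraction`), and computes the determinant by Gaussian elimination. All function names are illustrative.

```python
from fractions import Fraction

def next_state(p, i, lam):
    """0-based index of the state reached from X_{i+1} by step lam (None if p is completed)."""
    word = p[:i] + [lam]
    if word == p:
        return None
    return next(k for k in range(min(len(word), len(p) - 1), -1, -1)
                if word[len(word) - k:] == p[:k])

def det_I_minus_tA(steps, p, t, u):
    """det(I - tA) at rational t, u, by exact Gaussian elimination."""
    l = len(p)
    M = [[Fraction(int(i == j)) for j in range(l)] for i in range(l)]
    for i in range(l):
        for lam in steps:
            j = next_state(p, i, lam)
            if j is not None:
                M[i][j] -= t * u ** lam
    det = Fraction(1)
    for c in range(l):
        piv = next((r for r in range(c, l) if M[r][c] != 0), None)
        if piv is None:
            return Fraction(0)
        if piv != c:
            M[c], M[piv] = M[piv], M[c]
            det = -det                    # a row swap changes the sign
        det *= M[c][c]
        for r in range(c + 1, l):
            factor = M[r][c] / M[c][c]
            for k in range(c, l):
                M[r][k] -= factor * M[c][k]
    return det

def kernel_value(steps, p, t, u):
    """K(t,u) = (1 - t P(u)) R(t,u) + t^l u^alt(p) at rational t, u."""
    n = len(p)
    R = sum(t ** (n - k) * u ** sum(p[k:]) for k in range(1, n + 1) if p[:k] == p[n - k:])
    P = sum(u ** s for s in steps)
    return (1 - t * P) * R + t ** n * u ** sum(p)

t, u = Fraction(1, 7), Fraction(3, 5)
print(det_I_minus_tA([-1, 1, 2], [1, 2, 1, 2, -1], t, u) == kernel_value([-1, 1, 2], [1, 2, 1, 2, -1], t, u))
```

Agreement at a few generic rational points is of course only evidence; the identity itself is established in the proof of Theorem 3.1.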

Another important quantity related to the adjacency matrix is what we call the autocorrelation vector, defined as \(\vec {{\mathbf {v}}}:=\mathrm {adj}(I-tA)\cdot \vec {{\mathbf {1}}}\), where \(\vec {{\mathbf {1}}}\) denotes the column vector \((1, 1, \ldots , 1)^{\top }\) of size \(\ell \times 1\). In Proposition 4.2 we give a simple combinatorial description of this vector \(\vec {{\mathbf {v}}}\); in particular, this description has the advantage of smaller computational cost than getting it via a case-by-case matrix inversion.

Proposition 4.2

(Structure of the autocorrelation vector) The autocorrelation vector \(\vec {{\mathbf {v}}}:=\mathrm {adj}(I-tA)\cdot \vec {{\mathbf {1}}}\) satisfies

$$\begin{aligned} \vec {{\mathbf {v}}}=R(t,u)\, \vec {{\mathbf {1}}}-\vec {{\mathbf {s}}}(t,u), \end{aligned}$$

where the j-th entry of \(\vec {{\mathbf {s}}}(t,u)\) is a polynomial, the generating function of a finite set \({\mathcal {S}}_j\) of walks (which we call the “subtracted set” of walks). The subtracted set \({\mathcal {S}}_j\) is the finite set of walks of length smaller than \(\ell \) having the factorization \(w.a_\ell .{{\bar{q}}}\), where

- 1.

w is any walk starting in state \(X_{j}\) and ending in state \(X_{\ell }\),

- 2.

\(a_\ell \) is the last step of the pattern p,

- 3.

\({{\bar{q}}}\) corresponds to a term of R(t, u) (that is, \({{\bar{q}}}\) is the complement of some presuffix q of p).

As this proposition is a little bit hard to digest, we give an example after the proof (Example 4.3), and the reader will see that the situation is in fact simpler than what she may have feared.

Proof

First note that \((I-tA)\vec {{\mathbf {v}}}=K(t,u)\vec {{\mathbf {1}}}\) and \(A\vec {{\mathbf {1}}}=P(u)\vec {{\mathbf {1}}}-u^{a_\ell }\vec {{\mathbf {e}}_\ell }\), where \(\vec {{\mathbf {e}}_\ell }=(0,\dots ,0,1)^\top \).

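Now define \(\vec {{\mathbf {s}}}\) by \(\vec {{\mathbf {s}}}:=R(t,u)\vec {{\mathbf {1}}}-\vec {{\mathbf {v}}}\). Then

$$\begin{aligned} (I-tA)\vec {{\mathbf {s}}}=R(t,u)(I-tA)\vec {{\mathbf {1}}}-K(t,u)\vec {{\mathbf {1}}} =tu^{a_\ell }R(t,u)\, \vec {{\mathbf {e}}_\ell }-t^\ell u^{{\text {alt}}(p)}\, \vec {{\mathbf {1}}}. \end{aligned}$$

This implies

$$\begin{aligned} \vec {{\mathbf {s}}}=(I-tA)^{-1}\Bigl (\vec {{\mathbf {e}}_\ell }\, tu^{a_\ell }R(t,u)-\vec {{\mathbf {1}}}\, t^\ell u^{{\text {alt}}(p)}\Bigr ). \end{aligned}$$(17)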

In this form, it is now easy to give a combinatorial interpretation to the column vector \(\vec {{\mathbf {s}}}\): its j-th component is the generating function of certain lattice paths for which the associated automaton is in state \(X_{j}\) before the walk does its first step.

The entries of \((I-tA)^{-1}\) are the generating functions of lattice paths not containing the pattern \(p=[a_1,a_2,\dots ,a_\ell ]\), specifically: the (i, j)-entry of this matrix is the generating function of such walks that start in state \(X_{i}\) and end in state \(X_{j}\). Therefore, the j-th component of \((I-tA)^{-1} \vec {{\mathbf {e}}_\ell }\, tu^{a_\ell } R(t,u)\) is the generating function of all lattice paths that are composed as follows: first, start with a p-avoiding walk w that begins in the state \(X_{j}\) and ends in state \(X_{\ell }\), followed by the single step \(a_\ell \), and then finally by some complement \({{\bar{q}}}\) of some presuffix q of the pattern p. Note that if the walk w is long enough and \(\bar{q}\) is not the empty sequence, then adding the step \(a_{\ell }\) to w makes it end with q. So, \(w.a_{\ell }.\bar{q}\) has an occurrence of p at the very end. Furthermore, having added the step \(a_{\ell }\) to w an occurrence of p is created unless w was shorter than \(\ell \).

The second term, \((I-tA)^{-1} \vec {{\mathbf {1}}}t^\ell u^{{\text {alt}}(p)}\), is the generating function of all walks avoiding p to which we add p at their end. We may have a single occurrence of p at the end of the walk or two overlapping patterns if the first part of the walk ended with some prefix of p, which needs only a presuffix to complete p; the completion is done by the final occurrence of p.

We observed that the two terms on the right-hand side of (17) correspond to sets of lattice paths. Clearly, any walk ending with p and having only one occurrence of p is in both sets. And so is any walk having two overlapping occurrences of p at its very end. However, the first set contains in addition paths being too short for having an occurrence of p: they are precisely the set of paths described in the assertion. Since this set is finite, its generating function is a polynomial. \(\square \)

Example 4.3

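Consider as an example the lattice path model with some step set \({\mathcal {S}} \supseteq \{1,2\}\) and the pattern \(p=[1,2,1,1,2,1]\). The autocorrelation polynomial is \(R(t,u)=1+t^3u^4+t^5u^7\), and the autocorrelation vector \(\vec {{\mathbf {v}}}=\mathrm {adj}(I-tA)\cdot \vec {{\mathbf {1}}}\) has the following structure:

$$\begin{aligned} \vec {{\mathbf {v}}}=R(t,u)\, \vec {{\mathbf {1}}}-\bigl (0, \, t^5u^7, \, t^4u^5, \, t^3u^4+t^5u^7, \, t^2u^3+t^5u^7, \, tu+t^4u^5\bigr )^\top . \end{aligned}$$(18)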

Now, let us interpret the polynomials subtracted from R(t, u) according to Proposition 4.2. To this end, it is convenient to introduce a variant of the automaton defined at the beginning of this section (see Fig. 1), in which we add a transition with the letter \(a_\ell \) from the last state to the state indexed by the longest presuffix which is shorter than the whole pattern (in our case, it is the state labelled by [1, 2, 1]). Marking this new transition allows us to count the occurrences of the pattern p, instead of just counting walks avoiding this pattern. (Section 7 will be dedicated to this topic.) This gives the automaton shown in Fig. 2.

Illustration to Example 4.3: the automaton marking occurrences of the pattern 121121 (Color figure online)

With this automaton, it is easy to exhaustively list the walks having the factorization \(w.a_\ell .{{\bar{q}}}\) mentioned in Proposition 4.2 (n.b.: the factor w does not contain the pattern p, i.e., it is a word generated by the automaton without the added red transition); this gives a combinatorial explanation of the components of the vector \(\vec {{\mathbf {s}}}(t,u)\).

For the first component of \(\vec {{\mathbf {s}}}\), the subtracted set of walks is in fact empty. Indeed, each path w from \(X_1\) to \(X_6\), followed by the step \(a_{\ell }\), has at least length 6. So, there are no walks that satisfy the condition of being shorter than \(\ell \). Therefore, we have \(\vec {{\mathbf {s}}}_1=0\) (which holds in full generality, implying \(\vec {{\mathbf {v}}}_1=R(t,u)\) for any pattern).

For the second component of \(\vec {{\mathbf {s}}}\), we start in state \(X_2\). The length of the prefactor w has to be smaller than \(\ell -1\), and the only way to reach \(X_6\) in strictly fewer than 5 steps is the path \(X_2 \rightarrow X_3 \rightarrow X_4 \rightarrow X_5 \rightarrow X_6\), which generates 2112, to which we append the last letter \(a_\ell =1\). Then the only possible \({{\bar{q}}}\) is the empty one (otherwise the path will be too long). Thus, the subtracted set of walks is \({\mathcal {S}}_2=\{2112.1.\varepsilon \}\), and its generating function is \(t^5u^7\).

For the third component of \(\vec {{\mathbf {s}}}\), the only sufficiently short walk from \(X_3\) to \(X_6\) is \(X_3 \rightarrow X_4 \rightarrow X_5 \rightarrow X_6\), and the only possible choice of \({{\bar{q}}}\) is \(\varepsilon \). Thus, the subtracted set of walks is \({\mathcal {S}}_3=\{112.1.\varepsilon \}\), and its generating function is \(t^4u^5\).

For the fourth component of \(\vec {{\mathbf {s}}}\), we have two ways to go from \(X_4\) to \(X_6\): \( X_4 \rightarrow X_5 \rightarrow X_6\) and \(X_4 \rightarrow X_3 \rightarrow X_4 \rightarrow X_5 \rightarrow X_6\). In both cases, the only possible choice of \({{\bar{q}}}\) is \(\varepsilon \). Thus, the subtracted set of walks is \({\mathcal {S}}_4=\{12.1.\varepsilon ,2112.1.\varepsilon \}\), and its generating function is \(t^3u^4+t^5u^7\).

For the fifth component of \(\vec {{\mathbf {s}}}\), starting in \(X_5\), only one short path to \(X_6\) is possible: \(X_5 \rightarrow X_6\), but in this case we have two choices for \({{\bar{q}}}\): \(\varepsilon \) and 121. This gives the subtracted set of walks \({\mathcal {S}}_5=\{2.1.\varepsilon , 2.1.121\}\), and its generating function is \(t^2u^3+t^5u^7\).

For the sixth component of \(\vec {{\mathbf {s}}}\), starting in \(X_6\), the subtracted set of walks is \({\mathcal {S}}_6=\{\varepsilon .1.\varepsilon ,\varepsilon .1.121\}\), and its generating function is \(tu+t^4u^5\).

In conclusion, we combinatorially got \(\vec {{\mathbf {s}}}= (0, \, t^5u^7, \, t^4u^5, \, t^3u^4+t^5u^7, \, t^2u^3+t^5u^7, \, tu+t^4u^5)^\top \), which agrees with Formula (18). \(\square \)
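The vector \(\vec {{\mathbf {s}}}\) found combinatorially in this example can be compared with a direct computation. The sketch below evaluates everything at exact rational values of t and u. It uses \(\mathrm {adj}(I-tA)=\det (I-tA)\,(I-tA)^{-1}\) together with \(\det (I-tA)=K(t,u)\) from Proposition 4.1, so that \(\vec {{\mathbf {v}}}=K\cdot x\) where x solves \((I-tA)x=\vec {{\mathbf {1}}}\). The function names are illustrative, and the check is numerical only.

```python
from fractions import Fraction

def next_state(p, i, lam):
    """0-based index of the state reached from X_{i+1} by step lam (None if p is completed)."""
    word = p[:i] + [lam]
    if word == p:
        return None
    return next(k for k in range(min(len(word), len(p) - 1), -1, -1)
                if word[len(word) - k:] == p[:k])

def solve(M, b):
    """Solve M x = b exactly by Gauss-Jordan elimination (M assumed invertible)."""
    n = len(M)
    aug = [row[:] + [b[i]] for i, row in enumerate(M)]
    for c in range(n):
        piv = next(r for r in range(c, n) if aug[r][c] != 0)
        aug[c], aug[piv] = aug[piv], aug[c]
        aug[c] = [x / aug[c][c] for x in aug[c]]
        for r in range(n):
            if r != c and aug[r][c] != 0:
                aug[r] = [x - aug[r][c] * y for x, y in zip(aug[r], aug[c])]
    return [row[-1] for row in aug]

def subtracted_vector(steps, p, t, u):
    """s = R*1 - adj(I - tA)*1, evaluated at rational numbers t and u."""
    l = len(p)
    M = [[Fraction(int(i == j)) for j in range(l)] for i in range(l)]
    for i in range(l):
        for lam in steps:
            j = next_state(p, i, lam)
            if j is not None:
                M[i][j] -= t * u ** lam
    R = sum(t ** (l - k) * u ** sum(p[k:]) for k in range(1, l + 1) if p[:k] == p[l - k:])
    K = (1 - t * sum(u ** s for s in steps)) * R + t ** l * u ** sum(p)
    x = solve(M, [Fraction(1)] * l)       # x = (I - tA)^{-1} 1
    return [R - K * xi for xi in x]       # v = K x, since det(I - tA) = K

t, u = Fraction(1, 7), Fraction(3, 5)
expected = [0, t**5 * u**7, t**4 * u**5, t**3 * u**4 + t**5 * u**7,
            t**2 * u**3 + t**5 * u**7, t * u + t**4 * u**5]
print(subtracted_vector([1, 2], [1, 2, 1, 1, 2, 1], t, u) == expected)
```

Running the same check with a larger step set such as \(\{-1,1,2\}\) gives the same vector, in line with the fact that the subtracted sets only involve short walks inside the automaton.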

This autocorrelation vector \(\vec {{\mathbf {v}}}\) plays a role in many problems of lattice path pattern enumeration: it allows rewriting the initial functional equation associated with the problem as a condensed system, with fewer unknowns. We shall see in the proof of Theorem 3.2 (for the generating function of meanders) that \(\vec {{\mathbf {v}}}\) will act as an eigenvector for a system playing a key role in our enumeration.

Three possibilities for the Newton polygon of the kernel K(t, u). This classification depends on the final altitude h of the pattern p, and is exhaustive if \(R(t,u)=1\). Each point (i, j) corresponds to a monomial \(t^i u^j\) of the numerator of K. The slopes of the convex hull segments on the left give the Puiseux behaviour at 0 of the small and large roots \(u_i\) and \(v_j\) (Color figure online)

4.3 Analytic Properties of the Kernel: Newton Polygons and Geometry of Branches

Let us end this section with the proof of an important property of the kernel, the number of “small” and “large” roots u(t) of \(K(t, u)=0\):

Proposition 4.4

(Small and large roots of the kernel K) All roots u(t) of K(t, u) are either small (meaning \(\lim _{t \rightarrow 0} u(t)=0\)) or large (meaning \(\lim _{t \rightarrow 0} |u(t)|= \infty \)). Let \(d_K\) denote the degree of K(t, u) in u, i.e., \(d_K=\max \{j\,\mid \, [u^j]K(t,u)\ne 0\}\), and let \(l_K\) denote the lowest power of u in the monomials of K, i.e., \(l_K=\min \{j\,\mid \, [u^j]K(t,u)\ne 0\}\). Then, K has e small roots and f large roots, where \(e=\max (0, -l_K)\) and \(f=\max (0,d_K)\).

Remark 4.5

The nested \(\max /\min \) is needed for the cases where either \(l_K>0\) or \(d_K<0\). An example for such a model is \({\mathcal {S}}=\{-1,3\}\) and \(p=[-1,-1]\), where we have \(K=1-u^3t-u^2t^2\).

Proof

Recall that the kernel K is defined as \(K(t,u)=(1-tP(u))R(t,u)+t^\ell u^{{\text {alt}}(p)}.\) Now, consider \(u^e K(t,u)\), which is a polynomial, and draw for each of its monomials \(t^{r_1} u^{r_2}\) a point \((r_1,r_2)\) in the plane. This gives a set of points \({\mathcal {P}}\). The Newton polygon of K is the boundary of the convex hull of \({\mathcal {P}}\); of particular interest to us are the segments of this polygon that are visible when we look from the left: their slope (it is always a rational number) will give the exponent of the Puiseux expansions of each root of K. Figure 3 shows schematically all possible shapes for the case \(R(t,u)=1\) (if \(R(t,u)\ne 1\), the possible shapes are more diverse). Each segment (in green) which lies below the point (0, e) (they all have a negative slope) gives the Puiseux expansion of a set of small roots. Each segment (in red) which lies above the point (0, e) (they all have a positive slope) gives the Puiseux expansion of a set of large roots. We now focus on the small roots and prove the proposition for them. (The proof for large roots is similar and can be obtained from this one by the replacement \(u \mapsto \frac{1}{u}\), which corresponds to the vertical reflection of the Newton polygon.)

If the Newton polygon has no segment of negative slope (the ones drawn in green in Fig. 3), then K is a polynomial in u (and in t as well) having the constant term 1. Hence, \(K(t,u)=1+Q(t,u)\) where Q(t, u) is a polynomial in t and u with \(\lim _{(t,u)\rightarrow (0,0)}Q(t,u)=0\). This implies that small functions u(t) can never compensate the constant term near \(t=0\), and so there are zero small roots and \(d_K\) large roots, in accordance with \(e=0\) and \(f=d_K\).

If the Newton polygon has at least one such segment of negative slope, then consider one of them, denote it by \(\Sigma \), and let \(-\beta /\alpha \) be its slope. The two endpoints of \(\Sigma \) correspond to monomials of \(u^eK(t,u)\) (some other points of \(\Sigma \) also possibly do). Any two such monomials differ by some power of \(t^\alpha u^{-\beta }\). If \(u\sim C\cdot t^{\alpha /\beta }\) as \(t\rightarrow 0\), then \(t^\alpha u^{-\beta }\sim C^{-\beta }\), which is some nonzero constant. Thus, all monomials that correspond to a point of the segment \(\Sigma \) are of the same order of magnitude as Puiseux series in t. What about the other monomials? By construction of the Newton polygon, their corresponding points must lie above the line containing the segment \(\Sigma \). Hence, such a point can be represented in the form \(t^{r_1+j} u^{r_2+k}\) where \((r_1,r_2)\in \Sigma \) and \(k>-j \beta /\alpha \). We now use the notation \(f(t) \approx g(t)\) for \(\lim _{t\rightarrow 0^+} f(t)/g(t)= \text { constant}\). If \(u \approx t^{\alpha /\beta }\), then one has

\[ t^{r_1+j}\, u^{r_2+k} \approx t^{r_1+j}\, t^{(r_2+k)\alpha /\beta } = t^{r_1+r_2\alpha /\beta }\cdot t^{\,j+k\alpha /\beta }. \]

Since \(k>-j \beta /\alpha \), we have \(j + k\alpha /\beta > 0\) and thus the monomial \(t^{r_1+j} u^{r_2+k}\) has a smaller order of magnitude than the monomials corresponding to the points of \({\mathcal {P}}\) on the segment \(\Sigma \), like \(t^{r_1} u^{r_2}\). The arguments above show that if \(u\approx t^{\alpha /\beta }\), then

Now, let \((\Sigma _1, \Sigma _2)\) denote the upper left endpoint of \(\Sigma \) and \((\Sigma _3, \Sigma _4)\) be its lower right endpoint. Clearly, the highest power of u in the right-hand side of (19) is \(u^{\Sigma _2}\) and the lowest one is \(u^{\Sigma _4}\). Thus the right-hand side of (19) is a polynomial in u that can be split into \(u^{\Sigma _4}\) and a polynomial of degree \(\Sigma _2-\Sigma _4\) which has a nonzero constant term. Hence the number of non-trivial solutions equals \(\Sigma _2-\Sigma _4\). All those solutions are small roots of the kernel. Moreover, note that \(\Sigma _2-\Sigma _4\) is the height of the segment \(\Sigma \).

Among all monomials of the form \(t^j u^{-e}\) in K(t, u), let \(t^\lambda u^{-e}\) be the one where \(\lambda \) is minimal. If we draw the Newton polygon of the polynomial \(u^e K(t,u)\), then it contains the points (0, e) and \((\lambda ,0)\). Assume that the polygonal line we obtain when traversing the boundary of the Newton polygon from (0, e) to \((\lambda ,0)\) consists of line segments \(\Sigma _1,\dots , \Sigma _r\) of respective slopes \(-\beta _1/\alpha _1\), ..., \(-\beta _r/\alpha _r\). Then, the line segment \(\Sigma _j\) corresponds to a set of small roots of K(t, u) each satisfying \(u(t)\approx t^{\alpha _j/\beta _j}\) as \(t\rightarrow 0\). The number of such roots is equal to the height of \(\Sigma _j\), which is the difference of the y-coordinates of the two endpoints of \(\Sigma _j\). In particular, this implies that the number of small roots of K(t, u) is equal to e.

Using the same reasoning for the line segments (drawn in red in Fig. 3) above (0, e), we obtain that the number of large roots of the kernel is indeed f. \(\square \)
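
The counting argument of this proof can be illustrated numerically. The sketch below computes, for a polynomial \(u^eK(t,u)\) given by its monomials, the Puiseux exponents of the roots together with their multiplicities. It uses the classical (transposed) convention: for each power of u one keeps the smallest accompanying power of t, and takes the lower convex hull of the points (u-exponent, t-exponent); a hull segment of slope \(-s\) and horizontal length h then stands for h roots with \(u(t)\approx t^{s}\). The function names are hypothetical, and the example model \({\mathcal {S}}=\{-2,1\}\), \(p=[1,-2]\) (for which \(R=1\) and \(K=1-tu^{-2}-tu+t^2u^{-1}\)) is chosen here only for illustration.

```python
from fractions import Fraction


def cross(o, a, b):
    """Cross product of the vectors o->a and o->b (positive for a left turn)."""
    return (a[0] - o[0]) * (b[1] - o[1]) - (a[1] - o[1]) * (b[0] - o[0])


def newton_segments(poly):
    """Puiseux exponents of the roots u(t) of a polynomial in u.

    poly maps (t_exp, u_exp) to a nonzero coefficient.
    Returns a list of pairs (s, h): h roots behave like t^s as t -> 0.
    """
    lowest_t = {}
    for (i, j), c in poly.items():
        if c != 0:
            lowest_t[j] = min(i, lowest_t.get(j, i))
    points = sorted(lowest_t.items())       # (u_exp, minimal t_exp)
    hull = []
    for pt in points:                       # lower convex hull, left to right
        while len(hull) >= 2 and cross(hull[-2], hull[-1], pt) <= 0:
            hull.pop()
        hull.append(pt)
    segments = []
    for (j0, v0), (j1, v1) in zip(hull, hull[1:]):
        segments.append((Fraction(v0 - v1, j1 - j0), j1 - j0))
    return segments


# u^2 K(t,u) = u^2 - t - t u^3 + t^2 u for S = {-2, 1}, p = [1, -2]
poly = {(0, 2): 1, (1, 0): -1, (1, 3): -1, (2, 1): 1}
segments = newton_segments(poly)
small = sum(h for s, h in segments if s > 0)
large = sum(h for s, h in segments if s < 0)
print(segments, small, large)
```

In this example the two small roots share the exponent 1/2 and the single large root behaves like \(t^{-1}\), in agreement with \(e=2\) and \(f=1\).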

Table 2 gives several examples of plots of these small and large roots. Then, equipped with all these notions, we can give the proofs of our main theorems.

5 Proofs of the Generating Functions for Walks, Bridges, Meanders, and Excursions

In addition to our usual notations for generating functions of different classes of paths, we denote by \(W_{\alpha }=W_{\alpha }(t,u)\) (where \(1 \le \alpha \le \ell \)) the bivariate generating function of those walks avoiding the pattern p that terminate in state \(\alpha \); similarly \(M_{\alpha }=M_{\alpha }(t,u)\) for meanders avoiding the pattern p that terminate in state \(\alpha \).

Proof of Theorem 3.1

(Generating function of walks) and proof of Proposition 4.1. On the one hand, we have the following vectorial functional equation:

Therefore, the generating function W(t, u), which is the sum of the generating functions \(W_\alpha (t,u)\) over all states, is equal to

On the other hand, the generating function for W(t, u) can be obtained using the following combinatorial argument which en passant also justifies the introduction of the autocorrelation polynomial, as done in the seminal work of Guibas and Odlyzko [45]. We first introduce \(W^{\{p\}}(t,u)\), the generating function of the walks over \({\mathcal {S}}\) that end with p and contain no other occurrence of p. Then we have \(W+W^{\{p\}} = 1+tPW\) (if we add a letter from \({\mathcal {S}}\) to a p-avoiding walk, then we either obtain another p-avoiding walk, or a walk with a single occurrence of p at the end), and \(W t^\ell u^{{\text {alt}}(p)} = W^{\{p\}} R\) (a walk obtained from a p-avoiding walk by appending p at the end, can also be obtained from a walk ending with a single occurrence of p at the end by appending the complement of a presuffix of p). Solving this system, we obtain \(W(t,u)= R(t,u)/K(t,u)\).

Thus, we got two representations for W(t, u):

In order to see that this is the same representation (that is, the numerators and the denominators are equal in both fractions), we notice that \(\det (I-tA)\) is a polynomial in t of degree \(\ell \) and constant term 1. This is also the case for \((1-tP(u))R(t,u)+t^{\ell }u^{{\text {alt}}(p)}\), so this allows us to say that the two numerators in Formula (20) are actually equal. This gives the proof of Theorem 3.1 for walks, and also proves Proposition 4.1 on the structure of the kernel. \(\square \)
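
The identity \(W(t,u)=R(t,u)/K(t,u)\) is easy to test by exhaustive enumeration. The sketch below expands \(R/K\) as a power series in t (using \(K\cdot W=R\) and the fact that the coefficient of \(t^0\) in K is 1) and compares each coefficient, a Laurent polynomial in u, with a brute-force count of p-avoiding walks by final altitude. As before, R is assumed to be built from the complements of the presuffixes of p, and all function names are illustrative.

```python
from collections import defaultdict
from itertools import product


def kernel_and_autocorrelation(steps, p):
    """Return (K, R) as dictionaries {(t_exp, u_exp): coeff}."""
    ell = len(p)
    R = defaultdict(int)
    for k in range(1, ell + 1):
        if p[:k] == p[ell - k:]:            # presuffix of length k
            R[(ell - k, sum(p[k:]))] += 1   # monomial of its complement
    K = defaultdict(int)
    for (i, j), c in R.items():             # (1 - t P(u)) * R(t,u)
        K[(i, j)] += c
        for s in steps:
            K[(i + 1, j + s)] -= c
    K[(ell, sum(p))] += 1                   # + t^ell u^alt(p)
    return dict(K), dict(R)


def series_R_over_K(K, R, n_max):
    """Coefficients W_0, ..., W_n_max of R/K in t, each as {u_exp: coeff}."""
    W = []
    for n in range(n_max + 1):
        Wn = defaultdict(int)
        for (i, j), c in R.items():
            if i == n:
                Wn[j] += c
        for (k, j), c in K.items():         # subtract K_k * W_{n-k}, k >= 1
            if 1 <= k <= n:
                for j2, w in W[n - k].items():
                    Wn[j + j2] -= c * w
        W.append({j: c for j, c in Wn.items() if c != 0})
    return W


def brute_force(steps, p, n):
    """Number of p-avoiding walks of length n, indexed by final altitude."""
    counts = defaultdict(int)
    ell = len(p)
    for w in product(steps, repeat=n):
        if all(list(w[i:i + ell]) != p for i in range(n - ell + 1)):
            counts[sum(w)] += 1
    return dict(counts)


steps, p = [-1, 1], [1, -1, 1]
K, R = kernel_and_autocorrelation(steps, p)
W = series_R_over_K(K, R, 6)
print(all(W[n] == brute_force(steps, p, n) for n in range(7)))
```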

We now turn to the consequences of this formula for W(t, u) when one considers bridges.

Proof of Theorem 3.1

(Generating function of bridges) In order to find the univariate generating function B(t) for bridges, we need to extract the coefficient \([u^0]\) of W(t, u). To this end, we assume that t is a sufficiently small fixed number, extract the coefficient of a (univariate) function by means of Cauchy’s integral formula, and apply the residue theorem (recall that \(u_1, \ldots , u_e\) are the small roots of K(t, u)):

By the formula for residues of rational functions, we have

The denominator of this expression is

Next, we differentiate \(K(t, u_i)=0\) with respect to t and obtain an expression for \(P'(u_i(t))\). When we substitute it into (21), we obtain (7). \(\square \)

We now consider the nonnegativity constraint for the paths.

Proof

(Generating function of meanders) We have the following vectorial functional equation:

or, equivalently,

where \(\{u^{<0}\}\) denotes all the terms in which the power of u is negative.

The right-hand side of (22) is a vector whose components are power series in t and Laurent polynomials in u (of lowest degree \(\ge -c\)). For \(\alpha =1, \ldots , \ell \), denote the \(\alpha \)-th component of this vector by \(F_\alpha =F_\alpha (t,u)\) (the letter F can be seen as a mnemonic for “forbidden”, as these components correspond to the forbidden transitions towards a negative value as exponent of u). In summary, one has

We multiply this from the right by \(\displaystyle (I-tA)^{-1}\vec {{\mathbf {1}}}= \frac{(\mathrm {adj}(I-tA))\vec {{\mathbf {1}}}}{\det (I-tA)}\). At this point, we denote \(\vec {{\mathbf {v}}}= \vec {{\mathbf {v}}}(t,u):= (\mathrm {adj}(I-tA))\vec {{\mathbf {1}}}\), where \(\vec {{\mathbf {1}}}\) is the column vector \((1 \ 1 \ \cdots \ 1)^{\top }\). This vector \(\vec {{\mathbf {v}}}\) is the autocorrelation vector we encountered in Proposition 4.2. As a direct consequence of its definition, one has

The following step is the essential part of the vectorial kernel method. Let \(u_i=u_i(t)\) be any small root of \(K(t,u)=\det (I-tA)\). We plug in \(u=u_i(t)\) into (23). The matrix \((I-tA)|_{u=u_i}\) is then singular. At this point we observe that \(\vec {{\mathbf {v}}}|_{u=u_i}\) is an eigenvector of \((I-tA)|_{u=u_i}\) belonging to the eigenvalue \(\lambda =0\). Indeed, \(\vec {{\mathbf {v}}}|_{u=u_i} = (\mathrm {adj}((I-tA)|_{u=u_i}))\vec {{\mathbf {1}}}\) is equivalent to \((I-tA)|_{u=u_i} \vec {{\mathbf {v}}}|_{u=u_i} = \det ((I-tA)|_{u=u_i})\vec {{\mathbf {1}}}\), which implies \((I-tA)|_{u=u_i} \vec {{\mathbf {v}}}|_{u=u_i} = 0\). Moreover, due to the structure of A, we have \(\mathrm {rank}((I-tA)|_{u=u_i} )=\ell -1\), therefore, the dimension of the characteristic space of \(\lambda =0\) is 1, and \(\vec {{\mathbf {v}}}|_{u=u_i}\) is the unique (up to scaling) eigenvector of \((I-tA)|_{u=u_i}\) that belongs to \(\lambda =0\).

Thus, if we multiply (23) by \(\vec {{\mathbf {v}}}|_{u=u_i}\), the left-hand side vanishes. In other words, the equation \((F_1(t,u), \ \ldots , \ F_{\ell }(t,u)) \, \vec {{\mathbf {v}}}(t, u)=0\) is satisfied by every small root \(u_i(t)\) of K(t, u).
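
The linear algebra behind this step is the identity \(M\,\mathrm {adj}(M)=\det (M)\,I\): when M is singular, every column of \(\mathrm {adj}(M)\), and hence \(\mathrm {adj}(M)\vec {{\mathbf {1}}}\), lies in the kernel of M. The following sketch checks this on a small integer matrix. The matrix is a hypothetical stand-in of rank 2 with kernel spanned by \((1,2,3)^{\top }\); it is not the matrix \(I-tA\) of any particular automaton, and the helper names are illustrative.

```python
def minor(M, r, c):
    """Matrix M with row r and column c removed."""
    return [row[:c] + row[c + 1:] for i, row in enumerate(M) if i != r]


def det(M):
    """Determinant by Laplace expansion along the first row."""
    if len(M) == 1:
        return M[0][0]
    return sum((-1) ** c * M[0][c] * det(minor(M, 0, c)) for c in range(len(M)))


def adjugate(M):
    """Transpose of the cofactor matrix (for matrices of size at least 2)."""
    n = len(M)
    return [[(-1) ** (i + j) * det(minor(M, j, i)) for j in range(n)]
            for i in range(n)]


def mat_vec(M, x):
    """Matrix-vector product."""
    return [sum(a * b for a, b in zip(row, x)) for row in M]


# Hypothetical singular matrix: every row is orthogonal to (1, 2, 3).
M = [[2, -1, 0],
     [3, 0, -1],
     [0, 3, -2]]
v = mat_vec(adjugate(M), [1, 1, 1])   # plays the role of adj(I - tA) * 1
print(det(M), v, mat_vec(M, v))
```

Here v is a nonzero multiple of \((1,2,3)^{\top }\), the unique kernel direction, which mirrors the rank \(\ell -1\) argument above.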

Let

Note that \(\Phi \) is a Laurent polynomial, as the \(F_i\)’s and \(\vec {{\mathbf {v}}}\) are by construction Laurent polynomials in u. What is more, since \(\Phi (t,u) = u^eM(t,u)K(t,u)\) by (24) and since M(t, u) is a power series in u, \(\Phi (t,u)\) has no negative powers of u and is thus a polynomial. Now, we know that every small root \(u_i(t)\) of K(t, u) is a root of a polynomial equation

It follows that

for some G(t, u) which is a formal power series in t and a polynomial in u. We substitute this into (24), and obtain the claimed formula

\(\square \)

If the degree of \(\Phi (t,u)\) is precisely e, the formula simplifies as G is then just the leading term (in u) of \(\Phi (t,u)\). As we shall show now, this happens if p is a quasimeander (as introduced in Definition 3.3).

Proof of Theorem 3.4

(Generating function of meanders, when p is a quasimeander) First, we notice that all the powers of u in R(t, u) are non-negative, and \({\text {alt}}(p) \ge -c\). Moreover, if \({\text {alt}}(p)=-c\), the cancellation of terms with \(u^{-c}\) in K(t, u) is not possible (see Footnote 7). Therefore, the lowest power of u in K(t, u) is \(-c\), and thus we have \(e=c\) by Proposition 4.4.

Let us return to (22):

We claim that all the components of the right-hand side, except for the first component, are 0. Indeed, if the path arrives at state \(X_i\) with \(i>1\), this means that it accumulated a non-empty prefix of p. And since p is a quasimeander, w will always remain (weakly) above the x-axis while it accumulates its non-empty prefix.

Therefore, we have \(\Phi = u^c\,(F_1 \ 0 \ \cdots \ 0) \, \vec {{\mathbf {v}}}\), and thus by (15), \(\Phi = u^c F_1 R\). Since the constant term of \(F_1\) is 1, \(u^cF_1\) is a monic polynomial in u. Therefore, we have

This yields

as claimed. \(\square \)

Let us now simplify this formula for excursions, i.e., when \(u=0\).

Proof of Theorem 3.4

(Generating function of excursions, when p is a quasimeander) The generating function of excursions is given by \(E(t) = M(t,0)\). If \({\text {alt}}(p) >-c\), we have, as u tends to 0, \(K(t,u)\sim -tu^{-c}R(t,0)\) from (16). If \({\text {alt}}(p) = -c\), then we have \(R(t,u) = 1\) and \(K(t,u)\sim -tu^{-c} + t^\ell u^{-c}\). In both cases, (11) follows. \(\square \)

We now handle the next interesting class of patterns leading to generating functions with a nice closed form: the case of reversed meanders. Recall that a reversed meander is a lattice path whose terminal point has a (strictly) smaller y-coordinate than all other points. Moreover, we define a positive meander to be a meander that never returns to the x-axis.

Proof of Theorem 3.5

(Generating function of meanders, when p is a reversed meander) If p is a reversed meander, then all the terms of R(t, u), except for the monomial 1, contain negative powers of u, and we also have \({\text {alt}}(p)<0\). Therefore, the highest power of u in K is d, and (by Proposition 4.4) the number of large roots of \(K(t, u)=0\) is d: we denote them as above by \(v_1, \ldots , v_d\). The number of small roots can be in general higher than c: as usual, we denote it by e, and the roots themselves by \(u_1, \ldots , u_e\).

We consider the following generalization of the Wiener–Hopf factorization for lattice paths. If we split the walk w at its first and at its last left-to-right minimum, we obtain a decomposition \(w=m^-.e.m^+\), where \(m^-\) is a reversed meander, e is a translate of an excursion, and \(m^+\) is a translate of a positive meander. One also has the decomposition \(w=m^-.m\), where \(m=e.m^+\) is a meander. Notice that these decompositions are unique (Fig. 4).

The Wiener–Hopf factorization of a walk: \(W=M^- E M^+\), a product of a reversed-meander, an excursion, and a positive meander. See e.g. [44] for the importance of this factorization for lattice path enumeration. This has further consequences on pattern avoidance when one reverses the time.
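
The decomposition \(w=m^-.e.m^+\) is straightforward to compute. The sketch below reads the two split points as the first and the last time the walk visits its minimal altitude, which yields exactly the three factor types described above; the function name and the sample walk are illustrative.

```python
def wiener_hopf_split(w):
    """Split a walk (list of steps) into (m_minus, e, m_plus).

    m_minus ends at the first visit to the minimal altitude (reversed meander),
    e runs between the first and the last visit (translate of an excursion),
    m_plus never returns to the minimal altitude (translate of a positive
    meander).
    """
    altitudes = [0]
    for s in w:
        altitudes.append(altitudes[-1] + s)
    lowest = min(altitudes)
    first = altitudes.index(lowest)
    last = len(altitudes) - 1 - altitudes[::-1].index(lowest)
    return w[:first], w[first:last], w[last:]


walk = [1, -2, -1, 1, -1, 2, 1]      # altitudes 0, 1, -1, -2, -1, -2, 0, 1
m_minus, e, m_plus = wiener_hopf_split(walk)
print(m_minus, e, m_plus)
```

Concatenating the three factors gives back the walk, and the split points are determined by the walk, which is the uniqueness used in the text.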

Moreover, since p is a reversed meander, its occurrence cannot overlap the junction of two factors. That is, m is p-avoiding if and only if both of its factors are p-avoiding, and w is p-avoiding if and only if its three factors are p-avoiding. Therefore, we have

and

where W(t, u), \(M^-(t,u)\), E(t), \(M^+(t,u)\) are the generating functions of p-avoiding walks, reversed meanders, excursions, positive meanders (respectively). This in particular implies

By Theorem 3.1, we have \(W(t,u) = R(t,u)/K(t,u)\). In order to find \(M^-(t,u)\), we use a time reversal argument. Namely, we notice that a path is a reversed meander if and only if its horizontal reflection (upon translating the initial point to the origin) is a positive meander. The precise statement is as follows. Let \(- {\mathcal {S}} =\{-s:s \in {\mathcal {S}} \}\); and for the pattern \(p=[a_1, a_2, \ldots , a_\ell ]\), let \(\overleftarrow{p}=[-a_\ell , \ldots , -a_2, -a_1]\). Then there is a straightforward bijection between p-avoiding reversed meanders with steps from \({\mathcal {S}}\) and \(\overleftarrow{p}\)-avoiding positive meanders with steps from \(-{\mathcal {S}}\) which preserves the length and reflects the altitude. Therefore, we have

where the arrow means that it is the generating function for \(\overleftarrow{p}\)-avoiding paths (positive meanders in this equation) with the step set \(-{\mathcal {S}}\) (rather than p-avoiding with the step set \({\mathcal {S}}\)).
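
The time reversal bijection can be verified by exhaustive enumeration for small lengths. The sketch below counts p-avoiding reversed meanders with steps from \({\mathcal {S}}\) and \(\overleftarrow{p}\)-avoiding positive meanders with steps from \(-{\mathcal {S}}\), and checks that the two counts agree. The model \({\mathcal {S}}=\{-2,1\}\), \(p=[1,-2]\) and all function names are chosen here for illustration only.

```python
from itertools import product


def altitudes(w):
    """Successive altitudes of the walk w, starting from 0."""
    alts = [0]
    for s in w:
        alts.append(alts[-1] + s)
    return alts


def avoids(w, p):
    """True if the pattern p never occurs as a factor of w."""
    ell = len(p)
    return all(tuple(w[i:i + ell]) != tuple(p) for i in range(len(w) - ell + 1))


def is_reversed_meander(w):
    """Terminal point strictly below all other points."""
    a = altitudes(w)
    return all(a[-1] < x for x in a[:-1])


def is_positive_meander(w):
    """Meander that never returns to the x-axis."""
    return all(x > 0 for x in altitudes(w)[1:])


def reverse_pattern(p):
    """The pattern [-a_ell, ..., -a_2, -a_1]."""
    return [-a for a in reversed(p)]


def count(steps, p, n, predicate):
    """Number of p-avoiding walks of length n satisfying the predicate."""
    return sum(1 for w in product(steps, repeat=n)
               if predicate(w) and avoids(w, p))


S, p = [-2, 1], [1, -2]
minus_S = [-s for s in S]
left = [count(S, p, n, is_reversed_meander) for n in range(9)]
right = [count(minus_S, reverse_pattern(p), n, is_positive_meander)
         for n in range(9)]
print(left == right)
```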

Refer to the \(m=e.m^+\) decomposition above. As we noticed, if the pattern p is a reversed meander, then m is p-avoiding if and only if both e and \(m^+\) are p-avoiding. The same is true if p is a positive meander. Therefore, similarly to Formula (30), \(M(t,u)=E(t)M^+(t,u)\), we also have \(\overleftarrow{M}(t,u)= \overleftarrow{E}(t) \overleftarrow{M}^+(t,u)\). Combined with (32), this implies

Since \(\overleftarrow{p}\) is a meander, (9) holds for \(\overleftarrow{M}(t,u)\) and we have

These identities are justified as follows. The equalities \(\overleftarrow{R}(t,1/u)=R(t,u)\) and \(\overleftarrow{K}(t,1/u)=K(t,u)\) can be easily derived directly, but also notice that we have \(W(t,u)=R(t,u)/K(t,u)\) and \(\overleftarrow{W}(t,1/u)=\overleftarrow{R}(t,1/u)/\overleftarrow{K}(t,1/u)\), and \(W(t,u)=\overleftarrow{W}(t,1/u)\) from the bijective horizontal reflection. Finally, \(\overleftarrow{K}(t,u)\) has d many small roots and e many large roots: if \(u_i(t)\) is a small root of K(t, u), then \(1/u_i(t)\) is a large root of \(\overleftarrow{K}(t,u)\); and if \(v_j(t)\) is a large root of K(t, u), then \(1/v_j(t)\) is a small root of \(\overleftarrow{K}(t,u)\).

Similarly, Eq. (11) holds for \(\overleftarrow{E}(t)\), and we have

Notice that the leading term of the polynomial \(u^eK(t,u)\) is \(-tu^{d+e}\) and, therefore, one has

We now substitute (34) and (35) into (33) and use (36) to obtain

Finally, we substitute this into (31) and obtain

\(\square \)

Remark 5.1

It is interesting to notice that though M(t, u), for p being a quasimeander [as given in (29)], is similar to M(t, u), for p being a reversed meander [as given in (39)], the latter does not contain the factor R(t, u) even if p has a non-trivial autocorrelation.

Remark 5.2

It is also worth mentioning that if only the terminal point of the pattern p has negative y-coordinate, then p is both a quasimeander and a reversed meander, and \(R=1\). Therefore, we have \({M(t,u)= \frac{1}{u^e \, K(t,u)} \, \prod _{i=1}^e (u-u_i(t))}\) by both Theorems 3.4 and 3.5.

Proof of Theorem 3.5

(Generating function of excursions when p is a reversed meander) Excursions are given by M(t, 0), so we need to compute \(D(t):=[u^0] u^e K(t,u)\). To this aim, first note that as p is a reversed meander (see Definition 3.3), one has the following facts.

In all the terms of R(t, u), the powers of u are non-positive.

Moreover, if \(t^{m_1}/u^{\gamma _1}\) and \(t^{m_2}/u^{\gamma _2}\) are two distinct terms in R(t, u) such that \(0 \le m_1 < m_2\), then we have \(0 \le \gamma _1 < \gamma _2\).

Therefore, we can order the terms of R(t, u) according to the powers of t, and write \(u^e K(t, u)\) as follows:

where \(\frac{t^{m}}{u^{\gamma }}\) (the last term in R(t, u)) corresponds to the longest complement of a presuffix. Now, we have the following cases:

Case 1: \(c+\gamma > -{\text {alt}}(p)\). Then \(e=c+\gamma \) and we have \(D(t) = -t^{m+1}\).

Case 2: \(c+\gamma < -{\text {alt}}(p)\). Then \(e=-{\text {alt}}(p)\) and we have \(D(t)= t^{\ell }\).

Case 3: \(c+\gamma = -{\text {alt}}(p)\) and \(\ell \ne m+1\). Then \(e=c+\gamma = -{\text {alt}}(p)\) and \(D(t) = t^{\ell }-t^{m+1}\).

Case 4: \(c+\gamma = -{\text {alt}}(p)\) and \(\ell = m+1\). If \(\ell \ge 2\), then \(m\ge 1\), and therefore \(R(t,u) \ne 1\). Then \(e=c+\gamma '\) and \(D(t) = -t^{m'+1}\). As usual, we ignore the degenerate case \(\ell =1\).

In summary, we get the claim we wanted to prove, namely

where D(t) is either some power of t, or a difference of two powers of t. \(\square \)

Now that we have proven these closed forms for the generating functions, we can turn to the asymptotics of their coefficients.

6 Asymptotics of Lattice Paths Avoiding a Given Pattern

The aim of this section is to characterize the asymptotics of the number of paths (walks, bridges, meanders, excursions) with steps from \({\mathcal {S}}\) avoiding a given pattern p.

In order to avoid pathological cases, we now focus on “generic” walks.

Definition 6.1

(Generic walks) We call a constrained walk model generic if the following five properties hold:

Property 1. The generating functions B(t), M(t) and E(t) are algebraic, not rational.

Property 2. They have a unique dominant singularity, which is algebraic, not a pole.

Property 3. The factor G(t, u) in Eq. (8) is a polynomial in t.

Property 4. Let \(\rho \) be the smallest positive real number such that a large branch meets a small branch at \(t=\rho \). No large negative branch (i.e., a branch of \(K(t,u)=0\) such that \(\lim _{t\rightarrow 0^+} u(t)=-\infty \)) meets a small negative branch at \(t=\rho \).

Property 5. The smallest positive root of K(t, 1) is simple.

These properties are natural and it is easy to analyse the subcases in which they do not hold.

For Property 1, it can be the case that the forbidden pattern leads to a degenerate model, in the sense that it no longer involves any stack. Thus, we have words generated by a regular automaton (hence, the generating functions are rational and the asymptotics are well understood). Example: \({\mathcal {S}}=\{-1,1\}\) with \(p=[1,-1]\) or \(p=[-1,-1]\).

For Property 2, it is proven in [7] that several dominant singularities appear if and only if the gcd of the pairwise differences of the steps is not 1. In this case, the asymptotics are obtained via [14, Theorem 8.8]. Moreover, polar singularities are possible, but these are easy to handle.

For Property 3, it is satisfied in many natural cases (like e.g. in Theorems 3.4 and 3.5) and we analyse in the follow-up article [3] what happens otherwise.

For Property 4, we conjecture that it always holds. In fact, we have a proof for many classes of walks, but some remaining cases are open. Note that it is possible to exhibit cases where one small negative root meets a large negative root, at some \(\rho '>\rho \): this is e.g. the case for \({\mathcal {S}}=\{-2, -1, 0, 1, 2\}\) with \(p=[0, 1, -2]\). Moreover, it is also possible that two small negative roots meet at \(\rho \): e.g. for \({\mathcal {S}}=\{-2,1\}\) with \(p=[1,-2,1,-2]\).

For Property 5, an example of a double root for K is given by \({\mathcal {S}}=\{-1,1\}\) and \(p=[-1,1]\) (this example corresponds to the very last drawing in Table 2). Double or higher multiplicity roots would just create additional subcases (trivial to handle) in the following theorems.
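
For the reader's convenience, here is the short computation behind the example for Property 5; it only uses the definition of the kernel, and the fact that \(p=[-1,1]\) has no presuffix other than itself (its first and last letters differ), so that \(R=1\).

```latex
\begin{align*}
P(u) &= u + u^{-1}, \qquad \ell = 2, \qquad \operatorname{alt}(p) = 0, \qquad R(t,u) = 1,\\
K(t,u) &= \bigl(1 - tP(u)\bigr)R(t,u) + t^{\ell}u^{\operatorname{alt}(p)}
        = 1 - t\,(u + u^{-1}) + t^{2},\\
K(t,1) &= 1 - 2t + t^{2} = (1-t)^{2}.
\end{align*}
```

Hence \(t=1\) is a double root of K(t, 1), and Property 5 fails for this model.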

We observe that the behaviour of real branches of \(K(t,u)=0\) is much more complicated and diverse than that in the Banderier–Flajolet study. To recall, in their case there are always two real positive branches (one small branch \(u_1\) and one large branch \(v_1\)) that meet at a singularity point \((t,u)=(\rho , \tau )\), where \(u=\tau \) is the only positive number such that \(P'(\tau )=0\). In contrast, in our case we may have additional positive branches—even when the autocorrelation is trivial.

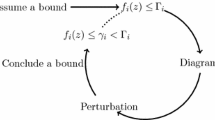

Table 2 from Sect. 4 illustrates that we always have a small branch and one large branch whose shape in general resembles that of \(u_1 \cup v_1\) observed for classical paths by Banderier and Flajolet. In one sense, the geometry of the branches of K observed for classical paths is now perturbed by the pattern avoidance constraint: this perturbation adds new branches. In the next section, we introduce the generating function W(t, u, v) where v encodes the number of occurrences of the pattern p. One can then play with v as if it encoded a Boltzmann weight/Gibbs measure (a typical point of view in statistical mechanics): moving the parameter v in a continuous way from 1 to 0 gives a rigorous explanation of this perturbation phenomenon, and shows the coherence with the emergence of new branches. More information about these branches (and their Puiseux expansions) can be derived from the Newton polygon associated with the kernel (see Proposition 4.4 and [34]).

Lemma 6.2

(Location and nature of the dominant singularity) For any generic model, the dominant singularity of B(t) and E(t) is \(\rho \), the smallest real positive number such that a small branch meets a large branch at \(t=\rho \). (The branches refer to the roots of \(K(t,u)=0\), as defined in (4)). We call these branches \(u_1\) and \(v_1\). Additionally, their branching point is a square root singularity.

Proof

Lattice paths avoiding a given pattern can be generated by a pushdown automaton (see Fig. 1). Accordingly, they can be generated by a context-free grammar, and their generating functions thus satisfy a “positive” system of algebraic equations (see [27]). Therefore, the asymptotic number of words of length n in such languages is of the form \(C \rho ^{-n} n^\alpha \). When the system is not strongly connected, \(\alpha \) is either an integer (if \(\rho \) is a pole) or a dyadic number (if one has an iterated square root Puiseux singularity at \(\rho \)), as proven by Banderier and Drmota in [8]. For excursions, one has a strongly connected dependency graph (see Fig. 1); the dominant singularity \(\rho \) (or, possibly, the dominant singularities) thus behaves like a square root, as we have generic walks (and not a degenerate case where we face a polar singularity).

Now, for generic walks, because of the product formula (8) for excursions, one (or several) of the small roots have to follow this square root Puiseux behaviour. By Pringsheim’s theorem, this has to be at a place \(0<\rho \le 1\). Note that the Pólya–Fatou–Carlson theorem [26] on pure algebraic functions with integer coefficients says that they cannot have radius of convergence 1. Therefore, the first crossing between a small and large branch is at \(0<\rho <1\) (i.e., \(\rho =1\) or any other root of \(t-t^\ell \), cannot be the dominant singularity). Now, by Proposition 4.1, the geometry of the branches implies that this branching point is at a location where a large branch meets a small branch, because if the branching point comes from the intersection of small roots only (see the examples 1, 6, 8 in Table 2 for such a case), then their product will be regular. So, \(\rho \) has to be the smallest real positive number where a small branch meets a large branch.

When one does not take into account occurrences of a pattern, the generating function of bridges is essentially the logarithmic derivative of the generating function of excursions, and they have the same radius of convergence (the cycle lemma, the identity \(B=1+ E t \partial _t A\), Spitzer’s and Sparre Andersen’s formulas are alter egos of this relation, see the paragraph “On the relation between bridges and excursions” in [9, Theorem 5]). For walks with a forbidden pattern, this simple relation no longer holds and there is no apparent equation linking the two generating functions. Nevertheless, the numbers \(e_n\) of excursions and \(b_n\) of bridges of length n still satisfy \(e_n\le b_n \le n e_n\) (this is easily seen by doing the n cyclic shifts of each excursion). This implies that E(t) and B(t) have the same radius of convergence. \(\square \)

Equipped with this additional information on the roots and the way they cross, we can derive the following asymptotic results. Note that we use the notations \(K_t(t,u)\) for \((\partial _t K)(t,u)\), and \(K_{uu}(t,u)\) for \((\partial _u^2 K)(t,u)\). We start with the asymptotics of walks on \({\mathbb {Z}}\) with a forbidden pattern.

Theorem 6.3

(Asymptotics of walks on \({\mathbb {Z}}\)) Let \(\rho _K\) be the smallest positive root of K(t, 1). For any generic model, the asymptotic number of walks of length n avoiding a pattern p is

Proof

This follows from the partial fraction decomposition of \(W(t)=\frac{R(t,1)}{K(t,1)}\), where \(\rho _K\) is a simple pole as the model is generic. \(\square \)
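
As an illustration of this simple-pole behaviour, take \({\mathcal {S}}=\{-1,1\}\) and \(p=[1,1]\). With R built from the presuffixes of p one finds \(R(t,1)=1+t\) and \(K(t,1)=1-t-t^2\), so that W(t) is the generating function of the Fibonacci numbers and \(1/\rho _K\) is the golden ratio. The sketch below expands \(R(t,1)/K(t,1)\), compares the coefficients with a brute-force count, and looks at the ratio of consecutive coefficients. Only the growth rate is examined, the other genericity properties of this model are not checked, and the function names are illustrative.

```python
from itertools import product


def series(num, den, n_max):
    """Coefficients of num(t)/den(t) up to t^n_max, assuming den[0] == 1."""
    coeffs = []
    for n in range(n_max + 1):
        c = num[n] if n < len(num) else 0
        c -= sum(den[k] * coeffs[n - k]
                 for k in range(1, min(n, len(den) - 1) + 1))
        coeffs.append(c)
    return coeffs


def count_avoiding(steps, p, n):
    """Brute-force number of walks of length n avoiding the pattern p."""
    ell = len(p)
    return sum(1 for w in product(steps, repeat=n)
               if all(list(w[i:i + ell]) != p for i in range(n - ell + 1)))


# R(t,1) = 1 + t and K(t,1) = 1 - t - t^2 for S = {-1, 1}, p = [1, 1]
w = series([1, 1], [1, -1, -1], 30)
golden_ratio = (1 + 5 ** 0.5) / 2        # 1 / rho_K for this model
print(w[:8], w[30] / w[29], golden_ratio)
```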

Now, for excursions and bridges, the corresponding generating functions have an algebraic dominant singularity; this leads to the following theorems.

Theorem 6.4

(Asymptotics of excursions) For any generic model, the asymptotic number of excursions of length n avoiding a pattern p is

where \(\tau :=u_1(\rho )\), \(Y(t):=u_2(t)\cdots u_e(t)\), and \(D(t):=[u^0] u^e K(t,u)\).

Proof

We use the closed form given in Theorem 3.2. Since the model is generic, the product \(\frac{G(t,0)}{D(t)}Y(t)\) is analytic for \(|t|\le \rho \). (Caveat: it can be the case that some small branches are not analytic for some \(|t|< \rho \), however, their product is then analytic.) Now, for any generic pattern, D(t) is either a monomial or of the shape given in Case 3 of the proof of Theorem 3.5, but, as \(\rho <1\) (as shown in the course of the proof of Lemma 6.2), one thus has \(D(\rho )\ne 0\). So, the singularity and the local behaviour of E(t) is completely determined by the singular behaviour of \(u_1(t)\). This local expansion of \(u_1\) is given by a local inversion of K(t, u) at \((t,u)=(\rho ,\tau )\); this leads to

The claim is then reached by singularity analysis (see [42]) on the Puiseux expansion

Theorem 6.5

(Asymptotics of bridges) For any generic model, the asymptotic number of bridges of length n avoiding a pattern p is

Proof

We know from Lemma 6.2 that B(t) and E(t) have the same radius of convergence, where B(t) is given by Eq. (7) from Theorem 3.1. Thus, the singular behaviour of \(u_1(t)\) determines the singularity and the local behaviour of B(t). We have therefore

with a denominator \(K_t\) which is not 0 for \(t=\rho \). So, plugging the singular expansion of \(u_1\) into this formula yields the result. \(\square \)

We now introduce the notion of drift, which plays a role for the asymptotics of meanders.

Definition 6.6

(Drift of a walk) For any given set of steps \({\mathcal {S}}\) and forbidden pattern p, the drift is the quantity

Thus \(\delta >0\), \(\delta <0\), or \(\delta =0\) correspond to the fact that almost all the walks of length n on \({\mathbb {Z}}\) have a final altitude of order which is either \(+\Theta (n)\), \(-\Theta (n)\), or o(n), respectively. One says that these walks (and the corresponding meanders/excursions/bridges) have a positive, negative or zero drift, respectively.

As usual, the drift plays no role for the asymptotics of excursions and bridges. Indeed, forcing the walk to end at altitude zero “kills” the drift. This is best seen by a “time reversal” argument: under this transformation, a bridge stays a bridge (which then avoids the reverse forbidden pattern), one thus gets the same generating function (note that K(t, u) then becomes K(t, 1 / u)); therefore, the asymptotics have to be independent of the drift. A similar reasoning holds for excursions. Now, the next theorem shows how the drift does play a role for the asymptotics of meanders. For meanders with negative or zero drift, the quantity \(\rho \) from Lemma 6.2 is also the radius of convergence of M(t). For meanders with positive drift, the radius of convergence of M(t) is the dominant pole of 1 / K(t, 1).

Theorem 6.7

(Asymptotics of meanders) Assume that the model is generic. Let \(\rho \), \(\rho _K\), and \(\tau \) be defined like in the previous theorems. We have one of the following three cases:

If \(\tau =1\) and \(\rho _K=\rho \), then we are in the “zero drift” case.

If \(\tau >1\) and \(u_1(\rho _K)=1\) and all large roots v satisfy \(v(t)\ne 1\) for \(\rho _K<t<\rho \), then we are in the “negative drift” case.

If either \(\tau <1\), or \(\tau =1\) but \(\rho _K<\rho \), or \(\tau >1\) but some large root v satisfies \(v(\rho _K)=1\), then we are in the “positive drift” case.

Then the asymptotics of the coefficients of the meander generating function

is given by

Proof

Before we begin the case analysis, let us mention some preliminary facts. The line \(u=1\) intersects the curve shaped by \(u_1 \cup v_1\) at some point \((t_0,1)\) (see Fig. 5). Notice that the right-most point of this curve is \((\rho ,\tau )\). Thus \(t_0\le \rho \), where equality holds if and only if \(\tau =1\). Moreover, observe that \(K(t_0,1)=0\) and thus \(\rho _K\le \rho \), where equality can only hold if \(\tau =1\). This last fact comes as no surprise, as the growth rate of all walks on \({\mathbb {Z}}\) is larger than or equal to the growth rate of meanders, which are a subset of walks restricted on \({\mathbb {N}}\). It also tells us that the three cases listed in the assertion cover all possibilities that may appear for generic walks.

Zero drift case: To prove the assertion, observe that the dominant singularity of the generating function \(M(t)=(1-u_1(t))Y(t)G(t,1)/K(t,1)\) is at \(\rho _K=\rho \) and it originates from a simple zero in the denominator K(t, 1) and from \(u_1\). The singular expansion of \(u_1(t)\) at \(\rho \) [see Formula (41)] gives

Negative drift case: We have \(\tau >1\) and thus, by the preliminary facts listed in the first paragraph, \(\rho _K<\rho \). So, there is a simple zero of K(t, 1) at \(\rho _K\), but this is cancelled, as \(u_1(\rho _K)=1\). Now, \(u_1\) has a square-root type singularity at \(\rho \), thus singularity analysis gives the last claim of the theorem, via the following Puiseux expansion at the dominant singularity \(\rho \)

Note that there may be a second zero of K(t, 1), say \(\rho _2\), which is smaller than \(\rho \) (and larger than \(\rho _K\)). This means there is a small root \(u_2(t)\) (large roots are excluded in the negative drift case) of the kernel satisfying \(u_2(\rho _2)=1\). As Y(t) contains the factor \(1-u_2(t)\), this zero is cancelled. In case the root \(\rho _2\) is a multiple zero of K(t, 1), say of order \(\omega \), then there must be \(\omega \) roots \(u_2(t),\dots ,u_{\omega +1}(t)\) which meet at \(t=\rho _2\), causing a singularity of order \(\omega \). But then again the factors \(1-u_i(t), i=2,\dots ,\omega +1\), in Y(t) cancel the pole. The same happens if further zeros of K(t, 1) appear before \(\rho \). Hence we conclude that the dominant singularity of M(t) is at \(\rho \) and originates from the dominant singularity of \(u_1(t)\).

Positive drift case: Here we have several subcases. Assume first that \(\tau <1\) or \(\tau =1\) and \(\rho _K<\rho \). In fact, by the preliminary facts from the first paragraph, we know that we have in both cases \(\rho _K<\rho \), and hence the generating function has the dominant singularity \(\rho _K\) which comes from the kernel only and is a simple pole. This implies

If \(\tau >1\), as in the negative drift case, and some large root v satisfies \(v(\rho _K)=1\), then the zero of K(t, 1) is no longer cancelled by one of the factors in Y(t). Thus M(t) has a simple pole at \(\rho _K\) and we get the same expression as in the other subcases. \(\square \)

These asymptotics also allow us to get results on limit laws, as presented in the next section.

Fig. 6 Pushdown automaton and its associated adjacency matrix A. The automaton generates walks with the set of steps \({\mathcal {S}}=\{-1,1,2\}\), and marks each occurrence of the pattern \(p=[1,2,-1,1,2]\). The dashed red arrow is the transition from the last state \(X_{\ell }\), labelled by \(a_{\ell }\), the last letter of the pattern. Giving a weight v to this transition leads to enumerative formulas involving \(\det (I-tA)\), as given in Eq. (46)

7 Limit Law for the Number of Occurrences of a Pattern

Our approach also allows us to count the number of occurrences of a pattern in paths. As usual, an occurrence of p in w is any substring of w that coincides with p, and when we count them we do not require the occurrences to be disjoint. For example, the number of occurrences of 11 in 1111 is 3. One has
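The counting convention above (overlapping occurrences are all counted) can be made concrete with a minimal sketch. The function name `count_occurrences` is ours, introduced only for illustration.

```python
def count_occurrences(word, pattern):
    """Number of (possibly overlapping) occurrences of `pattern` as a factor of `word`."""
    ell = len(pattern)
    return sum(
        1
        for i in range(len(word) - ell + 1)
        if list(word[i:i + ell]) == list(pattern)
    )


# The example from the text: 11 occurs three times in 1111.
print(count_occurrences([1, 1, 1, 1], [1, 1]))
# A self-overlapping lattice-path pattern: two occurrences sharing the factor [1, 2].
print(count_occurrences([1, 2, -1, 1, 2, -1, 1, 2], [1, 2, -1, 1, 2]))
```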

Theorem 7.1

(Trivariate generating function for walks) The generating function of the number of occurrences of the pattern p in walks on \({\mathbb {Z}}\) is

Proof

We give two proofs, each of them having its own interest. Both of them are of wider applicability, see [42, pp. 60 and 212].

First proof, via symbolic inclusion-exclusion. Define a cluster as a sequence of repetitions of the pattern p (possibly overlapping), where each occurrence of p is marked by the variable v, so the set \({\mathcal {C}}\) of clusters is given by \({\mathcal {C}}= v p {\text {Seq}}(v (\mathcal {{{\bar{Q}}}}-\epsilon ))\), where \(\mathcal {{{\bar{Q}}}}-\epsilon \) is the set of nonempty complements of presuffixes of p [the generating function of which is \(R(t,u)-1\), see Formula (3)]. Obviously, \(W(t,u,v+1)= {\text {Seq}}({\mathcal {S}} +{\mathcal {C}})\). This directly gives (42).

Second proof, via a system/adding a jump approach. Let \(W \equiv W(t,u,v)\) and \(W_p \equiv W_p(t,u,v)\) be the generating functions of all words and, respectively, of words ending with p, where v counts the number of occurrences of p. We show the following two identities:

To show (43), take a word and add a letter to it. If the resulting word does not end with p, it is counted by \(W - W_p\); if it does, it is counted by \(v^{-1}W_p\). To show (44), take a word w with i occurrences of p and consider the contribution of w.p to both sides of the equation. Adding the pattern p to w creates a number j of extra occurrences of p. The path w.p can be written in j ways as \(w'r\), where \(w'\) ends with p and r is an autocorrelation factor, or in \(j-1\) ways if we impose that \(r\ne \varepsilon \). The word w.p therefore contributes a factor \(v^i\) to \(Wt^\ell u^{{\text {alt}}(p)}\), a factor \(v^{i+1} + \cdots + v^{i+j}\) to \(W_pR\), and a factor \(v^{i+1} + \cdots + v^{i+j-1}\) to \(W_p(R-1)\). The formula (42) follows.\(\square \)
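As a sanity check of the cluster argument, one can compare it with brute-force enumeration. The sketch below works at \(u=1\) and uses our reading of the first proof: from \(W(t,u,v+1)={\text {Seq}}({\mathcal {S}}+{\mathcal {C}})\) we take \(W(t,1,v)=1/\bigl (1-tP(1)-(v-1)t^\ell /(1-(v-1)(R(t,1)-1))\bigr )\). This closed form, the step set, the pattern, and all helper names are assumptions chosen for illustration. Polynomials in v are plain coefficient lists and series in t are truncated at order N.

```python
from itertools import product

STEPS = (-1, 1, 2)
PATTERN = (1, 2, -1, 1, 2)
N = 8                      # truncation order in t
ell = len(PATTERN)

def autocorrelation_shifts(p):
    """Lengths k of the complements of presuffixes of p (k = 0 is the trivial one)."""
    return [k for k in range(len(p)) if p[k:] == p[:len(p) - k]]

def padd(a, b):
    """Sum of two polynomials in v (coefficient lists)."""
    n = max(len(a), len(b))
    return [(a[i] if i < len(a) else 0) + (b[i] if i < len(b) else 0) for i in range(n)]

def pmul(a, b):
    """Product of two polynomials in v (coefficient lists)."""
    if not a or not b:
        return []
    out = [0] * (len(a) + len(b) - 1)
    for i, x in enumerate(a):
        for j, y in enumerate(b):
            out[i + j] += x * y
    return out

def geometric(x):
    """Series 1/(1-x) up to order N, for a series x in t with zero constant term."""
    y = [[] for _ in range(N + 1)]
    y[0] = [1]
    for n in range(1, N + 1):          # uses y = 1 + x*y coefficient by coefficient
        for k in range(1, n + 1):
            y[n] = padd(y[n], pmul(x[k], y[n - k]))
    return y

vm1 = [-1, 1]                                   # the polynomial v - 1
x1 = [[] for _ in range(N + 1)]                 # (v-1) * (R(t,1) - 1)
for k in autocorrelation_shifts(PATTERN):
    if 0 < k <= N:
        x1[k] = vm1
inner = geometric(x1)                           # 1 / (1 - (v-1)(R-1))
x = [[] for _ in range(N + 1)]
x[1] = [len(STEPS)]                             # t * P(1)
for n in range(ell, N + 1):                     # + (v-1) * t^ell * inner
    x[n] = padd(x[n], pmul(vm1, inner[n - ell]))
W = geometric(x)                                # W[n] = polynomial in v for length n

def strip(p):
    """Drop trailing zero coefficients."""
    p = list(p)
    while p and p[-1] == 0:
        p.pop()
    return p

def brute(n):
    """Words of length n over STEPS, counted by number of occurrences of PATTERN."""
    counts = {}
    for w in product(STEPS, repeat=n):
        occ = sum(w[i:i + ell] == PATTERN for i in range(n - ell + 1))
        counts[occ] = counts.get(occ, 0) + 1
    return [counts.get(k, 0) for k in range(max(counts) + 1)]

matches = all(strip(W[n]) == brute(n) for n in range(N + 1))
print(matches)
```

The check is only a finite truncation at \(u=1\), so it supports the identity rather than proving it.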

In order to get the formula for meanders, we reconsider the associated automaton and its adjacency matrix A: we add a mark v to the transition which would lead to an occurrence of p (see Fig. 6). As

the above theorem has the consequence that

This last equality follows from the fact that the denominators of the rational functions (in \({\mathbb {Q}}(t)\)) in (45) and (42) are in fact the same irreducible polynomial of degree \(\ell \) in t.

Accordingly, the trivariate kernel is defined as \(K(t,u,v):=\det (I-tA)\). Note that for \(v=0\) we get the kernel from the avoidance case [see Eq. (4)], and for \(v=1\) we get \(1-tP\) (which is, as expected, the kernel from [9]). The formulas for the trivariate generating functions of bridges, meanders, and excursions are thus as in Theorems 3.1 and 3.2, where the \(u_i\)’s are now the small roots of the trivariate kernel. This allows us to prove a universal asymptotic behaviour, an instance of what Flajolet and Sedgewick pleasantly called Borges’s theorem (we comment more on it in the conclusion).
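The two specialisations of \(\det (I-tA)\) can be checked numerically at rational points. The sketch below builds a Knuth–Morris–Pratt-style automaton for \(p=[1,2,-1,1,2]\) with steps \(\{-1,1,2\}\); we assume (it is not verified against the figure) that this matches the automaton of Fig. 6 up to naming of states. We also assume that the avoidance kernel of Eq. (4) has the form \((1-tP(u))R(t,u)+t^\ell u^{{\text {alt}}(p)}\), since that equation is not reproduced in this section. All function names are hypothetical.

```python
from fractions import Fraction

STEPS = (-1, 1, 2)
PATTERN = (1, 2, -1, 1, 2)
ell = len(PATTERN)

def transition(i, a):
    """From state i (i letters of PATTERN matched), read letter a.

    Returns (next_state, completes), where `completes` says whether this
    transition finishes an occurrence of PATTERN.
    """
    w = PATTERN[:i] + (a,)
    completes = (w == PATTERN)
    if completes:
        w = w[1:]                       # only proper borders may be kept
    for k in range(len(w), -1, -1):     # longest suffix of w that is a prefix of PATTERN
        if w[len(w) - k:] == PATTERN[:k]:
            return k, completes

def adjacency(u, v):
    """Adjacency matrix A: weight u^a per letter a, extra weight v on completing transitions."""
    A = [[Fraction(0)] * ell for _ in range(ell)]
    for i in range(ell):
        for a in STEPS:
            j, completes = transition(i, a)
            A[i][j] += Fraction(u) ** a * (v if completes else 1)
    return A

def det(M):
    """Exact determinant by Gaussian elimination over the rationals."""
    M = [row[:] for row in M]
    n, d = len(M), Fraction(1)
    for c in range(n):
        pivot = next((r for r in range(c, n) if M[r][c] != 0), None)
        if pivot is None:
            return Fraction(0)
        if pivot != c:
            M[c], M[pivot] = M[pivot], M[c]
            d = -d
        d *= M[c][c]
        for r in range(c + 1, n):
            f = M[r][c] / M[c][c]
            for k in range(c, n):
                M[r][k] -= f * M[c][k]
    return d

def kernel(t, u, v):
    """K(t,u,v) = det(I - tA), evaluated at rational t, u, v."""
    A = adjacency(u, v)
    return det([[(1 if i == j else 0) - t * A[i][j] for j in range(ell)] for i in range(ell)])

def P(u):
    return sum(Fraction(u) ** a for a in STEPS)

def R(t, u):
    """Autocorrelation polynomial: one term per presuffix, weighted by its complement."""
    return sum(
        t ** k * Fraction(u) ** sum(PATTERN[ell - k:])
        for k in range(ell)
        if PATTERN[k:] == PATTERN[:ell - k]
    )

t, u = Fraction(1, 7), Fraction(2)
print(kernel(t, u, 1) == 1 - t * P(u))                                        # v = 1
print(kernel(t, u, 0) == (1 - t * P(u)) * R(t, u) + t ** ell * u ** sum(PATTERN))  # v = 0
```

Agreement at a few rational points is evidence, not a proof; a symbolic determinant would be needed for the latter.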

Theorem 7.2

(“Borges’s theorem”: Gaussian limit laws for occurrences) Let \(X_n\) be the random variable which counts the number of occurrences of a pattern in a generic walk, bridge, meander, or excursion model. Then \(X_n\) has a Gaussian limiting distribution with \({\mathbb {E}}[X_n] = \mu n + O(1)\) and \({\mathbb {V}}\mathrm{ar}[X_n] = \sigma ^2 n + O(1)\) for some constants \(\mu > 0\) and \(\sigma ^2\ge 0\):

Proof

All these combinatorial structures are generated by context-free grammars [7, 36, 56, 61]. Accordingly, their generating functions satisfy a positive algebraic system. This leads to Gaussian limit laws, as proven in [8, Theorem 9]: the proof follows the dependencies in the graph associated with the system, and applies Hwang’s quasi-power theorem to each component. To apply it, a positive variance condition has to be checked: this is done via the trivariate version of the formulas of Theorems 3.1 and 3.2, with the additional variable v counting the number of occurrences of the pattern. \(\square \)
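The linear growth of mean and variance can be observed exactly on a small model. The sketch below is an illustration only, not the method of the proof: we choose (as an assumption) Dyck meanders, i.e. steps \(\pm 1\) never going below 0, and the pattern "peak" \([1,-1]\), and compute the exact distribution of the number of peaks by dynamic programming over (altitude, last step, number of peaks).

```python
from collections import defaultdict
from fractions import Fraction

def peak_distribution(n):
    """Map k -> number of Dyck meanders of length n with exactly k peaks [1, -1]."""
    # state: (altitude, last step was an up step, peaks so far) -> number of paths
    layer = {(0, False, 0): 1}
    for _ in range(n):
        nxt = defaultdict(int)
        for (h, up, k), c in layer.items():
            nxt[(h + 1, True, k)] += c                      # up step
            if h > 0:                                       # down step must stay >= 0
                nxt[(h - 1, False, k + (1 if up else 0))] += c  # up-then-down closes a peak
        layer = nxt
    dist = defaultdict(int)
    for (_, _, k), c in layer.items():
        dist[k] += c
    return dict(dist)

def mean_and_variance(dist):
    """Exact mean and variance of the number of peaks under the uniform distribution."""
    total = sum(dist.values())
    mean = Fraction(sum(k * c for k, c in dist.items()), total)
    second = Fraction(sum(k * k * c for k, c in dist.items()), total)
    return mean, second - mean ** 2

for n in (20, 40, 60):
    mean, var = mean_and_variance(peak_distribution(n))
    print(n, float(mean / n), float(var / n))
```

In this toy model both ratios stabilise quickly, which is consistent with \({\mathbb {E}}[X_n]=\mu n+O(1)\) and \({\mathbb {V}}\mathrm{ar}[X_n]=\sigma ^2n+O(1)\); it says nothing about the shape of the limit law itself.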

8 Examples, Pushdown Automata

Directed lattice paths can be generated by context-free grammars [56], and it is well-known that context-free grammars and pushdown automata/counter automata are related (see the “Cinderella Book” [48, Sections 6.3, 6.4, and 8.5.4]). We want here to promote the idea that pushdown automata are a powerful approach for lattice path enumeration. Conversely, any pushdown automaton enumeration can be seen as a lattice path problem (the stack encodes the altitude of the path); this allows us to solve it via our vectorial kernel method. This is due to the fact that pushdown automata have mainly two notions of acceptance:

acceptance by empty stack, which corresponds to the generation of excursions;

acceptance by final state (whatever the value of the stack is), which corresponds to the generation of meanders.
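The two acceptance modes can be illustrated by a small counting sketch in which the stack height plays the role of the altitude. The function name `count_paths` and the choice of Dyck steps in the usage lines are ours.

```python
def count_paths(steps, n):
    """Return (meanders, excursions) of length n for the given step set.

    Meanders: the stack height (altitude) never becomes negative
    (acceptance whatever the final stack is).
    Excursions: additionally, the stack is empty at the end.
    """
    layer = {0: 1}                          # altitude -> number of paths
    for _ in range(n):
        nxt = {}
        for h, c in layer.items():
            for s in steps:
                if h + s >= 0:              # the stack cannot go below empty
                    nxt[h + s] = nxt.get(h + s, 0) + c
        layer = nxt
    return sum(layer.values()), layer.get(0, 0)

# Dyck steps: meanders give central binomial coefficients, excursions give Catalan numbers.
print([count_paths((1, -1), n)[0] for n in range(7)])
print([count_paths((1, -1), n)[1] for n in range(7)])
```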

Let us now illustrate our method by diverse examples.

Example 8.1

(Forbidden patterns) We start with our initial problem of lattice paths with forbidden patterns. Our theorems rediscover, in a uniform way, numerous results obtained by different authors in recent years by different methods. We present some of them in Table 3.

We now give several examples of the wide variety of questions on lattice paths that can also be tackled with our approach. In some cases, we omit the closed forms: they are just a direct application of our approach, once the adjacency matrix A is known. Some pushdown automata lead to a system for which an additional argument is needed to identify the factor G in Theorem 3.2; we study this situation in more detail in [3].

Example 8.2

(Motzkin paths without peaks and valleys)

The automaton on the left allows us to count Motzkin paths by marking each peak (i.e., the pattern \([1,-1]\)) and each valley (i.e., the pattern \([-1,1]\)). Forbidding the transitions shown by red dashed lines, we get the automaton for Motzkin paths without peaks and valleys. Then the kernel is \(K(t,u)=\frac{{t}^{3}u+{t}^{2}u-t{u}^{2}-tu-t+u}{u}\), and it has one small root \(u_0(t) = {\frac{1-t+{t}^{2}+{t}^{3}-\sqrt{(1-t^4)(1-2t-t^2)}}{2t}}\). The degree of \(\Phi \) (as in Eq. 25) is equal to the number of small roots of K, and taking care of the leading term (in u) of \(\Phi \) we obtain

Setting \(u=1\), we get the generating function for meanders

which corresponds to the sequence A308435.

Setting \(u=0\), we get the generating function for excursions

which corresponds to the sequence A004149. \(\square \)
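For readers who want to test the closed forms of this example against numbers, the following sketch counts Motzkin meanders and excursions without peaks and valleys directly, by dynamic programming over (altitude, last step). It does not use the kernel; the function name is ours, and we make no claim here about how the output lines up with the offsets of the OEIS entries.

```python
FORBIDDEN = {(1, -1), (-1, 1)}     # peak and valley, as consecutive step pairs

def motzkin_no_peaks_valleys(n):
    """Return (meanders, excursions) of length n with steps -1, 0, 1 avoiding peaks and valleys."""
    # state: (altitude, last step) -> count; the initial "last step" 0 forbids nothing
    layer = {(0, 0): 1}
    for _ in range(n):
        nxt = {}
        for (h, last), c in layer.items():
            for s in (-1, 0, 1):
                if h + s < 0 or (last, s) in FORBIDDEN:
                    continue
                key = (h + s, s)
                nxt[key] = nxt.get(key, 0) + c
        layer = nxt
    meanders = sum(layer.values())
    excursions = sum(c for (h, _), c in layer.items() if h == 0)
    return meanders, excursions

print([motzkin_no_peaks_valleys(n)[0] for n in range(8)])   # meanders
print([motzkin_no_peaks_valleys(n)[1] for n in range(8)])   # excursions
```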

Example 8.3

(Basketball with alternation of team scoring)

In the pre-1980s basketball rules, each team could score 1 or 2 points at once. Zeilberger and other authors [4, 11, 23] therefore considered the model of (old-time) basketball excursions, where the step set is \({\mathcal {S}}=\{2, 1,-1,-2\}\). If we now add the constraint that each team cannot score twice in a row, this gives the automaton presented on the left. It yields the bivariate generating function

which correspond to the sequences A001405 and A126120. \(\square \)

Example 8.4

(Walks/meanders/excursions of bounded height) If one considers meanders of altitude bounded by h, they are easily generated by an automaton with h states. In fact, the reader now familiar with our approach will realize that a pushdown automaton with just one state is already enough: the kernel equation will encode the boundedness by h and the positivity constraint! See [7, 12, 23] for further considerations on the closed forms obtained. This bypasses the resolution of an \(h\times h\) system, which quickly becomes intractable.

Example 8.5

(Partially directed self-avoiding walks) We can link some self-avoiding walks and pattern avoiding lattice paths. In fact, the enumeration and the asymptotics of self-avoiding walks in \({\mathbb {Z}}^2\) is one of the famous open problems of combinatorics and probability theory. As is classical for intractable problems, many natural subclasses have been introduced, and solved. For example, partially directed self-avoiding walks with an added constraint of living in a half-plane or a strip [6]: they have three kinds of steps, say \(\mathsf {n}\), \(\mathsf {e}\), and \(\mathsf {s}\), and the self-avoiding condition means that factors \(\mathsf {n}\mathsf {s}\) and \(\mathsf {s}\mathsf {n}\) are disallowed. Consider the following three models:

in the first model, the half-plane is the one over the line \(y = 0\); the heights of the steps are \({\text {alt}}(\mathsf {n}) = 1\), \({\text {alt}}(\mathsf {e}) = 0\) and \({\text {alt}}(\mathsf {s}) = -1\);

in the second model, the half-plane is the one over the line \(x = y\); the heights are \({\text {alt}}(\mathsf {n}) = 1\), \({\text {alt}}(\mathsf {e}) = -1\) and \({\text {alt}}(\mathsf {s}) = -1\);

in the third model, the half-plane is the one over the line \(x = -y\); the heights are \({\text {alt}}(\mathsf {n}) = 1\), \({\text {alt}}(\mathsf {e}) = 1\) and \({\text {alt}}(\mathsf {s}) = -1\).

Fig. 7 Some models of self-avoiding walks are encoded by partially directed lattice paths avoiding a pattern (see [6])

These models are illustrated in Fig. 7. Each of them leads to an algebraic generating function, expressible via our method as a closed form involving the roots of the kernel. \(\square \)
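The link between self-avoidance and pattern avoidance used in this example can be checked by brute force on short words. In the sketch below, the coordinates attached to \(\mathsf {n}\), \(\mathsf {e}\), \(\mathsf {s}\) and all function names are our own choices; the half-plane constraint of the three models is deliberately left out.

```python
from itertools import product

MOVES = {"n": (0, 1), "e": (1, 0), "s": (0, -1)}

def is_self_avoiding(word):
    """True if the walk encoded by `word` never visits a lattice point twice."""
    x = y = 0
    seen = {(0, 0)}
    for letter in word:
        dx, dy = MOVES[letter]
        x, y = x + dx, y + dy
        if (x, y) in seen:
            return False
        seen.add((x, y))
    return True

def avoids_ns_sn(word):
    """True if the word contains neither the factor ns nor the factor sn."""
    w = "".join(word)
    return "ns" not in w and "sn" not in w

def check_and_count(n):
    """Check that both conditions agree on all words of length n; return (agree, count)."""
    words = list(product("nes", repeat=n))
    agree = all(is_self_avoiding(w) == avoids_ns_sn(w) for w in words)
    return agree, sum(avoids_ns_sn(w) for w in words)

print([check_and_count(n) for n in range(7)])
```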

Example 8.6

(Motzkin paths with horizontal steps only at even/odd altitude) If one wants to allow some specific set of steps depending on the altitude modulo some period, the vectorial kernel method will do the job! Let us illustrate this with Motzkin paths (\({\mathcal {S}}=\{1,0,-1\}\)) with horizontal steps only at even altitude.

This pushdown automaton has two states: the first one corresponds to even altitudes, the second one to odd altitudes. By design, it is clear that it generates Motzkin walks with horizontal steps only at even altitude. Our vectorial kernel method then captures the additional constraint of the positivity of the stack, and thus gives the generating function for meanders (A307557) and excursions (A090344). En passant, let us mention that excursions are given by a pleasant continued fraction (see e.g. [41] for a nice survey):

If we want to enumerate Motzkin paths with horizontal steps only at odd altitude, we adjust the automaton as shown on the left, and obtain the sequences A327421 (for meanders) and A090345 (for excursions), see e.g. [18, 32]. \(\square \)
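A direct count makes the altitude-dependent step sets of this example concrete. The sketch below is a plain dynamic programme over altitudes, not the kernel method; the function name and the parameter `level_parity` are ours (0 allows horizontal steps at even altitudes, 1 at odd altitudes).

```python
def motzkin_parity(n, level_parity):
    """Return (meanders, excursions) of length n for Motzkin steps,
    where the horizontal step is allowed only at altitudes h with h % 2 == level_parity."""
    layer = {0: 1}                              # altitude -> number of paths
    for _ in range(n):
        nxt = {}
        for h, c in layer.items():
            steps = (1, -1, 0) if h % 2 == level_parity else (1, -1)
            for s in steps:
                if h + s >= 0:                  # stay weakly above the axis
                    nxt[h + s] = nxt.get(h + s, 0) + c
        layer = nxt
    return sum(layer.values()), layer.get(0, 0)

print([motzkin_parity(n, 0)[1] for n in range(8)])   # excursions, level steps at even altitude
print([motzkin_parity(n, 1)[1] for n in range(8)])   # excursions, level steps at odd altitude
```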

Example 8.7

(Duchon’s club without lonely visitors)

The terminology of Duchon’s club was introduced in [9] as a playful reference to the nice article [36]. As some readers may wonder, we have to add that it is in no way an allusion to some fancy habit of our highly honourable and very respectable colleague Philippe Duchon! Here is the story. In the early evening, the club opens empty, and then, during the whole night, people enter in couples and leave in groups of three. Finally, the club closes empty at the end of the night. For sure, you do not want to be alone in such a club! What is the number of possible scenarios? These are excursions with step set \({\mathcal {S}}=\{2, -3\}\) that never visit altitude 1. This enumeration is encoded by a single state automaton, and the kernel equation then fully handles all the constraints. This leads to the sequences A327422 (for meanders) and A327423 (for excursions). \(\square \)

Example 8.8

(Counting/avoiding humps and peaks in Motzkin paths) Consider Motzkin walks: \({\mathcal {S}} = \{ -1, 0, 1 \}\). A peak is the pattern \([1,-1]\). A hump is an occurrence of the pattern \([1,0^*,-1]\), that is, 1 followed by a (possibly empty) sequence of 0s, followed by \(-1\). Humps were considered e.g. in [35, 60, 72]. We first consider the generating function of walks counting the number of humps, similarly to our approach from Sect. 7.

The transition which was forbidden in the pattern avoidance setting is here represented with a variable v in the adjacency matrix A. This yields the trivariate generating function with respect to length (t), final altitude (u), and number of occurrences of humps (v). In particular, this leads to a Gaussian distribution, see Borges’s Theorem 7.2.