Abstract

The aim of this study is to speed up the scaled conjugate gradient (SCG) algorithm by shortening the training time per iteration. SCG, a supervised learning algorithm for network-based methods, is generally used to solve large-scale problems. It is well known that SCG computes second-order information from two first-order gradients of the parameters, each of which is calculated using the entire training dataset. Consequently, the computational cost of SCG per iteration is high for large-scale problems. In this study, one of the first-order gradients is estimated from previously calculated gradients without using the training dataset. A least squares error estimator is applied to estimate this gradient. For large-scale problems, the complexity of estimating the gradient is much smaller than that of computing it, because the gradient estimation is independent of the dataset size. The proposed algorithm is applied to neuro-fuzzy classifier and neural network training. The theoretical basis for the algorithm is provided, and its performance is illustrated on several well-known datasets, where it is compared with several training algorithms. The empirical results indicate that the proposed algorithm takes less time per iteration than SCG. It decreases the training time by 20–50% compared with SCG, while its convergence rate is similar to that of SCG.
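As a rough illustration of the "two first-order gradients" mentioned above, the sketch below approximates the product of the Hessian with a search direction by differencing two gradients, which is the general idea behind SCG's second-order term. The toy error function, the names `grad` and `curvature_along`, and the step size `sigma` are assumptions made for this example and are not taken from the article. In real training each call to the gradient function would require a pass over the training data, which is the cost the proposed estimator aims to avoid for one of the two calls.

```python
def grad(w):
    """Gradient of the toy error E(w) = 0.5*(3*w0^2 + w1^2) + w0*w1 (assumed example)."""
    return [3.0 * w[0] + w[1], w[0] + w[1]]


def curvature_along(grad_fn, w, p, sigma=1e-4):
    """Approximate H @ p from two first-order gradients, without forming H."""
    w_shift = [wi + sigma * pi for wi, pi in zip(w, p)]
    g0 = grad_fn(w)        # gradient at the current point
    g1 = grad_fn(w_shift)  # gradient at the point shifted along p
    return [(b - a) / sigma for a, b in zip(g0, g1)]


w = [1.0, -2.0]   # current parameters (hypothetical)
p = [0.5, 1.0]    # search direction (hypothetical)
s = curvature_along(grad, w, p)
delta = sum(pi * si for pi, si in zip(p, s))  # approximates p^T H p
```

For this quadratic toy function the Hessian is constant, so the difference quotient matches the exact product up to rounding error.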

Acknowledgments

The authors thank Rifat Edizkan, Omer Nezih Gerek, and the reviewers for all the useful discussions and their valuable comments on this article.

Appendix: The presentation of the complexity of the gradient estimation

Our claim is that the complexity of the gradient estimation \( g_{t,k}^{est} \) is less than the complexity of the gradient calculation \( g_{t,k}^{calc} , \) that is, \( O\left( {g_{t,k}^{est} } \right) < O\left( {g_{t,k}^{calc} } \right), \) where O(·) denotes the calculation complexity, measured as the number of arithmetic operations.

This can be shown by counting the operations required for each gradient.

Both \( g_{t,k}^{est} \) and \( g_{t,k}^{calc} \) represent the gradient of E with respect to \( \rho_{ij} . \)

Calculation of the operation size of \( g_{t,k}^{est} \):

In Eq. 9, the “mult” term represents the operation of multiplication.

\( g_{t,k}^{est} \) is estimated by LSE as shown below:

If \( {\mathbf{A}} = \left[ {\begin{array}{*{20}c} {a_{11} } & {a_{12} } & {a_{13} } \\ {a_{21} } & {a_{22} } & {a_{23} } \\ {a_{31} } & {a_{32} } & {a_{33} } \\ \end{array} } \right] , \) then the determinant of A is calculated as follows:

\( \left| {\mathbf{A}} \right| = a_{11} a_{22} a_{33} + a_{12} a_{23} a_{31} + a_{13} a_{21} a_{32} - a_{13} a_{22} a_{31} - a_{11} a_{23} a_{32} - a_{12} a_{21} a_{33} \)

In addition, \( O\left( {\left| {\mathbf{A}} \right|} \right) = 5\,add + 2 \times 6\,mult, \) where the term “add” represents the addition operation. The adjoint of A is given below:

The operation size of adj(A) is \( O\left( {adj\left( {\mathbf{A}} \right)} \right) = 9 \times \left( {1\,add + 2\,mult} \right). \) Now, \( {\mathbf{A}}^{ - 1} \) and its operation size can be determined as:

Lastly, \( O\left( {g_{t,k}^{est} = {\varvec{\Uptheta}}_{t,k} {\mathbf{F}}} \right) = 2\,add + 2\,mult \) and \( Total\,O = 22\,add + 53\,mult. \)
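The operation counts quoted above for the determinant and the adjoint of a 3 × 3 matrix can be checked with a small sketch. The code below inverts a 3 × 3 matrix through its adjoint while tallying additions and multiplications. The function names, the `ops` dictionary, and the example matrix are hypothetical, and counting each division by the determinant as one multiplication is an assumption of this sketch. It covers only the matrix inversion, not the remaining steps that make up the total stated above.

```python
def det3(a, ops):
    """Six-term expansion of a 3x3 determinant: 12 mult, 5 add."""
    pos = a[0][0]*a[1][1]*a[2][2] + a[0][1]*a[1][2]*a[2][0] + a[0][2]*a[1][0]*a[2][1]
    neg = a[0][2]*a[1][1]*a[2][0] + a[0][0]*a[1][2]*a[2][1] + a[0][1]*a[1][0]*a[2][2]
    ops["mult"] += 12
    ops["add"] += 5
    return pos - neg


def adj3(a, ops):
    """Adjoint (transposed cofactor matrix): 9 entries, each 2 mult + 1 add."""
    adj = [[0.0] * 3 for _ in range(3)]
    for i in range(3):
        for j in range(3):
            # Cyclic indexing yields the signed cofactor of a[i][j] directly.
            cof = (a[(i+1) % 3][(j+1) % 3] * a[(i+2) % 3][(j+2) % 3]
                   - a[(i+1) % 3][(j+2) % 3] * a[(i+2) % 3][(j+1) % 3])
            adj[j][i] = cof  # transpose while storing
            ops["mult"] += 2
            ops["add"] += 1
    return adj


def inv3(a, ops):
    """Inverse as adj(A) / |A|; the 9 divisions are counted as mult (assumption)."""
    d = det3(a, ops)
    adj = adj3(a, ops)
    ops["mult"] += 9
    return [[adj[i][j] / d for j in range(3)] for i in range(3)]


ops = {"add": 0, "mult": 0}
A = [[2.0, 0.0, 1.0], [1.0, 3.0, 0.0], [0.0, 1.0, 4.0]]  # hypothetical example
A_inv = inv3(A, ops)
```

None of these counts depends on the number of training samples, which is the property the complexity argument relies on.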

On the other hand, the calculation of \( g_{t,k}^{calc} \) and its number of arithmetic operations are determined below:

Firstly, the output of NFC should be calculated from the first layer of NFC:

where \( \rho_{ij} \,\left( {\rho_{ij} \in {\varvec{\Upgamma}}_{m \times n} } \right) \) and \( \sigma_{ij} \,\left( {\sigma_{ij} \in {\varvec{\Uplambda}}_{m \times n} } \right) \) are the centre and the width of the Gaussian membership function \( \mu_{ij} \) for the ith rule and the jth feature. In Eq. 16, the exp(·) operation is approximately calculated using the Maclaurin series:

\( \exp (z) = \sum\nolimits_{u = 0}^{\infty } {\frac{{z^{u} }}{u!}} \)
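A truncated Maclaurin series for exp(·) can be sketched as follows. The function names and the default truncation at `upper=10` are choices made for this example, and the Gaussian form exp(−0.5((x − ρ)/σ)²) used in `gaussian_membership` is an assumed, commonly used parameterisation rather than a quotation of Eq. 16. The sketch builds each term incrementally, so it does not reproduce the operation counts quoted in this appendix.

```python
import math


def exp_maclaurin(z, upper=10):
    """Approximate exp(z) by the Maclaurin series truncated after the u = upper term."""
    total = 1.0  # u = 0 term
    term = 1.0
    for u in range(1, upper + 1):
        term *= z / u   # turns z^(u-1)/(u-1)! into z^u/u!
        total += term
    return total


def gaussian_membership(x, rho, sigma, upper=10):
    """Gaussian membership with centre rho and width sigma (assumed form)."""
    z = -0.5 * ((x - rho) / sigma) ** 2
    return exp_maclaurin(z, upper)


approx = exp_maclaurin(1.0)                 # compare with math.e
mu = gaussian_membership(0.5, 0.5, 1.0)     # membership at the centre
```

The truncation is accurate only for moderate |z|; for inputs far from the centre relative to the width, more terms or a library exponential would be needed.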

If the upper limit for u is set to 10, that is, the series is truncated after the u = 10 term, then \( O\left( {\exp (z)} \right) = 10\,add + 117\,mult \) and \( O\left( {\mu_{ij} } \right) = 11\,add + 123\,mult . \) The output of the second layer is calculated as

where \( rule_{i} \) represents the firing strength of the ith rule. The output of the third layer is calculated as:

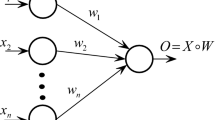

where \( w_{ik} \,\left( {w_{ik} \in {\mathbf{W}}_{m \times c} } \right) \) represents the weight of the ith rule that belongs to the kth class; m denotes the number of rules. The output of the last layer is calculated as

The mean square error function is used as the cost function:

where N represents the number of samples; c represents the number of classes; \( y_{pk} \) and \( out_{pk} \) are the target and the actual output of the pth sample and the kth class, respectively. \( g_{t,k}^{calc} \) of \( \rho_{ij} \) is calculated using the chain rule:

The total arithmetic operations of \( g_{t,k}^{calc} \) of \( \rho_{ij} \) are given below:

The comparison of the number of arithmetic operations of the gradients is given in Eq. 24.
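To make the dependence of the calculated gradient on the dataset size concrete, the following sketch implements a generic four-layer neuro-fuzzy classifier forward pass and a mean square error over N samples. Several details are assumptions of this sketch rather than statements from the article: the Gaussian membership form, the product used for the firing strength, the normalisation in the last layer, the 1/N scaling of the error, and all function and variable names. The gradient with respect to one centre is obtained here by a central finite difference for brevity, whereas the appendix uses the chain rule.

```python
import math


def nfc_forward(x, rho, sigma, w):
    """Forward pass for one sample x (assumed layer definitions)."""
    m, c = len(rho), len(w[0])
    # Layers 1 and 2: Gaussian memberships multiplied into firing strengths.
    rule = [
        math.prod(
            math.exp(-0.5 * ((x_j - r) / s) ** 2)
            for x_j, r, s in zip(x, rho[i], sigma[i])
        )
        for i in range(m)
    ]
    # Layer 3: weighted sum of firing strengths for each class.
    o = [sum(rule[i] * w[i][k] for i in range(m)) for k in range(c)]
    # Layer 4: normalisation so that the class outputs sum to one.
    total = sum(o)
    return [o_k / total for o_k in o]


def mse(X, Y, rho, sigma, w):
    """Mean square error; requires one forward pass per sample."""
    err = 0.0
    for x, y in zip(X, Y):
        out = nfc_forward(x, rho, sigma, w)
        err += sum((y_k - out_k) ** 2 for y_k, out_k in zip(y, out))
    return err / len(X)


def grad_rho_numeric(X, Y, rho, sigma, w, i, j, h=1e-5):
    """Central-difference stand-in for dE/d(rho_ij); sweeps the whole dataset twice."""
    up = [row[:] for row in rho]
    down = [row[:] for row in rho]
    up[i][j] += h
    down[i][j] -= h
    return (mse(X, Y, up, sigma, w) - mse(X, Y, down, sigma, w)) / (2 * h)


# Hypothetical two-sample, two-rule, two-class problem.
X = [[0.1, 0.2], [0.9, 0.8]]
Y = [[1.0, 0.0], [0.0, 1.0]]
rho = [[0.0, 0.0], [1.0, 1.0]]
sigma = [[0.5, 0.5], [0.5, 0.5]]
w = [[0.9, 0.1], [0.1, 0.9]]
out0 = nfc_forward(X[0], rho, sigma, w)
E = mse(X, Y, rho, sigma, w)
g = grad_rho_numeric(X, Y, rho, sigma, w, 0, 0)
```

Every evaluation of the error, and therefore of its gradient, loops over all N samples, so its cost grows with the dataset size, while an estimate built only from previously stored gradients does not.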

Cite this article

Cetişli, B., Barkana, A. Speeding up the scaled conjugate gradient algorithm and its application in neuro-fuzzy classifier training. Soft Comput 14, 365–378 (2010). https://doi.org/10.1007/s00500-009-0410-8

DOI: https://doi.org/10.1007/s00500-009-0410-8