Abstract

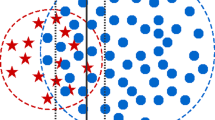

Imbalanced problems are pervasive in real-world applications. In an imbalanced distribution, one or more classes of data, called minority classes, are under-represented compared to the other classes. This skewness in the underlying data distribution causes many difficulties for typical machine learning algorithms, and the difficulties grow when the algorithms must handle multi-class imbalanced problems. Solutions proposed for the issues arising from imbalanced distributions generally fall into two main categories: data-oriented methods and model-based algorithms. Focusing on the latter, this paper proposes MDOBoost, a blend of the boosting and over-sampling paradigms that considerably improves learning on multi-class imbalanced data sets. The over-sampling technique introduced and adopted in this paper, the Mahalanobis distance-based over-sampling technique (MDO), is incorporated into the boosting algorithm: the minority classes are over-sampled via MDO in such a way that the synthetic examples largely preserve the characteristics of the original minority class. Compared with SMOTE, a popular method in this field, MDO generates minority class examples that are more similar to the original class samples. MDO also provides a broader representation of the minority class examples, which in turn leads the classifier to build larger decision regions. MDOBoost improves the generalization ability of a classifier: it achieves better results with the pruned version of the C4.5 classifier, whereas other over-sampling/boosting procedures have difficulties with pruned C4.5. MDOBoost is applied to real-world multi-class imbalanced benchmarks, and its performance is compared with several data-level and model-based algorithms.
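To make the idea of distance-preserving over-sampling concrete, here is a minimal, stdlib-only Python sketch. It is not the paper's algorithm: it assumes, as one reading of "preserving the original minority class characteristics", that each synthetic example is drawn at random on the ellipsoid of constant Mahalanobis distance that passes through a randomly chosen real minority example, with the mean and covariance estimated from that minority class. The function names, the Cholesky-based construction, and the `ridge=1e-6` regularizer are all illustrative assumptions.

```python
import math
import random


def mean_vector(X):
    """Column-wise mean of a list of equal-length feature vectors."""
    return [sum(col) / len(X) for col in zip(*X)]


def covariance(X, mu, ridge=1e-6):
    """Sample covariance of X plus a small ridge (assumed) to keep it positive definite."""
    d = len(mu)
    S = [[0.0] * d for _ in range(d)]
    for x in X:
        diff = [xi - mi for xi, mi in zip(x, mu)]
        for a in range(d):
            for b in range(d):
                S[a][b] += diff[a] * diff[b] / (len(X) - 1)
    for a in range(d):
        S[a][a] += ridge
    return S


def cholesky(S):
    """Lower-triangular L with S = L L^T."""
    d = len(S)
    L = [[0.0] * d for _ in range(d)]
    for i in range(d):
        for j in range(i + 1):
            s = sum(L[i][k] * L[j][k] for k in range(j))
            if i == j:
                L[i][j] = math.sqrt(S[i][i] - s)
            else:
                L[i][j] = (S[i][j] - s) / L[j][j]
    return L


def mahalanobis(x, mu, L):
    """sqrt((x-mu)^T S^-1 (x-mu)), computed as ||z|| where L z = x - mu."""
    z = []
    for i in range(len(mu)):
        z.append((x[i] - mu[i] - sum(L[i][k] * z[k] for k in range(i))) / L[i][i])
    return math.sqrt(sum(v * v for v in z))


def oversample_same_distance(X, n_new, rng=None):
    """Hypothetical MDO-style over-sampler (an assumption, not the paper's code):
    each synthetic point lies at the same Mahalanobis distance from the class
    mean as a randomly chosen real minority example."""
    if rng is None:
        rng = random.Random(0)
    mu = mean_vector(X)
    L = cholesky(covariance(X, mu))
    synthetic = []
    for _ in range(n_new):
        r = mahalanobis(rng.choice(X), mu, L)
        u = [rng.gauss(0.0, 1.0) for _ in mu]
        norm = math.sqrt(sum(v * v for v in u))
        z = [r * v / norm for v in u]  # uniform random direction, radius r
        # Map the sphere onto the class covariance ellipsoid: x = mu + L z.
        synthetic.append([mu[i] + sum(L[i][k] * z[k] for k in range(i + 1))
                          for i in range(len(mu))])
    return synthetic
```

Because each synthetic point sits at the same Mahalanobis distance as some real minority example, the new samples follow the class's covariance structure instead of lying on the line segments between a sample and one of its nearest neighbours, which is where SMOTE interpolates.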
The empirical results and theoretical analyses show that MDOBoost outperforms popular class decomposition and over-sampling techniques in terms of MAUC, G-mean, and minority class recall.
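The three evaluation measures named above can be computed as in the following hedged Python sketch. It uses the usual multi-class definitions, since the abstract does not spell out the exact variants or averaging protocol used in the paper. Minority class recall is the recall of the chosen minority label. G-mean is the geometric mean of the per-class recalls. MAUC follows Hand and Till (2001): the average over all c(c-1)/2 class pairs of (A(i|j) + A(j|i))/2, where A(i|j) is the probability that a randomly drawn class-i example receives a higher estimated P(i|x) than a randomly drawn class-j example. The function names and the dict-of-probabilities input format are illustrative assumptions.

```python
import math
from itertools import combinations


def per_class_recall(y_true, y_pred, classes):
    """Recall of each class: fraction of its examples predicted correctly."""
    recalls = {}
    for c in classes:
        idx = [k for k, t in enumerate(y_true) if t == c]
        recalls[c] = sum(1 for k in idx if y_pred[k] == c) / len(idx)
    return recalls


def g_mean(y_true, y_pred, classes):
    """Geometric mean of the per-class recalls (0 if any class is never recalled)."""
    r = per_class_recall(y_true, y_pred, classes)
    return math.prod(r.values()) ** (1.0 / len(classes))


def pairwise_auc(pos_scores, neg_scores):
    """P(random positive outscores random negative); ties count 1/2."""
    wins = 0.0
    for sp in pos_scores:
        for sn in neg_scores:
            wins += 1.0 if sp > sn else (0.5 if sp == sn else 0.0)
    return wins / (len(pos_scores) * len(neg_scores))


def mauc(y_true, probs, classes):
    """Hand-Till MAUC; probs[k][c] is the estimated P(class c | example k)."""
    total = 0.0
    for i, j in combinations(classes, 2):
        pi = [p for p, t in zip(probs, y_true) if t == i]
        pj = [p for p, t in zip(probs, y_true) if t == j]
        a_i_given_j = pairwise_auc([p[i] for p in pi], [p[i] for p in pj])
        a_j_given_i = pairwise_auc([p[j] for p in pj], [p[j] for p in pi])
        total += (a_i_given_j + a_j_given_i) / 2.0
    c = len(classes)
    return 2.0 * total / (c * (c - 1))
```

Unlike overall accuracy, all three measures are dominated by how well the smallest classes are handled: a single class with zero recall drives G-mean to 0, and MAUC weights every class pair equally regardless of class size.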

Additional information

Communicated by E. Lughofer.

About this article

Cite this article

Abdi, L., Hashemi, S. To combat multi-class imbalanced problems by means of over-sampling and boosting techniques. Soft Comput 19, 3369–3385 (2015). https://doi.org/10.1007/s00500-014-1291-z

DOI: https://doi.org/10.1007/s00500-014-1291-z