Abstract

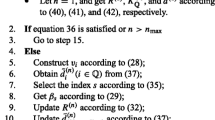

Prefitting and backfitting are commonly used to sparsify the full solution of naive kernel minimum squared error (KMSE). Both are forward learning methods, however, and forward learning is known to find only suboptimal solutions. To further improve real-time performance at test time, this paper incorporates a backward learning algorithm into the forward learning algorithm and proposes two improved schemes, one based on prefitting and one based on backfitting. Compared with the original versions, the two improved algorithms select fewer significant nodes, which implies faster testing. Because backward learning is added to forward learning, the proposed algorithms incur a higher training cost. Experiments on benchmark data sets and a robot arm example are reported to demonstrate the improved effectiveness.
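The general idea of pairing a forward greedy step with a backward pruning step can be sketched in a few lines. The code below is only an illustration and is not the paper's two algorithms: the function names (`rbf`, `solve`, `fit`, `forward_backward`), the small ridge term, the tolerance, and the pruning rule (drop a node when its removal costs less than half of the latest forward gain) are all assumptions chosen for the example. The forward step refits all coefficients after each addition, in the spirit of backfitting.

```python
import math

def rbf(a, b, gamma=5.0):
    """Gaussian (RBF) kernel between two points given as lists of floats."""
    return math.exp(-gamma * sum((x - y) ** 2 for x, y in zip(a, b)))

def solve(A, b):
    """Solve A x = b by Gaussian elimination with partial pivoting."""
    n = len(b)
    M = [row[:] + [bi] for row, bi in zip(A, b)]
    for c in range(n):
        p = max(range(c, n), key=lambda r: abs(M[r][c]))
        M[c], M[p] = M[p], M[c]
        for r in range(c + 1, n):
            f = M[r][c] / M[c][c]
            for k in range(c, n + 1):
                M[r][k] -= f * M[c][k]
    x = [0.0] * n
    for r in range(n - 1, -1, -1):
        x[r] = (M[r][n] - sum(M[r][k] * x[k] for k in range(r + 1, n))) / M[r][r]
    return x

def fit(K, y, S, ridge=1e-8):
    """Least-squares fit of y on kernel columns S; returns (alpha, squared error)."""
    if not S:
        return [], sum(v * v for v in y)
    n = len(y)
    # Normal equations restricted to the selected columns (ridge is an assumption).
    G = [[sum(K[i][a] * K[i][b] for i in range(n)) + (ridge if a == b else 0.0)
          for b in S] for a in S]
    r = [sum(K[i][a] * y[i] for i in range(n)) for a in S]
    alpha = solve(G, r)
    sse = sum((y[i] - sum(w * K[i][a] for w, a in zip(alpha, S))) ** 2
              for i in range(n))
    return alpha, sse

def forward_backward(K, y, max_nodes, tol=1e-6):
    """Greedy node selection: add the best node, then try to prune one node."""
    n = len(y)
    S = []
    err = fit(K, y, S)[1]
    while len(S) < min(max_nodes, n):
        # Forward step: add the node that gives the lowest error after refitting.
        cands = [j for j in range(n) if j not in S]
        best = min(cands, key=lambda j: fit(K, y, S + [j])[1])
        new_err = fit(K, y, S + [best])[1]
        gain = err - new_err
        if gain <= tol:
            break
        S.append(best)
        err = new_err
        # Backward step (illustrative rule): remove the least useful node if
        # doing so costs less than half of the gain just obtained.
        if len(S) > 1:
            drop = min(S, key=lambda j: fit(K, y, [s for s in S if s != j])[1])
            pruned = [s for s in S if s != drop]
            pruned_err = fit(K, y, pruned)[1]
            if pruned_err - err < 0.5 * gain:
                S, err = pruned, pruned_err
    return S, fit(K, y, S)[0], err

# Toy usage: the target is exactly one kernel column, so one node suffices.
X = [[i / 10.0] for i in range(12)]
K = [[rbf(a, b) for b in X] for a in X]
y = [row[4] for row in K]
S, alpha, err = forward_backward(K, y, max_nodes=3)
print(S, alpha, err)
```

At test time, a prediction needs one kernel evaluation per selected node, so a shorter `S` translates directly into faster testing. The extra refits in the backward step are the source of the added training cost in this sketch.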

Acknowledgments

This research was supported by the National Natural Science Foundation of China under Grant number 51006052. The authors would like to thank the anonymous reviewers and editors for their valuable suggestions that greatly improved the quality of this presentation.

Ethics declarations

Conflict of interest

We declare that we do not have any commercial or associative interest that represents a conflict of interest in connection with the work submitted.

Additional information

Communicated by V. Loia.

About this article

Cite this article

Zhao, YP., Liang, D. & Ji, Z. A method of combining forward with backward greedy algorithms for sparse approximation to KMSE. Soft Comput 21, 2367–2383 (2017). https://doi.org/10.1007/s00500-015-1947-3

DOI: https://doi.org/10.1007/s00500-015-1947-3