Abstract

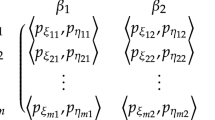

In a traditional fuzzy matrix game, the payoffs of a player for a given pair of strategies are evaluated on their own, without reference to the payoffs of the other player. Such payoffs can be called self-evaluated payoffs. According to regret theory, however, decision makers may care more about what they might have obtained than about what they actually obtain. A player in a matrix game may therefore pay more attention to the other player's payoffs than to his/her own. In this paper, motivated by the pairwise comparison matrix, we allow the players to compare their own payoffs with those of the other player and to provide relative payoffs, which we call cross-evaluated payoffs. Moreover, the players' preferences over the cross-evaluated payoffs are usually distributed asymmetrically, in line with the law of diminishing utility. The cross-evaluated payoffs can therefore be expressed with asymmetrically distributed information, namely interval-valued intuitionistic multiplicative numbers. Comparison laws are developed to compare the cross-evaluated payoffs of different players, and aggregation operators are introduced to obtain the players' expected cross-evaluated payoffs. Based on the minimax and maximin principles, several mathematical programming models are established to solve a matrix game with cross-evaluated payoffs. It is proved that the solution of such a game can be obtained by solving a pair of primal–dual linear-programming models, which avoids some unreasonable results. Finally, two examples illustrate that the proposed method is based on the cross-evaluated payoffs of the players and directly provides the priority degree to which one player is preferred over the other in winning the game.

References

Atanassov KT (1986) Intuitionistic fuzzy sets. Fuzzy Sets Syst 20:87–96

Atanassov KT (1995) Ideas for intuitionistic fuzzy equations, inequalities and optimization. Notes Intuit Fuzzy Set 1:17–24

Atanassov KT, Gargov G (1989) Interval-valued intuitionistic fuzzy sets. Fuzzy Sets Syst 31:343–349

Aubin JP (1979) Mathematical methods of game and economic theory. North-Holland, Amsterdam

Aubin JP (1981) Cooperative fuzzy game. Math Oper Res 6:1–13

Bector CR, Chandra S (2002) On duality in linear programming under fuzzy environment. Fuzzy Sets Syst 125:317–325

Bector CR, Chandra S (2005) Fuzzy mathematical programming and fuzzy matrix games. Springer, Berlin

Bector CR, Chandra S, Vijay V (2004a) Matrix games with fuzzy goals and fuzzy linear programming duality. Fuzzy Optim Decis Mak 3:255–269

Bector CR, Chandra S, Vijay V (2004b) Duality in linear programming with fuzzy parameters and matrix games with fuzzy payoffs. Fuzzy Sets Syst 146:253–269

Bell DE (1982) Regret in decision making under uncertainty. Oper Res 30:961–981

Butnariu D (1978) Fuzzy games: a description of the concept. Fuzzy Sets Syst 1:181–192

Butnariu D (1980) Stability and Shapley value for n-person fuzzy game. Fuzzy Sets Syst 4:63–72

Campos L (1989) Fuzzy linear programming models to solve fuzzy matrix games. Fuzzy Sets Syst 32:275–289

Chakeri A, Sheikholeslam F (2013) Fuzzy Nash equilibriums in crisp and fuzzy games. IEEE Trans Fuzzy Syst 21:171–176

Degani R, Bortolan G (1988) The problem of linguistic approximation in clinical decision making. Int J Approx Reason 2:143–162

Deng W, Zhao HM, Yang XH, Xiong JX, Sun M, Li B (2017a) Study on an improved adaptive PSO algorithm for solving multi-objective gate assignment. Appl Soft Comput 59:288–302

Deng W, Zhao HM, Zou L, Li GY, Yang XH, Wu DQ (2017b) A novel collaborative optimization algorithm in solving complex optimization problems. Soft Comput 21(15):4387–4398

Deng W, Yao R, Zhao H, Yang X, Li G (2017c) A novel intelligent diagnosis method using optimal LS-SVM with improved PSO algorithm. Soft Comput 2–4:1–18

Harsanyi JC (1955) Cardinal welfare, individualistic ethics, and interpersonal comparisons of utility. J Polit Econ 63:309–321

Herrera F, Herrera-Viedma E, Martínez L (2008) A fuzzy linguistic methodology to deal with unbalanced linguistic term sets. IEEE Trans Fuzzy Syst 16:354–370

Ishibuchi H, Tanaka H (1990) Multiobjective programming in optimization of the interval objective function. Eur J Oper Res 48:219–225

Li DF (2010) Mathematical-programming approach to matrix games with payoffs represented by Atanassov’s interval-valued intuitionistic fuzzy sets. IEEE Trans Fuzzy Syst 18:1112–1128

Li DF (2011) Linear programming approach to solve interval-valued matrix games. Omega 39:655–666

Li DF, Nan JX (2009) A nonlinear programming approach to matrix games with payoffs of Atanassov’s intuitionistic fuzzy sets. Int J Uncertain Fuzziness Knowl-Based Syst 17:585–607

Mares M (2001) Fuzzy cooperative games. Physica-Verlag, Heidelberg

Nan JX, Li DF (2013) Linear programming approach to matrix games with intuitionistic fuzzy goals. Int J Comput Intell Syst 6:186–197

Nishizaki I, Sakawa M (2000) Fuzzy cooperative games arising from linear production programming problems with fuzzy parameters. Fuzzy Sets Syst 114:11–21

Owen G (1982) Game theory, 2nd edn. Academic, New York

Pap E, Ralević N (1998) Pseudo-Laplace transform. Nonlinear Anal 33:533–550

Saaty TL (1980) Multicriteria decision making: the analytic hierarchy process. McGraw-Hill, New York

Sakawa M, Nishizaki I (1994) Max-min solutions for fuzzy multiobjective matrix games. Fuzzy Sets Syst 61:265–275

Sengupta A, Pal TK (2000) Theory and methodology on comparing interval numbers. Eur J Oper Res 127:28–43

Tong S (1994) Interval number and fuzzy number linear programming. Fuzzy Sets Syst 66:301–306

Torra V (2000) Knowledge based validation: synthesis of diagnoses through synthesis of relations. Fuzzy Sets Syst 113:167–176

Vijay V, Chandra S, Bector CR (2005) Matrix game with fuzzy goals and fuzzy payoffs. Omega 33:425–429

Xia MM, Xu ZS, Liao HC (2013) Preference relations based on intuitionistic multiplicative information. IEEE Trans Fuzzy Syst 21:113–133

Xu ZS (2007a) Methods for aggregating interval-valued intuitionistic fuzzy information and their application to decision making. Control Decis 22:215–219

Xu ZS (2007b) Intuitionistic fuzzy aggregation operators. IEEE Trans Fuzzy Syst 15:1179–1187

Yu DJ (2015) Group decision making under interval-valued multiplicative intuitionistic fuzzy environment based on Archimedean t-conorm and t-norm. Int J Intell Syst 30:590–616

Zadeh LA (1965) Fuzzy sets and systems. In: Proceedings of the symposium on systems theory. Polytechnic Institute of Brooklyn, New York, pp 29–37

Zhao H, Sun M, Deng W, Yang X (2017) A new feature extraction method based on EEMD and multi-scale fuzzy entropy for motor bearing. Entropy 19(1):14

Zhao HM, Yao R, Xu L, Li GY, Deng W (2018) Study on a novel fault damage degree identification method using high-order differential mathematical morphology gradient spectrum entropy. Entropy 20(9):682

Zhou W, Xu ZS (2016a) Asymmetric hesitant fuzzy sigmoid preference relations in the analytic hierarchy process. Inf Sci 358–359:191–207

Zhou W, Xu ZS (2016b) Asymmetric fuzzy preference relations based on the generalized sigmoid scale and their application in decision making involving risk appetites. IEEE Trans Fuzzy Syst 24:741–756

Funding

This work was funded by the National Natural Science Foundation of China (Grant Numbers: 71501010, 18ZDA086, 71661167009).

Ethics declarations

Conflict of interest

The authors declare that they have no conflict of interest.

Ethical approval

This article does not contain any studies with human participants or animals performed by any of the authors.

Additional information

Communicated by V. Loia.

Publisher's Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Appendices

Proof of Theorem 1

The sufficient condition is obviously true. Next, if \({\tilde{\alpha }} _1 = {\tilde{\alpha }} _2 \), then Definition 4 implies that \(SF({\tilde{\alpha }} _1 ) = SF({\tilde{\alpha }} _2 ),AF({\tilde{\alpha }} _1 ) = AF({\tilde{\alpha }} _2 ),UI({\tilde{\alpha }} _1 ) = UI({\tilde{\alpha }} _2 )\) and \(HI({\tilde{\alpha }} _1 ) = HI({\tilde{\alpha }} _2 )\). From the definitions of \(SF( \cdot ),AF( \cdot ),UI( \cdot )\) and \(HI( \cdot )\), we have

and

By solving these four equations, we have \(\rho _{{\tilde{\alpha }} _1 }^ - = \rho _{{\tilde{\alpha }} _2 }^ - ,\rho _{{\tilde{\alpha }} _1 }^ + = \rho _{{\tilde{\alpha }} _2 }^ + ,\sigma _{{\tilde{\alpha }} _1 }^ - = \sigma _{{\tilde{\alpha }} _2 }^ -\) and \(\sigma _{{\tilde{\alpha }} _1 }^ + = \sigma _{{\tilde{\alpha }} _2 }^ +\). \(\square \)

Proof of Theorem 2

According to Definition 6, if \({\tilde{\alpha }} _1 \succ _P {\tilde{\alpha }} _2\), then \(\rho _{{\tilde{\alpha }} _1 }^ - \geqslant \rho _{{\tilde{\alpha }} _2 }^ - ,\rho _{{\tilde{\alpha }} _1 }^ + \geqslant \rho _{{\tilde{\alpha }} _2 }^ + ,\sigma _{{\tilde{\alpha }} _1 }^ - \leqslant \sigma _{{\tilde{\alpha }} _2 }^ -\) and \(\sigma _{{\tilde{\alpha }} _1 }^ + \leqslant \sigma _{{\tilde{\alpha }} _2 }^ +\), and \(SF({\tilde{\alpha }} _1 ) \geqslant SF({\tilde{\alpha }} _2 )\). Two cases will be discussed:

If \(SF({\tilde{\alpha }} _1 ) > SF({\tilde{\alpha }} _2 )\), then \({\tilde{\alpha }} _1 \succ {\tilde{\alpha }} _2 \);

If \(SF({\tilde{\alpha }} _1 ) = SF({\tilde{\alpha }} _2 )\), then \(\sqrt{\frac{{\rho _{{\tilde{\alpha }} _1 }^ - \rho _{{\tilde{\alpha }} _1 }^ + }}{{\sigma _{{\tilde{\alpha }} _1 }^ - \sigma _{{\tilde{\alpha }} _1 }^ + }}} = \sqrt{\frac{{\rho _{{\tilde{\alpha }} _2 }^ - \rho _{{\tilde{\alpha }} _2 }^ + }}{{\sigma _{{\tilde{\alpha }} _2 }^ - \sigma _{{\tilde{\alpha }} _2 }^ + }}}\), and

which reduces to two cases:

If \(AF({\tilde{\alpha }} _1 ) > AF({\tilde{\alpha }} _2 )\), then \({\tilde{\alpha }} _1 \succ {\tilde{\alpha }} _2\);

If \(AF({\tilde{\alpha }} _1 ) = AF({\tilde{\alpha }} _2 )\), then \(\sqrt{\frac{{\rho _{{\tilde{\alpha }} _1 }^ - \rho _{{\tilde{\alpha }} _1 }^ + }}{{\sigma _{{\tilde{\alpha }} _1 }^ - \sigma _{{\tilde{\alpha }} _1 }^ + }}} = \sqrt{\frac{{\rho _{{\tilde{\alpha }} _2 }^ - \rho _{{\tilde{\alpha }} _2 }^ + }}{{\sigma _{{\tilde{\alpha }} _2 }^ - \sigma _{{\tilde{\alpha }} _2 }^ + }}}\), and \(\frac{{\rho _{{\tilde{\alpha }} _1 }^ - \rho _{{\tilde{\alpha }} _1 }^ + }}{{\rho _{{\tilde{\alpha }} _2 }^ - \rho _{{\tilde{\alpha }} _2 }^ + }} = 1\) , \(\frac{{\sigma _{{\tilde{\alpha }} _1 }^ - \sigma _{{\tilde{\alpha }} _1 }^ + }}{{\sigma _{{\tilde{\alpha }} _2 }^ - \sigma _{{\tilde{\alpha }} _2 }^ + }} = 1\) , that is, \(\frac{{\rho _{{\tilde{\alpha }} _1 }^ - }}{{\rho _{{\tilde{\alpha }} _2 }^ - }} = \frac{{\rho _{{\tilde{\alpha }} _2 }^ + }}{{\rho _{{\tilde{\alpha }} _1 }^ + }}\), \(\frac{{\sigma _{{\tilde{\alpha }} _1 }^ - }}{{\sigma _{{\tilde{\alpha }} _2 }^ - }} = \frac{{\sigma _{{\tilde{\alpha }} _2 }^ + }}{{\sigma _{{\tilde{\alpha }} _1 }^ + }}\). 
Since \(\rho _{{\tilde{\alpha }} _1 }^ - \geqslant \rho _{{\tilde{\alpha }} _2 }^ - ,\rho _{{\tilde{\alpha }} _1 }^ + \geqslant \rho _{{\tilde{\alpha }} _2 }^ + ,\sigma _{{\tilde{\alpha }} _1 }^ - \leqslant \sigma _{{\tilde{\alpha }} _2 }^ -\) and \(\sigma _{{\tilde{\alpha }} _1 }^ + \leqslant \sigma _{{\tilde{\alpha }} _2 }^ +\), we have \(\frac{{\rho _{{\tilde{\alpha }} _1 }^ - }}{{\rho _{{\tilde{\alpha }} _2 }^ - }} \geqslant 1,\frac{{\rho _{{\tilde{\alpha }} _2 }^ + }}{{\rho _{{\tilde{\alpha }} _1 }^ + }} \leqslant 1,\frac{{\sigma _{{\tilde{\alpha }} _1 }^ - }}{{\sigma _{{\tilde{\alpha }} _2 }^ - }} \leqslant 1,\frac{{\sigma _{{\tilde{\alpha }} _2 }^ + }}{{\sigma _{{\tilde{\alpha }} _1 }^ + }} \geqslant 1\), and then we have \(\frac{{\rho _{{\tilde{\alpha }} _1 }^ - }}{{\rho _{{\tilde{\alpha }} _2 }^ - }} = \frac{{\rho _{{\tilde{\alpha }} _2 }^ + }}{{\rho _{{\tilde{\alpha }} _1 }^ + }} = 1,\frac{{\sigma _{{\tilde{\alpha }} _1 }^ - }}{{\sigma _{{\tilde{\alpha }} _2 }^ - }} = \frac{{\sigma _{{\tilde{\alpha }} _2 }^ + }}{{\sigma _{{\tilde{\alpha }} _1 }^ + }} = 1\); therefore, we have \(\rho _{{\tilde{\alpha }} _1 }^ - = \rho _{{\tilde{\alpha }} _2 }^ - ,\rho _{{\tilde{\alpha }} _1 }^ + = \rho _{{\tilde{\alpha }} _2 }^ + ,\sigma _{{\tilde{\alpha }} _1 }^ - = \sigma _{{\tilde{\alpha }} _2 }^ -\) and \(\sigma _{{\tilde{\alpha }} _1 }^ + = \sigma _{{\tilde{\alpha }} _2 }^ +\); hence, \({\tilde{\alpha }} _1 = {\tilde{\alpha }} _2\). That is to say, if \({\tilde{\alpha }} _1 \succ _P {\tilde{\alpha }} _2\), then we have \({\tilde{\alpha }} _1 \succ {\tilde{\alpha }} _2\).

\(\square \)
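
The relationship between the componentwise order \(\succ _P\) and the score-based order used in this proof can be illustrated with a minimal Python sketch. The four inequalities in `dominates` are the ones stated in the proof. The formula in `score` is only an assumption inferred from the square-root expression that appears when \(SF({\tilde{\alpha }} _1 ) = SF({\tilde{\alpha }} _2 )\); the exact definition of \(SF( \cdot )\) is not reproduced in this appendix. The class name `IVIMN` and the sample values are hypothetical.

```python
import math
from typing import NamedTuple


class IVIMN(NamedTuple):
    """Interval-valued intuitionistic multiplicative number ([rho-, rho+], [sigma-, sigma+])."""
    rho_lo: float
    rho_hi: float
    sigma_lo: float
    sigma_hi: float


def dominates(a: IVIMN, b: IVIMN) -> bool:
    """Componentwise order a >_P b, using the four inequalities stated in the proof."""
    return (a.rho_lo >= b.rho_lo and a.rho_hi >= b.rho_hi
            and a.sigma_lo <= b.sigma_lo and a.sigma_hi <= b.sigma_hi)


def score(a: IVIMN) -> float:
    """ASSUMED score function, inferred from the square-root expression in the proof."""
    return math.sqrt((a.rho_lo * a.rho_hi) / (a.sigma_lo * a.sigma_hi))


# Hypothetical sample values, chosen so that rho+ * sigma+ <= 1 for both numbers.
a1 = IVIMN(2.0, 3.0, 0.25, 1 / 3)
a2 = IVIMN(1.0, 2.0, 1 / 3, 0.5)

# Componentwise dominance forces the assumed score to be at least as large.
assert dominates(a1, a2)
assert score(a1) >= score(a2)
```

Under this assumed score, larger \(\rho \) components and smaller \(\sigma \) components can only increase the value, which matches the first step of the proof.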

Proof of Theorem 3

For any \(x \in X,y \in Y\), we have \(\mathop {\max }\nolimits _{x \in X} \{ \varphi ^ - \} \geqslant \varphi ^ - \geqslant \mathop {\min }\nolimits _{y \in Y} \{ \varphi ^ - \}\); hence, \(\mathop {\min }\nolimits _{y \in Y} \mathop {\max }\nolimits _{x \in X} \{ \varphi ^ - \} \geqslant \mathop {\min }\nolimits _{y \in Y} \{ \varphi ^ - \}\). Therefore, \(\mathop {\min }\nolimits _{y \in Y} \mathop {\max }\nolimits _{x \in X} \{ \varphi ^ - \} \geqslant \mathop {\max }\nolimits _{x \in X} \mathop {\min }\nolimits _{y \in Y} \{ \varphi ^ - \}\). Similarly, it also follows that \(\mathop {\min }\nolimits _{y \in Y} \mathop {\max }\nolimits _{x \in X} \{ \varphi ^ + \} \geqslant \mathop {\max }\nolimits _{x \in X} \mathop {\min }\nolimits _{y \in Y} \{ \varphi ^ + \}\). According to Definitions 5 and 6, we have

On the other hand, for any \(x \in X\) and \(y \in Y\), we have \(\mathop {\max }\nolimits _{y \in Y} \{ \phi _{xy}^ - \} \geqslant \phi _{xy}^ - \geqslant \mathop {\min }\nolimits _{x \in X} \{ \phi _{xy}^ - \}\); hence, \(\mathop {\max }\nolimits _{y \in Y} \{ \phi _{xy}^ - \} \geqslant \mathop {\max }\nolimits _{y \in Y} \mathop {\min }\nolimits _{x \in X} \{ \phi _{xy}^ - \}\). Therefore, \(\mathop {\min }\nolimits _{x \in X} \mathop {\max }\nolimits _{y \in Y} \{ \phi _{xy}^ - \} \geqslant \mathop {\max }\nolimits _{y \in Y} \mathop {\min }\nolimits _{x \in X} \{ \phi _{xy}^ - \}\). Similarly, it follows that \(\mathop {\min }\nolimits _{x \in X} \mathop {\max }\nolimits _{y \in Y} \{ \phi _{xy}^ + \} \geqslant \mathop {\max }\nolimits _{y \in Y} \mathop {\min }\nolimits _{x \in X} \{ \phi _{xy}^ + \}\). According to Definitions 5 and 6, we have

Combining Eqs. (3) and (4), we have

i.e., \(\vartheta ^* \succ _P \theta ^*\). \(\square \)
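
The inequality at the core of this proof (a minimum of maxima is never smaller than a maximum of minima) can be checked numerically in the simplest setting. The sketch below is only an analogue: it uses a crisp real-valued matrix and pure strategies, not the interval-valued intuitionistic multiplicative payoffs and mixed strategies of the theorem. The matrix `A` and the function names are hypothetical.

```python
def maximin(payoff):
    """Largest row minimum: the value the row player can guarantee."""
    return max(min(row) for row in payoff)


def minimax(payoff):
    """Smallest column maximum: the value the column player can hold the row player to."""
    return min(max(column) for column in zip(*payoff))


# Hypothetical crisp payoff matrix (not taken from the paper).
A = [[3, -1],
     [-2, 4]]

lower = maximin(A)  # row minima are -1 and -2
upper = minimax(A)  # column maxima are 3 and 4

# Weak minimax inequality: max-min never exceeds min-max.
assert lower <= upper
```

For this matrix the two values differ, so no pure-strategy saddle point exists and the gap can only be closed with mixed strategies.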

Proof of Theorem 4

If \(\theta ^* = \vartheta ^*\), then \(\mathop {\max }\nolimits _{x \in X} \mathop {\min }\nolimits _{y \in Y} \{ x^{\mathrm{T}} Ay\} = \mathop {\min }\nolimits _{y \in Y} \mathop {\max }\nolimits _{x \in X} \{ x^\mathrm{T} Ay\}\), which shows there exist \((x^* ,y^* )(x^* \in X,y^* \in Y)\) such that \(\mathop {\max }\nolimits _{x \in X} \mathop {\min }\nolimits _{y \in Y} \{ x^{\mathrm{T}} Ay\} =\mathop {\min }\nolimits _{y \in Y} \{ x^{*\mathrm T} Ay\}\) and \(\mathop {\min }\nolimits _{y\in Y}\mathop {\max }\nolimits _{x\in X}\{x^\mathrm{T}Ay\}=\mathop {\max }\nolimits _{x\in X}\{x^{\mathrm{T}}Ay^*\}\); therefore, \(\mathop {\min }\nolimits _{y\in Y}\{x^{*\mathrm T}Ay\}=\mathop {\max }\nolimits _{x\in X}\{x^{\mathrm{T}}Ay^*\}\). Since \(x^{*\mathrm T}Ay^*\succ _P\mathop {\min }\nolimits _{y\in Y}\{x^{*\mathrm T}Ay\}\) and \(\mathop {\max }\nolimits _{x\in X}\{x^{\mathrm{T}} Ay^*\}\succ _P x^{*\mathrm T}Ay^*\), we have \(\mathop {\max }\nolimits _{x \in X}\{ x^\mathrm{T}Ay^*\}=x^{*\mathrm T} Ay^*=\mathop {\min }\nolimits _{y\in Y}\{x^{*\mathrm T}Ay\}\), which indicates that \(x^{*\mathrm{T}}Ay\succ _P x^{*\mathrm{T}}Ay^*\succ _P x^{\mathrm{T}}Ay^*\), and \((x^*,y^*,x^{*\mathrm{T}} Ay^*)\) is the solution of matrix game A.

Conversely, if \((x^* ,y^* ,x^{*\mathrm{T}} Ay^* )\) is the solution of matrix game A, so that \(x^{*\mathrm{T}}Ay\succ _P x^{*\mathrm{T}} Ay^*\succ _P x^{\mathrm{T}} Ay^*\) for any \(x \in X\) and \(y \in Y\), then we have \(\mathop {\max }\nolimits _{x\in X}\mathop {\min }\nolimits _{y\in Y}\{x^{\mathrm{T}}Ay\}\succ _P x^{*\mathrm{T}}Ay^{*}\succ _P\mathop {\min }\nolimits _{y\in Y}\mathop {\max }\nolimits _{x\in X}\{x^{\mathrm{T}}Ay\}\). Therefore, \(\mathop {\max }\nolimits _{x\in X}\mathop {\min }\nolimits _{y\in Y}\{x^\mathrm{T} Ay\}\succ _P \mathop {\min }\nolimits _{y\in Y}\mathop {\max }\nolimits _{x\in X}\{ x^{\mathrm{T}}Ay\}\). According to Theorem 3, we have \(\mathop {\min }\nolimits _{y\in Y}\mathop {\max }\nolimits _{x\in X}\{x^{\mathrm{T}}Ay\}\succ _P\mathop {\max }\nolimits _{x\in X}\mathop {\min }\nolimits _{y\in Y}\{x^\mathrm{T}Ay\}\) and then \(\mathop {\max }\nolimits _{x\in X}\mathop {\min }\nolimits _{y\in Y}\{x^\mathrm{T}Ay\}=\mathop {\min }\nolimits _{y\in Y}\mathop {\max }\nolimits _{x\in X}\{x^{\mathrm{T}}Ay\}\), which completes the proof. \(\square \)
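
The equivalence proved here (a saddle point exists exactly when the max-min and min-max values coincide) has a familiar crisp counterpart, sketched below for pure strategies only. The matrices and the function name `pure_saddle_points` are hypothetical and serve purely as an illustration; the theorem itself concerns mixed strategies and the order \(\succ _P\).

```python
def pure_saddle_points(payoff):
    """Return all (row, column) pairs whose entry is both a row minimum and a column maximum."""
    points = []
    for i, row in enumerate(payoff):
        for j, value in enumerate(row):
            column = [r[j] for r in payoff]
            if value == min(row) and value == max(column):
                points.append((i, j))
    return points


# Hypothetical crisp matrix with a saddle point at row 0, column 1.
B = [[4, 2, 5],
     [3, 1, 6]]

saddles = pure_saddle_points(B)
maximin_value = max(min(row) for row in B)
minimax_value = min(max(column) for column in zip(*B))

# A saddle point exists, and the two values agree with the saddle entry.
assert saddles == [(0, 1)]
assert maximin_value == minimax_value == B[0][1]
```

A matrix such as `[[3, -1], [-2, 4]]` returns an empty list, and its max-min and min-max values differ accordingly.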

Proof of Theorem 5

For any \(\lambda \in [0,1]\), obviously, (MOD-5) and (MOD-10) are a pair of dual–primal linear-programming models corresponding to the matrix game with the payoff matrix

According to the minimax theorem (Owen 1982), every matrix game has a solution, which can be obtained by formulating a pair of primal–dual linear-programming models ((MOD-5) and (MOD-10)). Suppose the solution of matrix game D is denoted by \((x^* ,y^* ,v^* )\). Then \(x^* \) and \(y^*\) are the optimal solutions of (MOD-5) and (MOD-10), respectively, and \(v^*\) is the common optimal value of (MOD-5) and (MOD-10), that is, \(v^* = \tau ^* = \varsigma ^*\). \(\square \)
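
The primal–dual argument can be illustrated with a small optimality certificate. The sketch below does not reproduce (MOD-5) or (MOD-10); it assumes a hypothetical crisp \(2 \times 2\) matrix `D` and hand-computed mixed strategies, and checks that the value guaranteed to the row player equals the value conceded by the column player. Equality of the two values certifies that both strategies are optimal. All names and numbers are illustrative.

```python
from fractions import Fraction as F


def guaranteed_to_row_player(D, x):
    """Primal objective: worst-case expected payoff of mixed strategy x over all columns."""
    return min(sum(x[i] * D[i][j] for i in range(len(D))) for j in range(len(D[0])))


def conceded_by_column_player(D, y):
    """Dual objective: largest expected payoff any row can achieve against mixed strategy y."""
    return max(sum(D[i][j] * y[j] for j in range(len(y))) for i in range(len(D)))


# Hypothetical crisp payoff matrix and candidate strategies (exact rational arithmetic).
D = [[F(3), F(-1)],
     [F(-2), F(4)]]
x_star = [F(3, 5), F(2, 5)]
y_star = [F(1, 2), F(1, 2)]

tau = guaranteed_to_row_player(D, x_star)
varsigma = conceded_by_column_player(D, y_star)

# Equal primal and dual values certify optimality of both strategies.
assert tau == varsigma == 1
```

Any other strategy pair gives a primal value no larger than the dual value, which is the weak-duality half of the argument.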

Proof of Theorem 6

Since \(\tau ^ - = \lambda \ln (h(\rho ^ - )) + (1 - \lambda )\ln (g(\sigma ^ + ))\) and \(\tau ^ + = \lambda \ln (h(\rho ^ + )) + (1 - \lambda )\ln (g(\sigma ^ - ))\), we have \(\tau = \tau ^ - + \tau ^ + = \lambda \ln (h(\rho ^ - )) + (1 - \lambda )\ln (g(\sigma ^ + )) + \lambda \ln (h(\rho ^ + )) + (1 - \lambda )\ln (g(\sigma ^ - ))\), where \(0 \leqslant \rho ^ - \leqslant \rho ^ + < 1\), and \(0 \leqslant \sigma ^ - \leqslant \sigma ^ + < 1\). Then, the partial derivative of \(\tau \) with respect to \(\lambda \) is computed as follows:

Since \(\rho ^ - \sigma ^ - \leqslant \rho ^ + \sigma ^ + \leqslant 1\), we have \(\rho ^ - \leqslant \frac{1}{{\sigma ^ - }}\), \(\rho ^ + \leqslant \frac{1}{{\sigma ^ + }}\),

and

from which it follows that \(\frac{{\partial \tau }}{{\partial \lambda }} \leqslant 0\). Therefore, \(\tau \) is a monotonically decreasing function of \(\lambda \in [0,1]\).

Similarly, since \(\varsigma ^ - = \lambda \ln (h(\mu ^ - )) + (1 - \lambda )\ln (g(\nu ^ + ))\) and \(\varsigma ^ + = \lambda \ln (h(\mu ^ + )) + (1 - \lambda )\ln (g(\nu ^ - ))\), we have \(\varsigma = \varsigma ^ - + \varsigma ^ + = \lambda \ln (h(\mu ^ - )) + (1 - \lambda )\ln (g(\nu ^ + )) + \lambda \ln (h(\mu ^ + )) + (1 - \lambda )\ln (g(\nu ^ - ))\), where \(0 \leqslant \mu ^ - \leqslant \mu ^ + < 1\), and \(0 \leqslant \nu ^ - \leqslant \nu ^ + < 1\). Then, the partial derivative of \(\varsigma \) with respect to \(\lambda \) is computed as follows:

Since \(\mu ^ - \nu ^ - \leqslant \mu ^ + \nu ^ + \leqslant 1\), we have \(\mu ^ - \leqslant \frac{1}{{\nu ^ - }}\), \(\mu ^ + \leqslant \frac{1}{{\nu ^ + }}\),

and

from which it follows that \(\frac{{\partial \varsigma }}{{\partial \lambda }} \leqslant 0\). Therefore, \(\varsigma \) is a monotonically decreasing function of \(\lambda \in [0,1]\). \(\square \)
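
As a sketch of the differentiation step, assume that \(h(\rho ^ \pm )\) and \(g(\sigma ^ \pm )\) are held fixed while \(\lambda \) varies (an assumption made here for illustration; the definitions of \(h\) and \(g\) lie outside this appendix). The expression for \(\tau \) stated in the proof is then affine in \(\lambda \), and differentiating it term by term gives:

```latex
\frac{\partial \tau}{\partial \lambda}
  = \ln h(\rho^-) - \ln g(\sigma^+) + \ln h(\rho^+) - \ln g(\sigma^-)
  = \ln \frac{h(\rho^-)\, h(\rho^+)}{g(\sigma^-)\, g(\sigma^+)} .
```

Under this reading, \(\frac{{\partial \tau }}{{\partial \lambda }} \leqslant 0\) is equivalent to \(h(\rho ^ - )h(\rho ^ + ) \leqslant g(\sigma ^ - )g(\sigma ^ + )\). The same computation with \(\mu \) and \(\nu \) in place of \(\rho \) and \(\sigma \) applies to \(\varsigma \).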

Proof of Theorem 7

Suppose that \((x^* ,\theta ^* )\) is not a Pareto optimal/non-inferior solution of (MOD-1), where \(\theta ^* = \mathop {\min }\nolimits _{y \in Y} \{ x^* Ay\} = ([\rho _*^ - ,\rho _*^ + ],[\sigma _*^ - ,\sigma _*^+])\). Then, there exists a feasible solution \(({\hat{x}},{\hat{\theta }} )\), where \({\hat{x}} \in X\), and \({\hat{\theta }} = \mathop {\min }\nolimits _{y \in Y} \{ {\hat{x}} Ay\} = ( [\hat{\rho } ^ - ,{\hat{\rho }} ^ + ],[{\hat{\sigma }} ^ - ,{\hat{\sigma }} ^ + ] )\) such that

and \([{\hat{\rho }} ^ - ,{\hat{\rho }} ^ + ] \succ [\rho _*^ - ,\rho _*^ + ]\), \([{\hat{\sigma }} ^ - ,{\hat{\sigma }} ^ + ] \prec [\sigma _*^ - ,\sigma _*^ + ]\), at least one of which holds strictly. Let \(\lambda \in [0,1] \), and we have

and

Let

and

from which it follows that \({\hat{\tau }} ^ - \geqslant \tau _*^ -\), \({\hat{\tau }} ^ + \geqslant \tau _*^ +\), and at least one of the inequalities is strict. Hence, we have \({\hat{\tau }} ^ - + {\hat{\tau }} ^ + > \tau _*^ - + \tau _*^ +\).

Since Y is a compact convex set with finitely many extreme points, it suffices to consider only the extreme points of the set Y. Thus, Eq. (5) is transformed into the following:

which is equivalent to:

Let \({\hat{\tau }} = {\hat{\tau }} ^ - + {\hat{\tau }} ^ +\), and then Eq. (6) is rewritten as follows:

i.e., \(({\hat{x}} ,{\hat{\tau }} )\) is a feasible solution of (MOD-5), and \({\hat{\tau }} > \tau ^*\). This contradicts the fact that \((x^* ,\tau ^* )\) is the optimal solution of (MOD-5). Therefore, \((x^* ,\theta ^* )\) is a Pareto optimal/non-inferior solution of (MOD-1). Similarly, we can prove that \((y^* ,\vartheta ^* )\) is a non-inferior solution of (MOD-6). \(\square \)
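
The restriction to extreme points of Y used in this proof rests on a standard fact: an expression that is linear in \(y\) attains its minimum over the mixed strategies at a pure strategy. The sketch below checks this numerically for a hypothetical crisp payoff matrix and a fixed hypothetical \(x\); it is an illustration under these assumptions, not the paper's model.

```python
import random


def expected_payoff(x, payoff, y):
    """Bilinear form x^T A y for mixed strategies x and y."""
    return sum(x[i] * payoff[i][j] * y[j]
               for i in range(len(x)) for j in range(len(y)))


def random_mixed_strategy(n, rng):
    """Draw a random point of the probability simplex by normalising positive weights."""
    weights = [rng.random() + 1e-12 for _ in range(n)]
    total = sum(weights)
    return [w / total for w in weights]


rng = random.Random(0)  # fixed seed so the run is reproducible
payoff = [[3.0, -1.0, 2.0],
          [-2.0, 4.0, 0.5]]  # hypothetical crisp matrix
x = [0.6, 0.4]               # hypothetical fixed strategy of the row player

# Value of x^T A y at each extreme point of Y (each pure column strategy).
vertex_values = [sum(x[i] * payoff[i][j] for i in range(len(x))) for j in range(3)]
best_vertex = min(vertex_values)

# No sampled mixed strategy does better (for the minimiser) than the best vertex.
sampled = [expected_payoff(x, payoff, random_mixed_strategy(3, rng)) for _ in range(1000)]
assert min(sampled) >= best_vertex - 1e-9
```

Every sampled value is a convex combination of the vertex values, so it cannot fall below the smallest of them.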

Proof of Theorem 8

Based on Theorems 5 and 7, we have \(\tau ^* = \varsigma ^*\), and

and

Therefore,

Then,

and

Based on Theorem 3, we have \(\vartheta ^* \succ _P \theta ^*\), that is, \(\mu _*^ - \geqslant \rho _*^ - \), \(\mu _*^ + \geqslant \rho _*^ +\), \(\nu _*^ - \leqslant \sigma _*^ -\) and \(\nu _*^ + \leqslant \sigma _*^ +\). Therefore, \(\rho _*^ - = \mu _*^ -\), \(\rho _*^ + = \mu _*^ +\), \(\sigma _*^ - = \nu _*^ -\) and \(\sigma _*^ + = \nu _*^ + \), which indicates that \(\theta ^* = \vartheta ^*\).

Since

and

we have \(\theta ^* = \vartheta ^* = \sum \nolimits _{j = 1}^n {\sum \nolimits _{i = 1}^m {x_i^* y_j^* {\tilde{\alpha }} _{ij} } } = x^* Ay^* \). According to Definition 10, we can conclude that \((x^*, y^* ,x^* Ay^* )\) is a solution of the matrix game A. \(\square \)

About this article

Cite this article

Xia, M. Methods for solving matrix games with cross-evaluated payoffs. Soft Comput 23, 11123–11140 (2019). https://doi.org/10.1007/s00500-018-3664-1

DOI: https://doi.org/10.1007/s00500-018-3664-1