Abstract

In large-scale software-defined networks (SDNs), multiple controllers are deployed. Each controller has a logically centralized view of the network that it uses to manage a set of switches. This gives rise to the controller placement problem, which asks how many controllers are needed and where they should be deployed. These decisions affect the performance of the network. Meanwhile, the assignment of switches to controllers plays a key role in the quality of the solution to this problem. However, recent studies mostly assign each switch to its closest controller based on the propagation delay between them. In this paper, a new controller placement framework is designed that considers both the control plane architecture and the relation between the control and data planes. The framework is formulated as a multi-objective optimization model with two objective functions: minimizing the flow setup time and minimizing the inter-controller latency. Furthermore, we propose a new model of the flow setup time that considers all metrics affected by the placement. To solve the model, a multi-objective algorithm called the non-dominated sorting moth flame controller placement optimizer is designed. To assign switches to controllers, we adapt the best–worst multi-criteria decision-making method using three metrics, namely hop count, propagation latency, and link utilization. A heuristic approach is also used to assign a path between each switch and its controller using these three metrics and path reliability. We run these three models iteratively to find the best controller locations, the best switch-to-controller assignment, and the best routes in the network. We compare the proposed framework with other models using the expected path loss and link load balancing metrics. Performance evaluations on real wide area network topologies show the efficiency of the proposed framework.


1 Introduction

Software-defined networking (SDN) is an emerging technology that separates the control plane of forwarding devices from the data plane. SDN introduces a modern network architecture aimed at flexible and dynamic management of network resources with minimal disruption of network services (Mohammadi et al. 2017). In this type of network, quality metrics such as control plane latency must be optimized to obtain high network performance. High control plane latencies can result in long flow setup times, which negatively affect many services, such as DNS requests. Any control plane failure, whether caused by natural disasters, malicious attacks, or incidental faults and human errors, can disrupt the correct functioning of the whole system and affect many applications and services (Karakus and Durresi 2016). In general, control plane issues are central to the challenges of SDN systems, and the corresponding problems affect all aspects of the network, from resiliency to accessibility and from operating costs to network performance (Jalili et al. 2017).

1.1 The importance of distributed control plane

Although the structure of SDN suggests a more centralized approach to network control, physically distributed controllers appear to be a promising solution with respect to scalability, single points of failure, and performance (Oktian et al. 2017). Recently, several methods have been proposed to build a distributed control plane for large SDN networks. For example, Kandoo (Hassas Yeganeh and Ganjali 2012), HyperFlow (Tootoonchian and Ganjali 2010), and ONOS (Berde et al. 2014) are distributed SDN platforms that address performance and reliability in large operator networks. In large-scale SDNs, multiple controllers or controller domains are deployed, where each controller has a logically centralized view of the network that it uses to manage a set of switches (Schmid and Suomela 2013). Each controller is responsible for monitoring and controlling the switches of one domain for routing, traffic engineering, quality of service, fast failover, etc. Multiple SDN controllers can significantly increase network performance because the network load can be distributed among them (Berde et al. 2014). Moreover, one controller can take over when another fails. By distributing the network load evenly among multiple SDN controllers in different domains, the network becomes more scalable and efficient (Oktian et al. 2017).

1.2 The problem

Multiple controllers are indispensable, so we must operate a network of distributed controllers, each of which may have only a partial view of the network and must cooperate and exchange information with the others. However, partitioning a network among multiple SDN control planes raises many challenges in which latency, reliability, load balancing, and synchronization must be considered. Under realistic assumptions, several important questions must be answered: How should a wide area network topology be partitioned into several small SDN domains? Where should the controller be located in each domain? How should controllers be assigned to switches? Which parameters matter in these decisions? These issues show that deploying multiple controllers to manage a network and enforce policies in such a distributed control plane is an important research issue in SDN (Wang et al. 2017).

1.3 The solution

To address this problem, we propose a framework that provides an appropriate architecture for the control plane. In addition, it considers the relation between the data and control planes, which is an important subject in SDN. One of the most important research areas related to this subject is controller placement. Finding the required number of controllers and their locations in an SDN is known as the controller placement problem (CPP) (Wang et al. 2017; Heller et al. 2012). It is one of the key issues in improving the performance of these networks.

In the controller placement literature, CPP is usually modeled as a k-median or k-center problem, minimizing the average-case or worst-case latency, respectively (Heller et al. 2012; Wang et al. 2016). Several alternatives that go beyond these simple k-median and k-center formulations have been studied in recent years. Given a topology, CPP seeks the number and locations of the required controllers while minimizing the cost associated with the placement. This cost can be expressed in terms of the number of controllers (Sallahi and St-Hilaire 2017), the switch-controller communication latency (Heller et al. 2012), the synchronization time of controllers (Zhang et al. 2017), or a combination of these metrics as a multi-objective optimization problem (Jalili et al. 2015; Lange et al. 2015).
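To make the difference between the two classic formulations concrete, the following Python sketch evaluates the average-case (k-median) and worst-case (k-center) switch-to-controller latency of a placement and picks the best placement by exhaustive search. It assumes that every node hosts a switch, that each switch is served by its nearest controller, and that `dist` is a precomputed shortest-path latency matrix. The function names and the toy five-node line topology are hypothetical, and exhaustive search is only practical for small networks.

```python
from itertools import combinations

def placement_latencies(dist, placement):
    """Return (average-case, worst-case) latency from each switch to its nearest controller.

    dist[i][j] is the shortest-path propagation latency between nodes i and j.
    """
    nearest = [min(dist[s][c] for c in placement) for s in range(len(dist))]
    return sum(nearest) / len(nearest), max(nearest)

def best_placement(dist, k, objective):
    """Exhaustively try every k-subset of nodes (feasible only for small topologies)."""
    idx = {"k-median": 0, "k-center": 1}[objective]
    return min(combinations(range(len(dist)), k),
               key=lambda p: placement_latencies(dist, p)[idx])

# Hypothetical line topology: node positions (ms) along a single fiber route.
pos = [0, 1, 2, 3, 13]
dist = [[abs(a - b) for b in pos] for a in pos]

print(best_placement(dist, 1, "k-median"))  # → (2,)  minimizes average latency
print(best_placement(dist, 1, "k-center"))  # → (3,)  minimizes worst-case latency
```

In this toy line, the long 10 ms link to node 4 pulls the k-center optimum toward it (node 3), while the k-median optimum stays at node 2, which illustrates why the two objectives can disagree.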

In addition, some papers treat the load imposed on controllers as an important factor in solving the controller placement problem. They assume that each controller has a certain capacity and try to balance the load among the controllers (Jalili et al. 2017; Yao et al. 2014). Other papers extend the load issue to dynamic controller placement (Wang et al. 2017; Yao et al. 2015) and propose reassignment mechanisms: if the load on a controller exceeds a threshold, some of its switches are reassigned to less-loaded controllers.

1.4 The related challenges

Previous studies have made different types of contributions to cope with SDN challenges. However, challenges remain in solving the controller placement problem. Some articles in this area pay little attention to the assignment of switches to controllers. Indeed, they usually implicitly assume that, while controller locations are being determined, each switch is assigned to its closest controller based on propagation delay (Heller et al. 2012; Jalili et al. 2015; Liao et al. 2017). This type of assignment may overload controllers, increase the probability of failure, yield low-quality placements, cause load imbalance among controllers, and increase delay due to queuing at some controllers. Other papers that base the assignment on controller capacity focus only on allocating switches to controllers in a balanced way (Jalili et al. 2017; Lange et al. 2015; Yao et al. 2014). If the number of switches assigned to their closest controller exceeds its capacity, the excess switches are assigned to the second closest controller. This type of assignment is also simplistic: it takes only controller capacity into account, and each switch is still assigned to the closest controller that has spare capacity. It is also vulnerable from the perspective of control path assignment. These consequences may force the path between a switch and its controller to be reassigned, and each reassignment imposes a high cost on the network.
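The capacity-aware closest-controller rule criticized above can be sketched as follows, for comparison with the multi-criteria assignment proposed later. This is a minimal Python sketch under stated assumptions: switches are processed in index order, `delay[s][c]` is the propagation delay from switch s to candidate controller c, and each controller can serve at most `capacity[c]` switches. The function name and the data are hypothetical.

```python
def capacitated_nearest_assignment(delay, capacity):
    """Assign each switch to its closest controller that still has spare capacity."""
    load = [0] * len(capacity)
    assignment = {}
    for s, row in enumerate(delay):
        # Try controllers from closest to farthest by propagation delay only.
        for c in sorted(range(len(row)), key=row.__getitem__):
            if load[c] < capacity[c]:
                assignment[s] = c
                load[c] += 1
                break
        else:
            raise ValueError(f"no controller has spare capacity for switch {s}")
    return assignment

# Hypothetical delays (ms) from 4 switches to 2 candidate controllers.
delay = [[1, 5], [2, 6], [3, 4], [8, 1]]
print(capacitated_nearest_assignment(delay, capacity=[2, 2]))  # → {0: 0, 1: 0, 2: 1, 3: 1}
```

Switch 2 ends up on controller 1 only because controller 0 is full; nothing in the rule looks at the hop count, link load, or reliability of the resulting control path, which is the weakness the text points out.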

Therefore, several challenges remain to be addressed, such as determining:

The relation between the control plane and the data plane, especially in in-band settings

The assignment of controllers to switches considering critical parameters

The assignment of paths between switches and their assigned controllers considering important parameters.

1.5 Our contribution

In this paper, we present a framework that considers both the control plane architecture (the locations of the controllers) and the relation between the control and data planes. The challenges of switch and control path assignment are addressed with a clustering method. To this end, switches are assigned to controllers (the cluster centers) by considering important parameters such as propagation delay, hop count, and link utilization. Furthermore, the proposed clustering approach considers these three factors as well as path reliability in control path assignment. The proposed framework seeks optimal placements with respect to flow setup time and inter-controller latency, which are critical metrics in the controller placement problem. Because the flow setup time model introduced in He et al. (2017) applies only when the assignment is based solely on propagation delay, it cannot be used when other parameters affect the assignment. We therefore propose a general flow setup time model that reflects realistic assumptions about the control latency of the paths assigned between switches and controllers.

In the model, the controller locations serve as cluster centers. The best–worst multi-criteria decision-making method (BWM) (Rezaei 2016) is applied to decide which cluster should host a given switch as a cluster member. BWM operates on propagation delay, hop count, and link utilization, and several priority vectors are discussed to control the preferences among these criteria. Afterward, to determine the path between a cluster member and its center, our heuristic is executed, which considers the three criteria above as well as the reliability of the selected paths. Furthermore, we design a non-dominated sorting moth flame controller placement optimizer (NS-MFCPO), based on the moth flame optimization algorithm (Mirjalili 2015), to solve the model. Our simulations on real wide area network (WAN) topologies illustrate the efficiency of the introduced framework, clustering approach, and optimizer. The outcome of this study can provide service providers with useful insights into the design of a resilient software-defined WAN (SD-WAN).
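The following Python sketch illustrates how BWM-style criterion weights could drive the switch-to-controller decision. It approximates the linear BWM model (minimize the largest deviation ξ between the stated pairwise preferences and the weight ratios, subject to the weights summing to 1) by a grid search over the weight simplex instead of a linear-programming solver, and then scores candidate controllers with a weighted sum of min-max normalized costs. The scoring rule, criterion names, priority vectors, and candidate metrics are all assumptions for illustration, not the paper's exact procedure.

```python
from itertools import product

# Hypothetical criterion names for the three criteria named in the text (all are costs).
CRITERIA = ("delay", "hops", "utilization")

def bwm_weights(best, worst, best_to_others, others_to_worst, step=0.01):
    """Approximate the linear BWM model by grid search over the 3-criteria weight simplex.

    best_to_others[c]: how strongly the best criterion is preferred over c (1..9).
    others_to_worst[c]: how strongly c is preferred over the worst criterion (1..9).
    Minimizes xi = max_c max(|w_best - a_best,c * w_c|, |w_c - a_c,worst * w_worst|).
    """
    n = round(1 / step)
    best_xi, best_w = float("inf"), None
    for i, j in product(range(n + 1), repeat=2):
        if i + j > n:
            continue  # outside the simplex
        w = dict(zip(CRITERIA, (i / n, j / n, (n - i - j) / n)))
        xi = max(
            max(abs(w[best] - best_to_others[c] * w[c]),
                abs(w[c] - others_to_worst[c] * w[worst]))
            for c in CRITERIA
        )
        if xi < best_xi:
            best_xi, best_w = xi, w
    return best_w, best_xi

def pick_controller(metrics, weights):
    """Return the candidate with the lowest weighted sum of min-max normalized costs."""
    lo = {c: min(m[c] for m in metrics.values()) for c in CRITERIA}
    hi = {c: max(m[c] for m in metrics.values()) for c in CRITERIA}

    def score(m):
        return sum(weights[c] * ((m[c] - lo[c]) / (hi[c] - lo[c]) if hi[c] > lo[c] else 0.0)
                   for c in CRITERIA)

    return min(metrics, key=lambda ctrl: score(metrics[ctrl]))

# Hypothetical priority vectors: delay is the best criterion, link utilization the worst.
weights, xi = bwm_weights(
    best="delay", worst="utilization",
    best_to_others={"delay": 1, "hops": 2, "utilization": 4},
    others_to_worst={"delay": 4, "hops": 2, "utilization": 1},
)
# Hypothetical path metrics from one switch to three candidate controllers.
candidates = {
    "c1": {"delay": 5.0, "hops": 2, "utilization": 0.9},
    "c2": {"delay": 6.0, "hops": 2, "utilization": 0.3},
    "c3": {"delay": 5.5, "hops": 3, "utilization": 0.2},
}
print(weights, xi, pick_controller(candidates, weights))
```

With these priority vectors the weights come out near 0.57, 0.29, and 0.14, so c1, the lowest-delay controller, is selected despite its heavily loaded path; weights that emphasize link utilization (for example, 0.1, 0.1, and 0.8 for delay, hops, and utilization) select c3 instead.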

Overall, our main contribution is the proposed framework, which includes:

- 1.

Generalization of the flow setup time model.

- 2.

Realistic constraints in the controller placement problem, especially reliability constraints.

- 3.

Considering important criteria like propagation delay, hop count, link utilization, and path reliability in switch-to-controller assignment process.

- 4.

A two-phase multi-criteria clustering approach for controller assignment and path assignment, with the following properties:

- 4.1.

Using BWM for cluster center (controller) assignment.

- 4.2.

Applying a heuristic model for path assignment between switches and their master controllers.


- 5.

A multi-objective algorithm called NS-MFCPO to solve the framework.

1.6 Organization of the paper

The rest of this paper is organized as follows. Section 2 reviews related work on the controller placement problem. Section 3 presents definitions and concepts used in the proposed model. The proposed model is described in Sect. 4. Section 5 presents the solution method and all relevant algorithms. Section 6 introduces the evaluation methods used in this paper. Simulation results are presented in Sect. 7. Finally, the last section concludes the paper.

2 Related works

In recent years, many researchers have focused on the controller placement problem (CPP). Heller et al. (2012) conducted the first study on CPP. Their model can be viewed as the mathematical k-median problem. The authors examined the effect of placement on average-case and worst-case latencies in real topologies. Their work focused on the number of controllers needed and their locations; however, switch assignment and its importance were not considered.

Yao et al. (2014) extended the problem by considering the limited capacity of controllers. They assume that the capacity of each controller is limited by link bandwidth, so only a limited number of switches can be assigned to it. In Sallahi and St-Hilaire (2015), the authors provided a mathematical model for building an SDN at the lowest cost. Their model simultaneously determines the number, type, and location of the controllers. They also proposed an extended model to accommodate the growing number of users and services (Sallahi and St-Hilaire 2017), which aims to minimize cost while ensuring that the solution is feasible to implement. These papers treat the assignment procedure simplistically. The initial assignment is based on propagation delay, and if the number of switches assigned to a controller exceeds its capacity, the excess switches are assigned to their second closest controller. The importance of the assignment, the path assignment, and other parameters relevant to the assignment process are not addressed.

In Lange et al. (2015), the controller placement problem is formulated as a multi-objective combinatorial optimization (MOCO) problem, and several objectives that play a key role in deciding controller locations, such as switch-controller latency, inter-controller latency, load balance between controllers, and controller failure, are proposed. The aim of that work was to find placements that are optimal with respect to these objectives. In Lange et al. (2015), the authors developed a specialized heuristic, called Pareto Capacitated k-Medoids (PCKM), to optimize the same objectives. They investigate PCKM by considering a particular set of optimization objectives and returning solutions that represent the possible trade-offs among them. Sahoo et al. (2017) proposed two population-based meta-heuristic algorithms, firefly and particle swarm optimization (PSO), for optimal controller placement; these take a particular set of objective functions and return the best positions found, which are compared with previous works. Gao et al. (2015) defined the capacitated global latency controller placement problem (CGLCPP), which considers the latency between controllers as well as between switches and controllers, and proposed a particle swarm optimization algorithm to solve it. Various objectives have been introduced and reviewed in these papers, and new multi-objective algorithms and controller locations have been evaluated against them. However, flow setup time, one of the most important objectives in software-defined networks, has not been addressed. In this article, in addition to addressing the important assignment issue, we introduce a new model for this objective function. Considering this objective together with inter-controller latency, as effective objectives in controller placement, we present a new multi-objective algorithm for the multi-objective controller placement problem.

Some researchers have considered the load imposed by switches on controllers when solving the controller placement problem (Wang et al. 2017; Yao et al. 2015; Rath et al. 2014; Wang et al. 2016; ul Huque et al. 2017; Gao et al. 2017). They define a capacity for each controller and, when a controller is overloaded, i.e., when its load exceeds some threshold, they perform reassignment. For example, Rath et al. (2014) present a dynamic controller placement technique based on a nonzero-sum game, in which a controller is activated or deactivated periodically according to the traffic demand of the switches. A switch migration-based decision-making (SMDM) method is proposed in Wang et al. (2017); it defines a switch migration trigger metric that checks real-time controller load information to decide whether to perform switch migration. Yao et al. (2015) consider node weights when placing controllers and balance the load of multiple controllers with a dynamic switch migration algorithm, assuming that only one switch is allowed to change its assignment from one controller to another. Wang et al. (2016) update switch-to-controller assignments according to dynamic aggregate traffic in data center networks and propose a two-phase mechanism to solve this problem efficiently. In ul Huque et al. (2017), the authors present a dynamic controller placement model that determines the locations of controller modules to optimize latencies and the number of controllers per module to support the load; controllers are provisioned at each module to handle the dynamic load. Gao et al. (2017) present a load balancing scheme for devolved controllers in data centers, called Load Balancing problem for Devolved Controllers (LBDC). In this approach, each controller locally monitors the traffic of a subset of the switches. When a traffic load imbalance occurs, some controllers migrate part of their monitoring workload to other controllers so that the workload stays balanced dynamically.

These papers focus only on reassignment, without considering the cost and difficulty of tuning the parameters required for reassignment, the importance of path assignment, or the packet loss that may occur in this process. They pay attention only to controller capacity; how the allocation path is chosen, and based on which parameters, is not addressed.

Recently, clustering approaches have been used to solve CPP (Liao et al. 2017; He et al. 2017; Xiao et al. 2016). Their experimental results show that clustering or partitioning the network improves both the time consumption and the effectiveness of SDN controller placement. In Xiao et al. (2016), the authors focus on two specific questions: how a wide area network topology should be partitioned into several small SDN domains, and where the controller should be placed in each domain. Using a spectral clustering placement algorithm, they partition a large network into several small SDN domains to solve the SDN controller placement problem for WANs. In Liao et al. (2017), the authors use a density-based switch clustering algorithm to split the network into several sub-networks and deploy one controller in each. In this density-based controller placement approach, the range of each sub-network is determined by the density of the topology, and its size is limited by the capacity of the deployed controller. Their results show that the algorithm is faster than most current approaches and improves control plane performance. However, these clustering approaches do not consider the importance of the assignment process or of the path between the control plane and the data plane.

He et al. (2017) use clustering to model end-to-end flow setup time. They analyze controller placement for dynamic traffic flows based on a combined controller placement model in which controller locations and switch-to-controller assignments are optimized simultaneously to minimize the average flow setup time under different traffic conditions. Their model applies only when the assignment is based on propagation delay and is not useful when other assignment metrics are considered. Moreover, the paper does not address path assignment.

In general, some papers investigate CPP based only on controller locations and ignore the assignment of switches to controllers (Heller et al. 2012; Sallahi and St-Hilaire 2017; Liao et al. 2017). Some papers discuss the load of controllers but not the load of paths (Yao et al. 2014; Sallahi and St-Hilaire 2015). Others consider dynamic traffic and reassignment mechanisms but not the cost of reassignment or the packet loss rate (Wang et al. 2017; Yao et al. 2015). Finally, some papers discuss clustering in networks (Liao et al. 2017; He et al. 2017; Xiao et al. 2016), but they do not consider reliability, the optimality of assignment paths, or the relation between controllers.

Therefore, we propose a controller placement framework that finds optimal controller locations and an optimal assignment between switches and their controllers. Before describing the model and the proposed method, we present the required background in the next section.

3 Preliminaries

The proposed framework uses several quality metrics for CPP. This section discusses why these metrics matter in our assignment and clustering process.

3.1 Latency metric

Propagation latency between switches and their controllers has been used by many researchers as a critical factor in the controller placement problem (Jalili et al. 2017; Heller et al. 2012; Sallahi and St-Hilaire 2017). It affects every aspect of the network and is a primary concern for scalability, fault tolerance, and switch-controller interaction. This metric should be minimized in CPP. Most existing approaches use propagation latency as the input parameter for measuring the latency between a switch and its controller.
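A common way to obtain the propagation latency input is to convert link lengths into delays and then take shortest paths between all node pairs. The Python sketch below does this with the Floyd-Warshall algorithm, assuming a signal speed in fiber of about 200 km per millisecond (roughly two-thirds of the speed of light). The link list and the function name are hypothetical.

```python
import math

FIBER_KM_PER_MS = 200.0  # assumption: ~2/3 of c, i.e. about 200 km per millisecond

def propagation_latency_matrix(n, links):
    """All-pairs shortest propagation latency (ms) via Floyd-Warshall.

    links: iterable of (u, v, length_km) for undirected links between nodes 0..n-1.
    """
    d = [[0.0 if i == j else math.inf for j in range(n)] for i in range(n)]
    for u, v, km in links:
        ms = km / FIBER_KM_PER_MS
        d[u][v] = d[v][u] = min(d[u][v], ms)  # keep the shorter of parallel links
    for k in range(n):
        for i in range(n):
            for j in range(n):
                if d[i][k] + d[k][j] < d[i][j]:
                    d[i][j] = d[i][k] + d[k][j]
    return d

# Hypothetical 3-node topology with link lengths in km.
d = propagation_latency_matrix(3, [(0, 1, 400), (1, 2, 600), (0, 2, 1400)])
print(d[0][2])  # → 5.0
```

The direct 1400 km link (7 ms) loses to the two-link route through node 1 (2 ms + 3 ms), so the matrix entry for nodes 0 and 2 is 5 ms.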

3.2 Hop count metric

The hop count between a switch and its controller is calculated as the number of intermediate nodes on the shortest path. Assigning controllers based only on propagation delay is not reasonable; other criteria should be considered to obtain a better approximation of the delay between two nodes. Since every hop incurs store-and-forward and other latencies, considering the number of hops can yield better solutions for CPP and the allocation process. Many routing protocols, such as RIP, and replica server placement algorithms use hop count to compute the shortest path between each pair of nodes in the network (Mitra et al. 2016; Sahoo et al. 2016). Using this metric as an assignment parameter in the controller placement problem yields a more concrete and realistic estimate of the delay between switch and controller, particularly when the given network is dense.
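The following Python sketch shows why hop count and propagation latency can rank control paths differently: Dijkstra's algorithm finds the minimum-latency path, while breadth-first search finds the minimum-hop path. Hops are counted here as links, so the text's count of intermediate nodes equals the number of links minus one. The four-node topology and its latencies are hypothetical, and both functions assume the destination is reachable.

```python
import heapq
from collections import deque

def min_latency_path(adj, src, dst):
    """Dijkstra on link latencies; returns (latency, path)."""
    dist, prev = {src: 0.0}, {}
    heap = [(0.0, src)]
    while heap:
        d, u = heapq.heappop(heap)
        if u == dst:
            break
        if d > dist[u]:
            continue  # stale heap entry
        for v, w in adj[u].items():
            nd = d + w
            if nd < dist.get(v, float("inf")):
                dist[v], prev[v] = nd, u
                heapq.heappush(heap, (nd, v))
    path = [dst]
    while path[-1] != src:
        path.append(prev[path[-1]])
    return dist[dst], path[::-1]

def min_hop_path(adj, src, dst):
    """Breadth-first search: every link counts as one hop regardless of latency."""
    prev = {src: None}
    queue = deque([src])
    while queue:
        u = queue.popleft()
        if u == dst:
            break
        for v in adj[u]:
            if v not in prev:
                prev[v] = u
                queue.append(v)
    path = [dst]
    while prev[path[-1]] is not None:
        path.append(prev[path[-1]])
    return path[::-1]

# Hypothetical topology: adjacency map with link latencies in ms.
adj = {0: {1: 1.0, 3: 5.0}, 1: {0: 1.0, 2: 1.0}, 2: {1: 1.0, 3: 1.0}, 3: {2: 1.0, 0: 5.0}}
print(min_latency_path(adj, 0, 3))  # → (3.0, [0, 1, 2, 3])
print(min_hop_path(adj, 0, 3))      # → [0, 3]
```

The minimum-latency route uses three links (3 ms), while the minimum-hop route uses a single 5 ms link; an assignment that weighs both criteria has to trade these off.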

3.3 Link utilization metric

Most papers on traffic engineering try to find optimal link utilization to improve quality of service (Mohammadi et al. 2017; Kishore 2003). Link utilization and its correlation with quality of service are discussed in several SDN papers (Mohammadi et al. 2017; Jain et al. 2013). Although this factor is very important, it has not been considered in CPP papers. Using link utilization in the controller placement problem takes several QoS aspects into account. Assigning switches to their closest controllers based on latency or hop count ignores path capacity and the load imposed on the control path. This may overload controllers and increase the probability of controller failure, which in turn may force new paths to be assigned between switches and controllers at additional cost to the network.

Considering link utilization in the controller assignment process yields both an optimal location and an optimal path between a controller and its switches, because the assignment accounts for the capacity of the relevant links as well as the load that switches must forward. Importantly, the bandwidth used to transmit control traffic is managed so that it does not exceed a specified threshold. This also amounts to treating CPP as a traffic engineering problem. Here, link utilization is defined as the percentage of a network's bandwidth currently consumed by control traffic alone. For example, in Fig. 1, suppose that 100 Mbps of control traffic is transmitted from node 1 to node 5. There are three paths between these two nodes with different delays and hop counts. If the path is chosen based on link utilization, the path with the minimum link utilization is preferred for this volume of control traffic. Therefore, the path \( 1 \to 4 \to 5 \) is selected, with link utilization \( \max \left\{ 190, 150 \right\} = 190\;\text{Mbps} \).
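The path choice in this example can be sketched as a bottleneck (min-max) rule: for each candidate path, add the control demand to every link, keep the heaviest resulting link load, and choose the path whose bottleneck is smallest, optionally discarding paths that exceed a threshold. The Python sketch below uses hypothetical node labels and link loads, not the values of Fig. 1, and expresses loads in Mbps as in the example.

```python
def bottleneck_load(path, load, demand):
    """Heaviest link load (Mbps) on `path` once `demand` Mbps of control traffic is added."""
    return max(load[frozenset(link)] + demand for link in zip(path, path[1:]))

def least_loaded_path(paths, load, demand, threshold=float("inf")):
    """Pick the path with the smallest bottleneck load that stays within `threshold`."""
    feasible = [p for p in paths if bottleneck_load(p, load, demand) <= threshold]
    if not feasible:
        raise ValueError("no candidate path can carry the demand within the threshold")
    return min(feasible, key=lambda p: bottleneck_load(p, load, demand))

# Hypothetical current link loads (Mbps); links are undirected.
load = {frozenset(link): mbps for link, mbps in [
    (("A", "B"), 120), (("B", "E"), 30),
    (("A", "D"), 60), (("D", "E"), 50),
    (("A", "C"), 10), (("C", "F"), 80), (("F", "E"), 70),
]}
paths = [["A", "B", "E"], ["A", "D", "E"], ["A", "C", "F", "E"]]
print(least_loaded_path(paths, load, demand=100))  # → ['A', 'D', 'E']
```

Routing through B would push link A-B to 220 Mbps, so the rule avoids it even though that path also has two links; the three-link path through C and F peaks at 180 Mbps.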

3.4 Reliability metric

Reliability in SDN, with its special structure, depends on several factors, and several papers discuss it in both the data and control planes, as well as in CPP (Song et al. 2017; Ros and Ruiz 2016). The reliability of the path between a switch and its controller is very important. If this connection fails, the failure propagates to the data plane network and causes problems such as packet loss and controller reassignment.

The path connecting a switch to its assigned controller is called a control path. The way this path is created influences network performance in both in-band and out-of-band control. The effect is more pronounced in the in-band case, because control paths pass through intermediate nodes and may overlap. In this case, given the complexity of path generation, path assignment must be done carefully. If a link fails, it may affect the control paths of several switches and thus their control connections. Several simultaneous controller failures increase packet loss. Moreover, managing simultaneous reassignments requires administrative effort, technical complexity, and resiliency mechanisms that are very complicated. Therefore, the initial assignment should minimize the influence of failures, high delay, congestion, and any other factor that degrades path performance. Hence, we use the concept of reliability for control paths between controllers and switches. By considering this metric, we aim to improve the robustness of these paths, including their intermediate nodes and links. Each network component, i.e., node or link, is assigned a failure probability, from which we calculate the reliability of control paths. The reliability of a path is defined as follows (Ros and Ruiz 2016):

\[ R_{\text{path}} = \prod_{\text{cmp} \in \text{path}} \left( 1 - F_{\text{cmp}} \right) \]

where the product runs over all nodes and links of the path and \( F_{\text{cmp}} \) denotes the failure probability of a component.
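Assuming independent component failures, a control path behaves as a series system: it works only if every node and link on it works, so its reliability is the product of (1 - F_cmp) over those components. The Python sketch below computes this and picks the more reliable of two candidate control paths. The failure probabilities, labels, and function name are hypothetical, and counting both end nodes (switch and controller) is an assumption.

```python
from math import prod

def path_reliability(path, node_fail, link_fail):
    """Probability that every node and link on `path` is operational (independent failures)."""
    nodes_ok = prod(1 - node_fail[n] for n in path)
    links_ok = prod(1 - link_fail[frozenset(link)] for link in zip(path, path[1:]))
    return nodes_ok * links_ok

# Hypothetical failure probabilities: switch S, relays A and B, controller node C.
node_fail = {"S": 0.01, "A": 0.01, "B": 0.01, "C": 0.01}
link_fail = {frozenset(("S", "A")): 0.02, frozenset(("A", "C")): 0.02,
             frozenset(("S", "B")): 0.001, frozenset(("B", "C")): 0.001}
candidates = [["S", "A", "C"], ["S", "B", "C"]]
best = max(candidates, key=lambda p: path_reliability(p, node_fail, link_fail))
print(best, round(path_reliability(best, node_fail, link_fail), 4))
```

Both paths traverse the same number of nodes, so the choice is decided by the link failure probabilities; in a full assignment this score would be weighed against latency, hop count, and utilization.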

4 Model formulation

The placement of controllers can be evaluated and determined according to different objective functions. In this section, we start by presenting the system model and the assumptions. All the data and variables used in the problem formulation can be seen in Tables 1 and 2.

4.1 Objective functions

The multi-objective problem considered in this paper contains two objectives:

Minimize inter-controller communication latency

Minimize end-to-end flow setup time

4.1.1 Inter-controller communication

When SDN is deployed in large-scale networks, the network is divided into multiple connected SDN domains for better scalability and security. To use network resources efficiently, SDN controllers need to communicate with each other. When network resources are distributed among multiple SDN domains, the controllers of the other domains need to communicate with the controller of the source domain to share network parameters, which enables the source-domain controller to confirm and process the bandwidth requirement. Such an architecture also requires various forms of state synchronization between the individual controllers. Therefore, inter-controller communication affects the performance of end-to-end communication between two hosts (Jalili et al. 2017; Sallahi and St-Hilaire 2017; Zhang et al. 2017). Hence, another goal of controller placement is to keep inter-controller latency small in order to minimize synchronization times. To reduce the communication cost among controllers, they should be deployed as close to each other as possible, i.e., the latency among them should be minimized. Using the notation of Sect. 4, the inter-controller communication latency for a given placement P is calculated as:

where \( D_{p1,p2} \) is the minimum propagation latency between the controllers at positions p1 and p2.
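The exact aggregate over controller pairs is not reproduced in this text, so the Python sketch below shows only the building block: the pairwise latencies \( D_{p1,p2} \) over all controller pairs of a placement P, together with two aggregates commonly used in the literature, the worst case (maximum) and the mean. Which aggregate the paper uses is not assumed here; the distance matrix and the function name are hypothetical.

```python
from itertools import combinations

def inter_controller_latency(dist, placement):
    """Return (worst-case, mean) latency over all controller pairs of a placement.

    dist[p1][p2] is the minimum propagation latency between positions p1 and p2.
    """
    pairs = [dist[p1][p2] for p1, p2 in combinations(placement, 2)]
    if not pairs:
        return 0.0, 0.0  # a single controller needs no synchronization
    return max(pairs), sum(pairs) / len(pairs)

# Hypothetical shortest-path latencies (ms) between 4 candidate positions.
dist = [[0, 2, 5, 7],
        [2, 0, 3, 5],
        [5, 3, 0, 2],
        [7, 5, 2, 0]]
print(inter_controller_latency(dist, (0, 2, 3)))
print(inter_controller_latency(dist, (1, 2, 3)))
```

Replacing position 0 by position 1 lowers the worst-case inter-controller latency from 7 ms to 5 ms, which is the kind of improvement this objective rewards.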

4.1.2 End-to-end flow setup time

One of the key functions of an SDN controller is flow establishment. When SDN operates in reactive mode, the ingress switch of each domain sends a packet-in message to its controller only once. After processing it, the controller sends flow-mod messages to every involved switch in its own control domain. The reactive flow setup time is therefore the sum of the time to send the packet from the ingress switch to the SDN controller, the processing time at the controller, and the time to send the flow modification messages back to all involved switches (He et al. 2017).
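The three components listed above can be added up directly. The Python sketch below computes the reactive flow setup time inside one domain, assuming symmetric one-way control latencies `cl[s]` between each switch and its controller, a fixed controller processing time, and flow-mod messages sent to all involved switches in parallel, so that setup completes when the slowest one arrives. It deliberately ignores the race between the data packet and the flow-mod messages at downstream switches; the latencies and names are hypothetical.

```python
def domain_flow_setup_time(cl, ingress, involved, t_proc):
    """Packet-in latency + controller processing + latency of the slowest flow-mod."""
    packet_in = cl[ingress]                   # ingress switch -> controller
    flow_mods = max(cl[s] for s in involved)  # controller -> every involved switch (parallel)
    return packet_in + t_proc + flow_mods

# Hypothetical one-way control latencies (ms) in Domain1.
cl = {"S11": 4.0, "S12": 6.0, "S13": 3.0}
print(domain_flow_setup_time(cl, "S11", ["S11", "S12", "S13"], t_proc=1.0))  # → 11.0
```

Because the slowest flow-mod dominates, assigning S12 to a controller with a shorter control path would reduce the setup time even if the ingress switch's own latency stayed the same.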

End-to-end flow setup time is thus the time needed to install forwarding rules in all involved switches, and it is a primary concern for network operators when establishing services. This metric strongly influences the performance of end-to-end communication between two hosts. Some papers minimize flow setup time as an important metric in traffic engineering problems (Berde et al. 2014; Akyildiz et al. 2014). In addition, some CPP papers treat it as one of the main metrics affecting overall network performance (He et al. 2017; Bari et al. 2013; Zeng et al. 2015). However, they do not present an exact formulation for computing this metric. He et al. (2017) were the first to propose a flow setup time formula based on a clustering approach, but they did not consider all the states that may occur.

A case in point is when the data packet reaches an intermediate node before the flow-mod message. The major difference of our work is that our formulation of end-to-end flow setup time is based on a more realistic assignment approach, which considers multiple important criteria rather than propagation latency alone. Such assignments occur more often in real cases. For the reader's convenience, we use the same notation as He et al. (2017).

Consider the inter-domain flow in Fig. 2, which begins at Host1 and ends at Host2. As soon as the first packet of this flow arrives in Domain1, ingress switch S11 sends a packet-in message to its controller in order to obtain a suitable flow rule (\( cl_{S_{11}} \) denotes the control latency of switch S11). Right after processing the request, the controller sends flow-mod messages to every involved switch within its own control domain.

The data packet is then forwarded according to the flow rule installed in the switch. For the other switches in Domain1 through which the data packet must be forwarded, three cases may occur.

- 1.

The flow rule setup packet reaches the other switches before the data packet. In this situation, \( cl_{S_{11}} + D_{S_{11}S_{12}} > cl_{S_{12}} \) and \( cl_{S_{11}} + D_{S_{11}S_{13}} > cl_{S_{13}} \), where \( D_{S_{11}S_{12}} \) is the forwarding latency between S11 and S12, and \( cl_{S_{12}} \) and \( cl_{S_{13}} \) are the control latencies of switches S12 and S13, respectively.

- 2.

Both the flow rule setup packet and the data packet reach the other switches at the same time. In this situation, \( cl_{S_{11}} + D_{S_{11}S_{12}} = cl_{S_{12}} \) and \( cl_{S_{11}} + D_{S_{11}S_{13}} = cl_{S_{13}} \).

- 3.

The data packet reaches some of the other switches before the flow rule setup packet. This occurs when queuing and processing delays at intermediate nodes delay the arrival of the flow-mod packet. It may also happen when the assignment is not based only on the shortest distance in the propagation latency matrix. In this situation, \( cl_{S_{11}} + D_{S_{11}S_{12}} < cl_{S_{12}} \) or \( cl_{S_{11}} + D_{S_{11}S_{13}} < cl_{S_{13}} \) may hold, i.e., the data packet arrives at S12 or S13 before the corresponding flow rule.

In the first two cases, the first data packet is forwarded immediately when it arrives at each switch in the domain. Let \( t_{i}^{f} \) be the time the packet takes to pass through control domain i. For the first two cases:

In the third case, however, the data packet may reach other switches before the flow-mod packet, and it must then wait at such a switch until the flow rule is installed. In this case:

Covering all three cases, the flow setup time of one domain is:

It is therefore preferable to have a single formula that covers all possible cases. Since the first and last switches of the flow in each cluster domain, as well as the path of the data packet, are known, the flow setup time of domain i can in general be calculated as:

where \( s_{i} \) and \( d_{i} \) denote the first and last nodes of the flow in domain i, and \( D_{u,d_{i}} \) is the propagation delay between node \( u \) and \( d_{i} \) in domain i, based on a shortest-path algorithm. This formula can be applied to every domain on the flow path. However, it accounts only for the time spent within each domain. The time it takes for a packet to travel from the egress node of a domain to the ingress node of the next domain must also be taken into account, which is done simply by adding the following expression:

where \( d_{i} \) and \( s_{i + 1} \) denote the last node of domain i and the first node of domain i + 1, respectively, on the path of the flow. Therefore, for a particular flow f and a placement P, the following relation holds:

For example, based again on Fig. 2 and the above assumptions, the time for the request from switch \( S_{11} \) to reach the controller equals the latency of the control path assigned between this switch and its controller, which is 6 ms. Right after processing, the controller sends flow rules to every involved switch within its own control domain. This time for switches \( S_{11} \), \( S_{12} \), and \( S_{13} \) is 6 ms, 10 ms and 6 ms, respectively. As soon as the flow rule is installed at \( S_{11} \), the data packet is forwarded. The time (forwarding latency) required for this packet to reach switch \( S_{12} \) is 2 ms. Since no flow rule has been installed at this switch yet, the packet has to wait, and the waiting time is 2 ms. Following the same process, it takes 6 ms for this packet to reach switch \( S_{13} \). Since the control latency of switch \( S_{13} \) is smaller than the total time for the packet to reach this switch, \( S_{13} \) forwards the packet as soon as it receives it. Therefore, the flow setup time for the first domain is:

The same process is followed for the other domains.
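A minimal sketch of the per-domain reasoning, under our own assumptions: `rule_times[k]` is the time (in ms, measured from the arrival of the first packet at \( s_{i} \)) at which the flow rule is installed at the k-th switch on the in-domain path, and \( D_{u,d_{i}} \) is the sum of forwarding latencies along that path. Simulating the wait-until-the-rule-arrives behavior of cases 1 to 3 gives the same result as the closed form \( \max_{u} \left( r_{u} + D_{u,d_{i}} \right) \), which is why a single max-type expression can cover all three cases; the end-to-end time then adds the inter-domain transit latencies. How \( r_{u} \) is built from the control latencies \( cl \) follows the paper's model and is not fixed here, and all numbers are hypothetical.

```python
def simulate_domain(rule_times, hop_latency):
    """Time the first packet leaves the last switch d_i, simulated hop by hop.

    rule_times[k]: time (ms) the flow rule is installed at the k-th switch of the
    in-domain path, measured from the packet's arrival at the ingress switch s_i.
    hop_latency[k]: forwarding latency (ms) from switch k to switch k + 1.
    """
    depart = rule_times[0]  # the packet is held at s_i until its rule is installed
    for k in range(1, len(rule_times)):
        arrive = depart + hop_latency[k - 1]
        depart = max(arrive, rule_times[k])  # case 3: wait for a late rule
    return depart


def domain_setup_time(rule_times, hop_latency):
    """Closed form t_i^f = max over switches u of (rule time at u + D_{u, d_i})."""
    best, remaining = rule_times[-1], 0  # u = d_i, where D_{d_i, d_i} = 0
    for k in range(len(rule_times) - 2, -1, -1):
        remaining += hop_latency[k]  # D_{u, d_i} for the k-th switch
        best = max(best, rule_times[k] + remaining)
    return best


def end_to_end_setup_time(domain_times, transit_times):
    """Sum of per-domain times t_i^f and inter-domain transit latencies D_{d_i, s_{i+1}}."""
    if len(transit_times) != len(domain_times) - 1:
        raise ValueError("need one transit latency between each pair of consecutive domains")
    return sum(domain_times) + sum(transit_times)


# Hypothetical three-switch domain: rules installed at 12, 16 and 12 ms.
rules, hops = [12, 16, 12], [2, 6]
print(simulate_domain(rules, hops), domain_setup_time(rules, hops))  # prints 22 22
```

With these hypothetical values the packet waits 2 ms at the second switch (it arrives at 14 ms, the rule at 16 ms) and passes the third switch without waiting, because that rule was already installed at 12 ms.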

This time is \( t_{2}^{f} = 6 \) ms for Domain 2 and \( t_{3}^{f} = 30 \) ms for Domain 3. In addition, the packet transit time between domains must be considered: 6 ms between Domains 1 and 2, and 5 ms between Domains 2 and 3. Adding these up, the total flow setup time for this flow is:

4.2 Model

Let G = (V, E) be the graph representing the topology of an SDN network, with node set V and link set E. The model is formulated as follows:

Subject to:

Objective function (A-1) represents the inter-controller latency. The flow setup time (A-2), the second objective function, is restated in terms of the clustering model, using the notation introduced in Sect. 4.

Constraint (A-3) ensures that K controllers are placed. Constraint (A-4) forces each switch to be controlled by exactly one controller. Constraints (A-5) to (A-9) are used in the flow setup objective function. Constraint (A-5) defines the control latency of each switch. Constraint (A-6) guarantees that two switches in the same control domain are assigned to the same controller. In addition, Constraint (A-7) checks whether two switches can be assigned to each of the potential controllers, which reveals whether they may belong to different control domains. Both constraints can actually be combined; constraint (A-8) is the complement of constraint (A-7). Constraint (A-9) determines the set of controllers. Constraint (A-10) requires the reliability of each control path [calculated by (5)] to be at least a predefined threshold. Constraints (A-11) to (A-13) ensure that the propagation delay, hop count, and link utilization of control paths remain below predetermined upper bounds.

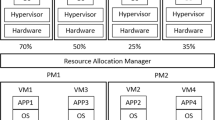

5 Proposed method

In this section, our approach is explained in detail. Figure 3 shows how our framework solves problem (A-1) to (A-15). First, an initial population of solutions is generated randomly. In the second part of the framework (Evaluation), every created placement is first passed to the clustering approach (Sect. 5.3), whose first and second phases perform switch assignment and control-path assignment, respectively. Once the assignments are determined, each solution is evaluated with objective functions (A-1) and (A-2). The optimization process is then applied to the evaluated population to find a Pareto-optimal set of solutions. The basic components of the framework are presented in the following subsections.

5.1 Constraint handling

Here, the technique for handling the constraints is described. Consider the following multi-objective optimization problem:

The degree of violation of each constraint is defined as follows:

where \( \delta \) is a tolerance allowed for the equality constraints.

The degree of violation for solution x is defined as follows:

We also define \( nv\left( x \right) \) as the number of constraints violated by solution x.
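The per-constraint definition and Eq. (12) are not reproduced above. A minimal sketch, assuming the standard definitions used in constrained evolutionary optimization: an inequality constraint \( g_{j}(x) \le 0 \) is violated by \( \max(0, g_{j}(x)) \), an equality constraint \( h_{j}(x) = 0 \) by \( \max(0, |h_{j}(x)| - \delta) \), and the degree of violation of x is the sum over all constraints. A reliability constraint such as \( R \ge T_{R} \) would be passed as \( g = T_{R} - R \). Function names and the tolerance value are hypothetical.

```python
def violations(g_values, h_values, delta=1e-4):
    """Per-constraint violation degrees (assumed standard definitions).

    g_values: values g_j(x) of inequality constraints written as g_j(x) <= 0.
    h_values: values h_j(x) of equality constraints h_j(x) = 0, relaxed by delta.
    """
    v = [max(0.0, g) for g in g_values]
    v += [max(0.0, abs(h) - delta) for h in h_values]
    return v


def cv(g_values, h_values, delta=1e-4):
    """Degree of violation of solution x: sum of per-constraint violations."""
    return sum(violations(g_values, h_values, delta))


def nv(g_values, h_values, delta=1e-4):
    """Number of constraints violated by solution x."""
    return sum(1 for v in violations(g_values, h_values, delta) if v > 0)


# Second inequality violated by 0.2; the equality is satisfied within delta.
print(cv([-0.5, 0.2], [0.00005]), nv([-0.5, 0.2], [0.00005]))  # prints 0.2 1
```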

Definition 1 (constrained dominance)

Suppose that every solution \( i \) has three attributes:

- (1)

The non-domination rank \( nr_{i} \), i.e., the ranking level to which the solution belongs.

- (2)

The number of violated constraints \( nv_{i} \)

- (3)

The degree of violations \( cv_{i} \), Eq. (12).

Solution i is said to constrained-dominate solution j if any of the following conditions holds:

- I.

\( nv_{i} = 0 \) and \( nv_{j} > 0 \).

- II.

(\( nv_{i} = 0 \) and \( nv_{j} = 0 \)) and (\( nr_{i} < nr_{j} \)).

- III.

(\( nv_{i} > 0 \) and \( nv_{j} > 0 \)) and (\( nv_{i} < nv_{j} \)).

- IV.

(\( nv_{i} > 0 \) and \( nv_{j} > 0 \)) and (\( nv_{i} = nv_{j} \)) and (\( cv_{i} < cv_{j} \)).

This concept is used for comparison of solutions in our algorithmic process.
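Definition 1 translates directly into a comparison function. The sketch below reads condition III as "i violates fewer constraints than j", the reading consistent with conditions I and IV; the `Evaluated` record is a hypothetical container for \( nr \), \( nv \) and \( cv \).

```python
from dataclasses import dataclass


@dataclass
class Evaluated:
    nr: int    # non-domination rank (1 = first front)
    nv: int    # number of violated constraints
    cv: float  # degree of violation, Eq. (12)


def constrained_dominates(i: Evaluated, j: Evaluated) -> bool:
    """True if solution i constrained-dominates solution j (Definition 1)."""
    if i.nv == 0 and j.nv > 0:            # I: a feasible solution beats an infeasible one
        return True
    if i.nv == 0 and j.nv == 0:           # II: both feasible, the better rank wins
        return i.nr < j.nr
    if i.nv > 0 and j.nv > 0:
        if i.nv < j.nv:                   # III: fewer violated constraints
            return True
        if i.nv == j.nv and i.cv < j.cv:  # IV: same count, smaller total violation
            return True
    return False
```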

Definition 2

A placement is represented as a K-vector, where K is the number of controllers.

An example placement is shown in Fig. 4. In the figure, the number of controllers is assumed to be \( K = 5 \), and the position of the i-th controller is stored in the i-th entry of the vector. In other words, the five controllers are located at nodes 2, 5, 11, 18 and 23.
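Definition 2 and the random initialization mentioned at the start of Sect. 5 can be sketched as follows, assuming nodes are numbered 1 to |V| and that a placement never repeats a node; the 24-node size and the seed are hypothetical.

```python
import random


def random_placement(num_nodes, k, rng):
    """A placement (Definition 2): a K-vector of distinct node positions in 1..num_nodes."""
    return rng.sample(range(1, num_nodes + 1), k)


def random_population(num_nodes, k, size, seed=0):
    """Initial population P_0 of randomly generated placements."""
    rng = random.Random(seed)
    return [random_placement(num_nodes, k, rng) for _ in range(size)]


fig4_placement = [2, 5, 11, 18, 23]  # the K = 5 example of Fig. 4
population = random_population(num_nodes=24, k=5, size=3)
```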

5.2 Solution representation

A solution of problem (A) consists of a placement of controllers, its cluster domains, and the paths in each cluster between the switches and their cluster centers (controller positions).

The algorithmic operators explained in Sect. 5.4 generate placements. Given a placement, each controller position acts as the center of a cluster, so K clusters and their centers are determined first. Section 5.3 explains how the members of these clusters are identified (the first phase) and how the paths connecting them to the cluster centers are determined (the second phase). This two-phase approach uses several key criteria in the clustering process.

5.3 Two-phase multi-criteria clustering mechanism

Figure 5 illustrates the clustering procedure for one switch of the network. The inputs of this procedure are a placement of controllers and a switch. In the first phase, the switch is placed in a cluster whose center is one of the controller positions. In the second phase, a reliable path between the switch and the selected cluster center is determined. This procedure is repeated for every switch under the given placement, so that all switches are assigned to the current set of controllers.

Figure 6 illustrates the result of applying the clustering approach to a topology: each switch belongs to one cluster, and the best path connecting it to the center (controller position) is determined.

The phases of our clustering approach are explained in the following.

First Phase: Suppose that a placement of controllers \( P = \left( p_{1}, p_{2}, \ldots, p_{K} \right) \) is given. There are then K clusters \( c_{i} \) with centers \( p_{i} \), \( i = 1, \ldots, K \). For each switch, we must decide in which cluster it should be placed, so each switch has K cluster candidates. Here, each cluster center \( p_{i} \) is the representative of the i-th cluster. Criteria are needed to evaluate each cluster candidate for a given switch s.

Three criteria are used in this phase. The first two are the propagation delay and the hop count between the switch position and the position of each cluster center (controller). The last criterion is the link utilization of the path through which the switch is connected to the cluster center.

If the rating of candidate \( p_{i} \) with respect to criterion \( c_{j} \) is denoted by \( a_{ij} \), the score of each candidate \( p_{i} \) can be calculated as:

where \( w_{j} \) represents the preference of criterion j.

By sorting the scores \( sc_{i} \), \( i = 1, \ldots, K \), the best cluster candidate for switch s is identified. Several methods exist in the literature for determining the weights \( w_{j} \) (Olson 2004; Opricovic and Tzeng 2004).
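Eq. (13) is not reproduced above. A minimal sketch, assuming it is the weighted sum \( sc_{i} = \sum_{j} w_{j} a_{ij} \) and that each cost criterion (delay, hop count, utilization; lower is better) is first normalized to a benefit value \( a_{ij} = \min_{i} x_{ij} / x_{ij} \), so that the highest score is best. The normalization, the candidate values and the weights are our assumptions, not taken from the paper.

```python
def normalize_costs(column):
    """Map a cost criterion (lower is better) to a benefit value min/x in [0, 1]."""
    best = min(column)
    return [best / x if x > 0 else 1.0 for x in column]


def cluster_scores(candidates, weights):
    """Score sc_i = sum_j w_j * a_ij for each cluster candidate p_i.

    candidates: one (propagation delay, hop count, link utilization) tuple per p_i.
    weights: criteria weights w_j, e.g. obtained with BWM.
    """
    columns = [normalize_costs(list(col)) for col in zip(*candidates)]
    return [sum(w * columns[j][i] for j, w in enumerate(weights))
            for i in range(len(candidates))]


def best_cluster(candidates, weights):
    """Index of the candidate cluster with the highest score."""
    scores = cluster_scores(candidates, weights)
    return max(range(len(scores)), key=scores.__getitem__)


# Hypothetical values for one switch and three controller positions.
candidates = [(10.0, 2, 0.5), (5.0, 3, 0.25), (20.0, 1, 0.8)]
weights = (4 / 7, 2 / 7, 1 / 7)
print(best_cluster(candidates, weights))  # prints 1
```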

We use a recent approach, the best–worst multi-criteria decision-making method (BWM) (Rezaei 2016), to determine the criteria weights \( w_{j} \).

The steps of BWM for deriving the criteria weights, as described in Rezaei (2016), are as follows.

Step 1. Determine a set of decision criteria. In this step, the set of criteria \( \left\{ c_{1}, c_{2}, \ldots, c_{n} \right\} \) used to make the decision is determined. In our case, propagation delay (\( c_{1} \)), hop count (\( c_{2} \)) and link utilization (\( c_{3} \)) are considered.

Step 2. Identify the best (e.g., most desirable, most important) and the worst (e.g., least desirable, least important) criteria, B and W, respectively. In this step, the decision-maker determines the best and the worst criteria in general; no comparison is made at this stage. In this paper, several choices of the best and worst criteria are considered; they are described in Sect. 6.

Step 3. Determine the preference of the best criterion over each of the other criteria using a number between 1 and 9. The resulting Best-to-Others vector is:

\( A_{B} = \left( a_{B1}, a_{B2}, \ldots, a_{Bn} \right) \)

where \( a_{Bj} \) denotes the preference of the best criterion B over criterion j. Clearly, \( a_{BB} = 1 \).

Step 4. Determine the preference of each criterion over the worst criterion using a number between 1 and 9. The resulting Others-to-Worst vector is:

\( A_{W} = \left( a_{1W}, a_{2W}, \ldots, a_{nW} \right)^{T} \)

where \( a_{jW} \) denotes the preference of criterion j over the worst criterion W. Clearly, \( a_{WW} = 1 \).

Step 5. Find the optimal weights \( \left( w_{1}^{*}, w_{2}^{*}, \ldots, w_{n}^{*} \right) \). The optimal weights are those for which, for each pair \( w_{B}/w_{j} \) and \( w_{j}/w_{W} \), we have \( w_{B}/w_{j} = a_{Bj} \) and \( w_{j}/w_{W} = a_{jW} \). To satisfy these conditions for all j as closely as possible, we seek the solution that minimizes the maximum of the absolute differences \( \left| w_{B}/w_{j} - a_{Bj} \right| \) and \( \left| w_{j}/w_{W} - a_{jW} \right| \) over all j. Therefore, the following problem is solved:

\( \min \xi \quad \text{s.t.} \quad \left| \frac{w_{B}}{w_{j}} - a_{Bj} \right| \le \xi, \;\; \left| \frac{w_{j}}{w_{W}} - a_{jW} \right| \le \xi \;\; \forall j, \quad \sum_{j} w_{j} = 1, \quad w_{j} \ge 0 \;\; \forall j \)

Solving problem (16) yields the optimal weights \( \left( w_{1}^{*}, w_{2}^{*}, \ldots, w_{n}^{*} \right) \) and \( \xi^{*} \). Finally, with this criteria weight vector and Eq. (13), the score of each cluster candidate is computed, and the cluster with the highest score hosts switch s.
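Problem (16) is a small nonlinear program and would normally be handed to a nonlinear solver. As a dependency-free illustration only, the sketch below approximates it by exhaustive search over a grid of weight vectors on the simplex (a swapped-in technique, not the authors' solver). The comparison vectors in the example are hypothetical and fully consistent (\( a_{BW} = a_{Bj} a_{jW} \)), so the exact optimum \( (4/7, 2/7, 1/7) \) with \( \xi^{*} = 0 \) lies on the grid.

```python
import math
from itertools import combinations


def simplex_grid(n, steps):
    """Yield every weight vector whose entries are multiples of 1/steps and sum to 1."""
    for bars in combinations(range(steps + n - 1), n - 1):  # stars and bars
        parts, prev = [], -1
        for b in bars:
            parts.append(b - prev - 1)
            prev = b
        parts.append(steps + n - 2 - prev)
        yield [p / steps for p in parts]


def bwm_deviation(w, best, worst, a_best, a_worst):
    """Largest |w_B/w_j - a_Bj| or |w_j/w_W - a_jW| over all criteria j."""
    if min(w) <= 0:
        return math.inf  # the ratios require strictly positive weights
    return max(max(abs(w[best] / w[j] - a_best[j]),
                   abs(w[j] / w[worst] - a_worst[j]))
               for j in range(len(w)))


def bwm_weights(a_best, a_worst, best, worst, steps=140):
    """Approximate optimal BWM weights and xi by exhaustive grid search."""
    def objective(w):
        return bwm_deviation(w, best, worst, a_best, a_worst)

    w_star = min(simplex_grid(len(a_best), steps), key=objective)
    return w_star, objective(w_star)


# Hypothetical comparisons: delay is best (index 0), utilization worst (index 2).
w, xi = bwm_weights(a_best=[1, 2, 4], a_worst=[4, 2, 1], best=0, worst=2)
```

A finer grid (larger `steps`) trades run time for accuracy when the comparisons are not fully consistent.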

Second phase: After finding the most suitable cluster for switch s, we identify the best path connecting the switch to its cluster center, denoted v. For this, all paths between s and v are evaluated with respect to four criteria: propagation delay, hop count, link utilization and reliability. The reliability factor is particularly important: it accounts for the failure probability of the components of each path and thus affects the choice of a suitable path. Based on these four criteria, we search for a path that satisfies all of them as far as possible.

Before starting this phase, thresholds must be fixed for all four criteria on each path connecting these two nodes. Let \( T_{D}, T_{H}, T_{L} \), and \( T_{R} \) denote these values [based on constraints (A-10)–(A-13)]. A path is regarded as feasible if the following conditions hold:

Note that constraint (A-10) requires \( R\left( path \right) \ge T_{R} \), which is equivalent to \( -R\left( path \right) \le -T_{R} \). Like the other constraints, constraints (A-10) to (A-13) are handled by the algorithm operators through Definition 1 and Eq. (12). In the second phase of clustering, however, the thresholds are also used directly when assigning switches to controllers. This greedy mechanism considerably increases the chance of generating feasible solutions for model (A-1) to (A-15) in a reasonable time, which makes the main algorithm more effective and faster. It also drives the search toward solutions with high reliability as well as low propagation latency, hop count and link utilization.

Definition 3

The feasibility of a path between s and v is defined as follows:

where \( D\left( \text{path} \right), H\left( \text{path} \right), L\left( \text{path} \right) \), and \( R\left( \text{path} \right) \) denote the propagation latency, hop count, link utilization, and reliability of the path, respectively.

If a path is feasible, \( \Delta \left( \text{path} \right) \) is zero; otherwise, by formula (22), it is positive. Hence, the smaller the value of \( \Delta \), the better the path.

If \( AP\left( s,v \right) \) denotes the set of all disjoint paths between s and v, the following model selects the path with the smallest \( \Delta \) among all possible paths:

Finally, after the second phase, for each switch s, the cluster in which the switch is located and the path from switch to the cluster center can be determined.
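Formula (22) and the feasibility conditions are not reproduced above. The sketch assumes \( \Delta(\text{path}) \) is the total excess over the four thresholds, with reliability written in the "≤" form \( -R(\text{path}) \le -T_{R} \) as in the text, so \( \Delta = 0 \) exactly for feasible paths. Because the terms have different units, a real implementation may prefer to normalize each excess by its threshold. The candidate paths and thresholds are hypothetical, and ties among feasible paths are broken by list order.

```python
from dataclasses import dataclass


@dataclass
class CandidatePath:
    nodes: tuple        # node sequence from switch s to cluster center v
    delay: float        # D(path), propagation latency
    hops: int           # H(path), hop count
    utilization: float  # L(path), link utilization
    reliability: float  # R(path), path reliability


def infeasibility(p, t_d, t_h, t_l, t_r):
    """Assumed Delta(path): total excess over the thresholds; 0 iff the path is feasible."""
    return (max(0.0, p.delay - t_d) + max(0.0, p.hops - t_h)
            + max(0.0, p.utilization - t_l)
            + max(0.0, -p.reliability + t_r))  # -R(path) <= -T_R


def select_control_path(paths, t_d, t_h, t_l, t_r):
    """Candidate path with the smallest Delta (the first one on ties)."""
    return min(paths, key=lambda p: infeasibility(p, t_d, t_h, t_l, t_r))


# Hypothetical thresholds and candidate paths from switch 7 to controller 1.
thresholds = dict(t_d=10.0, t_h=3, t_l=0.6, t_r=0.98)
paths = [CandidatePath((7, 2, 1), 8.0, 2, 0.7, 0.99),      # utilization too high
         CandidatePath((7, 5, 3, 1), 12.0, 3, 0.4, 0.985),  # delay too high
         CandidatePath((7, 6, 4, 1), 9.0, 3, 0.5, 0.985)]   # feasible
print(select_control_path(paths, **thresholds).nodes)  # prints (7, 6, 4, 1)
```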

5.4 Proposed algorithm (NS-MFCPO)

The basic operators of the proposed algorithm act mainly on the placement of controllers, while the clustering process computes the rest of a solution. The objective functions decide whether a solution is accepted, so they indirectly influence how clusters are formed.

In this section, the non-dominated sorting moth flame controller placement optimizer (NS-MFCPO) is proposed. This algorithm inherits its basic structure from MFO (Mirjalili 2015) and its recent multi-objective version NS-MFO (Savsani and Tawhid 2017). NS-MFO is combined with a local search and a perturbation mechanism to escape from local optima. The inputs of the algorithm are \( t_{\max}, P_{\text{size}}, G = \left( S, E \right), w_{\max} \) and \( P_{0} \), which denote, respectively, the maximum number of iterations (the termination criterion), the size of the population of moths (placements of controllers), the topology graph, the maximum number of iterations without any improvement in the quality of the Pareto solutions (the non-dominated set) found, and an initial, randomly created population.

An external set \( M \) records the Pareto solutions found so far; it is initialized as an empty set and is returned as the output. \( F_{\max} \) denotes the maximum number of flames (Mirjalili 2015; Savsani and Tawhid 2017), and \( wic \) is a counter that is increased by one whenever an iteration fails to improve the external Pareto set \( M \). First, a random population of moths is generated, and the maximum number of flames is initialized with the population size. As in NS-MFO, the elitist non-dominated sorting method and the diversity-preserving crowding distance approach of NSGA-II are used to sort the population into different non-domination levels and to compute crowding distances.

Using Definition 1, solutions can be compared and sorted into different levels. \( F = \left\{ F_{1}, F_{2}, \ldots \right\} \) contains the different fronts \( F_{i} \) (obtained by sorting the objective vectors of the solutions into ranking layers (Deb et al. 2000; Glover et al. 2000)), where the first front \( F_{1} \) is the non-dominated level, or Pareto front, of the solutions. The crowding distance is an effective measure for maintaining diversity among the obtained solutions. It estimates the density of solutions surrounding a particular solution in the population and is calculated as the average distance of the two points on either side of this point along each of the objectives (Deb et al. 2000; Glover et al. 2000). The number of flames in each iteration is obtained through Eq. (20), where \( t \) denotes the current iteration; the number of flames decreases as the iteration number increases.
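A minimal sketch of the crowding distance for one front, following the NSGA-II formulation of Deb et al.: for each objective, the solutions are sorted, the two boundary solutions receive infinite distance, and each interior solution accumulates the normalized gap between its two neighbors. The example front is hypothetical.

```python
import math


def crowding_distance(objectives):
    """NSGA-II crowding distance of each solution in one front (minimization)."""
    n = len(objectives)
    distance = [0.0] * n
    if n == 0:
        return distance
    for m in range(len(objectives[0])):
        order = sorted(range(n), key=lambda i: objectives[i][m])
        lo, hi = objectives[order[0]][m], objectives[order[-1]][m]
        distance[order[0]] = distance[order[-1]] = math.inf  # keep boundary points
        if hi == lo:
            continue  # all values equal: this objective adds no information
        for k in range(1, n - 1):
            gap = objectives[order[k + 1]][m] - objectives[order[k - 1]][m]
            distance[order[k]] += gap / (hi - lo)
    return distance


# Hypothetical front: (inter-controller latency, flow setup time) pairs.
print(crowding_distance([(1, 9), (2, 6), (4, 4), (7, 1)]))
```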

In each iteration, \( FS \) flames are selected as follows. Given the external Pareto set \( M \), the whole population \( P \), and the set \( P\left( F_{1} \right) \) of solutions in the first (best) front, i.e., the non-dominated solutions of the current population, \( \left[ FS/3 \right] \) members of each set are randomly selected to form the set of flames, where \( [\cdot] \) denotes the integer part of a number. \( M \) contains the best solutions since the beginning of the search and \( P\left( F_{1} \right) \) the best ones in the current iteration, so selecting flames from these sets intensifies the search around good solutions. Selecting randomly from the whole population, in turn, diversifies the search so that it does not concentrate too much on a limited set of solutions. These flames guide the movements of the moths in each iteration.
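Eq. (20) is not reproduced above. The sketch assumes the flame-reduction rule of the original MFO, \( \text{round}\left( F_{\max} - t \cdot \frac{F_{\max} - 1}{t_{\max}} \right) \), which decreases linearly from \( F_{\max} \) to 1, and then draws \( [FS/3] \) flames at random from each of \( M \), \( P \) and \( P(F_{1}) \). Half-up rounding and capping the draw at the size of a set with fewer than \( [FS/3] \) members are our assumptions; the text does not cover that case.

```python
import math
import random


def number_of_flames(t, t_max, f_max):
    """Assumed Eq. (20), the MFO rule: decreases linearly from F_max (t = 0) to 1 (t = t_max)."""
    return int(math.floor(f_max - t * (f_max - 1) / t_max + 0.5))  # round half up


def select_flames(archive, population, first_front, fs, rng):
    """Draw [FS/3] flames at random from each of M, P and P(F_1) (all given as lists)."""
    per_set = fs // 3
    flames = []
    for pool in (archive, population, first_front):
        flames += rng.sample(pool, min(per_set, len(pool)))  # cap: our assumption
    return flames


print([number_of_flames(t, 100, 30) for t in (0, 50, 100)])  # prints [30, 16, 1]
```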

The path-relinking strategy (Glover et al. 2000) is used as our local search procedure to update the positions of the moths and generate the child population \( Q_{t} \). For each moth i, a flame \( f_{i} \) is selected from the set of flames. Then, path-relinking(\( i, f_{i} \)) is performed to obtain the best solution on the trail starting at i and ending at \( f_{i} \); an example of such a trail is shown in Fig. 7. After the whole trail is generated, all the solutions found are evaluated with the objective functions, and the one with the lowest weighted sum of the objective functions is added to \( Q_{t} \). This process is repeated for all the moths in \( P_{t} \).
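Fig. 7 is not reproduced here, so the sketch assumes one common way to build the trail between two placements: at each step, one controller position of the current placement that is absent from the guiding flame is replaced by a position of the flame that is absent from the current placement, which avoids duplicate nodes. Each intermediate placement is scored by the weighted sum of its objectives; `evaluate` stands in for the two-phase clustering plus (A-1) and (A-2), and `toy_objectives` is hypothetical.

```python
def relinking_trail(start, guide):
    """Placements visited when walking from `start` toward `guide`, one swap per step.

    Each step replaces one position absent from the guide with one absent from the
    current placement, so no node is duplicated; the last step reaches the guide's
    set of positions.
    """
    current = list(start)
    to_remove = [p for p in start if p not in guide]
    to_add = [p for p in guide if p not in start]
    trail = []
    for old, new in zip(to_remove, to_add):
        current[current.index(old)] = new
        trail.append(list(current))
    return trail


def path_relinking(start, guide, evaluate, weights):
    """Trail member with the lowest weighted sum of objectives (None if no trail)."""
    trail = relinking_trail(start, guide)
    if not trail:
        return None
    return min(trail, key=lambda p: sum(w * f for w, f in zip(weights, evaluate(p))))


def toy_objectives(placement):
    """Hypothetical stand-in for evaluating (A-1) and (A-2) after clustering."""
    s = sum(placement)
    return (s, abs(s - 9))


print(path_relinking([1, 2, 3], [1, 5, 6], toy_objectives, (0.5, 0.5)))  # prints [1, 5, 3]
```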

The parent and child populations \( P_{t} \) and \( Q_{t} \) are then combined, and non-dominated sorting is performed on the combined population to obtain its fronts. Each solution of the first front is added to \( M \) if it is not dominated by any member of \( M \). Whenever a solution is added, all members of \( M \) dominated by it are removed, so that \( M \) remains a non-dominated set. If no solution is added to \( M \), no improvement has occurred in this iteration, and the without-improvement counter \( wic \) is increased by one. Next, the crowded-comparison operator (Deb et al. 2000), adapted here to constrained optimization, is used to sort the combined population. The constrained-crowded-comparison operator (\( \prec_{c} \)) compares two solutions \( i \) and \( j \) using the concepts of Definition 1 together with the crowding distance strategy (Deb et al. 2000): \( i \prec_{c} j \) if either of the following conditions holds:

- I.

i constrained-dominates j according to Definition 1.

- II.

(\( nv_{i} = nv_{j} \)) and (\( cv_{i} = cv_{j} \)) and (\( cd_{i} > cd_{j} \)).

where \( cd_{i} \) denotes the crowding distance of solution i.
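The update of the external set \( M \) described above can be sketched as follows. For simplicity the sketch assumes feasible candidates and plain Pareto dominance (minimization) on objective vectors, skips duplicates, and returns a flag that the caller can use to increase \( wic \) when nothing was added; the example vectors are hypothetical.

```python
def dominates(a, b):
    """Pareto dominance for minimization."""
    return all(x <= y for x, y in zip(a, b)) and any(x < y for x, y in zip(a, b))


def update_archive(archive, candidates):
    """Add non-dominated candidates to the external set M; return (M, improved)."""
    improved = False
    for c in candidates:
        if any(m == c or dominates(m, c) for m in archive):
            continue  # c is dominated by (or identical to) a member of M
        archive = [m for m in archive if not dominates(c, m)]  # drop members c dominates
        archive.append(c)
        improved = True
    return archive, improved


M, improved = update_archive([(1, 5), (3, 3)], [(2, 2), (0, 9), (4, 4)])
print(M, improved)  # prints [(1, 5), (2, 2), (0, 9)] True
```

If `improved` is False, the caller increases \( wic \) by one.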

After sorting, the best \( P_{\text{size}} \) moths are selected for the next iteration. If the without-improvement counter reaches its threshold \( w_{\max} \), the population is perturbed. In this case, a random integer r is chosen in the interval \( \left[ 1, P_{\text{size}} \right] \), and r moths update their positions. For each of them, a random integer \( p_{r} \) is selected in the interval \( \left[ 1, \lfloor K/2 \rfloor \right] \), where \( \lfloor K/2 \rfloor \) denotes the largest integer less than or equal to \( K/2 \). Then \( p_{r} \) randomly chosen entries of the selected placement are replaced with other randomly chosen node positions. The perturbation mechanism partially restarts the search with new moth positions in order to escape from local optima. The output of the algorithm is the set of best solutions found, i.e., the external set \( M \).
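A minimal sketch of the perturbation step, intended to be called when \( wic \) reaches \( w_{\max} \). It reads "exchanged randomly with other numbers" as replacing \( p_{r} \) entries with distinct node positions not already in the placement, so no controller is duplicated; that reading, the seed and the example population are assumptions, and the topology must have at least \( K + \lfloor K/2 \rfloor \) nodes.

```python
import random


def perturb(population, num_nodes, rng):
    """Perturbation step, intended to be called when wic reaches w_max.

    Picks r in [1, P_size] moths; in each, p_r in [1, floor(K/2)] entries are replaced
    by distinct node positions (1..num_nodes) not already in that placement.
    """
    k = len(population[0])
    r = rng.randint(1, len(population))
    for idx in rng.sample(range(len(population)), r):
        moth = list(population[idx])
        p_r = rng.randint(1, max(1, k // 2))
        free = [v for v in range(1, num_nodes + 1) if v not in moth]
        for pos, new in zip(rng.sample(range(k), p_r), rng.sample(free, p_r)):
            moth[pos] = new
        population[idx] = moth
    return population


population = [[2, 5, 11, 18, 23], [1, 3, 4, 6, 8], [9, 10, 12, 14, 20], [7, 13, 15, 16, 24]]
perturb(population, num_nodes=24, rng=random.Random(7))
```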

6 Evaluation method

This section outlines the methodology used for the results in Sect. 7. Five scenarios are followed in the implementation. The proposed flow setup time model is validated in two stages. In the first scenario, we show that the new flow setup time model, referred to as the “generalized model,” encompasses the model proposed by He et al. (2017), referred to as the “baseline model.” Two topologies from the Internet Topology Zoo (Knight et al. 2011), Abilene and Internet2, are used as case studies. The evaluations are performed for different numbers of controllers K: \( K \in \left\{ 1, 2, \ldots, 7 \right\} \) for Abilene and \( K \in \left\{ 1, 2, \ldots, 9 \right\} \) for Internet2. For each K, the proposed algorithm is run 30 times and the average values are recorded. In the second scenario, the results of NS-MFCPO on the generalized model, under different priorities for the clustering process, are compared with those on the baseline model. An important advantage of the generalized model over the baseline model is its flexibility: it can be applied with different priorities in the assignment process. For this purpose, the generalized model is run under several priority assumptions, using the four clustering priority vectors listed in Table 3. As mentioned before, three criteria, “propagation delay,” “hop count,” and “link utilization,” are used in the first phase of the clustering method. According to Table 3, PV1 weights the three criteria almost equally, PV2 focuses mainly on the first two, PV3 on the second, and PV4 mainly on the third. These vectors are used in Steps 3 and 4 of the first phase of the multi-criteria clustering mechanism (see Sect. 5.3).

In addition to flow setup time, two other indicators, the “maximum link utilization of control paths” (MLUCP) and “path loss,” are used to compare the obtained placements. MLUCP is an important metric for evaluating how well the obtained placements distribute control traffic in the network. It denotes the highest link utilization among all control paths from switches to their assigned controllers, as stated in Eq. 21.

where P is a placement of controllers and \( A\left( P \right) \) denotes the paths assigned between switches and controllers under placement P. In other words, given a placement P as cluster centers, the clustering approach (Sect. 5.3) determines the controller assigned to each switch i as well as its control path. Each control path has a link utilization, as defined in Sect. 3.3, and \( \text{MLUCP} \) is the highest link utilization among these paths. The Internet2 topology with \( K \in \left\{ 3, 5, 7, 8 \right\} \) is used as the case study in this scenario. The second metric, “path loss,” introduced by Hu et al. (2013), is an important reliability metric used in several controller placement papers to evaluate the south-bound reliability of the placements produced by algorithms (Zhong et al. 2016; Hu et al. 2014). Let m denote the total number of control paths from switches to controllers in the control network, let \( F_{\text{cmp}} \) denote the failure probability of component \( \text{cmp} \in V \cup E \), and let \( d_{\text{cmp}} \) denote the number of control paths broken if \( \text{cmp} \) fails. The expected percentage of control path loss \( \varphi \) can then be defined as follows (Hu et al. 2013):

The failure probabilities of a node \( n \) and a link \( l \) are denoted \( F_{n} \) and \( F_{l} \), i.e., \( F_{\text{cmp}} = F_{n} \) for nodes and \( F_{\text{cmp}} = F_{l} \) for links. For all topologies, we set the failure probability of every node and every link to the same values, \( \lambda_{\text{node}} = 0.01 \) and \( \lambda_{\text{link}} = 0.02 \), respectively.
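Eq. 21 and the path-loss equation are not reproduced above. The sketch assumes that the link utilization of a control path is that of its most loaded link, where a link's load is the sum of the control loads of the switches whose control paths traverse it, so MLUCP is the maximum over all links carrying control traffic. For path loss it uses \( \varphi = \frac{1}{m} \sum_{\text{cmp}} F_{\text{cmp}} d_{\text{cmp}} \) (multiply by 100 for a percentage), as we read Hu et al. (2013), with the failure probabilities set in the text. The example paths, loads and capacities are hypothetical.

```python
from collections import Counter


def path_links(path):
    """Undirected links traversed by a node sequence."""
    return [frozenset(e) for e in zip(path, path[1:])]


def mlucp(control_paths, loads, capacity):
    """Assumed Eq. 21: highest utilization among links that carry control traffic.

    control_paths: {switch: node sequence from the switch to its controller}
    loads: {switch: control load in Mbps}; capacity: {frozenset({u, v}): Mbps}
    """
    link_load = Counter()
    for switch, path in control_paths.items():
        for link in path_links(path):
            link_load[link] += loads[switch]
    return max((load / capacity[link] for link, load in link_load.items()), default=0.0)


def expected_path_loss(control_paths, f_node=0.01, f_link=0.02):
    """phi = (1/m) * sum over components of F_cmp * d_cmp, as a fraction of paths."""
    d = Counter()  # d_cmp: number of control paths broken if component cmp fails
    for path in control_paths.values():
        d.update(("node", v) for v in set(path))
        d.update(("link", e) for e in set(path_links(path)))
    expected_broken = sum((f_node if kind == "node" else f_link) * count
                          for (kind, _), count in d.items())
    return expected_broken / len(control_paths)


# Hypothetical control paths toward a controller at node 3.
paths = {1: [1, 2, 3], 2: [2, 3], 4: [4, 3]}
loads = {1: 100.0, 2: 150.0, 4: 200.0}
capacity = {frozenset((1, 2)): 1000.0, frozenset((2, 3)): 1000.0, frozenset((3, 4)): 2000.0}
print(mlucp(paths, loads, capacity), expected_path_loss(paths))
```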

The third scenario validates the proposed model (A-1) to (A-15). For this purpose, the results of running NS-MFCPO on this model are compared with those obtained on the same model without constraints (A-10) to (A-13). This evaluation reveals the role of the added constraints and thus of the whole model. Four metrics are used to evaluate the solutions obtained in each case: (1) maximum node-to-controller latency, (2) maximum node-to-controller hop count, (3) maximum link utilization of control paths (\( \text{MLUCP} \)), and (4) maximum path reliability. The Internet2 topology is used for this analysis, and NS-MFCPO is applied 30 times for each number of controllers. Dividing the value of each performance metric for the model with constraints by the value for the model without constraints gives the relative performance values.

The last two scenarios analyze the performance of NS-MFCPO. In the fourth scenario, NS-MFCPO is compared with NSGA-II, an algorithm successfully applied to the controller placement problem (Jalili et al. 2015). The performance of the two algorithms on the proposed model (A-1) to (A-15) is recorded as average values over 30 runs, for different topologies and numbers of controllers. In our evaluations, the “coverage metric” introduced by Zitzler et al. (2000) is used. Suppose that \( M_{\text{A}} \) and \( M_{\text{B}} \) are approximations of the true Pareto front obtained by running algorithms A and B. This metric measures the extent to which the solution set \( M_{\text{B}} \) is covered by the solution set \( M_{\text{A}} \), mapping the pair (\( M_{\text{A}}, M_{\text{B}} \)) into the interval [0, 1].
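The coverage metric can be sketched as follows, assuming Zitzler's definition for minimization: a point a covers b if a is no worse than b in every objective, and C(A, B) is the fraction of points in B covered by at least one point in A. The two example sets are hypothetical and illustrate why both directions must be computed.

```python
from fractions import Fraction


def covers(a, b):
    """a covers b (minimization): a is no worse than b in every objective."""
    return all(x <= y for x, y in zip(a, b))


def coverage(set_a, set_b):
    """Zitzler's C(A, B): share of points in B covered by at least one point in A."""
    covered = sum(1 for b in set_b if any(covers(a, b) for a in set_a))
    return Fraction(covered, len(set_b))


A = [(1, 5), (3, 2)]
B = [(2, 6), (4, 3), (0, 10)]
print(coverage(A, B), coverage(B, A))  # prints 2/3 0
```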

In this metric, C(\( M_{\text{A}}, M_{\text{B}} \)) = 1 indicates that all solutions in \( M_{\text{B}} \) are covered by solutions in \( M_{\text{A}} \), meaning that algorithm A strictly outperforms algorithm B, whereas C(\( M_{\text{A}}, M_{\text{B}} \)) = 0 indicates that none of the solutions in \( M_{\text{B}} \) is covered by solutions in \( M_{\text{A}} \). Since C(\( M_{\text{B}}, M_{\text{A}} \)) = 1 − C(\( M_{\text{A}}, M_{\text{B}} \)) does not necessarily hold, both C(\( M_{\text{A}}, M_{\text{B}} \)) and C(\( M_{\text{B}}, M_{\text{A}} \)) must be calculated. Overall, the better algorithm is the one whose solutions cover a larger share of the other algorithm's solutions. The last scenario analyzes the output of the algorithm across different runs, using the Internet2 topology with four controllers as the case study. The results show how often, in percent, each node is selected as a controller location.

The rest of this section describes the system specification and the parameters used in our evaluations. All experiments are run on an Intel Core i7 CPU at 4 GHz with 16 GB RAM, running Windows 7 and MATLAB R2014b. We use \( T_{R} = 0.98 \), \( T_{H} = 0.4 \cdot Hop_{\text{Diam}} \), \( T_{D} = 0.4 \cdot Delay_{\text{Diam}} \), and \( T_{L} = \left( 1 - \frac{K}{\left| V \right|} \right) \cdot 0.80 \) as the thresholds for reliability, hop count, propagation delay, and link utilization, respectively, where \( Hop_{\text{Diam}} \) and \( Delay_{\text{Diam}} \) denote the maximum hop count and the maximum propagation delay between any pair of switches in the graph. The link utilization threshold varies with the topology size and the number of controllers K because a fixed threshold would not be appropriate for all values of K on a given topology.
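The threshold settings above can be computed from the topology as in the sketch below. It assumes an undirected, connected graph given as (u, v, delay) triples and obtains \( Hop_{\text{Diam}} \) and \( Delay_{\text{Diam}} \) with Floyd-Warshall; the helper names and the 4-node line topology are hypothetical.

```python
import math
from itertools import product


def shortest_paths(n, links, weighted):
    """Floyd-Warshall on an undirected graph; counts hops when weighted is False."""
    dist = [[0 if i == j else math.inf for j in range(n)] for i in range(n)]
    for u, v, delay in links:
        cost = delay if weighted else 1
        dist[u][v] = dist[v][u] = min(dist[u][v], cost)
    for k, i, j in product(range(n), repeat=3):  # k varies slowest, as required
        if dist[i][k] + dist[k][j] < dist[i][j]:
            dist[i][j] = dist[i][k] + dist[k][j]
    return dist


def thresholds(n, links, k):
    """T_R, T_H, T_D and T_L as set for the experiments (graph must be connected)."""
    hop_diam = max(max(row) for row in shortest_paths(n, links, weighted=False))
    delay_diam = max(max(row) for row in shortest_paths(n, links, weighted=True))
    return {"T_R": 0.98, "T_H": 0.4 * hop_diam, "T_D": 0.4 * delay_diam,
            "T_L": (1 - k / n) * 0.80}


# Hypothetical 4-switch line topology with link delays in ms, K = 1 controller.
print(thresholds(4, [(0, 1, 1.0), (1, 2, 2.0), (2, 3, 3.0)], k=1))
```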

In the following, the flow generation scheme used in our evaluations is described. The flow density Dens is a parameter that determines how sparse the flow distribution inside the topology is. A flow profile F represents the set of current flows in the network, i.e., one flow distribution. Each element \( f \in F \) is a source–destination node pair, and its forwarding path is represented as an ordered set of node pairs \( p_f \) from source to destination. The flow profiles are generated with the same procedure as in He et al. (2017), as follows. In general, there are \( N = |V| \times |V| \) source–destination pairs, where |V| is the number of switches, and N*Dens of these pairs are randomly selected. For the reasons mentioned in the related work, and given the findings of He et al. (2017), which show that dynamic and static placement models yield almost the same results when the traffic density is higher than 0.6, the traffic is considered static with a high density Dens = 0.9 in all scenarios. In the first scenario, for each k, NS-CPMFO is executed 30 times, and in each run N*Dens flows are randomly generated. In the second scenario, once a flow profile is generated, all runs of NS-CPMFO on the baseline and generalized models for different K are performed on this flow profile, so that the comparison environments are similar and fair. In the third and last scenarios, once a flow profile is created, all experiments are carried out with that flow profile.
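The selection step described above can be sketched as sampling without replacement from all ordered source–destination pairs. This is a minimal Python sketch under stated assumptions: N*Dens is rounded down, self-pairs are kept only because the text counts \( N = |V| \times |V| \) pairs, and the function name and seed are hypothetical.

```python
import random
from itertools import product


def generate_flow_profile(num_switches, dens, rng):
    """Randomly select N*Dens source-destination pairs out of N = |V|*|V| ordered pairs.

    Each returned (src, dst) tuple is one flow f; self-pairs (src == dst) are included
    in the candidate set because N = |V|*|V| counts them.
    """
    pairs = list(product(range(num_switches), repeat=2))  # all N = |V|*|V| pairs
    num_flows = int(len(pairs) * dens)                    # round N*Dens down
    return rng.sample(pairs, num_flows)                   # sampled without replacement


rng = random.Random(42)  # fixed seed so that one profile can be reused across runs
profile = generate_flow_profile(34, 0.9, rng)  # Internet2-sized example, Dens = 0.9
print(len(profile))  # 1040
```

For the second scenario, the profile would be generated once and passed unchanged to every run on both models; for the first scenario, the function would be called again before each run.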

Regarding the variable control loads imposed by switches on controllers, in our simulations these loads are randomly generated in the interval \( [l_{\min}, l_{\max}] = [100\,\text{Mbps}, 200\,\text{Mbps}] \). Link capacities are also drawn randomly from the interval \( [cpl_{\min}, cpl_{\max}] = [1000\,\text{Mbps}, 2000\,\text{Mbps}] \).
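A minimal Python sketch of this parameter setup is shown below. The text only states that loads and capacities are generated "randomly" within the given intervals, so the uniform distribution used here is an assumption, as are the constant and function names.

```python
import random

LOAD_RANGE_MBPS = (100.0, 200.0)        # [l_min, l_max]: control load per switch
CAPACITY_RANGE_MBPS = (1000.0, 2000.0)  # [cpl_min, cpl_max]: capacity per link


def sample_control_loads(switches, rng):
    """Draw each switch's control load uniformly from [l_min, l_max] (assumed uniform)."""
    return {s: rng.uniform(*LOAD_RANGE_MBPS) for s in switches}


def sample_link_capacities(links, rng):
    """Draw each link's capacity uniformly from [cpl_min, cpl_max] (assumed uniform)."""
    return {e: rng.uniform(*CAPACITY_RANGE_MBPS) for e in links}


rng = random.Random(7)
loads = sample_control_loads(range(4), rng)
capacities = sample_link_capacities([(0, 1), (1, 2), (2, 3)], rng)
print(loads, capacities)
```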

7 Results

In this section, based on the evaluation methodology described in Sect. 6, the results of the experimental studies are presented through several visualization approaches.

7.1 Validation of flow setup time model

7.1.1 Scenario (1) comparison with similar assumptions

The NS-CPMFO algorithm is run separately, with the same parameters and the same flow profiles, on both the baseline and the generalized model. Since the baseline model assigns switches to controllers based only on the shortest propagation latency, the same assignment method is used for the generalized model. Moreover, in this simulation, constraints (A-5) to (A-9) are excluded from the generalized model so that both models are compared under the same assumptions. We run the simulation 50 times for the Abilene and Internet2 topologies. Using 95% confidence intervals, the results show that the two models perform the same with respect to the flow setup time metric. The results are shown in Fig. 8.
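The text does not say how the 95% confidence intervals were computed, so the following Python sketch is only one plausible reading: a normal-approximation interval for the mean flow setup time over the runs, plus a rough overlap check between the two models. Overlapping intervals are a coarse indication of no significant difference, not a formal hypothesis test; the function names are hypothetical.

```python
import statistics


def mean_with_ci95(samples):
    """Return (mean, half-width) of a 95% confidence interval for the mean.

    Uses the normal quantile z = 1.96; for 50 runs the Student-t quantile
    (about 2.01) would give a slightly wider interval.
    """
    mean = statistics.mean(samples)
    z = statistics.NormalDist().inv_cdf(0.975)
    half_width = z * statistics.stdev(samples) / len(samples) ** 0.5
    return mean, half_width


def intervals_overlap(ci_a, ci_b):
    """Rough check: do the intervals mean_a ± half_a and mean_b ± half_b intersect?"""
    (mean_a, half_a), (mean_b, half_b) = ci_a, ci_b
    return abs(mean_a - mean_b) <= half_a + half_b


# Usage: pass the per-run flow setup times of each model, e.g.
# intervals_overlap(mean_with_ci95(baseline_runs), mean_with_ci95(generalized_runs))
```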

7.1.2 Scenario (2) comparisons with different priorities

This scenario compares the results of NS-CPMFO on the generalized model, using different priorities for the clustering process, with those of the baseline model under its own assumptions. Figure 9a–c shows the results for three metrics, \( {\text{MLUCP}} \), path loss, and flow setup time, respectively, to evaluate the quality of the obtained placements for different numbers of controllers. The color of each bar represents a priority vector, as indicated in the figures. To compare the generalized model with the baseline model, the values of the three metrics are divided by their counterparts for the baseline model; we refer to these ratios as relative performance metrics. The values of the relative metrics in Fig. 9a, b are less than 1 for all numbers of controllers, i.e., the results for the two metrics \( {\text{MLUCP}} \) and path loss are better for the generalized model than for the baseline model in all cases. Based on Fig. 9a, b, the relative metrics reach their best values for PV4, which is reasonable because PV4 places the main emphasis on link utilization and on distributing control paths across the whole network. For the \( {\text{MLUCP}} \) metric, an improvement of 40–50% can be seen with PV4, and the path loss metric improves by 20–28%. Meanwhile, PV1, which places nearly equal emphasis on the three criteria, performs almost as well as PV4. Figure 9c shows that the flow setup time values for the generalized model are slightly larger, by at most 13%, than those for the baseline model.
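The relative performance metrics are simple ratios, and since all three metrics are "lower is better", a ratio r corresponds to an improvement of \( (1 - r) \times 100\% \). The Python sketch below shows this arithmetic; the metric values are hypothetical and are not the values plotted in Fig. 9.

```python
def relative_metrics(generalized, baseline):
    """Divide each metric of the generalized model by its baseline counterpart."""
    return {name: generalized[name] / baseline[name] for name in baseline}


def improvement_percent(ratio):
    """For metrics to be minimized: positive means the generalized model is better."""
    return (1.0 - ratio) * 100.0


# Hypothetical averages for one number of controllers (not the values of Fig. 9)
baseline = {"MLUCP": 0.60, "path_loss": 1.00, "flow_setup_time": 10.0}
generalized = {"MLUCP": 0.30, "path_loss": 0.75, "flow_setup_time": 11.3}
rel = relative_metrics(generalized, baseline)
for name, ratio in rel.items():
    print(f"{name}: ratio={ratio:.2f}, improvement={improvement_percent(ratio):+.0f}%")
```

With these made-up numbers, the ratios 0.50, 0.75, and 1.13 correspond to a 50% and a 25% improvement and a 13% increase in flow setup time, mirroring the shape of the trade-off described above.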

To sum up the validation of the model, the generalized model encompasses the baseline one: if the priority vector focuses strictly on propagation delay, the two models obtain the same results. However, by considering different priority vectors, the generalized model can obtain solutions with better path loss and maximum link utilization, at the cost of a small increase in flow setup time. The flow setup time for the new model is larger because it accounts for waiting times in certain situations that the baseline model does not take into account.

7.2 Model’s constraints analysis

7.2.1 Comparison of the model with and without constraints

As mentioned in Sect. 5, NS-CPMFO is run for different numbers of controllers on the model with and without constraints, using the fair priority vector PV1. Due to page limitations, only the results for k = 4 are shown in Fig. 10. According to the average values of the relative performance metrics, the constraints have their greatest influence on reducing the hop count and link utilization metrics. It should also be noted that all values for the whole model (with all constraints) are considerably better than their counterparts for the model without constraints.

7.3 Algorithmic performance analysis

7.3.1 Algorithm comparisons

Table 4 gathers the coverage metric values, averaged over instance topologies, for the solutions obtained by the two algorithms NS-CPMFO and NSGA-II (Jalili et al. 2015) on five topologies extracted from the Internet Topology Zoo. For NSGA-II, the values in the first row denote the coverage of the NSGA-II solutions by the solutions achieved by NS-CPMFO, and the values in the second row denote the converse. For example, for the AttMpls topology with n = 25 switches and k = 4 controllers, we have \( C(M_{\text{NS-CPMFO}}, M_{\text{NSGA-II}}) = 0.78 \) and \( C(M_{\text{NSGA-II}}, M_{\text{NS-CPMFO}}) = 0.39 \).

For all case studies, the solutions found by NS-CPMFO cover a considerably larger share of the solutions obtained by NSGA-II than vice versa. This indicates that NS-CPMFO performs much better than NSGA-II. Moreover, the advantage of our algorithm over NSGA-II grows as the size of the search space increases.

7.3.2 Scenario (5) algorithm results analysis

Randomized algorithms may produce different output solutions in different runs. However, their trend can be identified by following the trail of the obtained solutions. Like any other Pareto-based algorithm, NS-CPMFO aims at finding a set of Pareto optimal solutions. However, administrators may want to know which locations the algorithm favors most as controller positions. For this purpose, we run the algorithm 100 times for the Internet2 topology with k = 4 controllers and N = 34 nodes. Figure 11 depicts the results. The selection percentage of each node across all runs is recorded and illustrated with different colors in four intervals. Some nodes are rarely selected by the algorithm, indicating that they are not appropriate as controller positions (depicted in white). On the other hand, two nodes, 6 (Denver) and 30 (Cleveland), appeared in more than 60% of the obtained results. Since four controllers are desired, two further positions are needed besides these two nodes. Among the nodes whose selection percentages were between 40% and 60%, those with the highest percentages were 25 and 27. To sum up, for four controllers, our algorithm focuses mainly on the four nodes 6, 30, 25, and 27. The selection of these nodes is reasonable with respect to the two objective functions, as both are taken into account to some extent.
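The node-selection analysis can be sketched as counting, over repeated runs, how often each node appears as a controller site, and then picking sites in the order used in the paragraph above (nodes above 60% first, then the highest ones in the 40–60% band). This Python sketch assumes that each run returns a Pareto set of placements and that a node is counted at most once per run; node IDs are 1-based, and the function names and the five-run toy data are hypothetical.

```python
from collections import Counter


def selection_percentages(runs, num_nodes):
    """Percentage of runs in which each node (1..num_nodes) appears as a controller site.

    runs: one entry per run; each entry is that run's Pareto set, given as a list of
    placements (tuples of node ids). A node is counted at most once per run.
    """
    counts = Counter()
    for pareto_set in runs:
        counts.update({node for placement in pareto_set for node in placement})
    return {v: 100.0 * counts[v] / len(runs) for v in range(1, num_nodes + 1)}


def candidate_sites(pcts, k, high=60.0, mid=40.0):
    """Take nodes above `high` percent first, then fill up to k from the [mid, high] band."""
    strong = sorted((v for v, p in pcts.items() if p > high), key=lambda v: -pcts[v])
    moderate = sorted((v for v, p in pcts.items() if mid <= p <= high), key=lambda v: -pcts[v])
    return (strong + moderate)[:k]


# Hypothetical output of 5 runs on a 5-node topology
runs = [[(1, 2), (1, 3)], [(1, 2)], [(2, 4)], [(1, 5)], [(1, 3), (2, 3)]]
pcts = selection_percentages(runs, num_nodes=5)
print(pcts)                        # {1: 80.0, 2: 80.0, 3: 40.0, 4: 20.0, 5: 20.0}
print(candidate_sites(pcts, k=3))  # [1, 2, 3]
```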

8 Conclusion

In this work, different aspects of deploying multiple controllers in SDN networks were presented. The work mainly proposed a framework for solving the multi-objective controller placement problem that considers both the control plane architecture and the relation between the control and data planes. Within the framework, we proposed a general flow setup time model and discussed all the scenarios that arise for control latencies in the packet transmission process. The other important objective function considered in the framework was inter-controller latency, which aims to reduce the communication delay among controllers. Since the proposed controller placement model is multi-objective, we proposed a multi-objective algorithm called Non-dominated Sorting Moth Flame Controller Placement Optimizer (NS-CPMFO). This algorithm uses a new clustering approach based on the Best–Worst multi-criteria decision-making method (BWM) to assign switches to controllers, and a heuristic approach to assign paths between switches and their controllers. Our evaluations on real wide area network (WAN) topologies show the efficiency of our framework.

References

Akyildiz IF, Lee A, Wang P, Luo M, Chou W (2014) A roadmap for traffic engineering in SDN-OpenFlow networks. Comput Netw 71:1–30

Bari MF, Roy AR, Chowdhury SR, Zhang Q, Zhani MF, Ahmed R, Boutaba R (2013) Dynamic controller provisioning in software defined networks. In: 9th International conference on network and service management (CNSM). IEEE, pp 18–25

Berde P, Gerola M, Hart J, Higuchi Y, Kobayashi M, Koide T et al (2014) ONOS: towards an open, distributed SDN OS. In: Proceedings of the third workshop on Hot topics in software defined networking. ACM, pp 1–6

Deb K, Agrawal S, Pratap A, Meyarivan T (2000) A fast elitist non-dominated sorting genetic algorithm for multi-objective optimization: NSGA-II. In: International conference on parallel problem solving from nature. Springer, Berlin, pp 849–858

Gao C, Wang H, Zhu F, Zhai L, Yi S (2015) A particle swarm optimization algorithm for controller placement problem in software defined network. In: International conference on algorithms and architectures for parallel processing. Springer, pp 44–54

Gao X, Kong L, Li W, Liang W, Chen Y, Chen G (2017) Traffic load balancing schemes for devolved controllers in mega data centers. IEEE Trans Parallel Distrib Syst 28(2):572–585

Glover F, Laguna M, Martí R (2000) Fundamentals of scatter search and path relinking. Control Cybern 29(3):653–684

Hassas Yeganeh S, Ganjali Y (2012) Kandoo: a framework for efficient and scalable offloading of control applications. In: Proceedings of the first workshop on Hot topics in software defined networks. ACM, pp 19–24

He M, Basta A, Blenk A, Kellerer W (2017) Modeling flow setup time for controller placement in SDN: evaluation for dynamic flows. In: IEEE international conference on communications (ICC). IEEE, pp 1–7

Heller B, Sherwood R, McKeown N (2012) The controller placement problem. In: Proceedings of the 1st workshop on Hot topics in software defined networks. ACM, pp 7–12

Hu Y, Wang W, Gong X, Que X, Cheng S (2013) Reliability-aware controller placement for software-defined networks. In: IFIP/IEEE international symposium on integrated network management (IM 2013). IEEE, pp 672–675

Hu Y, Wang W, Gong X, Que X, Cheng S (2014) On reliability-optimized controller placement for software-defined networks. China Commun 11(2):38–54

Jain S, Kumar A, Mandal S, Ong J, Poutievski L, Singh A et al (2013) B4: experience with a globally-deployed software defined WAN. ACM SIGCOMM Comput Commun Rev 43(4):3–14

Jalili A, Ahmadi V, Keshtgari M, Kazemi M (2015) Controller placement in software-defined WAN using multi objective genetic algorithm. In: 2nd International conference on knowledge-based engineering and innovation (KBEI), 2015. IEEE, pp 656–662

Jalili A, Keshtgari M, Akbari R (2017) Optimal controller placement in large scale software defined networks based on modified NSGA-II. Appl Intell 48:1–15

Karakus M, Durresi A (2016) Quality of service (QoS) in software defined networking (SDN): a survey. J Netw Comput Appl 80:200–218

Kishore M (2003) Optimal link utilization and enhanced quality of service using dynamic bandwidth reservation for pre-recorded video (Doctoral dissertation, Virginia Tech)

Knight S, Nguyen HX, Falkner N, Bowden R, Roughan M (2011) The internet topology zoo. IEEE J Sel Areas Commun 29(9):1765–1775

Lange S, Gebert S, Zinner T, Tran-Gia P, Hock D, Jarschel M, Hoffmann M (2015a) Heuristic approaches to the controller placement problem in large scale SDN networks. IEEE Trans Netw Serv Manage 12(1):4–17

Lange S, Gebert S, Spoerhase J, Rygielski P, Zinner T, Kounev S, Tran-Gia P (2015b) Specialized heuristics for the controller placement problem in large scale SDN networks. In: 27th International teletraffic congress (ITC 27). IEEE, pp 210–218

Liao J, Sun H, Wang J, Qi Q, Li K, Li T (2017) Density cluster based approach for controller placement problem in large-scale software defined networkings. Comput Netw 112:24–35

Mirjalili S (2015) Moth-flame optimization algorithm: a novel nature-inspired heuristic paradigm. Knowl Based Syst 89:228–249

Mitra D, Sarkar S, Hati D (2016) A comparative study of routing protocols. Eng Sci 2(1):46–50

Mohammadi R, Javidan R, Keshtgari M (2017) OpenIPTV: a comprehensive SDN-based IPTV service framework. Multimed Syst 24:1–13

Oktian YE, Lee S, Lee H, Lam J (2017) Distributed SDN controller system: a survey on design choice. Comput Netw 121:100–111

Olson D (2004) Comparison of weights in TOPSIS models. Math Comput Model 40:721–727

Opricovic S, Tzeng G-H (2004) Compromise solution by MCDM methods: a comparative analysis of VIKOR and TOPSIS. Eur J Oper Res 156:445–455

Rath HK, Revoori V, Nadaf SM, Simha A (2014) Optimal controller placement in software defined networks (SDN) using a non-zero-sum game. In: IEEE 15th International Symposium on A world of wireless, mobile and multimedia networks (WoWMoM), 2014. IEEE, pp 1–6