Abstract

In this paper, we propose a method to estimate the number and locations of change points and further estimate parameters of different regions for piecewise stationary vector autoregressive models. The procedure decomposes the problem of change points detection and parameter estimation along the component series. By reformulating the change point detection problem as a variable selection one, we apply group Lasso method to estimate the change points initially. Then, from the preliminary estimate of change points, a subset is selected based on the loss functions of Lasso method and a backward elimination algorithm. Finally, we propose a Lasso + OLS method to estimate the parameters in each segmentation for high-dimensional VAR models. The consistent properties of the estimation for the number and the locations of the change points and the VAR parameters are proved. Simulation experiments and real data examples illustrate the performance of the method.

Similar content being viewed by others

1 Introduction

Recent years the problem of change point detection has been extensively studied and widely used in various fields, such as economics (Bai 1999), molecular biology (Picard et al. 2005) and climate data analysis (Li and Lund 2012). In the field of time series analysis, change point detection methods offer an easy interpretable means to model nonstationary data. Most methods of change points detection are based on statistical theory and optimization approach, for example minimum description length principle in Davis et al. (2006), genetic algorithm in Doerr et al. (2017) and focused time delay neural network in Panigrahi et al. (2018). For univariate time series, considerable researches are focused on the structural break AR model [Andreou and Ghysels 2008; Shao and Zhang 2010; Chan et al. 2014).

Lasso technique (Tibshirani 1996; Yuan and Lin 2006) has advantages over other methods in computation, variable selection, simultaneous parameter estimation and grasping key factors and has got the extensively research attention in statistics including change point detection. In one-dimensional linear regressive model, through reformulating the estimation of change points in a model selection context, Lasso (Harchaoui and Levy-Leduc 2008, 2010) and group fused Lasso (Bleakley and Vert 2011) are used to estimate locations of change points. The class of methods based on Lasso is also applied to time series data for estimating change points in structural break autoregressive models. Angelosante and Giannakis (2012) developed an efficient block-coordinate descent algorithm to implement the novel segmentation method. Chan et al. (2014) incorporated group Lasso and the stepwise regression variable selection technique to obtain consistent estimates for the change points.

Existing methods for analyzing piecewise stationary time series primarily focused on univariate time series. For the VAR models advocated by Sims (1980), the change points detection has become complicated since the number of parameters increases exponential with the dimension. Kirch et al. (2015) extended the class of score type change point statistics to the VAR model and developed novel statistical methods. Ding et al. (2017) estimated high-dimensional time-varying VARs by solving time-varying Yule–Walker equations based on kernelized estimates of variance and auto-covariance matrices. Safikhani and Shojaie (2017) proposed a three-stage procedure for consistent estimation of both structural change points and parameters of high-dimensional piecewise vector autoregressive (VAR) models. The proposed approach first identifies the number of break points and then determines the location of the break points and provides consistent estimates of model parameters.

However, existing methods for estimating change points of VAR models have some problems. Firstly, simultaneously estimating the parameters of multivariate time series causes complex calculation of large matrix. Secondly, because of the bias of the estimation, for a change point, different components series may have different estimation, and the information criterion based on parameter estimation between this kind of change points may suffer from small sample. Thirdly, for the Lasso estimation, the existence of a tuning parameter which can lead to both variable selection consistency and asymptotic normality cannot be ensured (Zou 2006). Considering this problem, two-stage estimators to improve the performance of the Lasso, such as the Lars-OLS hybrid (Efron et al. 2004) and adaptive Lasso (Zou 2006), have been previously proposed.

Considering problems above, in this paper we propose a group Lasso-based method to estimate the number and locations of multiple change points. A Lasso +OLS algorithm is also proposed to estimate parameters of the piecewise stationary VAR models. The problem of change points detection and parameter estimation are decomposed along the component series. By reformulating the change point detection problem as a variable selection one, we apply a group Lasso method to obtain the initial estimation of the change points. Then, we select a subset from the preliminary estimate of change points based on the loss functions of Lasso method and a backward elimination algorithm. We also propose a Lasso + OLS method to estimate the parameters in each segmentation for high-dimensional VAR models. The consistent properties of the estimation for both the number and the locations of the change points along with VAR parameters are proved.

Compared to the existing work for VAR model, our method identifies the break points sequentially according to component series, which can realize parallel processing and has high calculation efficiency, that lead to a good prospect of application. Before the backward elimination algorithm, we make a selection among the change points with distance sufficiently small detected by different components series of the multivariate time series. This procedure can reduce calculation amount and improve calculation accuracy. Furthermore, we propose a Lasso + OLS method to estimate parameters, which improves the performance of the Lasso estimator in each segmentation for high-dimensional VAR models.

Section 2 proposes the group Lasso-based change points detection procedure for piecewise stationary VAR models and proves the statistic properties of the method. Section 3 presents the consistent estimation for the number and the locations of the change points and the VAR parameters. Section 4 summarizes results from numerical experiments and real data. Section 5 gives some concluding remarks. Proofs are put in “Appendix.”

2 Group Lasso estimation for piecewise stationary VAR

2.1 Problem statement

Consider a piecewise stationary VAR(p) model

where \(Y_t=(Y_{1,t},\ldots ,Y_{d,t})^T\) is a \(d\times 1\) vector, \(\epsilon _t\) is a \(d\times 1\) white noise with zero mean. \(v^j=(v_1^j,v_2^j,\ldots ,v_d^j)^T\) is a \(d\times 1\) vector of intercept term, \(A^j(k),k=1,\ldots ,p\), \(j=1,\ldots ,m+1\), are \(d\times d\) coefficient matrices. The time instincts \(\{t_1,\ldots ,t_m \}\) with \(1=t_0<t_1<\cdots <t_{m+1}=n+1\) denote the change points when the parameters \(A^j(k)\) change to \(A^{j+1}(k),k=1,\ldots ,p\) at time \(t_j\).

Without loss of generality, we suppose the intercept term \(v^j,j=1,\ldots ,m+1\) in Eq. (1) be zero. Then, for \(t_{j-1} \le t<t_j\), \(j=1,\ldots ,m+1\),

where \(B^j=\left[ A^j(1), ~\ldots ~ ,A^j(p)\right] \) is a \(d \times pd\) matrix and \(X_{t-1}=\left[ Y_{t-1}^T,\ldots ,Y_{t-p}^T\right] ^T \) is a \(pd\times 1\) vector.

We design a procedure that decomposes the problems of the estimation for the number and locations of change points, coefficient matrices \(B^j,j=1,\ldots ,m\) along the series. Then, we estimate change points and parameters for each component separately and join them together. One benefit of such decomposition is that the estimation problem is reduced to a set of atomic problems, one for each component \(i(i=1,\ldots ,d)\). For the i-th component of \(Y_t\), the regressive equation of \(Y_{i,t}\) is

where \(B_{i\cdot }^j=\left[ A_{i\cdot }^j(1), ~\ldots ~ ,A_{i\cdot }^j(p)\right] \) denotes the i-th row of matrix \(B^j\), \(A_{i\cdot }^j(k)\) is the i-th row of matrix \(A^j(k)\), \(k=1,\ldots ,p\). \(t_{j}^i,j=1,\ldots ,m_i\) are the change points of the i-th series \(Y_{i,t}\), and \(\cup _{i=1}^d\{t_1^i,\ldots ,t_{m_i}^i\}=\{t_1,\ldots ,t_m\}\).

We generalize the change point detection ideas of Chan et al. (2014) to the multivariate setting. The problem of estimating change points is reformulated in a model selection context. Set \(\phi _{i,1}=B_{i\cdot }^1\) and

for \(q=2,\ldots ,n\), where \(t_j^i\) is a change point in Eq. (3), \(\phi _{i,q}\) is a \(1\times pd\) vector, \(\phi _{i,q}=0\) means that \(\phi _{i,q}\) has all entries equaling zero and \(\phi _{i,q} \ne 0 \) means that \(\phi _{i,q}\) has at least one nonzero entry.

Then, Eq. (3) can be expressed as a high-dimensional regression model with the effective sample size \(n-p\),

Let \(\varvec{Y}_i^0=(Y_{i,p+1},\ldots ,Y_{i,n})^T\). \(\varvec{\epsilon }_i^0=(\epsilon _{i,p+1},\ldots ,\epsilon _{i,n})^T\), \(\varvec{\phi }_i=(\phi _{i,1},\phi _{i,2},\ldots ,\phi _{i,n})^T\), \(\varvec{X}\) be the \((n-p)\times ndp\) matrix, Eq. (5) can be written in a vector form as

From the definition of coefficients, when \({\hat{\phi }}{_{i,q}}\ne 0, q\ge 2\), there is a change in the parameter \({\hat{B}}_{i\cdot }\). Thus, the structural breaks \(t_j^i,j=1,2,\ldots ,m_i\) can be estimated by identifying those \({\hat{\phi }}_{i,q} ,(q\ge 2)\) which are not zero.

Notice that \(\varvec{\phi }_i\) is \(npd-\)dimensional column vectors, then the estimation of the parameters is a difficult problem. We observe that only \(m_i\) groups \(\phi _{i,q}\) in \(\varvec{\phi }_i\) are nonzero, which cause a natural group structure to group the parameters along the time. Then, there are n groups, \(\phi _{i,q}, q=1,\ldots ,n\).

Thus, we propose to estimate \(\varvec{\phi }_i\) in the high-dimensional regression model (6) by the group Lasso method

where \(\Vert \cdot \Vert _2\) denotes the \(l_2\) norm, \(\lambda _n\) is the regularization penalty weight.

The estimates of the change points are

Denote \({\hat{m}}_i=\vert {\mathscr {A}}_{ni}\vert \) the estimated number of change points, \({\hat{t}}_1^i,{\hat{t}}_2^i,\ldots ,{\hat{t}}_{{\hat{m}}_i}^i\) be the elements of \({\mathscr {A}}_{ni}\). In view of the relationship between \(\varvec{\phi }_i\) and \(B_{i\cdot }\) in Eq. (4), the parameters in each segment of Eq. (3) can be estimated by

Applying Eq. (7) to each component \(Y_{i,t}, i=1,\ldots ,d\) and then combining the results of each components together, we can obtain the estimates for change points and regressive coefficients in Eq. (2) as follows:

2.2 Properties of the group Lasso estimation

In this section, we study the properties of the estimations from group Lasso approach.

Define

Hence, \(I_{min}\) is the minimal interval between two consecutive change points, and \(J_{min}, J_{max}\) are the minimum and maximum of the difference between two consecutive different coefficient matrices, respectively. Let \(S_{ij}\) be the number of elements which are nonzero in coefficients vector \(B_{i\cdot }^j,i=1,\ldots ,d\), \(j=1,\ldots ,m+1\). \(S_{max}=\max \limits _{\begin{array}{c} 1\le i\le d\\ 1\le j\le m+1 \end{array}}S_{ij}\).

To discuss the asymptotic results for the group Lasso procedure, we first impose the following assumptions.

Assumption 1

Suppose the covariance matrices \(\varGamma ^j(h)=cov(Y_t,Y_{t+h}),~t_{j-1}\le t\le t_j, t,h\in {\mathbb {Z}}\). The spectral density matrices \(f_j(\kappa )=(2\pi )^{-1}\sum _{l\in {\mathbb {Z}}}\varGamma ^j(l)e^{-il\kappa },\kappa \in \left[ -\pi ,\pi \right] \) satisfy

That is, the largest and smallest eigenvalue of the spectral density matrices, \(\varLambda _{max}(\cdot )\) and \(\varLambda _{min}(\cdot )\), is bounded.

Assumption 2

\(\epsilon _t\) are i.i.d. Gaussian random variables with mean 0 and covariance matrix \(\sigma ^2I_d\). The process \(Y_{i,t}=B_{i\cdot }^j{\varvec{X}}_{t-1}+\epsilon _{i,t},t=t_{j-1}^i,\ldots ,t_j^i-1\), \(j=1,\ldots ,m+1\) are stationary process.

Assumption 3

\(J_{min}>v_B\), \(\max \limits _{\begin{array}{c} 1\le i,k\le d\\ 1\le j\le m+1 \end{array}}\vert B_{ik}^j\vert \le M_B\), \(I_{min}/(n\gamma _n)\rightarrow \infty \), \(\gamma _n/(S_{max}\lambda _n)\rightarrow \infty \), \(log(d)/(n\lambda _n)\rightarrow 0\).

Let \(\varvec{\phi }\) be the true value. First consider the consistency result of the VAR parameters in terms of prediction error.

Theorem 1

Under Assumptions 1 and 2, if \(\lambda _n=2c_0 \sqrt{log (npd^2)/n}\) for some \(c_0>0\) and \(m\le m_n\) for some \(m_n=o(\lambda _n^{-1})\), then with probability approaching 1 as \(n\rightarrow \infty \)

Theorem 2

Under Assumptions 1, 2 and 3, if m is known and \(\vert {\mathscr {A}}_n\vert =m\), then

Theorem 3

Under Assumptions 1, 2 and 3, as \(n\rightarrow \infty \),

and

where \(d_H(A,B)=\max \limits _{b\in B}\min \limits _{a\in A}\vert b-a\vert \) is the Hausdorff distance between two sets A and B.

The proofs of Theorems 1 to 3 will be given in the appendix.

3 Consistent estimation procedure of the change points and parameters

3.1 Consistent estimation of change points

From Theorems 2 and 3, the set \({\mathscr {A}}_{n}=\{{\hat{t}}_1,\ldots ,{\hat{t}}_{{\hat{m}}}\}\) is usually larger than and has bias with the true \({\mathscr {A}}=\{t_1,t_2,\ldots ,t_m\}\). In this subsection, we propose a procedure to further identify the true change points in \({\mathscr {A}}_{n}\). The procedure is constituted by two steps.

Firstly, we make a selection for those change points which are close to each other. Because of the bias of the estimation, for a change point \(t_j,j\in \{1,\ldots ,m\}\), different components series (\(i=1,\ldots ,d\) in Eq. (7)) may have different estimations, \({\hat{t}}_j^1,{\hat{t}}_j^2,\ldots ,{\hat{t}}_j^d\). Our goal is to identify the estimate which is the closest to \(t_j\). We develop a method based on the penalty function of Lasso estimate.

Group the elements in \({\mathscr {A}}_{n}\) according to their distance between each other. For example, from Theorem 2, if \({\hat{t}}_k-{\hat{t}}_1\le c_s n\gamma _n\) for some constant \(0<c_s<1\), then \({\hat{t}}_k\) is in the first group. If there is no \({\hat{t}}_k\) satisfying \({\hat{t}}_k-{\hat{t}}_r\le c_s n\gamma _n\), then \({\hat{t}}_r\) is a group alone. Suppose there are G groups, denoted as \({\hat{t}}_{(1)}^1,\ldots ,{\hat{t}}_{(1)}^{l_1},\ldots ,{\hat{t}}_{(G)}^1,\ldots ,{\hat{t}}_{(G)}^{l_G}\). Let \({\tilde{t}}_0=1\). We establish the following regression models,

for \(s=1,\ldots ,l_j\) and estimate the parameters \({\hat{\beta }}_i^1,{\hat{\beta }}_i^2\) as follows:

Then, we select the change points \({\tilde{t}}_j\) as follows:

Let \(\tilde{{\mathscr {A}}}_n=\{{\tilde{t}}_1,\ldots ,{\tilde{t}}_{{\tilde{m}}}\}\). In this step, we do select only in those \({\hat{t}}_k\)s which satisfy \({\hat{t}}_k-{\hat{t}}_{(j)}^1<c_s n\gamma _n,j=1,\ldots ,G\). From Assumption 3, \(I_{min}/(n\gamma _n)\rightarrow \infty \), \(\vert t_k-t_j\vert >n\gamma _n\), then the \(t_j\)s still remain in \(\tilde{{\mathscr {A}}_n}\), that is \(\vert \tilde{{\mathscr {A}}_n}\vert \ge m\). Then, as \(n\rightarrow \infty \), we have

Secondly, we generalize the backward elimination algorithm in Chan et al. (2014) to the multivariate time series. The algorithm starts with \(\tilde{{\mathscr {A}}}_n\) and removes one “most unnecessary change point” according to some prescribed information criterion in each step until no further reduction is possible. Consider a general IC of the form

where \(\omega _n\) is the penalty term.

For regression model

with the Lasso estimates of parameters \({\hat{\beta }}_i^j\)

define \(L_n(t_1,\ldots ,t_{m})=\sum _{j=1}^{m+1}L_n(t_{j-1},t_j)\) as the goodness-of-fit measure,

The algorithm is as follows:

Step 1 Set \(K=\vert \tilde{{\mathscr {A}}}_n\vert , {\mathbf {t}}_K=(t_{K.1},\ldots ,t_{K.K})=\tilde{{\mathscr {A}}}_n\) and \(V_K=IC(K,\tilde{{\mathscr {A}}}_n\)).

Step 2 For \(k=1,\ldots ,K\), compute \(V_{K.k}=IC(K-1,{\mathbf {t}}_K\backslash \{t_{K,k}\})\). Set \(V_{K-1}=min_kV_{K,k}\).

Step 3 If \(V_{K-1}>V_K\), then there is no change point that can be removed, \(\hat{{\mathscr {A}}}_n^*={\mathbf {t}}_K\).

If \(V_{K-1}\le V_K\) and \(K=1\), then there is no change point in the time series, \(\hat{{\mathscr {A}}}_n^*=\varnothing \).

If \(V_{K-1}\le V_K\) and \(K>1\), then set \(h=arg min_kV_{K,k}, {\mathbf {t}}_{K-1}:={\mathbf {t}}_K\backslash \{t_{K-1,h}\}\) and \(K=K-1\). Go to Step 2.

Let \({\mathscr {A}}_{n}^*=({\hat{t}}_1^*,\ldots ,{\hat{t}}_{m*}^*)\) be the estimation of the change points. Let \(S=\sum _{j=1}^{m+1}\sum _{i=1}^dS_{ij}\). We then impose the assumption to prove the consistency of the estimation.

Assumption 4

As \(n\rightarrow \infty \), \(\frac{n\gamma _{n} m S^{2}}{\omega _{n}}\rightarrow 0\), \(\frac{m\omega _{n}}{I_{min}}\rightarrow 0\).

Theorem 4

Under Assumption 1 to 4, and choose \(\eta _n=2(c\sqrt{log d/I_{min}}+M_BS)\), the estimate \({\mathscr {A}}_{n}^*=({\hat{t}}_1^*,\ldots ,{\hat{t}}_{\vert {\mathscr {A}}_{n}^*\vert }^*)\) satisfies as \(n\rightarrow \infty \),

and there exists a constant \(C>0\) such that

If we use the backward elimination algorithm straightly to the estimations \({\hat{t}}_1,{\hat{t}}_2,\ldots \), \({\hat{t}}_{{\hat{m}}}\), for those \(\vert {\hat{t}}_k-{\hat{t}}_q\vert \le cI_{min}\), the parameter estimation between this kind of change point in Eq. (20) may suffer from less sample size (such as 1) and large amount of calculation in the algorithm. The select procedure Eq. (19) overcomes this shortcoming, reduces calculation amount and improves calculation accuracy.

3.2 Consistent estimation of parameters

The Lasso estimator generates sparse models and is also computationally feasible. However, for the case that the number of parameters is far larger than sample size, statistical inference for the Lasso estimator with theoretical guarantees is still an insufficiently explored area. Several authors have previously considered two-stage estimators to improve the performance of the Lasso, such as the Lar-OLS hybrid (Efron et al. 2004), Lasso + mLS and Lasso + Ridge (Liu and Yu 2013). In this subsection, we propose a post-Lasso estimator for the coefficients of VAR(p) model Eq. (2), denoted as Lasso + OLS. The procedure is composition of two parts, (1) selection stage: we select predictors using the Lasso; and (2) estimation stage: we apply ordinary least squares (OLS) to estimate the coefficients of the selected predictors.

The idea of our Lasso algorithm in the selection stage, which is similar to that in Safikhani and Shojaie (2017), is to remove the selected break points together with large enough \(R_n\) neighborhoods and obtain stationary segments at cost of loss some observations. Denote the estimated change points by \({\hat{t}}_1^*,{\hat{t}}_2^*,\ldots ,{\hat{t}}_{m^*}^*\), then the \({\hat{m}}^*+1\) stationary segments are \(r_j=\{t:{\hat{t}}_{j-1}^*+R_n+1\le t\le {\hat{t}}_j^*-R_n-1\}\), \(j=2,3,\ldots {\hat{m}}^*\), \(r_1=\{t:1\le t\le {\hat{t}}_1^*-R_n-1\}\), \(r_{{\hat{m}}^*}=\{t:{\hat{t}}_{{\hat{m}}^*}^*+R_n+1\le t\le n\}\). For regression models

the Lasso estimation of parameters \(\beta _{i\cdot }^j\) is as follows:

We propose a hard thresholding to shrink those \({\hat{\beta }}_{ik}^j\) with absolute value smaller than \(\tau _n(\tau _n>0) \) to zero. One gets a set of selected predictors \({\hat{S}}_{ij}=\{k:\vert {\hat{\beta }}_{ik}^j\vert \ge \tau _n,1\le k\le dp\}\).

In the second stage, a low-dimensional estimation method is applied to the selected predictors in \({\hat{S}}_{ij}\). For example, one can adopt OLS after model selection (denoted by Lasso + OLS):

Then, the estimators for the \(i-\)th row of coefficients matrix \(B^j\) are \({\hat{B}}_{i\cdot (Lasso+OLS)}^j=({\hat{\beta }}_{i\cdot (Lasso+OLS)}^j)^T\), \(j=1,\ldots ,d\), and

Denote the pd predictors as

Assume \(B_{i\cdot }^j=(\beta _1,\ldots ,\beta _{S_i},\beta _{S_i+1},\ldots ,\beta _{pd})\), where \(\beta _{k}\ne 0\) for \(k=1,\ldots ,S_i\) and \(\beta _{k}=0\) for \(k=S_i+1,\ldots ,pd\). Let \(\beta _{(1)}=(\beta _1,\ldots ,\beta _{S_i})^T\) and \(\beta _{(2)}=(\beta _{S_i+1},\ldots ,\beta _{pd})^T\). Arrange \(X_{t-1}\) according to the same columns order as \(\beta _{(1)},\beta _{(2)}\). Let \(\varSigma ^n=\frac{1}{n-p}\sum _{t=p+1}^{n}X_{t-1}X_{t-1}^T\), and rewrite \(\varSigma ^n\) as a block-wise form

To state the consistency of the estimation, we need the following assumptions.

Assumption 5

\(X_{t-1}\) are standardized, \(\frac{1}{n}\sum _{t=p+1}^{n}X_{t-1,k}=0\) and \(\frac{1}{n}\sum _{t=p+1}^{n}X_{t-1,k}^2=1\)\(k=1,\ldots ,pd\). There exists \(0\le c_1 <c_3\le 1\) and \(0<c_2<c_3-c_1\), such that \(S_{ij}=s_n={\mathcal {O}}(n^{c_1})\), \(pd=p_nd_n={\mathcal {O}}(e^{n^{c_2}})\). For \(M_1,M_2>0\), the following holds:

Assumption 6

There exists a constant \(c_m>0\), \(\varLambda _{min}(\varSigma _{11}^n)\ge c_m\). \(\tau _n\propto \frac{1}{n}\).

Assumption 7

(Strong irrepresentable condition) Suppose \(\varSigma _{11}^n\) is invertible, there exists a positive constant vector \(\xi \)

Assumption 8

For \(S_{ij}\) and \(B_{i\cdot }^j\) are all fixed as \(n\rightarrow \infty \), and a positive matrix \(\varSigma \),

Theorem 5

Suppose that Assumptions 1 to 8 hold. Then, for \(i=1,\ldots ,d\), \(j=1,\ldots ,{\hat{m}}^*\),

- (1)

Subset selection consistency:\(lim_{n\rightarrow \infty }P\left( {\hat{S}}_{ij}=S_{ij}\right) =1.\)

- (2)

Asymptotic normality: \(\sqrt{n}\left( {\hat{B}}_{i\cdot (Lasso+OLS)}^j-B_{i\cdot }^j\right) {\mathop {\longrightarrow }\limits ^{d}}N\left( 0,\sigma ^2\varSigma _{11}\right) .\)

Remark 1

The order p of VAR model is assumed the same for all segments for convenience. In practice, one can first estimate a sufficiently large order p by applying standard procedures such as BIC or AIC on the VAR model, then estimate the change points by the procedure and finally identify the order of each segment simultaneously with the parameter estimation.

Remark 2

The Lasso + OLS method in this subsection is suitable for the case that the number of parameters is large and the least-squares estimate is not unique. When the number of parameters and sample size satisfy the conditions that the OLS is unique, one can use OLS directly to estimate the parameters.

4 Numerical experiments and real data

In this section, we evaluate the performance of the proposed methods with respect to both change points detection and parameter estimation from numerical experiments and a real data example. Liu and Ye (2010) proposed a sub-gradient-based approach to solve the group Lasso problem and developed a MATLAB interfaced module called SLEP. We utilized SLEP to conduct all experiments in this section.

4.1 Numerical experiments

For the convenience of comparing, we consider the same three models as in Safikhani and Shojaie (2017).

Model 1. The time series in this example is generated from the VAR(1) model with two change points,

where \(Y_t\) is a \(d-\)dimensional time series, \(\epsilon _t~\sim i.i.d.N({\mathbf {0}},0.1^2I_d), d=20,n=300\). The nonzero elements of matrix A are defined as follows.

and all the other elements are zeros.

In the example, 100 realizations are simulated from model (29) and estimated by the method. The estimation results are summarized in Table 1, in which the performance of three-stage method reported in Safikhani and Shojaie (2017) is compared. Our method gives the correct number of change points in all the 100 realizations. The mean and standard error of the location estimates are also reported in Table 1. Table 1 shows the performance of our method is similar to the three-stage method for this example.

Model 2. The time series in this example is generated from the VAR(1) model with the same coefficient matrices as Eq. (29), but the change points are closer to the boundaries, \(t_1=50,t_2=250\).

Similar to the preceding example, 100 realizations are simulated from Model 2. The percentage of the estimated corrected number and the mean and standard error of the location estimates are shown in Table 2. Our method has a smaller bias than the three-stage method, which shows the good performance of the method when the change point is near the boundary.

Model 3. The time series in this example is generated from the VAR(1) model with two change points \(t_1=100,t_2=200\). The coefficients matrices are chosen to be randomly structured as shown in Fig. 1 (Figure 6 in Safikhani and Shojaie 2017). Suppose the values for the nonzero coefficients all be 0.5.

Table 3 shows the results of the estimated change points. Compared with the three-stage method, the performance of our method is better at the percentage of times where the change points are correctly detected and the mean of estimated change points locations.

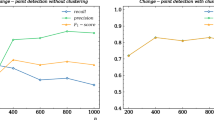

Then, we analyze the performance of the Lasso + OLS method in Sect. 3.2 for the predictor selection and parameter estimation for the three examples. The effectiveness of predictor selection is evaluated by true positive rate (TPR), true negative rate (TNR), false positive rate (FPR) and false negative rate (FNR) of the Lasso estimates with the hard threshold \(\tau =0.1\). The measures are defined as follows:

where \(j=1,2,3\) denote the segments of the time series. Then,

Based on the predictors selected by Lasso, we estimate the parameters of coefficient matrix \(A^j,j=1,2,3\) by ordinary least squares. The performance is evaluated by the mean and standard deviation of the relative estimation error (denoted by REE),

The results of 100 simulated data for predictor selection and parameter estimation in all the three models are reported in Table 4. Compared with the results of the methods in Safikhani and Shojaie (2017), the performance of our method is better than the results of Lasso estimation both in predictor selection and parameter estimation, especially for Model 2. The REE is greatly improved, which shows that the Lasso + OLS method reduces error of the parameter estimation compared with Lasso estimation only.

4.2 Chinese stock market

In this subsection, we apply our method to analyze the returns of Shanghai Stock Index, Shenzhen Stock Index and Hangseng Index from September 1, 2016, to October 10, 2018, obtained from https://www.investing.com/. The log returns of three indexes are shown in Fig. 2a. The fluctuation of the returns suggests structural changes in the volatility. Since it is well known that the square of the log returns can be regarded as a VAR process, the structural changes in volatility in the returns can be regarded as the structural changes in the VAR structure of the squared returns series. Thus, we consider the squared returns series to identify structural break points. We consider a standard version of the data to apply the Lasso algorithm. The three-dimensional squared returns series data over 519 observations are shown in Fig.2b.

The procedure of change points selection is shown in Table 5. At the first stage, the group Lasso estimation obtains 68 change points \({\hat{t}}_j,j=1,\ldots ,68\). We then select the most optimal one among each group. According to Eq. (19), there are 7 change points \(({\tilde{t}}_j, j=1,2,\cdots ,7)\) be selected, which are 47, 73, 301, 352, 383, 441, 485, respectively. And finally, applying the backward elimination algorithm according to the IC criterion Eq. (20), change points 47 are deleted. We obtained the estimation for change points shown in Table 6.

Table 6 shows the 6 change points estimated by the algorithm. The estimated change points are located on December 16, 2016, November 20, 2017, February 2, 2018, March 21, 2018, June 19, 2018, and August 21, 2018. Based on the history of the financial events, these estimated locations can be well interpreted. On December 5, 2016, Shenzhen-Hong Kong Stock Connect was officially opened, which reduces the level of volatility of the stock index. On February 5, 2018, a slump in the American stock market caused the acute fluctuation of the volatility. On March 23, 2018, America announced a tax increase of 60 billion US dollars on China triggered the largest trade war in the history of China and the USA, and the stock market of China and the USA fell sharply. On June 1, 2018, 234 A-shares were included in the MSCI index system with a factor of \(2.5\%\), and the factor was increased to \(5\%\) on September 1, 2018, which corresponds to the last estimated change point.

5 Conclusion

In this paper, we propose a method which is based on group Lasso and information criterion to estimate the number and locations of change points for piecewise stationary vector autoregressive models. The procedure decomposes the problem of change points detection and parameter estimation along the component series. By reformulating the change point detection problem as a variable selection one, we applied group Lasso method to estimate the change points initially. Then, a subset of the change points that have similar distance detected by different components series of the multivariate time series is selected, which can reduce calculation amount and improve calculation accuracy. Finally, the backward elimination algorithm is used to determine the number and locations of the change points. This procedure can reduce calculation amount and improve calculation accuracy. The method achieves identification, selection and estimation all in one procedure. Simulation experiments and practical example suggest that our approach shows promising results for VAR models, especially in the identification for change points of VAR models.

References

Andreou E, Ghysels E (2008) Structural breaks in financial time series. Handbook of financial time series. Springer, Berlin, pp 839–866

Angelosante D, Giannakis GB (2012) Group lassoing change-points in piecewise-constant AR processes. Eurasip J Adv Signal Proces 70(1):1–16

Bai J (1999) Likelihood ratio tests for multiple structural changes. J Econom 91:299–323

Basu S, Michailidis G (2015) Regularized estimation in sparse high-dimensional time series models. Ann Stat 43(4):1535–1567

Bleakley K, Vert JP (2011) The group fused lasso for multiple change-point detection. arXiv preprint arXiv:1106.4199

Chan NH, Yau CY, Zhang RM (2014) Group Lasso for structural break time series. J Am Stat Assoc 109(506):590–599

Davis RA, Lee TCM, Rodriguez-Yam GA (2006) Structure break estimation for nonstationary time series models. J Am Stat Assoc 101(473):223–239

Ding X, Qiu ZY, Chen XH (2017) Sparse transition matrix estimation for high-dimensional and locally stationary vector autoregressive models. Electron J Stat 11(2):3871–3902

Doerr B, Fischer P, Hilbert A, Witt C (2017) Detecting structural breaks in time series via genetic algorithms. Soft Comput 21(16):4707–4720

Efron B, Hastie T, Tibshirani R (2004) Least angle regression. Ann Stat 32(2):407–499

Harchaoui Z, Levy-Leduc C (2008) Catching change points with Lasso. Advances in neural information processing system. MIT Press, Vancouver, pp 161–168

Harchaoui Z, Levy-Leduc C (2010) Multiple change point estimation with a total variation penalty. J Am Stat Assoc 105(492):1480–1493

Kirch C, Muhsal B, Ombao H (2015) Detection of changes in multivariate time series with application to EEG data. J Am Stat Assoc 110(511):1197–1216

Li S, Lund R (2012) Multiple change point detection via genetic algorithms. J Clim 25(2):674–686

Liu HZ, Yu B (2013) Asymptotic properties of Lasso + mLS and Lasso + ridge in sparse high-dimensional linear regression. Electron J Stat 7:3124–3169

Liu J, Ji S, Ye J (2011) SLEP: sparse learning with efficient projections. http://www.public.asu.edu/~jye02/Software/SLEP

Liu J, Ye J (2010) Moreau-yosida regularization for grouped tree structure learning. Int Conf Neural Inf Process Syst (NIPS) 23:1459–1467

Picard F, Robin S, Lavielle M, Vaisse C, Daudin JJ (2005) A statistical approach for array CGH data analysis. Bioinformatics 6(27):1–14

Panigrahi S, Verma K, Tripathi P (2018) Land cover change detection using focused time delay neural network. Soft Comput https://doi.org/10.1007/s00500-018-3395-3

Safikhani A, Shojaie A (2017) Joint structural break detection and parameter estimation in high-dimensional non-stationary VAR models. arXiv preprint arXiv:1708.02736

Shao X, Zhang X (2010) Testing for change-points in time series. J Am Stat Assoc 105(491):1228–1240

Sims CA (1980) Macroeconomics and reality. Econometrica 48(1):1–48

Tibshirani R (1996) Regression shrinkage and selection via The Lasso. J R Stat Soc 58B(1):267–288

Yuan M, Lin Y (2006) Model selection and estimation in regression with grouped variables. J R Stat Soc 68B(1):49–67

Zhao P, YU B (2006) On model selection consistency of Lasso. J Mach Learn Res 7(Nov):2541–2563

Zou H (2006) The adaptive Lasso and its Oracle properties. J Am Stat Assoc 101(476):1418–1429

Acknowledgements

This work was supported by the National Natural Science Foundation of China under Grant No. 11601404, the National Statistical Research Program funded by National Bureau of Statistics of China under Grant No. 2016LZ37, the Youth Innovation Team of Shaanxi Universities, Yanta Scholars Foundation and talent development foundation of Xian University of Finance and Economics.

Author information

Authors and Affiliations

Corresponding author

Ethics declarations

Conflict of interest

Author Wei Gao declares that she has no conflict of interest. Author Haizhong Yang declares that he has no conflict of interest. Author Lu Yang declares that she has no conflict of interest.

Human and animal rights

This article does not contain any studies with human or animal participants performed by the author.

Informed consent

Informed consent was obtained from all individual participants included in the study.

Additional information

Communicated by X. Li.

Publisher's Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Appendix Proofs of Theorems

Appendix Proofs of Theorems

Since the estimation problem decomposes across different time series \(Y_{it}\) with regressive coefficient vector \(B_{i\cdot }^j\), the number of change points \(m_i\) and change points \(t_k^i\), it is enough to show that the estimation \({\hat{B}}_{i\cdot }^j\), \({\hat{m}}_i\) and \({\hat{t}}_k^i\) are consistent and then apply the union bound to show that the whole parameter matrix B and change points are estimated consistent.

Suppose

Lemma 1

Suppose Assumptions 1 and 2 hold. For any \(c_0>0\), there exists some constant \(C>0\) such that for \(n\ge Clog(nd^2p)\)

Proof

For any t, l, h, \(cov(Y_{k,p+t}\epsilon _{i,p+t+l})=0\), it follows from Proposition 2.4(b) of Basu and Michailidis (2015) that for \(d\times 1\) vectors u, v with zeros except the k, i-th elements, respectively,

Suppose \(\eta =k_3\sqrt{\frac{1}{n}log (nd^2p)}\), and \(k_3>0\) be large enough yieal Eq. (32). \(\square \)

Lemma 2

Suppose Assumptions 1 and 2 hold. For any \(c_i>0,i=1,2,3\),

and

Proof

From Proposition 2.4 of Basu and Michailidis (2015),

and

Let \(\eta =k_3\sqrt{\frac{log(pd^2)}{n\gamma _n}}\), we get the results. \(\square \)

Lemma 3

Let \({\hat{\phi }}_{i}\) and \(\phi _i\) be defined as in Eq. (7), \(\mathbf X _{l-1}\) denote the row in matrix \(\mathbf X \) where \(X_{l-1}\) is located in. Under the condition of Theorem 1, we have

and

Lemma 3 concerns the KKT conditions of the group Lasso algorithm. Here, we omit the proof which can be deduced directly.

Proof of Theorem 1

From the similar proof strategy to Theorem 2.1 in Chan et al. (2014) (using Lemma 1 to replace the Lemma A.1), for some \(m_n=o(\lambda _n^{-1})\), then with some \(c_0>0\) and high probability as \(n\rightarrow \infty \),

where \(\max \limits _{\begin{array}{c} 1\le k\le d\\ 1\le j\le m+1 \end{array}}\vert B_{ik}^j\vert \le M_B^i\). Then,

\(\square \)

Proof of Theorem 2

Firstly, for \(i=1,\ldots ,d\), we prove

Suppose \(T_{ij}=\{\vert {\hat{t}}_j^i-t_j^i\vert > n\gamma _n\}, j=1,2,\ldots ,m_i\), then

Let \(C_n=\{\max \limits _{1\le j\le m_i}\vert {\hat{t}}_j^i-t_j\vert \le \min _i\vert t_j-t_{j-1}\vert /2\}\). To prove Eq. (42), it suffices to show that

where \(C_n^c\) is the complement of the set \(C_n\).

We only outline the proof for \(\sum _{j=1}^{m_i} P(T_{ij} C_n)\rightarrow 0.\) The proof for \(\sum _{j=1}^{m_i}P(T_{ij}C_n^c)\rightarrow 0\) is similar.

The number of change points \(m_i\) has two cases, \(m_i\) is fixed or \(m_i\rightarrow \infty \). For the case \(m_i\) is fixed, we consider two cases \({\hat{t}}_j^i<t_j^i\) and \({\hat{t}}_j^i>t_j^i\).

From the KKT conditions, we have

which implies that

Then, for \({\hat{t}}_j^i<t_j^i\),

let \({\mathscr {B}}_j=\Big \Vert \sum _{t={\hat{t}}_j^i}^{t_j^i-1}X_{t-1}\left( (B_{i\cdot }^{j})^T-(B_{i\cdot }^{j+1})^T\right) X_{t-1}\Big \Vert \)

In the similar ways as Theorem 2.2 Chan et al. (2014), using Lemma 2 and Lemma 3 to replace their Lemma A.2 and Lemma A.3, respectively, we can get that \(P(T_{ij1})\rightarrow 0\), \(P(T_{ij2})\rightarrow 0\) and \(P(T_{ij3})\rightarrow 0\). Combining these results, we have \(P(T_{ij}C_n\cap \{{\hat{t}}_j^i<t_j^i\})\rightarrow 0\). The proof of \(P(T_{ij}C_n\cap \{{\hat{t}}_j^i>t_j^i\})\rightarrow 0\) is similar. Then, \(P(T_{ij}C_n)\rightarrow 0\).

From Lemma 2, one can prove the case \(m_i\rightarrow \infty \). The rate of convergence of the \(P(T_{ij}),j=1,\ldots ,m_i\) can be fast enough to get \(\sum _{j=1}^{m_i} P(T_{ij})\rightarrow 0\). Then, Eq. (42) is proved.

Theorem 2 is proved from the definition of \({\hat{t}}_j,j=1,\ldots ,{\hat{m}}\) and \(\max \limits _{1\le j\le m}\vert {\hat{t}}_j-t_j\vert \le \max \limits _{1\le i\le d}\max \limits _{1\le j\le m}\vert {\hat{t}}_j^i-t_j^i\vert \). \(\square \)

Proof of Theorem 3

Firstly, we prove that for \(i=1,\ldots ,d\), if Assumptions 1 to 3 are satisfied, then

and

Applying Lemma 3 and Lemma 2 to similar augment of Theorem 2, according to the procedure of proof of Chan et.al.(2014), Eqs. (48) and (49) can be proved.

Then, from \({\mathscr {A}}_n=\cup _{i=1}^d{\mathscr {A}}_{ni}\), we get \(\left( \vert {\mathscr {A}}_{n}\vert \ge m\right) \subseteq \left( \vert {\mathscr {A}}_{ni}\vert \ge m_i\right) \) and \(d_H({\mathscr {A}}_{n}, {\mathscr {A}})=d_H({\mathscr {A}}_{ni}, {\mathscr {A}}_i)\), which completes the proof of Theorem 3. \(\square \)

Proof of Theorem 4

In the proof of the theorem, we will apply the conclusion of Lemma 4 in Safikhani and Shojaie (2017), for \(m_r<m\)

We prove the first conclusion by showing (a)\(P({\hat{m}}^*<m)\rightarrow 0\) and (b) \(P({\hat{m}}^*>m)\rightarrow 0\).

For (a) \(P({\hat{m}}^*<m)\rightarrow 0\), Theorem 3 implies that there are points \({\hat{t}}_{nj}\in {\mathscr {A}}_n\) such that \(max_{1\le j\le m}\vert {\hat{t}}_{nj}-t_j\vert \le n\gamma _n\).

By similar arguments as in Theorem 4 (Safikhani and Shojaie (2017)), we get that

By Eq. (50), we get

The last inequality comes from the conditions \(m\omega _n/I_{min}\rightarrow 0\) and \(lim_{n\rightarrow \infty }n\gamma _nS^2/\omega _n\le 1\). Then, \(P({\hat{m}}^*<m)\rightarrow 0\).

To prove (b), given \({\hat{m}}^*>m\),

Combining with Eq. (51), we get

When \({\hat{m}}^*>m\), we have that

Combining Eqs. (54) and (55), with the similar discussion as in Eq. (52), \(P({\hat{m}}^*>m)\rightarrow 0)\). The second conclusion follows as Theorem 4 in Safikhani and Shojaie (2017). \(\square \)

Proof of Theorem 5

From Theorem 4 in Zhao and YU (2006), under the irrepresentable condition (Assumption 7) and Assumption 8, for \(\lambda _n/n\rightarrow 0\) and \(\lambda _n/n^{\frac{1+c}{2}}\rightarrow \infty \) with \(0\le c<1\), the probability of the Lasso selecting wrong models satisfies \(P({\hat{S}}_i\ne S_i)=0(e^{-n^{c_2}})\).

The second part is proved from the results Theorem 3 in Liu and Yu (2013). \(\square \)

Rights and permissions

About this article

Cite this article

Gao, W., Yang, H. & Yang, L. Change points detection and parameter estimation for multivariate time series. Soft Comput 24, 6395–6407 (2020). https://doi.org/10.1007/s00500-019-04135-8

Published:

Issue Date:

DOI: https://doi.org/10.1007/s00500-019-04135-8