Abstract

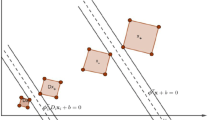

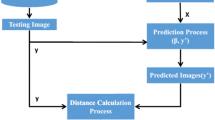

AdaBoost methods find an accurate classifier by combining moderate learners that can be computed using traditional techniques based, for instance, on separating hyperplanes. Recently, we proposed a strategy to compute each moderate learner using a linear ensemble of weak classifiers built from the geometry of the kernel support vector machine (KSVM) hypersurface. In this way, we apply the AdaBoost procedure in a nested loop: at each iteration of the outer loop, the inner loop boosts weak classifiers into a moderate classifier, and the outer loop combines these moderate classifiers to build the global decision rule. In this paper, we explore this methodology in two ways: (a) for classification in principal component analysis (PCA) spaces; and (b) for multi-class nonlinear discriminant PCA, named MNDPCA. To the best of our knowledge, the former is a new AdaBoost-based classification technique. In addition, we study the influence of the kernel type on MNDPCA in order to find a near-optimal configuration for feature selection and ranking in PCA subspaces. We compare the proposed methodologies with counterpart techniques using facial expression images from the Radboud Faces Database and the Karolinska Directed Emotional Faces (KDEF) database. Our experimental results show that MNDPCA outperforms counterpart techniques for selecting PCA features on the Radboud database and performs close to the best technique on KDEF images. Moreover, the proposed classifier achieves outstanding recognition rates compared with techniques from the literature.
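The nested loop can be illustrated with a minimal sketch. It rests on several simplifying assumptions that are not taken from the paper: labels are binary in {-1, +1} (the multi-class case is not covered), both loops use discrete AdaBoost, and the weak learners are axis-aligned decision stumps standing in for the KSVM-hypersurface weak classifiers, which are not reproduced here. The names `fit_stump`, `adaboost` and `nested_adaboost` are hypothetical. The inner loop is also assumed to start from the outer loop's current sample weights, so each moderate learner concentrates on the examples the outer ensemble still finds hard.

```python
import math
from typing import Callable, List, Sequence, Tuple

Point = Sequence[float]
Classifier = Callable[[Point], int]
BaseFitter = Callable[[List[Point], List[int], List[float]], Classifier]


def fit_stump(X: List[Point], y: List[int], w: List[float]) -> Classifier:
    """Hypothetical weak learner: the axis-aligned threshold rule with the lowest
    weighted error. A stand-in for the KSVM-geometry weak classifiers."""
    best = (float("inf"), 0, 0.0, 1)  # (weighted error, feature, threshold, polarity)
    for j in range(len(X[0])):
        for thr in sorted({x[j] for x in X}):
            for pol in (1, -1):
                err = sum(wi for xi, yi, wi in zip(X, y, w)
                          if (pol if xi[j] >= thr else -pol) != yi)
                if err < best[0]:
                    best = (err, j, thr, pol)
    _, j, thr, pol = best
    return lambda x: pol if x[j] >= thr else -pol


def adaboost(X: List[Point], y: List[int], w0: List[float],
             fit_base: BaseFitter, rounds: int) -> Classifier:
    """Discrete binary AdaBoost that starts from the sample distribution w0."""
    total = sum(w0)
    w = [wi / total for wi in w0]
    ensemble: List[Tuple[float, Classifier]] = []
    for _ in range(rounds):
        h = fit_base(X, y, w)
        err = sum(wi for xi, yi, wi in zip(X, y, w) if h(xi) != yi)
        if err >= 0.5:
            break  # base learner is no better than chance under w
        err = max(err, 1e-10)  # keep the log finite for a perfect base learner
        alpha = 0.5 * math.log((1 - err) / err)
        ensemble.append((alpha, h))
        # Up-weight misclassified samples, down-weight correct ones, renormalize.
        w = [wi * math.exp(-alpha * yi * h(xi)) for xi, yi, wi in zip(X, y, w)]
        s = sum(w)
        w = [wi / s for wi in w]
        if err <= 1e-10:
            break  # training set already fitted exactly

    def predict(x: Point) -> int:
        score = sum(a * h(x) for a, h in ensemble)
        return 1 if score >= 0 else -1

    return predict


def nested_adaboost(X: List[Point], y: List[int],
                    outer_rounds: int = 3, inner_rounds: int = 5) -> Classifier:
    """Outer AdaBoost whose base learner is itself an inner AdaBoost over weak learners."""
    def fit_moderate(Xw: List[Point], yw: List[int], w: List[float]) -> Classifier:
        # Inner loop: boost weak stumps into one moderate learner, starting from
        # the outer loop's current weights (an assumption of this sketch).
        return adaboost(Xw, yw, w, fit_stump, inner_rounds)

    # Outer loop: boost the moderate learners into the global decision rule.
    return adaboost(X, y, [1.0] * len(X), fit_moderate, outer_rounds)


if __name__ == "__main__":
    # Toy 1-D data: the positive class is an interval, so no single stump separates it.
    X = [[0.0], [1.0], [2.0], [3.0], [4.0], [5.0]]
    y = [-1, -1, 1, 1, -1, -1]
    stump = fit_stump(X, y, [1.0 / 6] * 6)
    print(sum(stump(x) == t for x, t in zip(X, y)))  # 4 of 6 correct
    clf = nested_adaboost(X, y, outer_rounds=2, inner_rounds=3)
    print([clf(x) for x in X])  # [-1, -1, 1, 1, -1, -1]
```

On this toy set a single stump can only reach 4 of 6 correct, while three inner rounds already combine stumps into a moderate learner that fits the interval, which the outer loop then adopts with a large weight. Passing the outer weights into the inner loop is what makes the construction a genuine nested boosting procedure rather than an average of independently trained ensembles.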

Ethics declarations

Conflict of interest

The authors declare that they have no conflict of interest.

Human participants and/or animals

The authors also declare that this research does not involve human participants and/or animals.

Additional information

Communicated by V. Loia.

Publisher's Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

About this article

Cite this article

Filisbino, T.A., Giraldi, G.A. & Thomaz, C.E. Nested AdaBoost procedure for classification and multi-class nonlinear discriminant analysis. Soft Comput 24, 17969–17990 (2020). https://doi.org/10.1007/s00500-020-05045-w

DOI: https://doi.org/10.1007/s00500-020-05045-w