Abstract

The detection of moving objects from stationary cameras is usually approached by background subtraction, i.e., by constructing and maintaining an up-to-date model of the background and detecting moving objects as those that deviate from this model. We adopt a previously proposed approach to background subtraction based on self-organization through artificial neural networks, which has been shown to cope well with several well-known issues in background maintenance. Here, we propose a spatial coherence variant of this approach to enhance robustness against false detections, and we formulate a fuzzy model to deal with the decision problems that typically arise when crisp settings are involved. Experimental results and comparisons show that higher accuracy values can be reached for color video sequences that represent typical situations critical for moving object detection.


1 Introduction

Many computer vision applications, such as video surveillance or video compression, rely on detecting moving objects in video streams, which provides the segmentation of the scene into background and foreground components.

Compared to other approaches for detecting moving objects, such as optical flow [4], background subtraction is computationally affordable for real-time applications. It consists of maintaining an up-to-date model of the background and detecting moving objects as those that deviate from this model. Its main problem is therefore its sensitivity to dynamic scene changes, and the consequent need to adapt the background model via background maintenance. This problem is known to be significant and difficult [16], and several well-known background maintenance issues have to be taken into account, such as light changes, moving background, cast shadows, bootstrapping and camouflage. Due to its pervasiveness in various contexts, background subtraction has been addressed by many researchers, and a large body of literature has been published (see the surveys in [5, 11, 14] and, more recently, [7]). In [12, 13], we proposed the self-organizing background subtraction (SOBS) algorithm, which detects moving objects using a background model generated automatically by a self-organizing method, without prior knowledge of the involved patterns. This adaptive model can handle scenes containing moving backgrounds, gradual illumination variations and camouflage, can incorporate shadows cast by moving objects into the background model, and achieves robust detection for different types of videos taken with stationary cameras.

One of the main issues in background subtraction is the uncertainty in detection caused by the background maintenance issues cited above. Crisp settings are usually needed to define the method parameters, which does not allow uncertainty in the background model to be handled properly. Recently, several authors have explored fuzzy approaches to tackle different aspects of moving object detection. In [18], the fuzzy Sugeno integral is used to fuse texture and color features for background subtraction, while in [2, 3], the authors adopt the Choquet integral to aggregate the same features. In [15], the authors propose a fuzzy approach to selective running average background modeling, and in [1], the authors model the background by the Type-2 fuzzy mixture of Gaussians model proposed in [17].

In the present work, we propose to extend and enhance the SOBS algorithm by introducing spatial coherence and by taking into account uncertainty in the background model. Specifically, we present a variant of the SOBS algorithm that incorporates spatial coherence into background subtraction. It is useful to think of spatial coherence in terms of the intensity difference between locally contiguous pixels: neighboring pixels showing small intensity differences are coherent, while neighboring pixels with high intensity differences are incoherent. We will show that exploiting the spatial coherence of scene objects with respect to the scene background provides greater robustness against false detections. Moreover, we propose a fuzzy approach to this SOBS variant, with the aim of introducing, during the update phase of the background model, an automatic and data-dependent mechanism that further reinforces the contribution of pixels that belong to the background model. These considerations lead us to formulate the algorithm as a fuzzy rule-based procedure, where fuzzy functions are computed pixel-by-pixel on the basis of the background subtraction phase and combined through the product rule. We will show that the proposed fuzzy approach, implemented in what we call the SOBS_CF (fuzzy and coherence-based SOBS) algorithm, further improves the accuracy of the corresponding crisp moving object detection procedure and performs better than the other compared methods.

The paper is organized as follows. In Sect. 2, we describe the basic model adopted from [12, 13] and the proposed modification. In Sect. 3, we detail the proposed fuzzy approach to moving object detection, while in Sect. 4, we give a qualitative and quantitative evaluation of the accuracy of the proposed approach, comparing its results with those of the analogous crisp approach and of other moving object detection approaches. Conclusions are drawn in Sect. 5.

2 SOBS algorithm and introduction of spatial coherence

The background model constructed and maintained in the SOBS algorithm [12, 13], adopted here, is based on a self-organizing neural network organized as a 2D flat grid of neurons. Each neuron computes a function of the weighted linear combination of its inputs, where the weights embody what the network has learned, and can therefore be represented by a weight vector collecting the weights of its incoming links. An incoming pattern is mapped to the neuron whose weight vector is most similar to the pattern, and the weight vectors in a neighborhood of that neuron are updated.

For each pixel, we build a neuronal map consisting of n × n weight vectors, all represented in the HSV color space, which specifies colors in a way that is close to human color perception. Each weight vector \(c_i\), \(i = 1, \ldots, n^2\), is therefore a 3D vector, initialized to the HSV components of the corresponding pixel of the first sequence frame \(I_0\). The complete set of weight vectors for all pixels of an image I with N rows and M columns is represented as a neuronal map \(\tilde{B}\) with n × N rows and n × M columns, where adjacent blocks of n × n weight vectors correspond to adjacent pixels in image I. An example of this neuronal map structure for a simple image I with N = 2 rows and M = 3 columns, obtained by choosing n = 3, is given in Fig. 1. The upper center pixel b of sequence frame I in Fig. 1a has weight vectors \((b_1, \ldots, b_9)\) stored in the 3 × 3 elements of the upper center part of the neuronal map \(\tilde{B}\) in Fig. 1b, and analogous relations hold between each pixel of I and the storage of its weight vectors.
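To make the block layout of Fig. 1 concrete, the following Python sketch maps the \(i\)th weight vector of image pixel (r, c) to its position in \(\tilde{B}\) and builds the initial map from \(I_0\). It is illustrative only: the function names are hypothetical, indices are 0-based (so \(b_1, \ldots, b_9\) correspond to i = 0 to 8), and frames are assumed to be lists of lists of HSV triples.

```python
def map_position(r, c, i, n):
    """Return the (row, col) position in the neuronal map of the i-th weight
    vector (0-based, row-major inside the n x n block) of image pixel (r, c)."""
    if not 0 <= i < n * n:
        raise ValueError("weight-vector index out of range")
    return r * n + i // n, c * n + i % n


def init_map(frame, n):
    """Build the (n*N) x (n*M) neuronal map from the first frame I_0:
    every weight vector of pixel (r, c) starts as that pixel's HSV triple."""
    N, M = len(frame), len(frame[0])
    return [[frame[row // n][col // n] for col in range(n * M)]
            for row in range(n * N)]
```

For the Fig. 1 example (N = 2, M = 3, n = 3), pixel b sits at (0, 1), so `map_position(0, 1, 0, 3)` gives (0, 3) and `map_position(0, 1, 8, 3)` gives (2, 5): its block occupies rows 0 to 2 and columns 3 to 5 of a 6 × 9 map.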

By subtracting the current image from the background model, each pixel \(p_t\) of the \(t\)th sequence frame \(I_t\) is compared with the current weight vectors of that pixel to determine whether one of them matches it. The best matching weight vector is used as the pixel's encoding approximation: \(p_t\) is detected as foreground if no acceptable matching weight vector exists, and is classified as background otherwise.

Matching for the incoming pixel \(p_t\) is performed by looking for a weight vector \(c_m(p_t)\) in the set \(C(p_t)=(c_1(p_t), \ldots, c_{n^2}(p_t))\) of the current pixel weight vectors satisfying:

\[ d(c_m(p_t), p_t) = \min_{i=1,\ldots,n^2} d(c_i(p_t), p_t) \le \varepsilon, \tag{1} \]

where the metric \(d(\cdot)\) and the threshold \(\varepsilon\) are suitably chosen as in [12, 13].
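The matching step of Eq. 1 can be sketched as follows for a single pixel. This is an illustration under assumptions: the metric is taken to be the Euclidean distance between HSV triples, whereas the paper chooses \(d(\cdot)\) as in [12, 13], and a plain Euclidean distance ignores the circular nature of hue; the helper names are hypothetical.

```python
import math


def hsv_distance(a, b):
    """Assumed metric: plain Euclidean distance between HSV triples
    (hue wrap-around is ignored in this sketch)."""
    return math.dist(a, b)


def best_match(weights, p, eps, d=hsv_distance):
    """Eq. 1: find the weight vector in C(p_t) closest to pixel p.

    Returns (index, distance) when that distance is <= eps (p is background),
    otherwise None (no acceptable match, so p is foreground)."""
    dists = [d(c, p) for c in weights]
    m = min(range(len(dists)), key=dists.__getitem__)
    return (m, dists[m]) if dists[m] <= eps else None
```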

Let the best matching weight vector \(c_m(p_t)\) be situated in position \((\overline{x}, \overline{y})\) of the background model \(\tilde{B}_t\); this weight vector, together with all other weight vectors in an n × n neighborhood \({\mathcal{N}}_{c_m(p_t)}\) of the background model \(\tilde{B}_t\), is updated according to the selective weighted running average:

\[ \tilde{B}_t(i,j) = (1-\alpha_{i,j}(t))\,\tilde{B}_{t-1}(i,j) + \alpha_{i,j}(t)\,p_t, \qquad i = \overline{x}-\lfloor n/2 \rfloor, \ldots, \overline{x}+\lfloor n/2 \rfloor, \quad j = \overline{y}-\lfloor n/2 \rfloor, \ldots, \overline{y}+\lfloor n/2 \rfloor, \tag{2} \]

where \(\alpha_{i,j}(t)\) is a learning factor belonging to [0, 1] that depends on scene variability. As an example, in Fig. 1, if \(b_9\) is the best match to image pixel \(p_t = b\) according to Eq. 1, then the weight vectors of the background model \(\tilde{B}_t\) belonging to the highlighted neighborhood \({\mathcal{N}}_{b_9}\) are updated according to Eq. 2.

Note that if no best match \(c_m(p_t)\) satisfying Eq. 1 is found, the background model \(\tilde{B}\) remains unchanged. This selectivity allows the background model to adapt to scene changes without incorporating the contribution of pixels that do not belong to the background scene.
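A minimal sketch of the selective update of Eq. 2, assuming the neuronal map is stored as a list of lists of HSV triples and that the caller has already found a best match (the selectivity described above). How the n × n window is handled at the map border is not stated in the text; skipping out-of-range cells is an assumption here, as is the function name.

```python
def update_neighborhood(B, xbar, ybar, alpha, p):
    """Eq. 2 (selective weighted running average), applied in place.

    B           : neuronal map, a list of lists of HSV tuples
    (xbar, ybar): map position of the best match c_m(p_t)
    alpha       : n x n learning factors alpha_ij(t), n odd
    p           : the incoming pixel p_t
    Call this only when Eq. 1 found a best match; otherwise B stays unchanged.
    Window cells outside the map are skipped (border-handling assumption)."""
    n = len(alpha)
    h = n // 2
    for a in range(n):
        for b in range(n):
            i, j = xbar - h + a, ybar - h + b
            if 0 <= i < len(B) and 0 <= j < len(B[0]):
                al = alpha[a][b]
                # Move each stored component toward p by the factor al.
                B[i][j] = tuple((1 - al) * old + al * new
                                for old, new in zip(B[i][j], p))
```

With n = 3 and a best match at the bottom-right weight vector of a block (as for \(b_9\) in Fig. 1, map position (2, 5) with 0-based indices), the window covers rows 1 to 3 and columns 4 to 6, so it also touches the blocks of the pixels to the right of and below b. This is how the update spreads information between the models of adjacent pixels.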

As stated in the introduction, in this paper we propose a variant of the SOBS algorithm obtained by introducing spatial coherence into the update formula, in order to enhance robustness against false detections.

Let \(p = (p_x, p_y)\) be a generic pixel of image I, and let

\[ N_p = \{ q \in I : |p_x - q_x| \le k \,\wedge\, |p_y - q_y| \le k \} \]

be the square spatial neighborhood of pixel \(p \in I\) with fixed half-width \(k \in {\mathbb{N}}\), i.e., of (2k + 1) × (2k + 1) pixels. We consider the set \(\Omega_p\) of pixels belonging to \(N_p\) that have a best match in their background model according to Eq. 1, i.e.

\[ \Omega_p = \{ q \in N_p : d(c_m(q), q) \le \varepsilon \}. \]

In analogy with [6], the neighborhood coherence factor is defined as:

\[ NCF(p) = \frac{|\Omega_p|}{|N_p|}, \]

where |·| denotes set cardinality. This factor gives a relative measure of the number of pixels in the spatial neighborhood \(N_p\) of a given pixel p that are well represented by the background model. If NCF(p) > 0.5, most of the pixels in this spatial neighborhood are well represented by the background model, which suggests that pixel p is also well represented by the background model.
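The neighborhood coherence factor can be computed from a boolean mask recording which pixels found a best match under Eq. 1. The sketch below is one reading of the definitions above, assuming \(N_p\) is the square of pixels within k rows and k columns of p, clipped at the image border; the mask representation and the function name are hypothetical.

```python
def ncf(match_mask, r, c, k):
    """Neighborhood coherence factor NCF(p) = |Omega_p| / |N_p| for p = (r, c).

    match_mask[r][c] is True when pixel (r, c) has a best match satisfying
    Eq. 1, so Omega_p is the set of True cells inside N_p. N_p is taken as
    the (2k+1) x (2k+1) square centred on p, clipped at the image border
    (window shape and clipping are assumptions of this sketch)."""
    rows, cols = len(match_mask), len(match_mask[0])
    window = [(i, j)
              for i in range(max(0, r - k), min(rows, r + k + 1))
              for j in range(max(0, c - k), min(cols, c + k + 1))]
    matched = sum(1 for i, j in window if match_mask[i][j])
    return matched / len(window)
```

An isolated unmatched pixel surrounded by matched ones gets NCF = 8/9 > 0.5 with k = 1, so spatial coherence regards it as well represented by the background; this is the mechanism by which isolated false detections are suppressed.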

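The learning factors \(\alpha_{i,j}(t)\) in Eq. 2 can therefore be expressed as

\[ \alpha_{i,j}(t) = M(p_t)\,\alpha(t)\,w_{i,j}, \tag{3} \]

where \(w_{i,j}\) are Gaussian weights in the n × n neighborhood, \(\alpha(t)\) is a learning factor that is the same for every pixel of the \(t\)th sequence frame, and \(M(p_t)\) is the crisp hard-limited function

\[ M(p_t) = \begin{cases} 1 & \text{if } NCF(p_t) > 0.5, \\ 0 & \text{otherwise.} \end{cases} \tag{4} \]

The function \(M(\cdot)\) gives the background/foreground segmentation of pixel \(p_t\), also taking spatial coherence into account.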
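The crisp learning factors can be sketched as below. The text specifies only "Gaussian weights"; the standard deviation σ = 1 and the choice of scaling the central weight to 1 are assumptions, and M is implemented as the hard limiter NCF > 0.5 suggested by the discussion of NCF above. Function names are hypothetical.

```python
import math


def gaussian_weights(n, sigma=1.0):
    """n x n Gaussian weights w_ij centred on the window; the centre weight is 1
    (sigma and this scaling are assumptions, not taken from the paper)."""
    h = n // 2
    return [[math.exp(-((a - h) ** 2 + (b - h) ** 2) / (2 * sigma ** 2))
             for b in range(n)] for a in range(n)]


def crisp_M(ncf_value):
    """Hard-limited segmentation: 1 (background) if NCF > 0.5, else 0."""
    return 1.0 if ncf_value > 0.5 else 0.0


def crisp_learning_factors(ncf_value, alpha_t, n, sigma=1.0):
    """alpha_ij(t) = M(p_t) * alpha(t) * w_ij for the whole n x n window."""
    m = crisp_M(ncf_value)
    return [[m * alpha_t * w for w in row] for row in gaussian_weights(n, sigma)]
```

With these choices the central weight vector (the best match itself) receives the full \(\alpha(t)\), and the factors decay with distance inside the window; a spatially incoherent pixel (NCF ≤ 0.5) produces no update at all.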

3 Fuzzy rule

The background updating rule may be formulated in terms of a production rule of the type: \(\tt{if}\) (condition) \(\tt{then}\) (action) [8], incorporating knowledge of the world in which the system works, such as knowledge of objects and their spatial relations. When the condition in the production rule is satisfied, the action is performed. In most real systems, many conditions are only partially satisfied, so reasoning should be performed with partial or incomplete information representing multiple hypotheses. For example, in a rule-based outdoor scene understanding system, a typical rule may be: \(\tt{if}\) (a region is rather straight and highly uniform and the region is surrounded by a field region) \(\tt{then}\) (confidence of road is high). Terms such as "rather straight", "highly uniform", and "surrounded by" are known as linguistic labels. Fuzzy set theory provides a natural and effective mechanism to represent the vagueness inherent in these labels. The flexibility and power that fuzzy set theory provides for knowledge representation make fuzzy rule-based systems very attractive compared with traditional rule-based systems.

In our case, the uncertainty resides in determining suitable thresholds in the background model. Following this reasoning, the fuzzy background subtraction and update algorithm for a generic pixel \(p_t \in I_t\) can be stated through a fuzzy rule-based system as follows:

Fuzzy rule-based background subtraction and update algorithm

- \(\tt{if}\) (\(d(c_m(p_t),p_t)\) \(\tt{is\;Low}\)) \(\tt{and}\) (\(NCF(p_t)\) \(\tt{is\;High}\)) \(\tt{then}\)
- \(\qquad {\tt{Update}}\;\tilde{B}_{t}\)
- \(\tt{endif}\)

Let \(F_1(p_t)\) be the fuzzy membership function of \(d(c_m(p_t),p_t)\) to the fuzzy set \(\tt{Low}\) and \(F_2(p_t)\) be the fuzzy membership function of \(NCF(p_t)\) to the fuzzy set \(\tt{High}\). Combining the two antecedents through the product rule, the fuzzy rule becomes:

\[ \alpha_{i,j}(t) = F_1(p_t)\,F_2(p_t)\,\alpha(t)\,w_{i,j}, \tag{5} \]

where functions \(F_1(p_t)\) and \(F_2(p_t)\) will be derived in the following subsections.
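In code, the conjunction in the rule can be evaluated with the product rule mentioned in the Introduction. The sketch below assumes that, by analogy with the crisp factor \(M(p_t)\) in Eq. 3, the resulting firing strength \(F_1(p_t)\,F_2(p_t)\) scales \(\alpha(t)\,w_{i,j}\); the membership degrees are taken as given inputs, and the function name is hypothetical.

```python
def fuzzy_learning_factors(f1, f2, alpha_t, weights):
    """alpha_ij(t) = F1(p_t) * F2(p_t) * alpha(t) * w_ij.

    f1, f2  : membership degrees in [0, 1] of the two rule antecedents
    weights : n x n matrix of Gaussian weights w_ij
    The AND of the antecedents is computed with the product t-norm."""
    for f in (f1, f2):
        if not 0.0 <= f <= 1.0:
            raise ValueError("membership degrees must lie in [0, 1]")
    strength = f1 * f2  # firing strength of the rule
    return [[strength * alpha_t * w for w in row] for row in weights]
```

When both membership degrees are restricted to {0, 1}, the product behaves like a hard-limited switch such as \(M(p_t)\); intermediate degrees instead scale the update continuously.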

3.1 Fuzzy updating

In order to take into account the uncertainty in the background model arising from the choice of a suitable threshold \(\varepsilon\) in Eq. 1, \(F_1(p_t)\) is chosen as a saturating linear function given by

The function \(F_1(p_t)\), whose values are normalized in [0, 1], can be considered as the membership degree of \(p_t\) to the background model: the closer the incoming sample \(p_t\) is to the background model \(C(p_t) =(c_1(p_t), c_2(p_t), \ldots, c_{n^2}(p_t))\), the larger the corresponding value \(F_1(p_t)\). Therefore, incorporating \(F_1(p_t)\) in Eq. 5 ensures that the closer the incoming sample \(p_t\) is to the background model, the more it contributes to the background model update, thus further reinforcing the corresponding weight vectors.
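The expression of \(F_1\) is not reproduced in this text. A common saturating linear choice consistent with the description above, equal to 1 for a perfect match and decreasing linearly to 0 at the threshold \(\varepsilon\) of Eq. 1, is sketched below; these breakpoints are an assumption, not necessarily the authors' exact formula.

```python
def f1_membership(d, eps):
    """Hypothetical saturating linear membership of d = d(c_m(p_t), p_t)
    to the fuzzy set Low: 1 at d = 0, linear down to 0 at d = eps, 0 beyond."""
    if eps <= 0:
        raise ValueError("eps must be positive")
    return max(0.0, min(1.0, 1.0 - d / eps))
```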

3.2 Fuzzy coherence

The spatial coherence introduced through Eq. 4 can also be formulated with a fuzzy approach. Indeed, the greater NCF(p), the greater the number of pixels in \(N_p\) that are well represented by the background model, and the more confidently pixel p itself can be considered as represented by the background model. To take this into account, we modify the learning factors defined in Eq. 3 as follows:

where \(F_2(p_t)\) is given as

and can be considered as the membership degree of pixel p t to the background model.

3.3 Fuzzy algorithm

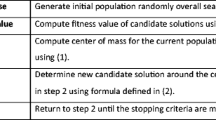

Summarizing, the proposed fuzzy background subtraction and update procedure can be stated as follows. Given an incoming pixel value \(p_t\) in sequence frame \(I_t\), the estimated background model \(\tilde{B}_{t}\) is obtained through the following algorithm:

Fuzzy background subtraction and update algorithm

- \(\tt{Initialize\ weight\ vectors}\;C(p_0)\;\tt{for\ pixel}\;p_0\;\tt{and\ store\ it\ into}\;\tilde{B}_0\)
- \({\bf for}\ \tt{t}=1, \tt{LastFrame}\)
- \({\quad\tt{Find\ best\ match}}\ c_m(p_t)\ {\tt{in}}\ C(p_t)\ {\tt{to\ current\ sample}}\ p_t\ {\tt{as\ in\ Eq.}}\,1\)
- \({\quad\tt{Compute\ learning\ factors}}\;\alpha_{i,j}(t)\)
- \(\quad{\tt{Update}}\ \tilde{B}_{t}\ {\tt{in\ the\ neighborhood\ of}}\ c_m\ {\tt{as\ in\ Eq.}}\,2\)
- \({\bf endfor}\)

The crisp SOBS algorithm is obtained if the learning factors \(\alpha_{i,j}(t)\) of the update step are chosen as in Eq. 3, while the proposed fuzzy algorithm, denoted in the following as SOBS_CF, is obtained if they are chosen as in Eq. 5.
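Putting the pieces together, the following self-contained Python sketch runs one iteration of the loop for a whole frame, in either the crisp (Eq. 3) or the fuzzy form. It is a simplified illustration rather than the authors' C implementation: it assumes a Euclidean \(d(\cdot)\), Gaussian weights with σ = 1, border clipping, and, for the fuzzy variant, the hypothetical memberships \(F_1 = \max(0, 1 - d/\varepsilon)\) and \(F_2 = NCF\), since the exact membership functions are not reproduced here. The function name `process_frame` is hypothetical.

```python
import math


def process_frame(B, frame, n, eps, alpha_t, k=1, sigma=1.0, fuzzy=True):
    """One pass of the loop body over all pixels of frame I_t (sketch).

    B     : (n*N) x (n*M) neuronal map of HSV tuples, updated in place
    frame : N x M list of lists of HSV tuples
    Returns the N x M foreground mask (True = foreground), from NCF > 0.5.
    Assumed: Euclidean d(.), Gaussian w_ij (centre weight 1), clipped NCF
    window, and hypothetical F1 = max(0, 1 - d/eps), F2 = NCF when fuzzy."""
    N, M, h = len(frame), len(frame[0]), n // 2
    w = [[math.exp(-((a - h) ** 2 + (b - h) ** 2) / (2 * sigma ** 2))
          for b in range(n)] for a in range(n)]

    # Find the best match (Eq. 1) of every pixel inside its own n x n block.
    match = [[None] * M for _ in range(N)]
    for r in range(N):
        for c in range(M):
            d, x, y = min((math.dist(B[r * n + a][c * n + b], frame[r][c]),
                           r * n + a, c * n + b)
                          for a in range(n) for b in range(n))
            if d <= eps:
                match[r][c] = (d, x, y)

    fg = [[True] * M for _ in range(N)]
    for r in range(N):
        for c in range(M):
            # NCF over the (2k+1) x (2k+1) neighbourhood, clipped at borders.
            cells = [(i, j) for i in range(max(0, r - k), min(N, r + k + 1))
                     for j in range(max(0, c - k), min(M, c + k + 1))]
            coherence = sum(match[i][j] is not None for i, j in cells) / len(cells)
            fg[r][c] = coherence <= 0.5
            if match[r][c] is None:
                continue  # selective update: no best match, no change
            d, x, y = match[r][c]
            # Scale of the learning factors: F1*F2 (fuzzy) or M(p_t) (crisp).
            if fuzzy:
                strength = max(0.0, 1.0 - d / eps) * coherence
            else:
                strength = 0.0 if fg[r][c] else 1.0
            # Weighted running average in the window around the best match.
            for a in range(n):
                for b in range(n):
                    i, j = x - h + a, y - h + b
                    if 0 <= i < n * N and 0 <= j < n * M:
                        al = strength * alpha_t * w[a][b]
                        B[i][j] = tuple((1 - al) * o + al * v
                                        for o, v in zip(B[i][j], frame[r][c]))
    return fg
```

Computing all best matches before any update keeps the segmentation independent of the order in which pixels are visited, although the updates themselves are still applied sequentially to overlapping windows.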

4 Experimental results

Results obtained by the proposed SOBS_CF algorithm have been compared with those obtained by other existing algorithms on several image sequences, in terms of different metrics. Compared methods, test data, qualitative and quantitative results will be described in the following.

4.1 Methods considered for comparison

Compared methods will be referred to as SOBS, CB, and FBGS.

The SOBS algorithm, already described in Sect. 2, is the crisp version of the proposed SOBS_CF algorithm. It has been shown [12, 13] that the SOBS adaptive model can handle scenes containing moving backgrounds, gradual illumination variations and camouflage, can incorporate shadows cast by moving objects into the background model, and achieves robust detection for different types of videos taken with stationary cameras. We will show that SOBS_CF, which is strongly based on the SOBS model, preserves these features.

The CB algorithm, reported in [9], is based on vector quantization to incrementally construct a codebook in order to generate a background model, and the best match is found based on a color distortion measure and brightness bounds. It has been shown to handle dynamic backgrounds and illumination variations.

FBGS algorithm, presented in [15], adopts selective running average for background modeling, where model learning factors for each pixel are defined in terms of a fuzzy function for background subtraction.

For the SOBS and FBGS algorithms, we implemented prototype versions in the C programming language, while an implementation of the CB algorithm is available on the web (http://www.umiacs.umd.edu/~knkim/UMD-BGS/index.HTML). For all algorithms, we experimented with different settings of the adjustable parameters until the results seemed optimal over the entire image sequence. No morphological operations have been added to post-process the obtained detection masks.

Further comparisons have been made with two other methods, referred to as T2 FMGM-UM and T2 FMGM-UV. In [1], the authors model the background using the Type-2 Fuzzy Mixture of Gaussians Model (T2 FMGM) proposed in [17], which accounts for uncertainty in the mean vectors (UM) or in the covariance matrices (UV). The comparison of our approach with the T2 FMGM-UM and T2 FMGM-UV algorithms is based on the accuracy results reported in [1] for the frames of sequence Seq00 shown in Fig. 4 (see Sect. 4.2).

4.2 Data and qualitative results

Experimental results for moving object detection using the proposed approach have been produced for several image sequences. Here, we describe three different sequences, in the following named IR, WT, and Seq00, that represent typical situations critical for moving object detection, and present qualitative results obtained with the proposed and the compared methods.

Sequence Intelligent Room (IR) comes from publicly available sequences (http://cvrr.ucsd.edu/aton/shadow/index.html). The indoor scene consists of an initially empty meeting room, where a man comes in and walks around. The sequence consists of 300 frames of 320 × 240 spatial resolution, and we consider the hand-segmented background mask available for frame 230. The considered test image and the related binary ground truth are reported in Fig. 2a, b, respectively, while the corresponding foreground masks computed by the SOBS_CF, SOBS, CB, and FBGS algorithms are reported in Fig. 2c–f. From these results, it can be observed that all the algorithms were quite successful in modeling the background and in detecting the walking man. Therefore, for this indoor scene, no appreciable difference among the considered approaches can be subjectively observed.

Sequence waving trees (WT) belongs to a set of sequences that represent some of the canonical problems for background subtraction highlighted in the paper by Toyama et al. [16]. It has been chosen to test the ability of our method to cope with moving background. The outdoor scene includes trees moving in the background and, later in the sequence, a man passing in front of the camera; here, we are not interested in the waving trees, but only in extraneous moving objects (the man). The sequence contains 287 frames of 160 × 120 spatial resolution, captured at a frequency of 15 frames per second (fps). The hand-segmented background (Fig. 3b) is given for just one test frame (Fig. 3a). The foreground mask computed by the proposed SOBS_CF algorithm is reported in Fig. 3c, while those computed by the SOBS, CB, and FBGS algorithms are reported in Fig. 3d–f, respectively. Here, it can be observed that, although the walking man has been almost perfectly detected in all cases, SOBS_CF is the algorithm that best models the waving trees as background, which we attribute to its spatial coherence and fuzzy background update.

The outdoor scene of sequence Seq00, coming from publicly available sequences (http://www.cs.cmu.edu/~yaser/Data/), consists of a street crossing, where several people and cars pass by. The sequence consists of 500 frames of 360 × 240 spatial resolution, all provided with ground truth masks. Some of the considered test images with the related binary ground truth are reported in the first and second rows of Fig. 4, while the corresponding foreground masks computed by the SOBS_CF, SOBS, CB, and FBGS algorithms are reported in the third, fourth, fifth, and sixth rows of Fig. 4, respectively. From these results, it can be observed that, although all the algorithms were quite successful in detecting the walking man and the moving car, the CB and FBGS algorithms, and to some extent also the SOBS algorithm, detected several false-positive pixels, while spatial coherence together with fuzzy background update allow the SOBS_CF algorithm to attain the most accurate detection results.

4.3 Quantitative evaluation

Results obtained by the proposed SOBS_CF algorithm have been compared with those obtained by other methods in terms of different pixel-based metrics, namely Precision, Recall, and F-measure.

Recall, also known as detection rate, gives the fraction of true-positive pixels detected with respect to the total number of foreground pixels in the ground truth:

\[ \mathrm{Recall} = \frac{tp}{tp + fn}, \]

where tp is the total number of true-positive pixels, fn is the total number of false-negative pixels, and (tp + fn) is the total number of foreground pixels in the ground truth.

Recall alone is not enough to compare different methods, and is generally used in conjunction with Precision, also known as positive predictive value, which gives the fraction of true-positive pixels among all the pixels detected by the method:

\[ \mathrm{Precision} = \frac{tp}{tp + fp}, \]

where fp is the total number of false-positive pixels and (tp + fp) is the total number of detected pixels.

Using the above-mentioned metrics, generally a method is considered good if it reaches high Recall values, without sacrificing Precision.

Moreover, we considered the F-measure, also known as figure of merit or \(F_1\) metric, which is the harmonic mean of Precision and Recall:

\[ F = \frac{2 \cdot \mathrm{Precision} \cdot \mathrm{Recall}}{\mathrm{Precision} + \mathrm{Recall}}. \]

This yields a single measure that can be used to "rank" different methods.

All the above-considered measures attain values in [0, 1], and the higher is the value, the better is the accuracy.
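The three metrics can be computed from a detected mask and a ground-truth mask as below. Returning 0.0 when a denominator is zero (for example, an empty ground truth) is a convention chosen for this sketch, not something the text specifies; the function name is hypothetical.

```python
def precision_recall_f(detected, truth):
    """Pixel-based Precision, Recall and F-measure for two equally sized
    binary masks (lists of lists of bool, True = foreground)."""
    tp = fp = fn = 0
    for drow, trow in zip(detected, truth):
        for d, t in zip(drow, trow):
            tp += d and t            # detected and in the ground truth
            fp += d and not t        # detected but background in ground truth
            fn += (not d) and t      # missed foreground pixel
    recall = tp / (tp + fn) if tp + fn else 0.0
    precision = tp / (tp + fp) if tp + fp else 0.0
    f = (2 * precision * recall / (precision + recall)
         if precision + recall else 0.0)
    return precision, recall, f
```

For instance, with tp = 2, fp = 1 and fn = 1, Precision = Recall = 2/3, and so is the F-measure.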

Accuracy values obtained by SOBS_CF, SOBS, CB, and FBGS algorithms for sequences IR and WT are reported in Fig. 5a, b. Here, we can observe that, although all considered algorithms perform quite well, SOBS_CF performs slightly better than the others in terms of all considered metrics.

In Fig. 6, we report the accuracy values obtained by all the methods considered for comparison on the outdoor sequence Seq00. These values have been obtained by averaging the accuracy results on the selected frames shown in the first row of Fig. 4. Here, we can observe that the SOBS_CF algorithm performs better than all the other methods, while the T2 FMGM methods perform worse in terms of all considered accuracy metrics.

The computational complexity of the SOBS_CF algorithm, in terms of both space and time, is \(O(n^2 N M)\), where \(n^2\) is the number of weight vectors used to model each pixel and N × M is the image size. Average frame rates on a Pentium 4 at 2.40 GHz with 512 MB RAM, running the Windows XP operating system and choosing n = 3, range from 75 fps for sequence WT to 15 fps for the higher-resolution sequence Seq00.
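As a worked instance of the \(O(n^2 N M)\) bound, the following plain arithmetic (counting only the weight vectors, not the NCF window or implementation overheads) sizes the neuronal map for sequence Seq00 (360 × 240 pixels, i.e., N = 240 rows and M = 360 columns) with n = 3:

\[
\begin{aligned}
n^2 N M &= 9 \times 240 \times 360 = 777{,}600 \ \text{weight vectors},\\
3\, n^2 N M &= 2{,}332{,}800 \ \text{HSV components},\\
nN \times nM &= 720 \times 1080 \ \text{map elements}.
\end{aligned}
\]

For WT (160 × 120 pixels), the same count gives 9 × 19,200 = 172,800 weight vectors. Seq00 therefore has 86,400 / 19,200 = 4.5 times as many pixels as WT, which is of the same order as the ratio 75 / 15 = 5 between the reported frame rates, as one would expect from a cost that grows linearly with NM.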

5 Conclusions

In this paper, we propose to extend a previously proposed method for moving object detection [12, 13] by introducing spatial coherence and a fuzzy learning factor into the background model update procedure. The adopted method is based on self-organization through artificial neural networks, and implements the idea of using visual attention mechanisms to help detect objects that attract the user's attention in accordance with a set of predefined scene features. Here, we present a variant of the adopted method that incorporates spatial coherence to enhance robustness against false detections, and we introduce a fuzzy function, computed pixel-by-pixel on the basis of the background subtraction phase. This function is used in the background model update phase, providing an automatic and data-dependent mechanism that further reinforces the contribution of pixels that belong to the background model. Experimental results on real color video sequences that represent typical situations critical for moving object detection show that the proposed fuzzy approach further improves the accuracy of the corresponding crisp moving object detection procedure and compares favorably with other existing methods.

References

[1] Baf FE, Bouwmans T, Vachon B (2008) Type-2 fuzzy mixture of Gaussians model: application to background modeling. 4th international symposium on visual computing, ISVC 2008, Las Vegas, USA, pp 772–781

[2] Baf FE, Bouwmans T, Vachon B (2008) A fuzzy approach for background subtraction. IEEE international conference on image processing, ICIP 2008, San Diego, CA, USA

[3] Baf FE, Bouwmans T, Vachon B (2008) Fuzzy integral for moving object detection. IEEE international conference on fuzzy systems, FUZZ-IEEE 2008, Hong Kong, China, 1–6 June 2008, pp 1729–1736

[4] Barron JL, Fleet DJ, Beauchemin SS (1994) Performance of optical flow techniques. Int J Comput Vis 12(1):42–77

[5] Cheung S-C, Kamath C (2004) Robust techniques for background subtraction in urban traffic video. In: Proceedings of EI-VCIP, pp 881–892

[6] Ding J, Ma R, Chen S (2008) A scale-based connected coherence tree algorithm for image segmentation. IEEE Trans Image Process 17(2):204–216

[7] Elhabian SY, El-Sayed KM, Ahmed SH (2008) Moving object detection in spatial domain using background removal techniques—state-of-art. Recent Pat Comput Sci 1:32–54

[8] Keller JM (1996) Fuzzy logic rules in low and mid level computer vision tasks. In: Proceedings of fuzzy information processing society, Berkeley, CA, USA, pp 19–22

[9] Kim K, Chalidabhongse TH, Harwood D, Davis LS (2005) Real-time foreground-background segmentation using codebook model. Real-Time Imaging 11:172–185

[10] Kohonen T (1988) Self-organization and associative memory, 2nd edn. Springer, Berlin

[11] Piccardi M (2004) Background subtraction techniques: a review. In: Proceedings of IEEE international conference on systems, man and cybernetics, pp 3099–3104

[12] Maddalena L, Petrosino A (2008) A self-organizing approach to background subtraction for visual surveillance applications. IEEE Trans Image Process 17(7):1168–1177

[13] Maddalena L, Petrosino A, Ferone A (2008) Object motion detection and tracking by an artificial intelligence approach. Int J Pattern Recognit Artif Intell 22(5):915–928

[14] Radke RJ, Andra S, Al-Kofahi O, Roysam B (2005) Image change detection algorithms: a systematic survey. IEEE Trans Image Process 14(3):294–307

[15] Sigari MH, Mozayani N, Pourreza HR (2008) Fuzzy running average and fuzzy background subtraction: concepts and application. Int J Comput Sci Netw Secur 8(2):138–143

[16] Toyama K, Krumm J, Brumitt B, Meyers B (1999) Wallflower: principles and practice of background maintenance. Proc Seventh IEEE Conf Comput Vis 1:255–261

[17] Zeng J, Xie L, Liu Z (2008) Type-2 fuzzy Gaussian mixture models. Pattern Recognit 41(12):3636–3643

[18] Zhang H, Xu D (2006) Fusing color and texture features for background model. In: Wang L et al (eds) FSKD 2006, LNAI 4223. Springer, Berlin, pp 887–893

Cite this article

Maddalena, L., Petrosino, A. A fuzzy spatial coherence-based approach to background/foreground separation for moving object detection. Neural Comput & Applic 19, 179–186 (2010). https://doi.org/10.1007/s00521-009-0285-8
