Abstract

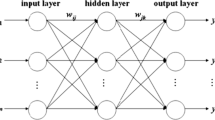

This paper establishes a new optimized classification algorithm that assembles neural networks on the basis of Ordinary Least Squares (OLS). When neural networks are used to recognize complex high-dimensional data, designing the network is a challenge, and a single network model can rarely achieve satisfactory recognition accuracy. First, feature dimensionality reduction is performed so that the network is easier to design. An Elman neural network algorithm based on principal component analysis (PCA) is taken as sub-classifier I; its recognition precision is relatively high, but its convergence rate is unsatisfactory. A radial basis function (RBF) neural network algorithm based on factor analysis is taken as sub-classifier II; it converges quickly, but its recognition precision is relatively low. To compensate for these deficiencies, the two sub-classifiers are combined by ensemble learning, and the optimal weight of each sub-classifier is determined by the OLS principle. The resulting assembled optimized classification algorithm partly makes up for the information lost through dimensionality reduction. Finally, the model is validated by case analysis.
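The abstract does not spell out how the OLS weights are computed. The sketch below shows one plausible reading, assuming each sub-classifier emits an output vector per sample and the ensemble output is the weighted sum w1·Y1 + w2·Y2, fitted to one-hot targets Y by unconstrained least squares (no intercept, no sum-to-one constraint), i.e. w = argmin ||A w − y||², where each column of A is one sub-classifier's flattened outputs. The Elman and RBF networks themselves are not reproduced: their outputs are simulated with noisy copies of the targets, and the names `ols_ensemble_weights` and `ensemble_predict` are hypothetical.

```python
import numpy as np


def ols_ensemble_weights(outputs, targets):
    """Fit one weight per sub-classifier by ordinary least squares.

    outputs: list of arrays of shape (n_samples, n_classes), one per
             sub-classifier (here: stand-ins for sub-classifiers I and II).
    targets: array of shape (n_samples, n_classes), e.g. one-hot labels.
    """
    # Each sub-classifier becomes one regressor column: its outputs
    # flattened over all samples and classes.
    A = np.column_stack([np.asarray(o, dtype=float).ravel() for o in outputs])
    y = np.asarray(targets, dtype=float).ravel()
    # Assumed formulation: unconstrained OLS without intercept,
    # w = argmin ||A w - y||^2.
    w, *_ = np.linalg.lstsq(A, y, rcond=None)
    return w


def ensemble_predict(outputs, weights):
    """Weighted sum of sub-classifier outputs, then arg-max over classes."""
    combined = sum(w * np.asarray(o, dtype=float) for w, o in zip(weights, outputs))
    return combined.argmax(axis=1)


# Usage with simulated outputs (hypothetical data, not the paper's case study).
rng = np.random.default_rng(0)
n_samples, n_classes = 200, 2
labels = rng.integers(0, n_classes, size=n_samples)
targets = np.eye(n_classes)[labels]
out_1 = targets + 0.3 * rng.standard_normal(targets.shape)  # less noisy stand-in
out_2 = targets + 0.8 * rng.standard_normal(targets.shape)  # noisier stand-in

weights = ols_ensemble_weights([out_1, out_2], targets)
pred = ensemble_predict([out_1, out_2], weights)
print("weights:", weights)
print("ensemble accuracy:", (pred == labels).mean())
```

In this simulation the noisier stand-in receives the smaller weight, so the combination leans on the more reliable sub-classifier. Adding a sum-to-one constraint or an intercept term would be equally plausible readings of the "OLS principle" and would change the fitted weights.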

Acknowledgments

This work is supported by the Basic Research Program (Natural Science Foundation) of Jiangsu Province of China (No. BK2009093), the National Natural Science Foundation of China (Nos. 60975039 and 41074003), and the Opening Foundation of the Key Laboratory of Intelligent Information Processing of the Chinese Academy of Sciences (No. IIP2010-1).

Cite this article

Xu, X., Ding, S., Jia, W. et al. Research of assembling optimized classification algorithm by neural network based on Ordinary Least Squares (OLS). Neural Comput & Applic 22, 187–193 (2013). https://doi.org/10.1007/s00521-011-0694-3

DOI: https://doi.org/10.1007/s00521-011-0694-3