Abstract

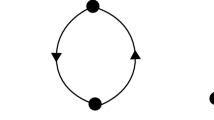

This paper studies the continuous attractors of discrete-time recurrent neural networks. Discrete-time networks translate directly into algorithms that can be implemented efficiently in digital hardware. Continuous attractors of neural networks have been used to model how animals store and manipulate continuous stimuli. A continuous attractor is defined as a connected set of stable equilibrium points; it forms a lower-dimensional manifold in the original state space. Under some conditions, complete analytical expressions are derived for the continuous attractors of discrete-time linear recurrent neural networks and of discrete-time linear-threshold recurrent neural networks. Examples illustrate the theory.
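To make the definition concrete, here is a minimal sketch of a two-neuron linear network. The update rule x(k+1) = W x(k), the weight matrix `W`, and the helper names `step` and `run` are illustrative assumptions, not the model or notation used in the paper. This `W` has eigenvalue 1 along the direction (1, 1) and eigenvalue 0.5 along (1, -1), so every point on the line x1 = x2 is an equilibrium, and the component of the state off that line shrinks by half at each step. Under these assumptions the line is a connected set of stable equilibria, a one-dimensional set inside the two-dimensional state space.

```python
def step(W, x):
    """One update x(k+1) = W x(k) of an assumed linear discrete-time network."""
    return [sum(w_ij * x_j for w_ij, x_j in zip(row, x)) for row in W]


def run(W, x0, steps=60):
    """Iterate the update rule from the initial state x0 for a fixed number of steps."""
    x = list(x0)
    for _ in range(steps):
        x = step(W, x)
    return x


# Hypothetical weights: eigenvalue 1 on (1, 1), eigenvalue 0.5 on (1, -1).
W = [[0.75, 0.25],
     [0.25, 0.75]]

# Different initial states settle at different points of the line x1 = x2.
for x0 in ([2.0, 0.0], [-1.0, 4.0], [0.5, 0.5]):
    print(x0, "->", run(W, x0))
```

Each trajectory ends on the line x1 = x2 at the mean of its initial coordinates, so the final state retains a continuous quantity determined by the input, which is the storage property the abstract refers to.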

Cite this article

Yu, J., Tang, H. & Li, H. Continuous attractors of discrete-time recurrent neural networks. Neural Comput & Applic 23, 89–96 (2013). https://doi.org/10.1007/s00521-012-0975-5

DOI: https://doi.org/10.1007/s00521-012-0975-5