Abstract

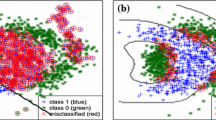

The extreme learning machine (ELM) is a new approach to single-hidden-layer feed-forward networks that relies on a much simpler training procedure. Conventional kernel-based classifiers use a single kernel, but in practice it is often desirable to base classifiers on combinations of multiple kernels. In this paper, we address multiple-kernel learning (MKL) for ELM by formulating it as a semi-infinite linear program. We further extend this idea by integrating it with existing MKL techniques. The kernel function in this ELM formulation no longer needs to be fixed; instead, it can be learned automatically as a combination of multiple kernels. Two formulations of multiple-kernel classifiers are proposed. The first is based on a convex combination of the given base kernels, and the second uses a convex combination of the so-called equivalent kernels. Empirically, the second formulation is particularly competitive. Experiments on a large number of toy and real-world data sets (including a high-magnification sampling rate image data set) show that the resulting classifier is fast and accurate, and that it can be trained easily by simply changing the linear program.
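The first formulation, a classifier built on a convex combination of base kernels, can be illustrated with a small pure-Python sketch. It assumes the standard regularized kernel ELM solution, in which the output weights are α = (I/C + Ω)⁻¹ t with Ω_ij = K(x_i, x_j). It also assumes that the kernel weights μ are fixed by hand. The paper learns these weights by semi-infinite linear programming, which is not reproduced here. The base kernels, the weights (0.7, 0.3), the constant C and the XOR toy data are hypothetical, illustrative choices.

```python
import math

def rbf(gamma):
    """Gaussian (RBF) base kernel exp(-gamma * ||x - z||^2)."""
    def k(x, z):
        return math.exp(-gamma * sum((a - b) ** 2 for a, b in zip(x, z)))
    return k

def linear(x, z):
    """Linear base kernel <x, z>."""
    return sum(a * b for a, b in zip(x, z))

def combined_kernel(kernels, mu):
    """Convex combination K = sum_m mu_m * K_m, with mu_m >= 0 and sum(mu) = 1.
    Here mu is supplied by hand (assumption); an MKL solver would learn it."""
    assert all(m >= 0 for m in mu) and abs(sum(mu) - 1.0) < 1e-9
    def k(x, z):
        return sum(m * km(x, z) for m, km in zip(mu, kernels))
    return k

def solve(A, b):
    """Solve A x = b by Gaussian elimination with partial pivoting."""
    n = len(A)
    M = [row[:] + [b[i]] for i, row in enumerate(A)]  # augmented matrix
    for col in range(n):
        piv = max(range(col, n), key=lambda r: abs(M[r][col]))
        M[col], M[piv] = M[piv], M[col]
        for r in range(col + 1, n):
            f = M[r][col] / M[col][col]
            for c in range(col, n + 1):
                M[r][c] -= f * M[col][c]
    x = [0.0] * n
    for r in range(n - 1, -1, -1):  # back substitution
        x[r] = (M[r][n] - sum(M[r][c] * x[c] for c in range(r + 1, n))) / M[r][r]
    return x

def train_kernel_elm(X, t, kernel, C=10.0):
    """Output weights alpha = (I/C + Omega)^{-1} t, Omega_ij = K(x_i, x_j)."""
    n = len(X)
    A = [[kernel(X[i], X[j]) + (1.0 / C if i == j else 0.0) for j in range(n)]
         for i in range(n)]
    return solve(A, t)

def predict(X, alpha, kernel, x):
    """Decision value f(x) = sum_i alpha_i K(x, x_i); its sign is the label."""
    return sum(a * kernel(x, xi) for a, xi in zip(alpha, X))

if __name__ == "__main__":
    X = [[0.0, 0.0], [0.0, 1.0], [1.0, 0.0], [1.0, 1.0]]
    t = [-1.0, 1.0, 1.0, -1.0]  # XOR labels
    K = combined_kernel([rbf(1.0), linear], [0.7, 0.3])
    alpha = train_kernel_elm(X, t, K, C=100.0)
    print([1 if predict(X, alpha, K, x) > 0 else -1 for x in X])  # [-1, 1, 1, -1]
```

On this XOR example the linear base kernel contributes nothing along the label direction, so the RBF component carries the fit. This is the kind of trade-off that learning μ, rather than fixing it, is meant to resolve automatically.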

Acknowledgments

We warmly thank Prof. Guangbin Huang, Prof. Zhihong Men, and the anonymous reviewers for their helpful comments. This work was supported by the Zhejiang Provincial Natural Science Foundation of China (No. LR12F03002) and the National Natural Science Foundation of China (No. 61074045).

Cite this article

Li, X., Mao, W. & Jiang, W. Multiple-kernel-learning-based extreme learning machine for classification design. Neural Comput & Applic 27, 175–184 (2016). https://doi.org/10.1007/s00521-014-1709-7
