Abstract

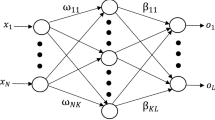

The Elman neural network has good dynamic properties and strong global stability, and it is widely used to process nonlinear, dynamic, and complex data. However, as an optimization of the backpropagation (BP) neural network, the Elman model inevitably inherits some of the BP network's inherent deficiencies, which reduce its recognition precision and operating efficiency. Many improvements have been proposed to resolve these problems, but it has proved difficult to balance the relevant criteria, such as storage space, algorithmic efficiency, and recognition precision, and to address the root causes of these problems rather than only their symptoms. To address this, a genetic algorithm (GA) can be introduced into the Elman algorithm to optimize the connection weights and thresholds, which can prevent the network from becoming trapped in local minima and improve its training speed and success rate. The GA can also optimize the structure of the hidden layer, which addresses the difficult problem of determining the number of hidden neurons. Most previous studies of such evolutionary Elman algorithms optimized either the connection weights or the network structure alone, which is a limitation. We propose herein a novel optimized GA–Elman neural network algorithm in which the connection weights are real-encoded, and the hidden-layer neurons are also real-encoded but with the addition of binary control genes. In this new algorithm, the connection weights and the number of hidden neurons are optimized simultaneously through hybrid encoding and evolution, greatly improving the performance of the resulting GA–Elman algorithm. The results of three experiments show that the new GA–Elman model outperforms the traditional model on all calculated indexes.
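The hybrid encoding is described above only in words. The following is a minimal sketch under stated assumptions, not the authors' implementation: each hidden-neuron slot carries one binary control gene (1 = neuron present) alongside real-coded input weights, context weights, and a threshold, and a single GA evolves the binary and real parts together. The layer sizes (`N_IN`, `H_MAX`, `N_OUT`), the fitness 1/(1 + MSE), size-3 tournament selection, uniform and arithmetic crossover, bit-flip and Gaussian mutation, elitism, the toy delay task, and all function names are hypothetical choices made for illustration.

```python
import math
import random

# Illustrative sizes (assumptions): 2 inputs, up to 6 hidden slots, 1 output.
N_IN, H_MAX, N_OUT = 2, 6, 1

def sigmoid(z):
    z = max(-60.0, min(60.0, z))  # clamp to avoid math.exp overflow
    return 1.0 / (1.0 + math.exp(-z))

def n_real_genes():
    # Per hidden slot: N_IN input weights, H_MAX context weights, 1 threshold;
    # then N_OUT x (H_MAX weights + 1 threshold) for the output layer.
    return H_MAX * (N_IN + H_MAX + 1) + N_OUT * (H_MAX + 1)

def random_individual(rng):
    # Hybrid chromosome: binary control genes plus real-coded weights/thresholds.
    ctrl = [rng.randint(0, 1) for _ in range(H_MAX)]
    if not any(ctrl):
        ctrl[rng.randrange(H_MAX)] = 1  # keep at least one hidden neuron
    real = [rng.uniform(-1.0, 1.0) for _ in range(n_real_genes())]
    return ctrl, real

def elman_outputs(ind, sequence):
    """Decode `ind` into an Elman network and run it over a list of input vectors."""
    ctrl, real = ind
    stride = N_IN + H_MAX + 1
    out_base = H_MAX * stride
    context = [0.0] * H_MAX  # context layer holds the previous hidden activations
    outputs = []
    for x in sequence:
        hidden = [0.0] * H_MAX
        for j in range(H_MAX):
            if not ctrl[j]:
                continue  # control gene 0: neuron j is absent from the network
            g = real[j * stride:(j + 1) * stride]
            s = sum(w * xi for w, xi in zip(g[:N_IN], x))
            s += sum(w * c for w, c in zip(g[N_IN:N_IN + H_MAX], context))
            hidden[j] = sigmoid(s + g[-1])  # threshold applied as an additive bias
        y = []
        for o in range(N_OUT):
            g = real[out_base + o * (H_MAX + 1):out_base + (o + 1) * (H_MAX + 1)]
            y.append(sigmoid(sum(w * h for w, h in zip(g[:H_MAX], hidden)) + g[-1]))
        outputs.append(y)
        context = hidden  # copy the hidden state into the context layer
    return outputs

def fitness(ind, sequence, targets):
    preds = elman_outputs(ind, sequence)
    sq = sum((p - t) ** 2 for py, ty in zip(preds, targets) for p, t in zip(py, ty))
    return 1.0 / (1.0 + sq / (len(targets) * N_OUT))  # 1 / (1 + MSE), higher is better

def crossover(a, b, rng):
    # Uniform crossover on control genes, arithmetic crossover on real genes.
    ctrl = [ca if rng.random() < 0.5 else cb for ca, cb in zip(a[0], b[0])]
    lam = rng.random()
    real = [lam * ra + (1.0 - lam) * rb for ra, rb in zip(a[1], b[1])]
    return ctrl, real

def mutate(ind, rng, p_bit=0.05, p_real=0.05, sigma=0.3):
    # Bit-flip mutation on control genes, Gaussian mutation on real genes.
    ctrl = [1 - c if rng.random() < p_bit else c for c in ind[0]]
    if not any(ctrl):
        ctrl[rng.randrange(H_MAX)] = 1
    real = [r + rng.gauss(0.0, sigma) if rng.random() < p_real else r for r in ind[1]]
    return ctrl, real

def evolve(sequence, targets, pop_size=30, generations=40, seed=0):
    rng = random.Random(seed)
    pop = [random_individual(rng) for _ in range(pop_size)]
    history = []  # best fitness in each generation
    for _ in range(generations):
        scored = sorted(((fitness(i, sequence, targets), i) for i in pop),
                        key=lambda t: t[0], reverse=True)
        history.append(scored[0][0])
        nxt = [ind for _, ind in scored[:2]]  # elitism: keep the two best unchanged
        while len(nxt) < pop_size:
            # Size-3 tournament selection for each parent.
            p1 = max(rng.sample(scored, 3), key=lambda t: t[0])[1]
            p2 = max(rng.sample(scored, 3), key=lambda t: t[0])[1]
            nxt.append(mutate(crossover(p1, p2, rng), rng))
        pop = nxt
    best = max(pop, key=lambda i: fitness(i, sequence, targets))
    return best, history

if __name__ == "__main__":
    # Toy one-step delay task: the target at step t is the first input at step t-1,
    # so fitting it requires the memory provided by the context layer.
    data_rng = random.Random(1)
    seq = [[data_rng.randint(0, 1), 1.0] for _ in range(12)]
    tgt = [[0.0]] + [[x[0]] for x in seq[:-1]]
    best, hist = evolve(seq, tgt)
    print("active hidden neurons:", sum(best[0]))
    print("best fitness, first vs last generation:", hist[0], hist[-1])
```

Because the two best individuals are copied unchanged into each new generation, the best fitness never decreases from one generation to the next. Setting a control gene to 0 removes that neuron's input, context, and output contributions without deleting its real-valued genes, so a later crossover or mutation can switch the neuron back on with its weights intact; this is one way the weights and the hidden-layer size can evolve at the same time.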

Acknowledgements

This work is supported by the National Natural Science Foundation of China (nos. 61572300, 31571571, and 61379101), Natural Science Foundation of Shandong Province in China (no. ZR2014FM001) and Taishan Scholar Program of Shandong Province of China (no. TSHW201502038).

Ethics declarations

Conflict of interest

The authors declare that there are no conflicts of interest regarding publication of this paper.

About this article

Cite this article

Jia, W., Zhao, D., Zheng, Y. et al. A novel optimized GA–Elman neural network algorithm. Neural Comput & Applic 31, 449–459 (2019). https://doi.org/10.1007/s00521-017-3076-7

DOI: https://doi.org/10.1007/s00521-017-3076-7