Abstract

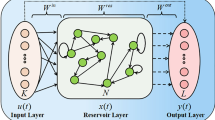

Echo state networks (ESNs) have been widely used for time series prediction. However, it is difficult to automatically determine the structure of an ESN for a given task. To address this problem, the dynamical regularized ESN (DRESN) is proposed. Unlike other growing ESNs, whose existing architectures stay fixed when new reservoir nodes are added, the current component of DRESN may be replaced by a newly generated network with a more compact structure and better prediction performance. Moreover, the output weights of DRESN are updated by an error minimization-based method, and their norms are controlled by regularization to avoid the ill-posed problem. Furthermore, the convergence of DRESN is analyzed theoretically and verified experimentally. Simulation results demonstrate that the proposed approach achieves better prediction accuracy with fewer reservoir nodes than other existing ESN models.

Notes

The mathematical operation fix(A) rounds the value of A to the nearest integer toward zero.
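A minimal sketch of the fix(A) operation described in this note, written in Python. The function name `fix` mirrors the note; implementing it with `math.trunc` is an illustrative choice, not something taken from the article.

```python
import math


def fix(a: float) -> int:
    """Round toward zero by discarding the fractional part of a."""
    return math.trunc(a)


# Positive values round down, negative values round up (both toward zero).
positive_example = fix(2.7)    # 2
negative_example = fix(-2.7)   # -2

# For comparison, floor always rounds down, so it differs for negatives.
floor_example = math.floor(-2.7)  # -3
```

The difference from floor matters only for negative arguments: fix(-2.7) gives -2, whereas floor gives -3.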

Acknowledgements

This work was supported by the National Natural Science Foundation of China under Grants 61603012 and 61533002, the Beijing Municipal Education Commission Foundation under Grant KM201710005025, the Beijing Postdoctoral Research Foundation under Grant 2017ZZ-028, the China Postdoctoral Science Foundation funded project as well as the Beijing Chaoyang District Postdoctoral Research Foundation under Grant 2017ZZ-01-07.

Ethics declarations

Conflict of interest

The authors declare that they have no conflict of interest.

Appendix 1: Parameters setting for OESN, RESN, ESN-LAR, ESN-DE and GESN

For OESN, RESN and ESN-LAR, the reservoir size varies from 100 to 1000 in steps of 50, the sparsity varies from 0.005 to 0.5 in steps of 0.005, and the spectral radius varies from 0.5 to 0.95 in steps of 0.05. In GESN, the size of each added subreservoir is 5, and subreservoirs are added group by group until the predefined number of algorithm iterations is reached. For ESN-DE, the parameters of the DE algorithm are as follows: the population size is 5, the maximum number of generations is 100, and the mutation rate and crossover probability are set to 0.2 and 0.4, respectively.
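The settings above can be written down as a small configuration sketch in Python. The numeric values come from this appendix; all variable names, dictionary keys and the `candidate_settings` helper are hypothetical. Enumerating the full Cartesian product is also an assumption, since the appendix gives only the ranges and does not say how they were searched.

```python
from itertools import product

# Ranges for OESN, RESN and ESN-LAR. Values are built from integer counters
# and rounded so that floating-point drift does not distort the grid.
reservoir_sizes = list(range(100, 1001, 50))                     # 100, 150, ..., 1000
sparsities = [round(0.005 * k, 3) for k in range(1, 101)]        # 0.005, ..., 0.5
spectral_radii = [round(0.50 + 0.05 * k, 2) for k in range(10)]  # 0.5, ..., 0.95

# GESN: each added subreservoir has 5 nodes.
gesn_settings = {"subreservoir_size": 5}

# ESN-DE: parameters of the differential evolution (DE) algorithm.
de_settings = {
    "population_size": 5,
    "max_generations": 100,
    "mutation_rate": 0.2,
    "crossover_probability": 0.4,
}


def candidate_settings():
    """Yield every combination of the three ranges (assumed grid search)."""
    for size, sparsity, radius in product(reservoir_sizes, sparsities, spectral_radii):
        yield {"reservoir_size": size, "sparsity": sparsity, "spectral_radius": radius}


# Size of the full grid under the exhaustive-search assumption.
n_candidates = len(reservoir_sizes) * len(sparsities) * len(spectral_radii)
```

Under that assumption the grid holds 19 reservoir sizes, 100 sparsity values and 10 spectral radii, which is 19,000 combinations per model.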

About this article

Cite this article

Yang, C., Qiao, J., Wang, L. et al. Dynamical regularized echo state network for time series prediction. Neural Comput & Applic 31, 6781–6794 (2019). https://doi.org/10.1007/s00521-018-3488-z

DOI: https://doi.org/10.1007/s00521-018-3488-z