Abstract

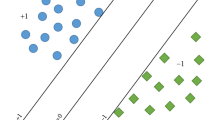

Twin support vector machine (TWSVM) has been shown to outperform the standard support vector machine (SVM) in most cases, because it solves two smaller quadratic programming problems instead of a single large one, which makes it computationally efficient. Like many other machine learning algorithms, however, it was designed for single-task learning. In many practical problems, a learning task is related to other tasks, and training these tasks independently neglects the information they share, which could improve overall performance. Inspired by multi-task learning theory, we propose two novel multi-task \(\nu\)-TWSVMs. Both models inherit the merits of multi-task learning and of \(\nu\)-TWSVM, while overcoming the shortcomings of other multi-task SVMs and multi-task TWSVMs. Experimental results on three benchmark datasets and two popular image datasets demonstrate the effectiveness of our methods.
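For readers unfamiliar with the "two smaller quadratic programming problems" mentioned above, the following sketch recalls the standard linear TWSVM primal problems. The notation is an assumption introduced here for illustration and does not come from this abstract: \(A\) and \(B\) are matrices whose rows are the positive and negative training samples, \(e_1\) and \(e_2\) are vectors of ones of matching length, \(c_1, c_2 > 0\) are penalty parameters, and \(\xi, \eta\) are slack vectors. The multi-task \(\nu\)-TWSVM models proposed in the paper are not reproduced here.

```latex
% Standard linear TWSVM (not the multi-task nu-TWSVM of this paper).
% Plane 1 stays close to class A and at least unit distance from class B.
\begin{aligned}
\min_{w_1,\, b_1,\, \xi} \quad & \tfrac{1}{2}\,\lVert A w_1 + e_1 b_1 \rVert^2 + c_1\, e_2^{\top} \xi \\
\text{s.t.} \quad & -(B w_1 + e_2 b_1) + \xi \ge e_2, \qquad \xi \ge 0
\end{aligned}

% Plane 2 stays close to class B and at least unit distance from class A.
\begin{aligned}
\min_{w_2,\, b_2,\, \eta} \quad & \tfrac{1}{2}\,\lVert B w_2 + e_2 b_2 \rVert^2 + c_2\, e_1^{\top} \eta \\
\text{s.t.} \quad & (A w_2 + e_1 b_2) + \eta \ge e_1, \qquad \eta \ge 0
\end{aligned}
```

Each problem carries constraints from only one class, so with roughly balanced classes each has about half as many constraints as the single SVM problem. This is the usual explanation for the efficiency gain claimed for TWSVM.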

Acknowledgements

The authors gratefully acknowledge the helpful comments and suggestions of the reviewers, which have improved the presentation. This work was supported in part by the National Natural Science Foundation of China (No. 11671010), Beijing Natural Science Foundation (No. 4172035) and Chinese People’s Liberation Army General Hospital (No. 2017MBD-002).

Ethics declarations

Conflict of Interest

The authors declare that they have no conflict of interest.

About this article

Cite this article

Mei, B., Xu, Y. Multi-task \(\nu\)-twin support vector machines. Neural Comput & Applic 32, 11329–11342 (2020). https://doi.org/10.1007/s00521-019-04628-5

DOI: https://doi.org/10.1007/s00521-019-04628-5