Abstract

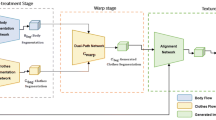

Over the past decades, applying deep learning to the fashion industry has become increasingly mainstream. Because of variations in pose, illumination, and self-occlusion, clothing images captured on models are hard to use directly in real-world applications. To address this problem, we present a novel multi-stage, category-supervised, attention-based conditional generative adversarial network that generates clear and detailed tiled clothing images from given model images. The proposed method consists of two stages. In the first stage, we generate a coarse image that captures the general appearance (such as color and shape) and the category of the garment; a spatial transformation module handles shape changes during image synthesis, and an additional classifier guides coarse image generation in a category-supervised manner. In the second stage, we propose a dual-path attention-based model to generate the refined image, which combines the appearance information of the coarse result with the high-frequency information of the model image. Specifically, we introduce a channel attention mechanism that assigns weights to the information in different channels rather than combining them directly. A self-attention module then models long-range correlations, bringing the generated image closer to the target. In addition to the framework, we also create a person-to-clothing data set covering 10 categories of clothing and containing more than 34 thousand image pairs with category attributes. Extensive simulations are conducted, and the experimental results on this data set demonstrate the feasibility and superiority of the proposed networks.
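To make the two attention steps of the second stage more concrete, the following is a minimal NumPy sketch, not the authors' implementation. It assumes a squeeze-and-excitation style channel attention and a dot-product self-attention over spatial positions; the function names, tensor shapes, random weights, and the `gamma` residual scale are all hypothetical choices made for illustration.

```python
import numpy as np


def channel_attention(features, w1, w2):
    """Reweight the channels of a (C, H, W) feature map (assumed SE-style)."""
    squeezed = features.mean(axis=(1, 2))            # global average pool -> (C,)
    hidden = np.maximum(w1 @ squeezed, 0.0)          # bottleneck with ReLU
    weights = 1.0 / (1.0 + np.exp(-(w2 @ hidden)))   # sigmoid, one weight per channel
    return features * weights[:, None, None], weights


def self_attention(features, wq, wk, wv, gamma=1.0):
    """Dot-product self-attention over the H*W spatial positions."""
    c, h, w = features.shape
    x = features.reshape(c, h * w)                   # (C, N) with N = H*W
    q, k, v = wq @ x, wk @ x, wv @ x                 # (d, N), (d, N), (C, N)
    scores = q.T @ k                                 # (N, N) pairwise similarities
    scores -= scores.max(axis=1, keepdims=True)      # numerical stability
    attn = np.exp(scores)
    attn /= attn.sum(axis=1, keepdims=True)          # each row sums to 1
    out = v @ attn.T                                 # every position mixes all others
    return gamma * out.reshape(c, h, w) + features, attn


# Hypothetical inputs: features from the coarse result and from the model image.
rng = np.random.default_rng(0)
coarse = rng.standard_normal((4, 8, 8))
detail = rng.standard_normal((4, 8, 8))
fused = np.concatenate([coarse, detail], axis=0)     # two paths stacked -> (8, 8, 8)

C = fused.shape[0]
w1 = rng.standard_normal((C // 2, C)) * 0.1
w2 = rng.standard_normal((C, C // 2)) * 0.1
reweighted, weights = channel_attention(fused, w1, w2)

wq = rng.standard_normal((2, C)) * 0.1
wk = rng.standard_normal((2, C)) * 0.1
wv = rng.standard_normal((C, C)) * 0.1
refined, attn = self_attention(reweighted, wq, wk, wv, gamma=0.5)
```

In this sketch the channel weights decide how much each stacked channel from the two paths contributes, and the attention matrix lets every spatial position draw on every other one, which is one common way to model long-range correlation. In a trained network the weight matrices would be learned rather than sampled at random.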

Acknowledgements

This work is partially supported by the National Key Research and Development Program of China (2019YFC1521300), the National Natural Science Foundation of China (61971121, 61672365), the Fundamental Research Funds for the Central Universities of China and the DHU Distinguished Young Professor Program, and the Fundamental Research Funds for the Central Universities of China (JZ2019HGPA0102).

Ethics declarations

Conflict of interest

The authors declare that they have no conflict of interest.

About this article

Cite this article

Zeng, W., Zhao, M., Gao, Y. et al. TileGAN: category-oriented attention-based high-quality tiled clothes generation from dressed person. Neural Comput & Applic 32, 17587–17600 (2020). https://doi.org/10.1007/s00521-020-04928-1

DOI: https://doi.org/10.1007/s00521-020-04928-1