Abstract

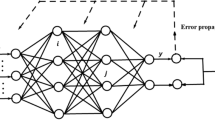

Research on artificial neural networks has focused on network topologies and training algorithms because both can reduce overfitting. In this context, our previous work showed that Newman–Watts small-world feed-forward artificial neural networks achieve better classification and prediction performance than conventional feed-forward artificial neural networks. In this study, we investigate the effect of the resilient back-propagation algorithm on small-world (SW) network topology and propose a resilient Newman–Watts small-world feed-forward artificial neural network model, assuming fixed initial topological conditions. We find that the resilient small-world network further reduces overfitting and further improves network performance compared with conventional feed-forward artificial neural networks. Furthermore, we show that the proposed model does not increase algorithmic complexity compared with other models. These results suggest that the proposed model can help address the overfitting problem encountered in both deep neural networks and conventional artificial neural networks.
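For readers unfamiliar with resilient back-propagation (Rprop), the following is a minimal sketch of its sign-based weight update. It is not the implementation used in this study. It assumes the common variant without weight backtracking, and the function name `rprop_step`, the hyperparameter values (`eta_plus`, `eta_minus`, `step_min`, `step_max`), and the toy quadratic objective are all illustrative choices rather than values taken from the article.

```python
def sign(x):
    """Return -1, 0, or 1 according to the sign of x."""
    return (x > 0) - (x < 0)


def rprop_step(weights, grads, prev_grads, steps,
               eta_plus=1.2, eta_minus=0.5,
               step_min=1e-6, step_max=50.0):
    """One Rprop update over flat lists of weights (illustrative sketch).

    Only the sign of each gradient is used. Each weight keeps its own
    step size, which grows while the gradient sign is stable and
    shrinks when the sign flips.
    """
    new_weights, new_prev, new_steps = [], [], []
    for w, g, pg, s in zip(weights, grads, prev_grads, steps):
        prod = g * pg
        if prod > 0:
            # Same sign as last time: accelerate.
            s = min(s * eta_plus, step_max)
        elif prod < 0:
            # Sign flipped, so a minimum was overshot: shrink the step
            # and skip this weight's update by zeroing the gradient.
            s = max(s * eta_minus, step_min)
            g = 0.0
        new_weights.append(w - sign(g) * s)
        new_prev.append(g)
        new_steps.append(s)
    return new_weights, new_prev, new_steps


# Toy objective (an assumption for illustration):
# f(w) = (w0 - 3)^2 + 10 * (w1 + 1)^2, minimised at (3, -1).
def grad(w):
    return [2.0 * (w[0] - 3.0), 20.0 * (w[1] + 1.0)]


w = [0.0, 0.0]
prev = [0.0, 0.0]
steps = [0.1, 0.1]
for _ in range(100):
    w, prev, steps = rprop_step(w, grad(w), prev, steps)

print(w)  # approaches the minimiser (3, -1)
```

Because the update ignores gradient magnitude, the two coordinates of the toy objective converge at a similar pace even though their curvatures differ by a factor of ten, which is the property that makes Rprop attractive for networks with heterogeneous connections.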

Ethics declarations

Conflict of interest

The author declares that he has no conflict of interest.

Cite this article

Erkaymaz, O. Resilient back-propagation approach in small-world feed-forward neural network topology based on Newman–Watts algorithm. Neural Comput & Applic 32, 16279–16289 (2020). https://doi.org/10.1007/s00521-020-05161-6

DOI: https://doi.org/10.1007/s00521-020-05161-6