Abstract

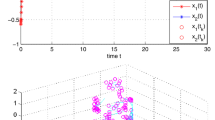

We start by presenting the concept of Stepanov-like weighted pseudo almost automorphic (WPAA) functions on time-space scales. We then introduce a novel model of high-order BAM neural networks with mixed delays. To the best of our knowledge, this is the first study of the convergence analysis for such a system modeling recurrent neural networks. Because the model is a \(\Delta \)-dynamic system on time-space scales, the results obtained in our work are new. The main difficulty in our theoretical work is the construction of a Lyapunov–Krasovskii functional (LKF) and the application of Banach's fixed-point theorem. By constructing an appropriate LKF, we obtain new sufficient conditions, expressed in terms of linear algebraic equations, that guarantee convergence of the solutions of the considered neural networks to the Stepanov-like WPAA solution on time-space scales. The feasibility of these conditions can be checked easily by simple calculation. Furthermore, we compare our results with previous ones in the available literature and show that they are less conservative. Finally, two numerical examples show the feasibility of our theoretical results.

Availability of data and supporting materials

Data sharing is not applicable to this article, as no datasets were generated or analyzed during the current study.

Acknowledgements

We would like to thank Prof. Dr. Haydar Akca, both anonymous reviewers, and the editor for their insightful comments on the paper, which led to an improvement of the work.

Ethics declarations

Conflict of interest

The authors declare that they have no conflict of interest.

Cite this article

Arbi, A., Guo, Y. & Cao, J. Convergence analysis on time scales for HOBAM neural networks in the Stepanov-like weighted pseudo almost automorphic space. Neural Comput & Applic 33, 3567–3581 (2021). https://doi.org/10.1007/s00521-020-05183-0

DOI: https://doi.org/10.1007/s00521-020-05183-0