Abstract

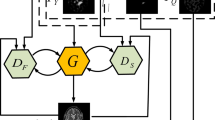

Medical image fusion can improve the accuracy and time efficiency of clinical diagnosis by combining the salient features and detail information of medical images acquired with different modalities. We propose a novel medical image fusion algorithm based on a deep convolutional generative adversarial network and dense blocks, which generates information-rich fused images. Specifically, the network architecture integrates two modules: an image generator built from dense blocks and an encoder–decoder structure, and a discriminator. The encoder network extracts image features, a fusion rule based on the Lmax norm combines these features, and the fused features are fed to the decoder network to reconstruct the final fused image. This design overcomes the weakness of the manually designed activity level measurements used in traditional methods, and the dense blocks retain the information of the intermediate layers, which avoids information loss. In addition, the loss function combines a detail loss and a structural similarity loss to improve the extraction of target information and edge detail information from the source images. Experiments on a public clinical diagnostic medical image dataset show that the proposed algorithm not only preserves details well but also suppresses artifacts, and that it outperforms the comparison methods on different types of evaluation metrics.
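The abstract names a fusion rule based on the Lmax norm without spelling it out. The following is a minimal sketch that assumes the rule follows the L1-norm weighting strategy popularized by DenseFuse, with the L1 norm swapped for the max (L-infinity) norm over channels: compute a per-pixel activity map for each source's encoder features, smooth it with a small box average, and use the normalized activities as weights for a weighted average of the feature maps. The nested-list feature layout, the function names `lmax_activity`, `box_average` and `fuse_features`, and the `radius` parameter are hypothetical illustrations, not the authors' implementation.

```python
from typing import List

# Hypothetical layout: a feature map is channels x rows x cols nested lists.
FeatureMap = List[List[List[float]]]


def lmax_activity(features: FeatureMap) -> List[List[float]]:
    """Per-pixel activity: max absolute value across channels (L-infinity norm)."""
    channels = len(features)
    rows, cols = len(features[0]), len(features[0][0])
    return [
        [max(abs(features[c][y][x]) for c in range(channels)) for x in range(cols)]
        for y in range(rows)
    ]


def box_average(activity: List[List[float]], radius: int) -> List[List[float]]:
    """Mean over a (2r+1)x(2r+1) window, with the window clipped at the borders."""
    rows, cols = len(activity), len(activity[0])
    out = [[0.0] * cols for _ in range(rows)]
    for y in range(rows):
        for x in range(cols):
            vals = [
                activity[j][i]
                for j in range(max(0, y - radius), min(rows, y + radius + 1))
                for i in range(max(0, x - radius), min(cols, x + radius + 1))
            ]
            out[y][x] = sum(vals) / len(vals)
    return out


def fuse_features(
    feature_maps: List[FeatureMap], radius: int = 1, eps: float = 1e-12
) -> FeatureMap:
    """Assumed rule: weighted average, weights proportional to smoothed Lmax activity."""
    activities = [box_average(lmax_activity(f), radius) for f in feature_maps]
    channels = len(feature_maps[0])
    rows, cols = len(feature_maps[0][0]), len(feature_maps[0][0][0])
    fused = [[[0.0] * cols for _ in range(rows)] for _ in range(channels)]
    for y in range(rows):
        for x in range(cols):
            # eps avoids division by zero where every source has zero activity.
            total = sum(a[y][x] for a in activities) + eps
            weights = [a[y][x] / total for a in activities]
            for c in range(channels):
                fused[c][y][x] = sum(
                    w * f[c][y][x] for w, f in zip(weights, feature_maps)
                )
    return fused
```

For example, if the smoothed activity of one source at a pixel is 4 and that of the other is 1, the fused features there use weights 0.8 and 0.2. With `radius=0` the weights are computed pixel by pixel; a larger radius mirrors DenseFuse's block-averaging step and makes the weight maps less sensitive to isolated feature spikes.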

Acknowledgements

This work was supported in part by the National Natural Science Foundation of China (Nos. 61871274, 61801305 and 81571758), the Natural Science Foundation of Guangdong Province (Nos. 2017A030313377 and 2016A030313047), the Shenzhen Peacock Plan (No. KQTD2016053112051497), and the Shenzhen Key Basic Research Project (Nos. JCYJ20170818142347251 and JCYJ20170818094109846).

Ethics declarations

Conflict of interest

The authors declare that they have no conflict of interest related to this work.

About this article

Cite this article

Zhao, C., Wang, T. & Lei, B. Medical image fusion method based on dense block and deep convolutional generative adversarial network. Neural Comput & Applic 33, 6595–6610 (2021). https://doi.org/10.1007/s00521-020-05421-5