Abstract

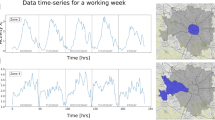

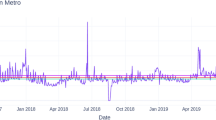

The increase in the amount of data collected in the transport domain can greatly benefit mobility studies and create high value-added mobility information for passengers, data analysts, and transport operators. This work concerns the detection of the impact of disturbances on a transport network. Using smart card data, it aims to finely quantify the impacts of known disturbances on transportation network usage and to reveal unexplained statistical anomalies that may be related to unknown disturbances. The mobility data studied take the form of a multivariate time series evolving in a dynamic environment with additional contextual attributes. The research focuses mainly on contextual anomaly detection using machine learning models. Our main goal is to build a robust anomaly score that highlights statistical anomalies (contextual extrema) while accounting for the variability that the dynamic context induces in the time series. The robust anomaly score is built from forecasting residuals normalized by the estimated contextual variance. This normalization is needed because the ridership time series exhibit complex dynamics in both mean and variance, induced by the flexible transportation schedule, the variability in transport demand, and contextual factors such as station location and calendar information; the anomaly detection approach must account for these dynamics to produce a reliable anomaly score. We investigate several prediction models (including an LSTM encoder–decoder from the recurrent neural network family of deep learning models) and several variance estimators, obtained either through dedicated models or extracted from the prediction models. The proposed approaches are evaluated on synthetic data and on real smart card ridership data from the Montreal metro network, together with a database of events and disturbances that have impacted the network.
The experiments show the relevance of variance normalization of prediction residuals for building a robust anomaly score in a dynamic context.


Availability of data and material

Data provided by the Société de Transport de Montréal (STM) are private, and the authors do not have the right to share them with third parties. However, the synthetic data and their generation process can be shared.

Notes

GitLab repository of the experiments on synthetic data: https://gitlab.com/Haroke/contextual-anomaly-detection.

References

1. Chandola V (2009) Anomaly detection for symbolic sequences and time series data. Ph.D. thesis, University of Minnesota

2. Hayes MA, Capretz MA (2014) Contextual anomaly detection in big sensor data. In: 2014 IEEE International Congress on Big Data, IEEE, pp 64–71

3. Benkabou S-E, Benabdeslem K, Canitia B (2018) Unsupervised outlier detection for time series by entropy and dynamic time warping. Knowl Inf Syst 54:463–486

4. Yeh C-CM, Zhu Y, Ulanova L, Begum N, Ding Y, Dau HA, Silva DF, Mueen A, Keogh E (2016) Matrix profile I: all pairs similarity joins for time series: a unifying view that includes motifs, discords and shapelets. In: 2016 IEEE 16th International Conference on Data Mining (ICDM), IEEE, pp 1317–1322

5. Nakamura T, Imamura M, Mercer R, Keogh E (2020) MERLIN: parameter-free discovery of arbitrary length anomalies in massive time series archives. In: 2020 IEEE 20th International Conference on Data Mining (ICDM), IEEE

6. Ding Z, Fei M (2013) An anomaly detection approach based on isolation forest algorithm for streaming data using sliding window. IFAC Proc Vol 46:12–17

7. Feremans L, Vercruyssen V, Cule B, Meert W, Goethals B (2019) Pattern-based anomaly detection in mixed-type time series. In: Joint European Conference on Machine Learning and Knowledge Discovery in Databases, Springer, pp 240–256

8. Tonnelier E, Baskiotis N, Guigue V, Gallinari P (2018) Anomaly detection in smart card logs and distant evaluation with Twitter: a robust framework. Neurocomputing 298:109–121

9. Malhotra P, Vig L, Shroff G, Agarwal P (2015) Long short term memory networks for anomaly detection in time series. In: Proceedings, vol 89, Presses universitaires de Louvain

10. Guo Y, Liao W, Wang Q, Yu L, Ji T, Li P (2018) Multidimensional time series anomaly detection: a GRU-based Gaussian mixture variational autoencoder approach. In: Asian Conference on Machine Learning, pp 97–112

11. Pasini K, Khouadjia M, Samé A, Ganansia F, Oukhellou L (2019) LSTM encoder-predictor for short-term train load forecasting. In: Joint European Conference on Machine Learning and Knowledge Discovery in Databases, Springer, pp 535–551

12. Zhu L, Laptev N (2017) Deep and confident prediction for time series at Uber. In: 2017 IEEE International Conference on Data Mining Workshops (ICDMW), IEEE, pp 103–110

13. Yu Y, Long J, Cai Z (2017) Network intrusion detection through stacking dilated convolutional autoencoders. Security and Communication Networks 2017

14. Hundman K, Constantinou V, Laporte C, Colwell I, Soderstrom T (2018) Detecting spacecraft anomalies using LSTMs and nonparametric dynamic thresholding. In: Proceedings of the 24th ACM SIGKDD International Conference on Knowledge Discovery and Data Mining, pp 387–395

15. Schlegl T, Seeböck P, Waldstein SM, Schmidt-Erfurth U, Langs G (2017) Unsupervised anomaly detection with generative adversarial networks to guide marker discovery. In: International Conference on Information Processing in Medical Imaging, Springer, pp 146–157

16. Abdallah A, Maarof MA, Zainal A (2016) Fraud detection system: a survey. J Netw Comput Appl 68:90–113

17. Choi E, Bahadori MT, Sun J, Kulas J, Schuetz A, Stewart W (2016) RETAIN: an interpretable predictive model for healthcare using reverse time attention mechanism. In: Advances in Neural Information Processing Systems, pp 3504–3512

18. Cao N, Lin C, Zhu Q, Lin Y-R, Teng X, Wen X (2017) VOILA: visual anomaly detection and monitoring with streaming spatiotemporal data. IEEE Trans Vis Comput Graph 24:23–33

19. Chandola V, Banerjee A, Kumar V (2009) Anomaly detection: a survey. ACM Comput Surv (CSUR) 41:1–58

20. Habeeb RAA, Nasaruddin F, Gani A, Hashem IAT, Ahmed E, Imran M (2019) Real-time big data processing for anomaly detection: a survey. Int J Inf Manag 45:289–307

21. Chalapathy R, Chawla S (2019) Deep learning for anomaly detection: a survey. arXiv preprint arXiv:1901.03407

22. Cheng H, Tan P-N, Potter C, Klooster S (2009) Detection and characterization of anomalies in multivariate time series. In: Proceedings of the 2009 SIAM International Conference on Data Mining, SIAM, pp 413–424

23. Dimopoulos G, Barlet-Ros P, Dovrolis C, Leontiadis I (2017) Detecting network performance anomalies with contextual anomaly detection. In: 2017 IEEE International Workshop on Measurement and Networking (M&N), IEEE, pp 1–6

24. Liu FT, Ting KM, Zhou Z-H (2008) Isolation forest. In: 2008 Eighth IEEE International Conference on Data Mining, IEEE, pp 413–422

25. Liu FT, Ting KM, Zhou Z-H (2012) Isolation-based anomaly detection. ACM Trans Knowl Discov Data (TKDD) 6:1–39

26. Yankov D, Keogh E, Rebbapragada U (2008) Disk aware discord discovery: finding unusual time series in terabyte sized datasets. Knowl Inf Syst 17:241–262

27. Akouemo HN, Povinelli RJ (2014) Time series outlier detection and imputation. In: 2014 IEEE PES General Meeting, IEEE, pp 1–5

28. Li J, Pedrycz W, Jamal I (2017) Multivariate time series anomaly detection: a framework of hidden Markov models. Appl Soft Comput 60:229–240

29. Salem O, Guerassimov A, Mehaoua A, Marcus A, Furht B (2014) Anomaly detection in medical wireless sensor networks using SVM and linear regression models. Int J E-Health Med Commun (IJEHMC) 5:20–45

30. Kromanis R, Kripakaran P (2013) Support vector regression for anomaly detection from measurement histories. Adv Eng Inf 27:486–495

31. Hasan MAM, Nasser M, Pal B, Ahmad S (2014) Support vector machine and random forest modeling for intrusion detection system (IDS). J Intell Learn Syst Appl

32. Kasai H, Kellerer W, Kleinsteuber M (2016) Network volume anomaly detection and identification in large-scale networks based on online time-structured traffic tensor tracking. IEEE Trans Netw Serv Manag 13:636–650

33. Malhotra P, Ramakrishnan A, Anand G, Vig L, Agarwal P, Shroff G (2016) LSTM-based encoder-decoder for multi-sensor anomaly detection. In: Anomaly Detection Workshop of the 33rd International Conference on Machine Learning (ICML 2016)

34. Munir M, Siddiqui SA, Dengel A, Ahmed S (2018) DeepAnT: a deep learning approach for unsupervised anomaly detection in time series. IEEE Access 7:1991–2005

35. Meinshausen N (2006) Quantile regression forests. J Mach Learn Res 7:983–999

36. Carel L (2019) Big data analysis in the field of transportation. Ph.D. thesis, Université Paris-Saclay

37. Kingma DP, Welling M (2014) Auto-encoding variational Bayes. In: 2nd International Conference on Learning Representations (ICLR 2014), Conference Track Proceedings

38. Gal Y, Ghahramani Z (2016) Dropout as a Bayesian approximation: representing model uncertainty in deep learning. In: Proceedings of the 33rd International Conference on Machine Learning (ICML 2016), pp 1050–1059

39. Toqué F, Côme E, Oukhellou L, Trépanier M (2018) Short-term multi-step ahead forecasting of railway passenger flows during special events with machine learning methods

40. Pedregosa F, Varoquaux G, Gramfort A, Michel V, Thirion B, Grisel O, Blondel M, Prettenhofer P, Weiss R, Dubourg V, Vanderplas J, Passos A, Cournapeau D, Brucher M, Perrot M, Duchesnay E (2011) Scikit-learn: machine learning in Python. J Mach Learn Res 12:2825–2830

41. Abadi M et al. (2015) TensorFlow: large-scale machine learning on heterogeneous systems

42. Chollet F et al. (2015) Keras

Acknowledgements

This research is part of the IVA Project, which aims to develop machine learning approaches to enhance traveler information. The project is carried out under the leadership of the Technological Research Institute SystemX, with the partnership and support of the transport organizing authority Île-de-France Mobilités (IDFM), SNCF, Université Gustave Eiffel, and public funds under the scope of the French Program “ANR - Investissements d’Avenir.” The authors also wish to thank the Montreal Transit Corporation (STM) for providing ridership data and the database of events and disturbances.

Funding

This research is part of the IVA Project, which aims to enhance traveler information. The project is carried out under the leadership of the Technological Research Institute SystemX, with the partnership and support of the transport organizing authority Île-de-France Mobilités (IDFM), the French railway operator SNCF, and public funds under the scope of the French Program “Investissements d’Avenir.”

Author information


Contributions

All authors contributed to the study conception and design. Material preparation, data collection, and analysis were performed by Kevin Pasini. The first draft of the manuscript was written by Kevin Pasini, and all authors commented on previous versions of the manuscript. All authors read and approved the final manuscript.


Ethics declarations

Conflicts of interest

There is no conflict of interest.

Code availability

The code related to experiments on real data is the property of the project partners. The part of the code related to experiments on synthetic data can be shared and is available at https://gitlab.com/Haroke/contextual-anomaly-detection.

Additional information

Publisher's Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.


Appendix


1.1 Bias-variance Estimators

First, we propose two ways to learn and estimate the bias and variance from the prediction residuals produced by the forecasting models.

1. EMP: Empirical estimation on a prior sampling.

The estimation model is based on prior knowledge. We segment the contextual attribute space \(\mathbf{c }\) into prior subspaces (subsamplings) defined by a set of constraints (\(V^{inf}, V^{sup}\)) given by expert knowledge. The bias \({\hat{B}}\) and variance \({\hat{\sigma }}\) estimators are summarized in three steps, as follows:

   1. Extract from each prior subsample \(E_k\) the subsample bias and variance.
   2. Associate each time step t with its subsample \(E_k\).
   3. Return the bias \({\hat{B}}_t\) and variance \({\hat{\sigma }}_t\) for each time step t.
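The three EMP steps can be sketched in Python with NumPy. This is a minimal illustration under stated assumptions, not the authors' code: the function name `emp_bias_sigma`, the componentwise interval test of the contextual attributes against \((V^{inf}, V^{sup})\), and the use of the signed mean residual as the bias are all assumptions.

```python
import numpy as np

def emp_bias_sigma(residuals, context, priors):
    """Hypothetical EMP sketch: bias and std of residuals inside expert priors.

    residuals: (T,) forecasting residuals r_t.
    context:   (T, c) contextual attributes.
    priors:    list of (v_inf, v_sup) bound pairs of length c; prior E_k holds
               the time steps with v_inf <= c_t < v_sup componentwise.
    """
    residuals = np.asarray(residuals, dtype=float)
    context = np.asarray(context, dtype=float)
    bias = np.full(len(residuals), np.nan)   # NaN for steps outside every prior
    sigma = np.full(len(residuals), np.nan)
    for v_inf, v_sup in priors:
        # Step 2: associate each time step with its prior subsample E_k
        in_k = np.all((context >= v_inf) & (context < v_sup), axis=1)
        if not in_k.any():
            continue
        r_k = residuals[in_k]
        # Step 1: subsample bias (signed mean, an assumption) and centered std
        b_k = r_k.mean()
        s_k = np.sqrt(np.mean((r_k - b_k) ** 2))
        # Step 3: return both values for every time step of E_k
        bias[in_k], sigma[in_k] = b_k, s_k
    return bias, sigma

# Toy example: one contextual attribute (hour of day) and two priors.
r = np.array([1.0, -1.0, 3.0, 5.0])
hour = np.array([[0.0], [1.0], [10.0], [11.0]])
bias, sigma = emp_bias_sigma(r, hour, [([0.0], [5.0]), ([5.0], [24.0])])
# bias -> [0, 0, 4, 4], sigma -> [1, 1, 1, 1]
z = (r - bias) / sigma   # normalized residuals used as the anomaly score
```

The residual of 3 at hour 10 would look large against a global scale, but once normalized by the bias and spread of its own prior it is unremarkable; this is the effect the contextual normalization aims for.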

2. ML: Machine learning-based estimation.

The estimation model can be learned by a machine learning algorithm. We train two prediction models to learn, from the contextual attributes, the bias and variance of the prediction residuals.

Both models estimate a type of mean over a learned contextual subsample: an absolute mean for the bias and a centered quadratic mean for the variance.
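A minimal sketch of the ML variant, assuming scikit-learn random forests as the two dedicated estimators (the model family is an assumption here). The helper names are hypothetical: the bias model regresses the residuals on the contextual attributes, the variance model regresses the squared centered residuals, and the resulting normalized residuals act as the anomaly score.

```python
import numpy as np
from sklearn.ensemble import RandomForestRegressor

def fit_bias_variance_models(context, residuals, seed=0):
    """Hypothetical ML sketch: one regressor for the bias, one for the variance."""
    context = np.asarray(context, dtype=float)
    residuals = np.asarray(residuals, dtype=float)
    # Bias model: conditional mean of the residuals given the context.
    bias_model = RandomForestRegressor(n_estimators=50, min_samples_leaf=30, random_state=seed)
    bias_model.fit(context, residuals)
    # Variance model: conditional mean of the squared centered residuals.
    centered_sq = (residuals - bias_model.predict(context)) ** 2
    var_model = RandomForestRegressor(n_estimators=50, min_samples_leaf=30, random_state=seed)
    var_model.fit(context, centered_sq)
    return bias_model, var_model

def normalized_residuals(context, residuals, bias_model, var_model, eps=1e-8):
    """Anomaly score: residuals centered by the bias and scaled by the std."""
    sigma = np.sqrt(np.maximum(var_model.predict(context), 0.0))
    return (np.asarray(residuals) - bias_model.predict(context)) / (sigma + eps)

# Toy residuals whose spread grows with the hour of day (one contextual attribute).
rng = np.random.default_rng(0)
hour = rng.uniform(0, 24, size=(2000, 1))
res = rng.normal(0.0, 1.0 + hour[:, 0] / 6)
bias_model, var_model = fit_bias_variance_models(hour, res)
scores = normalized_residuals(hour, res, bias_model, var_model)
sigma_night, sigma_evening = np.sqrt(var_model.predict([[2.0], [22.0]]))
```

Centering the residuals with in-sample bias predictions is a simplification; out-of-fold predictions (for instance with `sklearn.model_selection.cross_val_predict`) would avoid an optimistic variance estimate.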

Second, we propose extracting an estimate of the bias and variance directly from a forecasting model. We explore this extraction for a random forest and for a deep neural network. The bias extracted from the model itself is usually zero, since the model has been optimized to minimize this bias.

RF: Random forest extraction

In [35], the author shows that considering only the mean of the leaf subsamples discards valuable information about the distribution learned by a random forest. Building on this observation, we propose extracting the variance from the subsampling learned by our random forest forecasting model.

Let M be a random forest composed of n binary trees \((T^1,\ldots ,T^n)\). Each tree \(T^k\) is composed of a set of leaves \(L^k\). During the learning phase, each training value \(j_i\) is assigned to a leaf according to its attributes \(X_i\). We define a tree walk operator \(F^k(X_t)\) that takes the attributes \(X_t\) and returns the set of values assigned to the leaf \(L^k_i\) of tree \(T^k\) reached by \(X_t\).

$$\begin{aligned} M(X_t) = \frac{1}{n} \sum _{k=1}^{n} \left( \sum _{j \in F^k(X_t)} \frac{j}{\#F^k(X_t)}\right) = {\hat{y}}_t \end{aligned}$$

The prediction of an element by an RF model is thus a weighted mean over the subsample of training elements that share a leaf with it. The weight of each element depends on the number of trees in which it shares the leaf reached by \(X_t\) and on the sizes of those leaves. Elements sharing a leaf can be considered contextual neighbors on the basis of their attributes, so we can extract the bias (equal to 0) and the variance from this contextual subsample:

$$\begin{aligned} {\hat{B}}(t) = 0\quad {\hat{\sigma }}(t) = \sqrt{ \frac{1}{n} \sum _{k=1}^{n}\left( \sum _{j\in F^k(X_t)} \frac{(j- {\hat{y}}_t)^2}{\#F^k(X_t)}\right) } \end{aligned}$$
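The RF extraction can be reproduced from a fitted scikit-learn forest through `RandomForestRegressor.apply`, which returns the leaf index reached in each tree. This sketch assumes `bootstrap=False`, so that each leaf holds exactly the training targets routed to it and the leaf-subsample mean matches `forest.predict`; with bootstrapping, the in-bag multiplicities would have to be tracked. The helper name and the toy data are hypothetical.

```python
import numpy as np
from sklearn.ensemble import RandomForestRegressor

def rf_leaf_mean_sigma(forest, X_train, y_train, X_query):
    """Return (y_hat, sigma) from the leaf subsamples F^k(X_t) of each tree.

    Assumes `forest` was fit on (X_train, y_train) with bootstrap=False.
    """
    y_train = np.asarray(y_train, dtype=float)
    train_leaves = forest.apply(X_train)   # (n_train, n_trees) leaf indices
    query_leaves = forest.apply(X_query)   # (n_query, n_trees)
    n_trees = train_leaves.shape[1]
    y_hat = np.empty(len(query_leaves))
    sigma = np.empty(len(query_leaves))
    for t, leaves_t in enumerate(query_leaves):
        # F^k(X_t): training targets sharing the leaf reached by X_t in tree k
        subsamples = [y_train[train_leaves[:, k] == leaves_t[k]] for k in range(n_trees)]
        y_hat[t] = np.mean([s.mean() for s in subsamples])
        sigma[t] = np.sqrt(np.mean([np.mean((s - y_hat[t]) ** 2) for s in subsamples]))
    return y_hat, sigma

# Toy data: two contextual attributes, noise growing with the first one.
rng = np.random.default_rng(0)
X = rng.uniform(0, 1, size=(400, 2))
y = 10 * X[:, 0] + rng.normal(0, 0.5 + 2 * X[:, 0])
forest = RandomForestRegressor(n_estimators=30, max_features=1, min_samples_leaf=20,
                               bootstrap=False, random_state=0).fit(X, y)
y_hat, sigma = rf_leaf_mean_sigma(forest, X, y, X[:10])
```

`max_features=1` keeps the trees diverse even without bootstrapping; the check `np.allclose(y_hat, forest.predict(X[:10]))` confirms that the first formula above matches the forest's own prediction.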

DEEP: Neural network extraction

A second form of extraction is based on variational dropout [38], which aims to approximate Bayesian behavior in a deterministic network. A study in [12] applies this technique to an LSTM neural network to estimate the uncertainty of its predictions. Following the same line of research, we use variational dropout to estimate the variance from our LSTM encoder-predictor model.

Let \(M_{\theta }\) be a neural network with parameters \(\theta \) that infers \(y_t\) from \(X_t\), trained as follows:

$$\begin{aligned} {\hat{\theta }} = {{\,\mathrm{argmin}\,}}_{\theta } \sum _{t} |M_{\theta }(X_t)-y_t|^2 \quad M_{{\hat{\theta }}}(X_t) = {\hat{y}}_t \end{aligned}$$

The neural network aims to capture the link between the attributes and the prediction targets by embedding the attribute space into the prediction space through successive nonlinear projections into abstract spaces Z. These abstract spaces give us representations \(z_t\) of our elements that capture the topological structure of the data. We can exploit them to perform contextual subsampling by defining a neighborhood in Z space; the resulting subsample is then based on the contextual information captured by M. The main difficulty lies in defining the neighborhood \({\mathcal {B}}(z_t)\) in Z space.

$$\begin{aligned}&{\mathcal {B}}(z_t) = \{ k : z_k \in [z_t - \varepsilon , z_t + \varepsilon ]\} \quad \text {with } z,\varepsilon \in {\mathbb {R}}^{\#Z} \\&{\hat{B}}(t) = \sum _{k \in {\mathcal {B}}(z_t)}\frac{|{\hat{y}}_k-y_t|}{\# {\mathcal {B}}(z_t)}={\hat{r}}_t\\&{\hat{\sigma }}(t) = \sqrt{\sum _{k \in {\mathcal {B}}(z_t)}\frac{(({\hat{y}}_k-y_t)-{\hat{B}}(t))^2}{\# {\mathcal {B}}(z_t)}} \end{aligned}$$

This difficulty can be avoided with a variational neural network \(M_{\theta }^{var}\) that relies on explicit (variational layer) or implicit (variational dropout) random draws: its repeated stochastic predictions generate a virtual sample that implicitly defines the neighborhood in Z space.

$$\begin{aligned} {\hat{\theta }} = {{\,\mathrm{argmin}\,}}_{\theta } \sum _{t} |M_{\theta }^{var}(X_t)-y_t|^2 \quad {\hat{y}}_t = \frac{1}{m}\sum _{i=1}^{m} M_{{\hat{\theta }}}^{var,(i)}(X_t) \end{aligned}$$

where \(M_{{\hat{\theta }}}^{var,(i)}\) denotes the i-th of m stochastic forward passes. The stochastic projections of \(M_{\theta }^{var}\) turn each latent representation \(z_t\) into a cloud of probabilistic points. By making many predictions for the same element, we obtain a cloud of predictions that acts as a virtual contextual subsample, from which we can estimate the mean and variance:

$$\begin{aligned} {\hat{B}}(t)=0 \quad {\hat{\sigma }}(t) =\sqrt{\frac{1}{m}\sum _{i=1}^{m}\left( M_{{\hat{\theta }}}^{var,(i)}(X_t)-{\hat{y}}_t\right) ^2} \end{aligned}$$
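The Monte Carlo sampling behind the DEEP estimator can be illustrated without a deep learning framework. In this NumPy sketch, a hypothetical one-hidden-layer network with random weights stands in for the trained LSTM encoder-predictor; dropout stays active at prediction time, and the mean and standard deviation over m stochastic passes give \({\hat{y}}_t\) and \({\hat{\sigma }}(t)\) as in the formulas above.

```python
import numpy as np

def mc_dropout_mean_sigma(stochastic_forward, X, m=200):
    """Run m stochastic passes; return per-element mean and std of the draws."""
    draws = np.stack([stochastic_forward(X) for _ in range(m)])   # (m, n)
    y_hat = draws.mean(axis=0)
    sigma = np.sqrt(np.mean((draws - y_hat) ** 2, axis=0))
    return y_hat, sigma

# Hypothetical toy network: random weights stand in for trained ones.
rng = np.random.default_rng(0)
W1, b1 = rng.normal(size=(3, 32)), np.zeros(32)
W2, b2 = rng.normal(size=32) / 32, 0.0
p_drop = 0.2

def forward_with_dropout(X):
    h = np.maximum(X @ W1 + b1, 0.0)          # ReLU hidden layer
    keep = rng.random(h.shape) >= p_drop       # fresh dropout mask at every call
    h = h * keep / (1.0 - p_drop)              # inverted dropout scaling
    return h @ W2 + b2

X = rng.normal(size=(4, 3))
y_hat, sigma = mc_dropout_mean_sigma(forward_with_dropout, X, m=500)
```

With Keras, the same sampling is usually obtained by calling the model with `training=True` (e.g. `model(X, training=True)`), so that its Dropout layers stay active during inference.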

1.2 Forecasting models

1.2.1 Encoding cyclical features

Cyclical encoding represents continuous cyclic attributes while preserving their cyclic structure. Instead of a large one-hot vector per feature, the sine and cosine encodings project each attribute onto a two-dimensional plane. However, some contextual information contains more than one cyclical structure. Using several sine and cosine pairs with different frequencies makes it possible to express such structures compactly and meaningfully. For instance, several pieces of periodic information (weekly, monthly, and seasonal) can be expressed by encoding the position of the day in the year with several sine and cosine pairs of well-chosen periods, e.g., about 1/52 of a year for a weekly structure, 1/12 of a year for a monthly structure, and one full year for a yearly structure.

This technique yields compact and meaningful attributes, in contrast to bulky and sparse one-hot encoding.
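A minimal sketch of multi-frequency cyclical encoding with NumPy, assuming the day position is given in days and using periods of 7 days, an average month (365.25/12 days), and a year (365.25 days); the function name and these periods are illustrative choices.

```python
import numpy as np

def cyclical_encoding(day_of_year, periods=(7.0, 365.25 / 12, 365.25)):
    """Encode a day index with one (sin, cos) pair per period, in days."""
    day = np.asarray(day_of_year, dtype=float)
    columns = []
    for period in periods:
        angle = 2.0 * np.pi * day / period
        columns += [np.sin(angle), np.cos(angle)]
    return np.stack(columns, axis=-1)

features = cyclical_encoding(np.arange(365))   # shape (365, 6)
```

Six dense columns replace, for instance, a 7-column weekday one-hot vector plus a 12-column month one-hot vector, and days 6 and 7 of a week end up close to each other instead of maximally apart.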

1.2.2 Random forest training

The forecasting and bias-variance estimation models are trained with a mean squared error (MSE) loss. We use the standard scikit-learn random forest regressor [40]. Its hyperparameters are tuned through a random search with cross-validation, combined with early stopping, to control the number, size, and depth of the trees and avoid overfitting.
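The tuning procedure can be sketched with scikit-learn's `RandomizedSearchCV`. The search space, the toy data, and the use of `TimeSeriesSplit` (to keep folds in temporal order) are assumptions; scikit-learn's random forest has no built-in early stopping, so that part of the procedure is not reproduced here.

```python
import numpy as np
from sklearn.ensemble import RandomForestRegressor
from sklearn.model_selection import RandomizedSearchCV, TimeSeriesSplit

# Toy contextual attributes and target, standing in for the ridership features.
rng = np.random.default_rng(0)
X = rng.uniform(size=(300, 4))
y = 10 * X[:, 0] + np.sin(6 * X[:, 1]) + rng.normal(0, 0.1, 300)

# Hypothetical search space over the number, size and depth of trees.
param_distributions = {
    "n_estimators": [20, 50, 100],
    "max_depth": [4, 8, 16, None],
    "min_samples_leaf": [1, 5, 20],
}
search = RandomizedSearchCV(
    RandomForestRegressor(random_state=0),
    param_distributions=param_distributions,
    n_iter=5,
    scoring="neg_mean_squared_error",   # MSE criterion, as in the text
    cv=TimeSeriesSplit(n_splits=3),     # folds respect temporal order
    random_state=0,
)
search.fit(X, y)
best_forest = search.best_estimator_
```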

1.2.3 LSTM EP: architecture and training

In our previous research [11], we proposed an LSTM encoder-predictor for ridership forecasting that uses both long-term and short-term attributes.

The model was designed to handle the structural variability in the data induced by the transport plan. Because the data studied here have a regular structure, a simplified version of the model (Fig. 11) is used in the current work.

First, the long-term features are synthesized by a multilayer perceptron. Then, a pair of encoder–predictor LSTM layers attempts to capture the contextual influence and infer the short-term dynamics of the multivariate time series. Finally, another multilayer perceptron interprets the prediction embedding \(Z^p\) to produce a prediction \({\hat{y}}\). The model takes as input the contextual attributes \(X_t\) and the past horizon \(y^p_t=[y_{t-p},\ldots ,y_{t-1}]\), and forecasts the horizon \([y_{t},\ldots ,y_{t+f}]\): it reconstructs time step t and then infers the temporal evolution over the future horizon \([t+1,t+f]\). Dropout layers follow almost every layer to limit overfitting and to enable variational dropout. The layer sizes were chosen manually as a trade-off between model size, performance, and overfitting.
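The input/output layout described above can be made concrete with a small NumPy windowing helper. It is a hypothetical illustration of how samples for an encoder-predictor could be built, not the authors' preprocessing: each sample pairs the past horizon \(y_{t-p},\ldots ,y_{t-1}\) and the contextual attributes \(X_t\) with the targets \(y_t,\ldots ,y_{t+f}\).

```python
import numpy as np

def make_windows(series, context, p, f):
    """Build (past horizon, context, target horizon) samples.

    series:  (T, d) multivariate ridership time series.
    context: (T, c) contextual attributes X_t.
    Targets include y_t (reconstruction) and y_{t+1}, ..., y_{t+f} (forecast).
    """
    series = np.asarray(series, dtype=float)
    context = np.asarray(context, dtype=float)
    past, ctx, target = [], [], []
    for t in range(p, len(series) - f):
        past.append(series[t - p:t])        # y_{t-p}, ..., y_{t-1}
        ctx.append(context[t])              # X_t
        target.append(series[t:t + f + 1])  # y_t, ..., y_{t+f}
    return np.stack(past), np.stack(ctx), np.stack(target)

T, d, c = 20, 2, 3
series = np.arange(T * d, dtype=float).reshape(T, d)
context = np.zeros((T, c))
past, ctx, target = make_windows(series, context, p=4, f=3)
```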

The encoder-predictor model is implemented in TensorFlow [41], with Keras [42] as the high-level neural network API. Training is performed in three successive loops with gradient reduction and early stopping. We use the Adam optimizer and reduce the batch size between loops. The first loop acts as an initialization in which only the reconstruction task is kept in the loss. In the following loops, multistep forecasting is added, with a higher weight on the t+1 prediction loss.
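The three-loop schedule can be summarized as a change of per-horizon loss weights. In this NumPy sketch, the weight 2.0 for t+1, the batch sizes, and the helper names are hypothetical; only the structure (reconstruction only at first, then all horizons with t+1 up-weighted, and a shrinking batch size) follows the text.

```python
import numpy as np

def horizon_weights(f, loop, t1_weight=2.0):
    """Hypothetical loss weights over the outputs [y_t, y_{t+1}, ..., y_{t+f}]."""
    w = np.zeros(f + 1)
    if loop == 0:
        w[0] = 1.0            # first loop: reconstruction task only
    else:
        w[:] = 1.0            # later loops: all horizons
        w[1] = t1_weight      # t+1 prediction weighted more heavily
    return w / w.sum()

def weighted_mse(y_true, y_pred, weights):
    """y_true, y_pred: (n_samples, f + 1, d); MSE per horizon step, then weighted."""
    per_step = np.mean((np.asarray(y_true) - np.asarray(y_pred)) ** 2, axis=(0, 2))
    return float(np.dot(weights, per_step))

# Hypothetical schedule: three loops, batch size reduced between loops.
for loop, batch_size in enumerate([256, 128, 64]):
    print(loop, batch_size, np.round(horizon_weights(f=3, loop=loop), 2))
```

In Keras, if each horizon were a separate model output, comparable weights could be passed through the `loss_weights` argument of `Model.compile`.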


About this article

Cite this article

Pasini, K., Khouadjia, M., Samé, A. et al. Contextual anomaly detection on time series: a case study of metro ridership analysis. Neural Comput & Applic 34, 1483–1507 (2022). https://doi.org/10.1007/s00521-021-06455-z


DOI: https://doi.org/10.1007/s00521-021-06455-z