Abstract

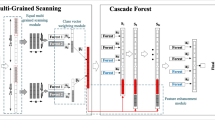

The deep forest (DF) model is built as a multilayer ensemble of forest units, each of which aggregates decision trees. DF has an easy-to-understand structure, is suitable for small-sample data, and has become an important research direction in the field of deep learning. These attributes make it particularly suitable for modeling difficult-to-measure parameters in actual industrial processes. However, existing methods have mainly focused on DF classification (DFC) and cannot be directly applied to regression modeling. To address this issue, a survey of the DFC algorithm is presented with a view to constructing a small-sample-data-oriented DF regression (DFR) model for industrial processes. First, the principle and properties of DFC are introduced in detail to demonstrate this non-neural-network deep learning model. Second, DFC methods are discussed in terms of feature engineering, representation learning, learner selection, weighting strategy, and hierarchical structure. Furthermore, related studies on decision tree algorithms are reviewed, and future investigations on DFR and its relationship with deep learning are discussed and analyzed in detail. Finally, conclusions and future directions of this study for industrial process modeling are presented. Developing a DFR algorithm that is dynamically adaptive, interpretable, and lightweight, on the basis of actual industrial domain knowledge, will be the focus of our follow-up investigation. Moreover, existing research results on DFC and deep learning can provide guidance for future investigations on the DFR model.
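To make the layered structure described above concrete, the following is a minimal sketch of a cascade of forest units adapted to regression. It is an illustration under simplifying assumptions and not the method of any surveyed paper: each tree is a depth-1 regression stump, each forest is a bagged set of such stumps on randomly chosen features, and each layer appends its forests' outputs to the raw features as input to the next layer. The names `fit_stump`, `fit_forest`, `fit_cascade`, and `predict_cascade` are hypothetical, and the cross-validated generation of layer outputs used in practice to limit overfitting is omitted.

```python
import random
from statistics import mean


def fit_stump(X, y, feat):
    """Fit a depth-1 regression tree on one feature by minimising squared error."""
    best = None
    values = sorted(set(row[feat] for row in X))
    # Each candidate threshold is an observed value; both sides stay non-empty.
    for thr in values[:-1]:
        left = [t for row, t in zip(X, y) if row[feat] <= thr]
        right = [t for row, t in zip(X, y) if row[feat] > thr]
        lm, rm = mean(left), mean(right)
        sse = sum((t - lm) ** 2 for t in left) + sum((t - rm) ** 2 for t in right)
        if best is None or sse < best[0]:
            best = (sse, thr, lm, rm)
    if best is None:  # the feature is constant in this sample
        m = mean(y)
        return (feat, 0.0, m, m)
    return (feat, best[1], best[2], best[3])


def predict_stump(stump, row):
    feat, thr, lm, rm = stump
    return lm if row[feat] <= thr else rm


def fit_forest(X, y, n_trees, rng):
    """Bagging: each stump sees a bootstrap sample and one random feature."""
    n, d = len(X), len(X[0])
    forest = []
    for _ in range(n_trees):
        idx = [rng.randrange(n) for _ in range(n)]
        Xb = [X[i] for i in idx]
        yb = [y[i] for i in idx]
        forest.append(fit_stump(Xb, yb, rng.randrange(d)))
    return forest


def predict_forest(forest, row):
    return mean(predict_stump(s, row) for s in forest)


def fit_cascade(X, y, n_layers=2, n_forests=2, n_trees=25, seed=0):
    """Stack layers; each layer's forest outputs augment the raw features."""
    rng = random.Random(seed)
    layers = []
    Xa = [list(r) for r in X]
    for _ in range(n_layers):
        forests = [fit_forest(Xa, y, n_trees, rng) for _ in range(n_forests)]
        layers.append(forests)
        Xa = [list(r) + [predict_forest(f, a) for f in forests]
              for r, a in zip(X, Xa)]
    return layers


def predict_cascade(layers, row):
    """Pass one sample through all layers and average the last layer's forests."""
    a = list(row)
    outs = []
    for forests in layers:
        outs = [predict_forest(f, a) for f in forests]
        a = list(row) + outs
    return mean(outs)
```

For example, `fit_cascade(X, y)` on a small list of feature rows `X` and targets `y` returns the trained layers, and `predict_cascade(layers, row)` returns a scalar estimate. Replacing the class-probability vectors of DFC with scalar forest outputs is one simple way to carry the cascade idea over to regression; other designs are possible.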

Acknowledgements

This work was financially supported by the National Natural Science Foundation of China (62073006 and 62021003), Beijing Natural Science Foundation (4212032), and Beijing Key Laboratory of Process Automation in Mining & Metallurgy (BGRIMM-KZSKL-2020-02).

Ethics declarations

Conflict of interest

The authors declare no conflict of interest. This article is considered for publication on the understanding that the article has neither been published nor will it be published elsewhere before being published in Neural Computing and Applications.

Additional information

Publisher's Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

About this article

Cite this article

Xia, H., Tang, J., Qiao, J. et al. DF classification algorithm for constructing a small sample size of data-oriented DF regression model. Neural Comput & Applic 34, 2785–2810 (2022). https://doi.org/10.1007/s00521-021-06809-7

DOI: https://doi.org/10.1007/s00521-021-06809-7