Abstract

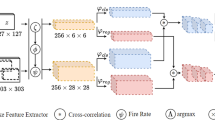

Recently, spiking neural networks (SNNs), the third generation of neural networks, have shown remarkable capabilities for energy-efficient computing, making them a promising alternative to energy-hungry artificial neural networks (ANNs). SNNs have achieved results competitive with ANNs on relatively simple tasks and small datasets, such as image classification on MNIST and CIFAR, but few studies have addressed more challenging vision tasks on complex datasets. In this paper, we focus on extending deep SNNs to object tracking, a more advanced vision task with embedded applications and energy-saving requirements. We present a spike-based Siamese network called SiamSNN, which is converted from fully convolutional Siamese networks. Specifically, we propose a spiking correlation layer to evaluate the similarity between two spiking feature maps, and introduce a novel two-status coding scheme that optimizes the temporal distribution of output spike trains for further improvements. SiamSNN is the first deep SNN tracker that achieves short latency and low precision degradation on the visual object tracking benchmarks OTB-2013, OTB-2015, VOT-2016, VOT-2018, and GOT-10k. Moreover, SiamSNN achieves notably low energy consumption and real-time performance on the neuromorphic chip TrueNorth.
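The abstract names two mechanisms without detailing them: converting an ANN into an SNN, and scoring the similarity between two spiking feature maps. The sketch below illustrates only the general idea, under stated assumptions: integrate-and-fire (IF) neurons with reset by subtraction and a threshold of 1, activations already normalized to [0, 1], and a SiamFC-style sliding cross-correlation in which the exemplar is represented by its firing-rate map. All helper names (`if_spike_train`, `encode`, `firing_rate`, `cross_correlate`, `spiking_response`) are hypothetical, and this is not the paper's spiking correlation layer or its two-status coding scheme, neither of which the abstract specifies.

```python
from typing import List

Grid = List[List[float]]


def if_spike_train(current: float, steps: int, threshold: float = 1.0) -> List[int]:
    """Integrate-and-fire neuron driven by a constant input current.

    The membrane potential accumulates the input every time step; when it
    reaches the threshold the neuron emits a spike (1) and the threshold is
    subtracted (reset by subtraction), so residual charge is kept.
    """
    v = 0.0
    train = []
    for _ in range(steps):
        v += current
        if v >= threshold:
            train.append(1)
            v -= threshold
        else:
            train.append(0)
    return train


def encode(activations: Grid, steps: int) -> List[Grid]:
    """Turn an activation map (assumed normalized to [0, 1]) into `steps`
    binary spike maps, one map per time step."""
    trains = [[if_spike_train(a, steps) for a in row] for row in activations]
    return [[[train[t] for train in row] for row in trains] for t in range(steps)]


def firing_rate(spike_maps: List[Grid]) -> Grid:
    """Average binary spike maps over time (spike count / number of steps)."""
    steps = len(spike_maps)
    rows, cols = len(spike_maps[0]), len(spike_maps[0][0])
    return [[sum(m[i][j] for m in spike_maps) / steps for j in range(cols)]
            for i in range(rows)]


def cross_correlate(exemplar: Grid, search: Grid) -> Grid:
    """SiamFC-style 'valid' cross-correlation: slide the exemplar over the
    search map and take the inner product at every offset."""
    eh, ew = len(exemplar), len(exemplar[0])
    sh, sw = len(search), len(search[0])
    return [[sum(exemplar[a][b] * search[i + a][j + b]
                 for a in range(eh) for b in range(ew))
             for j in range(sw - ew + 1)]
            for i in range(sh - eh + 1)]


def spiking_response(exemplar: Grid, search: Grid, steps: int) -> Grid:
    """Hypothetical spiking correlation (an assumption, not the paper's layer):
    correlate the exemplar's firing-rate map with the search spikes of each
    time step, then average the per-step response maps over time."""
    z_rate = firing_rate(encode(exemplar, steps))
    per_step = [cross_correlate(z_rate, s) for s in encode(search, steps)]
    rows, cols = len(per_step[0]), len(per_step[0][0])
    return [[sum(r[i][j] for r in per_step) / steps for j in range(cols)]
            for i in range(rows)]


z = [[0.5, 0.25], [0.75, 1.0]]                       # toy exemplar features
x = [[0.0, 0.25, 0.5], [0.75, 1.0, 0.25], [0.5, 0.0, 0.75]]  # toy search features
print(cross_correlate(z, x))      # ANN response map: [[1.625, 1.25], [1.0, 1.3125]]
print(spiking_response(z, x, 8))  # SNN after 8 steps: [[1.625, 1.25], [1.0, 1.3125]]
```

In exact arithmetic, an IF neuron with reset by subtraction fires floor(xT) times for a constant input x in [0, 1] over T steps, so its firing rate deviates from the activation by less than 1/T. The toy activations are multiples of 1/4, which is why eight time steps reproduce the ANN response map exactly; arbitrary activations only approach it as T grows, which is the latency versus precision trade-off the abstract refers to.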

Acknowledgements

This work was supported in part by the National Natural Science Foundation of China under Grant 61572214, and the Seed Foundation of Huazhong University of Science and Technology (2020kfyXGYJ114).

Ethics declarations

Conflict of interest

All the authors have participated sufficiently in the work to take public responsibility for the content, including participation in the concept, design, analysis, writing, or revision of the manuscript. The authors declare that they have no conflict of interest. Each author also certifies that this manuscript has not been, and will not be, submitted to or published in any other publication.

About this article

Cite this article

Luo, Y., Shen, H., Cao, X. et al. Conversion of Siamese networks to spiking neural networks for energy-efficient object tracking. Neural Comput & Applic 34, 9967–9982 (2022). https://doi.org/10.1007/s00521-022-06984-1

DOI: https://doi.org/10.1007/s00521-022-06984-1