Abstract

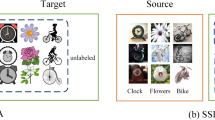

Unsupervised domain adaptation (UDA), which transfers knowledge from a labeled source domain to an unlabeled target domain, requires access to a large amount of labeled source data during adaptation. However, the data of the two domains may not be accessible at the same time because of data privacy protection. To solve this problem, source-data-free domain adaptation (SFDA) has begun to receive attention, but having too little source information leads to a performance gap. To balance the trade-off between UDA and SFDA, we propose a new setting called prototype-based domain adaptation (Prototype-DA), which improves the practicality of UDA by using source category prototypes instead of source data while, like SFDA, preserving the privacy of the source data. Specifically, our training process is divided into two steps. First, the source data is used to pre-train a source model, and the source category prototypes are obtained once this training is complete. Then, to generalize the source model to the target domain, we define a category maximum mean discrepancy (Category-MMD) so that the target data can be aligned with the source category prototypes. In this way, the source category prototypes transfer knowledge to the target domain together with the source model. Through source category prototypes, Prototype-DA can not only achieve results comparable to those of methods that use source data, but also protect the privacy of the source data to some extent. Furthermore, we construct target category prototypes and require the labels of these prototypes to be consistent with their classification results. This prototype-label consistency regularization, which we propose for the first time, helps to extract discriminative features in the target domain.
Extensive experiments on multiple public domain adaptation datasets show that Prototype-DA achieves state-of-the-art results compared with previous UDA and SFDA methods. We also extend traditional UDA theory to our setting and provide a theoretical analysis to support the effectiveness of our method.
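The pipeline described in the abstract can be illustrated with a minimal NumPy sketch. It is not the authors' implementation: the abstract does not give the exact form of Category-MMD or of the consistency regularizer, so this sketch assumes a linear-kernel MMD per class (the squared distance between a source prototype and the mean of the target features pseudo-labeled with that class) and a cross-entropy consistency term under a hypothetical linear classifier. All function names and the `classifier_weights` parameter are illustrative assumptions.

```python
import numpy as np


def class_prototypes(features, labels, num_classes):
    """Mean feature vector per class; rows of absent classes are NaN."""
    features = np.asarray(features, dtype=float)
    labels = np.asarray(labels)
    protos = np.full((num_classes, features.shape[1]), np.nan)
    for c in range(num_classes):
        mask = labels == c
        if mask.any():
            protos[c] = features[mask].mean(axis=0)
    return protos


def category_alignment_loss(source_protos, target_features, target_pseudo_labels):
    """Assumed stand-in for Category-MMD: per-class linear-kernel MMD^2,
    i.e. squared distance between the source prototype and the target
    class mean, averaged over classes present in both domains."""
    num_classes = source_protos.shape[0]
    target_protos = class_prototypes(target_features, target_pseudo_labels, num_classes)
    valid = ~np.isnan(source_protos).any(axis=1) & ~np.isnan(target_protos).any(axis=1)
    if not valid.any():
        return 0.0
    diffs = source_protos[valid] - target_protos[valid]
    return float((diffs ** 2).sum(axis=1).mean())


def prototype_label_consistency(prototypes, classifier_weights):
    """Assumed consistency term: cross-entropy between the prediction of a
    (hypothetical) linear classifier on prototype c and the label c.
    Expects one NaN-free prototype per class."""
    logits = prototypes @ classifier_weights           # shape (C, C)
    logits = logits - logits.max(axis=1, keepdims=True)  # numerical stability
    log_probs = logits - np.log(np.exp(logits).sum(axis=1, keepdims=True))
    return float(-np.mean(np.diag(log_probs)))


# Step 1 (source side): only the prototypes leave the source domain.
source_features = np.array([[0.0, 0.0], [2.0, 0.0], [0.0, 4.0], [0.0, 6.0]])
source_labels = np.array([0, 0, 1, 1])
source_protos = class_prototypes(source_features, source_labels, num_classes=2)

# Step 2 (target side): align pseudo-labeled target features with the prototypes.
target_features = np.array([[1.0, 1.0], [0.0, 5.0]])
target_pseudo_labels = np.array([0, 1])
align = category_alignment_loss(source_protos, target_features, target_pseudo_labels)
```

In this sketch only `source_protos` (one vector per class) is shared with the target side, which mirrors the privacy motivation stated above; in practice the features would come from the pre-trained source model rather than from hand-written arrays.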

Acknowledgements

This work was supported in part by the National Key R&D Program of China (2018YFE0203900), Important Science and Technology Innovation Projects in Chengdu (2018-YF08-00039-GX), and Key R&D Programs of Sichuan Science and Technology Department (2020YFG0476).

Ethics declarations

Conflict of interest

We declare that we have no financial or personal relationships with other people or organizations that could inappropriately influence our work, and that there is no professional or other personal interest of any nature or kind in any product, service, and/or company that could be construed as influencing the position presented in, or the review of, the manuscript entitled "generative adversarial networks with adaptive learning strategy for noise-to-image synthesis".

About this article

Cite this article

Zhou, L., Ye, M. & Xiao, S. Domain adaptation based on source category prototypes. Neural Comput & Applic 34, 21191–21203 (2022). https://doi.org/10.1007/s00521-022-07601-x

DOI: https://doi.org/10.1007/s00521-022-07601-x