Abstract

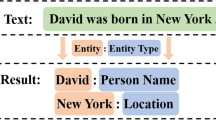

Recent studies have revealed that even the most widely used benchmark dataset for Named Entity Recognition (NER) still contains more than 5% sample-level annotation noise. We therefore investigate annotation noise from two angles: noise detection and noise-robust learning. First, observing that noisy labels usually occur when few or vague annotation cues appear in the annotated texts and their contexts, we construct an annotation noise detection model based on a self-context contrastive loss. Second, we present an improved Bayesian neural network (BNN) that adds a learnable systematic deviation term to the label generation process of the classical BNN. In addition, we propose two strategies for learning the systematic deviation term, both based on the output of the noise detection model. In experiments, our noise detection model improves on the existing method by up to 7.44% F1 on CoNLL03. Extensive experiments on two widely used but noisy NER benchmarks, CoNLL03 and WNUT17, demonstrate that the proposed systematic deviation BNN has the potential to capture systematic annotation mistakes and that it can be extended to other areas with annotation noise.

Notes

In our experiments, BiLSTM-CRF is not inferior to BiLSTM-BNN. We therefore conjecture that the CRF can partially alleviate the negative effects of the random noise modeled by the existing BNN.

Our code is available at https://github.com/ruby-yu-zhu/Annotation_Noise_NER.

The SeqEval package was used to calculate the F1 metric.

Funding

Funding was provided by the National Natural Science Foundation of China (62076178), the Natural Science Foundation of Tianjin City (19ZXAZNGX00050), and the Zhijiang Fund (2019KB0AB03).

Ethics declarations

Conflict of interest

The authors declare that they have no conflict of interest.

About this article

Cite this article

Zhu, Y., Ye, Y., Li, M. et al. Investigating annotation noise for named entity recognition. Neural Comput & Applic 35, 993–1007 (2023). https://doi.org/10.1007/s00521-022-07733-0

DOI: https://doi.org/10.1007/s00521-022-07733-0