Abstract

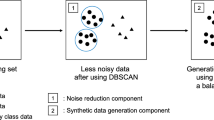

Class imbalance learning is an important topic that has attracted considerable attention in machine learning and data mining. The most common method of addressing imbalanced datasets is the synthetic minority oversampling technique (SMOTE). However, SMOTE and its variants suffer from noise introduced by the interpolation of synthetic examples. In this paper, an overproduce-and-choose strategy, divided into an overproduction phase and a selection phase, is proposed to generate an appropriate set of synthetic examples for imbalanced learning problems. In the overproduction phase, a new interpolation mechanism produces a large number of synthetic examples. In the selection phase, the synthetic examples that benefit the classification task are chosen by instance selection based on evolutionary computation. Experiments are conducted on a large number of datasets drawn from real-world applications. The experimental results demonstrate that the proposed method is significantly better than SMOTE and its well-known variants in terms of several metrics, including G-mean (GM) and area under the curve (AUC).
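The two phases described above can be illustrated with a minimal sketch. It is not the paper's implementation: the abstract does not specify the new interpolation mechanism or the evolutionary algorithm, so plain SMOTE-style interpolation and a simple elitist genetic algorithm are used here as stand-ins. All function names (`overproduce`, `select_synthetic`, `g_mean`, `predict_1nn`), the 1-NN base classifier, and the parameter defaults are hypothetical choices made for illustration. The minority class is assumed to be labelled 1 and the majority class 0.

```python
import numpy as np

def overproduce(X_min, n_new, k=3, rng=None):
    """Overproduction stand-in: SMOTE-style interpolation between a minority
    example and one of its k nearest minority neighbours."""
    rng = np.random.default_rng(rng)
    d = np.linalg.norm(X_min[:, None, :] - X_min[None, :, :], axis=2)
    np.fill_diagonal(d, np.inf)                  # a point is not its own neighbour
    k = min(k, len(X_min) - 1)
    nn = np.argsort(d, axis=1)[:, :k]            # k nearest neighbours per example
    base = rng.integers(0, len(X_min), size=n_new)
    neigh = nn[base, rng.integers(0, k, size=n_new)]
    gap = rng.random((n_new, 1))                 # position along the segment
    return X_min[base] + gap * (X_min[neigh] - X_min[base])

def g_mean(y_true, y_pred):
    """Geometric mean of the true positive rate and the true negative rate."""
    tpr = np.mean(y_pred[y_true == 1] == 1)
    tnr = np.mean(y_pred[y_true == 0] == 0)
    return float(np.sqrt(tpr * tnr))

def predict_1nn(X_train, y_train, X_test):
    """1-nearest-neighbour prediction (assumed base classifier)."""
    d = np.linalg.norm(X_test[:, None, :] - X_train[None, :, :], axis=2)
    return y_train[np.argmin(d, axis=1)]

def fitness(mask, X, y, X_syn, X_val, y_val):
    """G-mean on validation data after adding the selected synthetic examples."""
    X_aug = np.vstack([X, X_syn[mask]])
    y_aug = np.concatenate([y, np.ones(int(mask.sum()), dtype=y.dtype)])
    return g_mean(y_val, predict_1nn(X_aug, y_aug, X_val))

def select_synthetic(X, y, X_syn, X_val, y_val,
                     pop_size=20, generations=30, p_mut=0.05, rng=None):
    """Selection stand-in: elitist genetic algorithm over binary masks that
    mark which synthetic examples are kept."""
    rng = np.random.default_rng(rng)
    pop = rng.random((pop_size, len(X_syn))) < 0.5

    def score_all(p):
        return np.array([fitness(m, X, y, X_syn, X_val, y_val) for m in p])

    for _ in range(generations):
        order = np.argsort(-score_all(pop))
        elite = pop[order[: pop_size // 2]]      # keep the better half unchanged
        n_child = pop_size - len(elite)
        pa = elite[rng.integers(0, len(elite), size=n_child)]
        pb = elite[rng.integers(0, len(elite), size=n_child)]
        children = np.where(rng.random(pa.shape) < 0.5, pa, pb)  # uniform crossover
        children ^= rng.random(children.shape) < p_mut           # bit-flip mutation
        pop = np.vstack([elite, children])

    scores = score_all(pop)
    best = int(np.argmax(scores))
    return pop[best], float(scores[best])
```

A typical call would first run `overproduce` on the minority examples to create more candidates than are needed, then pass them to `select_synthetic` together with a held-out validation split. The returned boolean mask indicates which synthetic examples to keep.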

Data availability

All research data presented in this work are available from the author by email on request.

Acknowledgements

The authors would like to thank the anonymous reviewers for their constructive comments. This research was supported by the National Science Foundation of China under Grant Nos. 71801065, 72171065, 71831006 and 71801064, the Zhejiang Provincial Natural Science Foundation of China under Grant Nos. LZ20G010001 and LY22G010009, and the Fundamental Research Funds for the Provincial Universities of Zhejiang under Grant No. GK209907299001-202.

Ethics declarations

Conflict of interest

The authors declare that they have no conflict of interest.

Appendix A Detailed tables of experimental results

This appendix presents the detailed experimental results for all base classifiers and datasets; see Tables 10, 11, 12, 13, 14, 15, 16, 17, 18, and 19.

About this article

Cite this article

Zhang, ZL., Peng, RR., Ruan, YP. et al. ESMOTE: an overproduce-and-choose synthetic examples generation strategy based on evolutionary computation. Neural Comput & Applic 35, 6891–6977 (2023). https://doi.org/10.1007/s00521-022-08004-8

DOI: https://doi.org/10.1007/s00521-022-08004-8