Abstract

Keyframe extraction plays a significant role in a wide variety of real-time video processing applications, such as video summarization, video management, and video retrieval. A keyframe captures the whole content of its shot and does not contain any redundant information. Keyframe extraction algorithms face challenges because videos of different categories have different visual characteristics. A single feature is therefore not enough to capture the visual characteristics of a variety of videos. To tackle this problem, we propose a keyframe extraction approach that uses a hybrid of features. In the present article, we propose a novel shot detection-based keyframe extraction algorithm that combines two features: the Pearson correlation coefficient (PCC) and color moments (CM). Because PCC is invariant to linear transformations of intensity, the proposed algorithm works well under varying lighting conditions. The scale and rotation invariance of color moments, on the other hand, is beneficial for representing complex objects that may appear in different poses and orientations. These properties justify combining the two features, which brings significant benefits for keyframe extraction in the proposed method. The proposed method detects shot boundaries using the combined feature set (PCC and CM). From each shot, the frame with the highest mean and standard deviation is selected as the keyframe. Another important contribution is a new dataset, which we built by collecting videos of different categories, such as movies, news, serials, animations, and personal interviews, and which we have made available online. The proposed method is evaluated on three datasets: two publicly available datasets and the dataset developed by us.
The performance of the proposed method on these datasets has been evaluated using four evaluation parameters: figure of merit, detection percentage, accuracy, and missing factor. The principal advantage of the proposed work is that it can detect both abrupt and gradual shot transitions. In real-time videos, both abrupt and small transitions are common. The experimental results show that the proposed method outperforms other state-of-the-art methods.
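The pipeline outlined in the abstract (compare consecutive frames with PCC and color moments, cut the video into shots, then keep one frame per shot) can be sketched in Python as follows. This is a minimal illustration, not the authors' implementation. The following are all assumptions made for the example: the gray-level conversion (plain mean of R, G, B), the hypothetical thresholds `pcc_threshold` and `cm_threshold`, the rule that both cues must fire before a boundary is declared, and the reading of "highest mean and standard deviation" as the largest sum of intensity mean and standard deviation. Frames are flat lists of (R, G, B) tuples so the sketch needs only the standard library.

```python
import math
from typing import List, Sequence, Tuple

Pixel = Tuple[float, float, float]  # (R, G, B) values of one pixel
Frame = Sequence[Pixel]             # one frame, flattened to a list of pixels


def intensity(frame: Frame) -> List[float]:
    """Gray level per pixel, taken here (assumption) as the mean of R, G and B."""
    return [(r + g + b) / 3.0 for r, g, b in frame]


def pearson(x: Sequence[float], y: Sequence[float]) -> float:
    """Pearson correlation coefficient (PCC) of two equal-length sequences."""
    n = len(x)
    mx, my = sum(x) / n, sum(y) / n
    cov = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sx = math.sqrt(sum((a - mx) ** 2 for a in x))
    sy = math.sqrt(sum((b - my) ** 2 for b in y))
    if sx == 0 or sy == 0:
        # PCC is undefined for a constant frame; treat two constant frames as
        # perfectly correlated and a constant/non-constant pair as unrelated.
        return 1.0 if sx == sy else 0.0
    return cov / (sx * sy)


def color_moments(frame: Frame) -> List[float]:
    """Mean, standard deviation and skewness of each color channel (9 values)."""
    n = len(frame)
    moments: List[float] = []
    for c in range(3):
        vals = [p[c] for p in frame]
        mean = sum(vals) / n
        std = math.sqrt(sum((v - mean) ** 2 for v in vals) / n)
        third = sum((v - mean) ** 3 for v in vals) / n
        skew = math.copysign(abs(third) ** (1.0 / 3.0), third)  # signed cube root
        moments += [mean, std, skew]
    return moments


def mean_plus_std(frame: Frame) -> float:
    """Hypothetical keyframe score: mean plus standard deviation of gray levels."""
    g = intensity(frame)
    mean = sum(g) / len(g)
    return mean + math.sqrt(sum((v - mean) ** 2 for v in g) / len(g))


def detect_shot_starts(frames: Sequence[Frame], pcc_threshold: float = 0.9,
                       cm_threshold: float = 30.0) -> List[int]:
    """Indices at which a new shot starts (frame 0 always starts one).

    A boundary is declared between frames i-1 and i only when their PCC is low
    AND their color-moment vectors are far apart (Euclidean distance).
    """
    starts = [0]
    for i in range(1, len(frames)):
        prev, cur = frames[i - 1], frames[i]
        low_corr = pearson(intensity(prev), intensity(cur)) < pcc_threshold
        far_cm = math.dist(color_moments(prev), color_moments(cur)) > cm_threshold
        if low_corr and far_cm:
            starts.append(i)
    return starts


def select_keyframes(frames: Sequence[Frame], starts: List[int]) -> List[int]:
    """One keyframe index per shot: the frame with the largest mean + std."""
    bounds = starts + [len(frames)]
    return [max(range(s, e), key=lambda i: mean_plus_std(frames[i]))
            for s, e in zip(bounds, bounds[1:])]


def shifted(frame: Frame, d: float) -> List[Pixel]:
    """Copy of a frame with every channel brightened by d (a lighting change)."""
    return [(r + d, g + d, b + d) for r, g, b in frame]


# Two synthetic 10-pixel "shots", each seen under slowly rising brightness.
shot_a = [(10.0 * i, 20.0, 30.0) for i in range(10)]
shot_b = [(200.0, 100.0 + 5 * ((7 * i) % 10), 50.0) for i in range(10)]
video = [shot_a, shifted(shot_a, 2), shifted(shot_a, 4),
         shot_b, shifted(shot_b, 1), shifted(shot_b, 3)]

starts = detect_shot_starts(video)
print(starts, select_keyframes(video, starts))  # [0, 3] [2, 5]
```

In this toy input, the brightness shifts inside each shot leave the PCC at about 1, so no boundary is declared there. This mirrors the lighting-invariance argument for PCC. The cut between the two shots both lowers the PCC and moves the color moments far apart. Requiring the two cues to agree is only one possible fusion rule. The paper's actual decision rule, thresholds, and handling of gradual transitions are not reproduced here.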

References

Khare, M., Srivastava, R.K., Khare, A.: Object tracking using combination of Daubechies complex wavelet transform and Zernike moment. Multimed Tools Appl 76(1), 1247–1290 (2017)

Prakash, O., Gwak, J., Khare, M., Khare, A., Jeon, M.: Human detection in complex real scenes based on combination of biorthogonal wavelet transform and Zernike moments. Optik Int J Light Electron Opt 1(157), 1267–1281 (2018)

Khare, A., Mounika, B.R., Vasu, B.: On retrieval of nearly identical video clips with query frame. In 2019 International Conference on Automation, Computational and Technology Management (ICACTM), pp. 116–121. IEEE (2019)

Singhal, A., Kumar, P., Saini, R., Roy, P.P., Dogra, D.P., Kim, B.G.: Summarization of videos by analyzing affective state of the user through crowdsource. Cognit Syst Res 1(52), 917–930 (2018)

Jaiswal, S., Virmani, S., Sethi, V., De, K., Roy, P.P.: An intelligent recommendation system using gaze and emotion detection. Multimed Tools Appl 2018, 1–20 (2018)

Nigam, S., Khare, A.: Integration of moment invariants and uniform local binary patterns for human activity recognition in video sequences. Multimed Tools Appl 75(24), 17303–17332 (2016)

Khare, M., Binh, N.T., Srivastava, R.K., Khare, A.: Vehicle identification in traffic surveillance-complex wavelet transform based approach. J Sci Technol 52(4A), 29–38 (2014)

Khare, M., Srivastava, R.K., Khare, A.: Single change detection-based moving object segmentation by using Daubechies complex wavelet transform. IET Image Proc. 8(6), 334–344 (2014)

Birinci, M., Kiranyaz, S.: A perceptual scheme for fully automatic video shot boundary detection. Signal process Image Commun 29(3), 410–423 (2014)

Mohanta, P.P., Saha, S.K., Chanda, B.: A model-based shot boundary detection technique using frame transition parameters. IEEE Trans Multimed 14(1), 223–233 (2012)

Tavassolipour, M., Karimian, M., Kasaei, S.: Event detection and summarization in soccer videos using Bayesian network and copula. IEEE Trans Circ Syst Video Technol 24(2), 291–304 (2014)

Lu, Z.M., Shi, Y.: Fast video shot boundary detection based on SVD and pattern matching. IEEE Trans Image Process 22(12), 5136–5145 (2013)

Ayadi, T., Ellouze, M., Hamdani, T.M., Alimi, A.M.: Movie scenes detection with MIGSOM based on shots semi-supervised clustering. Neural Comput Appl 22(7–8), 1387–1396 (2013)

Dadashi, R., Kanan, H.R.: AVCD-FRA: a novel solution to automatic video cut detection using fuzzy-rule-based approach. Comput Vis Image Underst 117(7), 807–817 (2013)

Jadhav, M.P., Jadhav, D.S.: Video summarization using higher order color moments (VSUHCM). Procedia Comput Sci 1(45), 275–281 (2015)

Sheena, C.V., Narayanan, N.K.: Key-frame extraction by analysis of histograms of video frames using statistical methods. Procedia Comput Sci 1(70), 36–40 (2015)

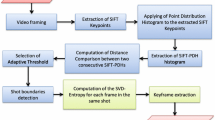

Hannane, R., Elboushaki, A., Afdel, K., Naghabhushan, P., Javed, M.: An efficient method for video shot boundary detection and keyframe extraction using SIFT-point distribution histogram. Int J Multimed Inform Retr 5(2), 89–104 (2016)

Thakre, K.S., RajurkarAM, Manthalkar R.R.: Video partitioning and secured keyframe extraction of MPEG video. Procedia Comput Sci 78, 790–798 (2016)

Yu, L., Cao, J., Chen, M., Cui, X.: Key frame extraction scheme based on sliding window and features. Peer to Peer Netw Appl 11(5), 1141–1152 (2018)

Lu, G., Zhou, Y., Li, X., Yan, P.: Unsupervised, efficient and scalable key-frame selection for automatic summarization of surveillance videos. Multimed Tools Appl 76(5), 6309–6331 (2017)

Loukas, C., Nikiteas, N., Schizas, D., Georgiou, E.: Shot boundary detection in endoscopic surgery videos using a variational Bayesian framework. Int J Comput Assist Radiol Surg 11(11), 1937–1949 (2016)

Thounaojam, D.M., Khelchandra, T., Singh, K.M., Roy, S.: A genetic algorithm and fuzzy logic approach for video shot boundary detection. Comput Intell Neurosci 1(2016), 14 (2016)

Dutta, D., Saha, S.K., Chanda, B.: A shot detection technique using linear regression of shot transition pattern. Multimed Tools Appl 75(1), 93–113 (2016)

Priya, G.L., Domnic, S.: Walsh-Hadamard transform kernel-based feature vector for shot boundary detection. IEEE Trans Image Process 23(12), 5187–5197 (2014)

González-Díaz, I., Martínez-Cortés, T., Gallardo-Antolín, A., Díaz-de-María, F.: Temporal segmentation and keyframe selection methods for user-generated video search-based annotation. Expert Syst Appl 42(1), 488–502 (2015)

Ji, P., Cao, L., Zhang, X., Zhang, L., Wu, W.: News videos anchor person detection by shot clustering. Neurocomputing 10(123), 86–99 (2014)

Wang, J., Neskovic, P., Cooper, L.N.: Improving nearest neighbor rule with a simple adaptive distance measure. Pattern Recogn Lett 28(2), 207–213 (2007)

Cotsaces, C., Nikolaidis, N., Pitas, I.: Video shot boundary detection and condensed representation: a review. IEEE Signal Process Mag 23(2), 28–37 (2006)

Dang, C., Radha, H.: RPCA-KFE: key frame extraction for video using robust principal component analysis. IEEE Trans Image Process 24(11), 3742–3753 (2015)

VáZquez-MartíN, R., Bandera, A.: Spatio-temporal feature-based keyframe detection from video shots using spectral clustering. Pattern Recogn Lett. 34(7), 770–779 (2013)

Ioannidis, A., Chasanis, V., Likas, A.: Weighted multi-view key-frame extraction. Pattern Recogn Lett 1(72), 52–61 (2016)

Priya, G.L., Domnic, S.: Shot based keyframe extraction for ecological video indexing and retrieval. Ecol Inform 1(23), 107–117 (2014)

Mendi, E., Bayrak, C.: Shot boundary detection and key-frame extraction from neurosurgical video sequences. Imaging Sci J 60(2), 90–96 (2012)

Vila, M., Bardera, A., Xu, Q., Feixas, M., Sbert, M.: Tsallis entropy-based information measures for shot boundary detection and keyframe selection. SIViP 7(3), 507–520 (2013)

Furuichi, S.: Information theoretical properties of Tsallis entropies. J Math Phys 47(2), 023302 (2006)

Burbea, J., Rao, C.: On the convexity of some divergence measures based on entropy functions. IEEE Trans Inf Theory 28(3), 489–495 (1982)

Vovk, V., Nouretdinov, I., Gammerman, A.: Testing exchangeability on-line. In Proceedings of the 20th International Conference on Machine Learning (ICML-03), pp. 768–775. Washington, DC (2003)

Chakraborty, D., Roy, P.P., Saini, R., Alvarez, J.M., Pal, U.: Frame selection for OCR from video stream of book flipping. Multimed Tools Appl 77(1), 985–1008 (2018)

Deb, K. (2001). Multi-objective optimization using evolutionary algorithms, vol. 16. Wiley

Poornima, K., Kanchana, R.: A method to align images using image segmentation. IJCSE 2(1), 294–298 (2012)

Khare, M., Srivastava, R.K., Khare, A.: Moving object segmentation in Daubechies complex wavelet domain. SIViP 9(3), 635–650 (2015)

Shaker, I.F., Abd-Elrahman, A., Abdel-Gawad, A.K., Sherief, M.A.: Building extraction from high resolution space images in high density residential areas in the Great Cairo region. Remote Sens 3(4), 781–791 (2011)

Lee, VirtualDub home page. http://www.virtualdub.org/index.html. Accessed 27 Sep 2018

Additional information

Communicated by A. Sur.

About this article

Cite this article

Bommisetty, R.M., Prakash, O. & Khare, A. Keyframe extraction using Pearson correlation coefficient and color moments. Multimedia Systems 26, 267–299 (2020). https://doi.org/10.1007/s00530-019-00642-8

DOI: https://doi.org/10.1007/s00530-019-00642-8