Abstract

Text-to-speech (TTS) technology has made significant progress over the past decade and remains an active area of research and development for human–computer interactive systems. Although many speech synthesis models are available for different languages, each addressing particular domain requirements and applications, no single source of information on current trends in Indian language speech synthesis has been available to date. This makes it difficult for beginners to initiate research on TTS systems for low-resourced languages. This paper reviews the contributions made by different researchers in the field of Indian language speech synthesis, together with a study of the characteristics of Indian languages and the associated challenges in designing TTS systems. It also discusses a set of available applications and tools resulting from projects undertaken by different organizations, along with possible future developments. The aim is to provide a single reference for an important strand of speech synthesis research that may benefit anyone interested in initiating research in this area.

References

Coelho, L.P., Braga, D., Dias, M.S., Mateo, C.G.: On the development of an automatic voice pleasantness classification and intensity estimation system. Comput. Speech Lang. 27(1), 75–88 (2013)

Feng, J., Ramabhadran, B., Hansel, J., Williams, J.D.: Trends in speech and language processing. IEEE Signal Process. Mag. 29(1), 177–179 (2012)

Alwan, A., Narayanan, S., Strope, B., Shen, A.: A speech production and perception models and their applications to synthesis, recognition, and coding. In Proc: URSI International Symposium on Signals, Systems, and Electronics, pp. 367–372 (19950

Ostendorf, M., Bulyko, I.: The impact of speech recognition on speech synthesis. In Proc: IEEE Workshop on Speech Synthesis, pp. 99–106 (2002)

Botha, G.R., Barnard, E.: Factors that affect the accuracy of text-based language identification. Comput. Speech Lang. 26(5), 307–320 (2012)

Li, Y., Lee, T., Qian, Y.: Analysis and modeling of F0 contours for Cantonese text-to-speech. ACM Trans. Asian Lang. Information Process. (TALIP) 3(3), 169–180 (2004)

Bali, K., Talukdar, P.P., Krishna, N.S., Ramakrishnan, A.G.: Tools for the development of a Hindi speech synthesis system. In Proc: Fifth ISCA Workshop on Speech Synthesis (2004)

Narasimhan, B., Sproat, R., Kiraz, G.: Schwa-deletion in hindi text-to-speech synthesis. Int. J. Speech Technol. 7(4), 319–333 (2004)

Rama, J., Ramakrishnan, A.G., Muralishankar, R., Prathibha, R.: A complete text-to-speech synthesis system in tamil. In Proc: WSS, pp. 191–194 (2002)

Talesara, S., Patil, H.A., Patel, T., Sailor, H., Shah, N.A.: Novel Gaussian filter-based automatic labeling of speech data for tts system in gujarati language. In Proc: ICALP, pp 139–142 (2013)

Panda, S.P., Nayak, A.K.: Integration of fuzzy if-then rule with waveform concatenation technique for text-to-speech synthesis in Odia. In Proc: 13th IEEE International Conference on Information Technology, pp. 88–93 (2014)

Christogiannis, C., Varvarigou, T., Zappa, A., Vamvakoulas, Y., Shih, C., and Arvaniti, A.: Construction of the acoustic inventory for a greek text-to-speech concatenative synthesis system. In Proc: IEEE International Conference on Acoustics, Speech, and Signal Processing, pp. II929–II932 (2002)

Maia, R., Akamine, M., Gales, M.J.: Complex cepstrum for statistical parametric speech synthesis. Speech Commun. 55(5), 606–618 (2013)

Maia, R., Akamine, M.: On the impact of excitation and spectral parameters for expressive statistical parametric speech synthesis. Comput. Speech Lang. 28(5), 1209–1232 (2014)

Panda, S.P., Nayak, A.K.: An efficient model for text-to-speech synthesis in Indian languages. Int. J. Speech Technol. 18(3), 305–315 (2015)

Panda, S.P., Nayak, A.K.: A waveform concatenation technique for text-to-speech synthesis. Int. J. Speech Technol. 20(4), 959–976 (2017)

Besacier, L., Barnard, E., Karpov, A., Schultz, T.: Automatic speech recognition for under-resourced languages: a survey. Speech Commun. 56, 85–100 (2014)

Panda, S.P., Nayak, A.K.: A rule-based concatenative approach to speech synthesis in Indian language text-to-speech systems. In Proc: Intelligent Computing, Communication and Devices, pp. 523-531, Springer (2015)

Handley, Z.: Is text-to-speech synthesis ready for use in computer-assisted language learning. Speech Commun. 51(10), 906–919 (2009)

McCoy, K.F., Arnott, J.L., Ferres, L., Oken, M.F., Roark, B.: Speech and language processing as assistive technologies. Comput. Speech Lang. 27(6), 1143–1146 (2013)

Bates, M.: The use of syntax in a speech understanding system. IEEE Trans. Acoust. Speech Signal Process. 23(6), 112–117 (1975)

Moller, S., Jekosch, U., Mersdorf, J., Kraft, V.: Auditory assessment of synthesized speech in application scenarios: two case studies. Speech Commun. 34(3), 229–246 (2001)

Panda, S.P., Nayak, A.K., Patnaik, S.: Text-to-speech synthesis with an Indian language perspective. Int. J. Grid Util. Comput. 6(3–4), 170–178 (2015)

Liang, M.S., Yang, R.C., Chiang, Y.C., Lyu, D.C., Lyu, R. Y.: A Taiwanese text-to-speech system with applications to language learning. In Proc: IEEE International Conference on Advanced Learning Technologies, pp. 91–95 (20010

Panda, S.P., Nayak, A.K.: modified rule-based concatenative technique for intelligible speech synthesis In indian languages. Adv. Sci. Lett. 22(2), 557–563 (2016)

Manning, A., Amare, N.: A simpler approach to grammar: (re)engineering parts-of-speech instruction to assist efl/esp students. In Proc: IEEE International Professional Communication Conference, pp. 1–9 (2007)

Nebbia, L., Quazza, S., Luigi, P.S.: A specialised speech synthesis technique for application to automatic reverse directory service. In Proc: 4th Workshop on Interactive Voice Technology for Telecommunication, pp. 223–228 (1998)

Rafieee, M.S., Jafari, S., Ahmadi, H.S., Jafari, M.: Considerations to spoken language recognition for text-to-speech applications. In Proc: 13th ICCMS, pp. 303–309 (2011)

Sak, H., Saraclar, M., Guungoor, T.: Morphology-based and sub-word language modeling for turkish speech recognition. In Proc: ICASSP, pp. 5402–5405 (2010)

Boldt, J., Ellis, D.: A simple correlation-based model of intelligibility for nonlinear speech enhancement and separation. In Proc: EUPSIPCO, pp. 1849–1853 (2009)

Coulston, R., Oviatt, S., Darves, C.: Amplitude convergence in children’s conversational speech with animated personas. In Proc: Seventh International Conference on Spoken Language, pp. 5402–5405 (2002)

Kleinberger, T., Becker, M., Ras, E., Holzinger, A.: Ambient intelligence in assisted living: enable elderly people to handle future interfaces. Lecture Notes Comput. Sci. Springer 4555, 103–112 (2007)

Qiu, L., Benbasat, I.: An investigation into the effects of Text-To-Speech voice and 3D avatars on the perception of presence and flow of live help in electronic commerce. ACM Trans. Comput. Hum. Interact. (TOCHI) 12(4), 329–355 (2005)

Lu, H., Brush, A., Priyantha, B., Karlson, A.K., Liu, J.: Speaker- sense: energy efficient unobtrusive speaker identification on mobile phones. In Proc: 9th International Conference on Pervasive Computing, pp. 188–205 (2011)

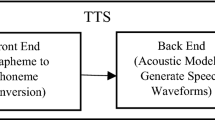

Tabet, Y., Boughazi, M.: Speech synthesis techniques. a survey. In Proc: 7th IEEE International Workshop on System, Signal processing and their Applications, pp. 67–70 (2011)

Buza, O., Toderean, G., Nica, A., Caruntu, A.: Voice signal processing for speech synthesis. In Proc: IEEE International Conference on Automation, Quality and Testing, Robotics, pp. 360–364 (2006)

Rojc, M., Kacic, Z.: Time and space-efficient architecture for a corpus-based text-to-speech synthesis system. Speech Commun. 49(3), 230–249 (2007)

Sasirekha, D., Chandra, E.: Text to speech: a simple tutorial. Int. J. Soft Comput. Eng. 2(1), 275–278 (2012)

Panda, S.P., Nayak, A.K.: A pronunciation rule-based speech synthesis technique for Odia numerals. In Proc: Computational Intelligence in Data Mining, pp. 483–491, Springer (2016)

Panda, S.P., Nayak, A.K.: A Context-based numeral reading technique for text to speech systems. Int. J. Electr. Comput. Eng. 8(6), 4533–4544 (2018)

Raj, A., Sarkar, T., Pammi, S.C, Yuvaraj, S., Bansal, M., Prahallad, K., Black. A.W.: Text processing for text to speech systems in Indian languages. In: Proc: 6th ISCA Speech Synthesis Workshop, pp. 188–193 (2007)

Ebden, P., Sproat, R.: The Kestrel TTS text normalization system. Nat. Lang. Eng. 21(3), 333–353 (2015)

Alias, F., Sevillano, X., Socor, J.C., Gonzalvo, X.: Towards high-quality next-generation text-to-speech synthesis: a multidomain approach by automatic domain classification. IEEE Trans. Audio Speech Lang. Process. 16(7), 1340–1354 (2008)

Kim, B., Lee, G.G., Lee, J.H.: Morpheme-based grapheme to phoneme conversion using phonetic patterns and morphophonemic connectivity information. ACM Trans. Asian Lang. Inf. Process. (TALIP) 1(1), 65–82 (2002)

Ward, N., Nakagawa, S.: Automatic user-adaptive speaking rate selection for information delivery. In Proc: 7th International Conference on Spoken Language Processing, pp. 341–362 (2002)

Prafianto, H., Nose, T., Chiba, Y., Ito, A.: Improving human scoring of prosody using parametric speech synthesis. Speech Commun. 111, 14–21 (2019)

Jia, Y., Huang, D., Liu, W., Dong, Y., Yu, S., Wang, H.: Text normalization in mandarin text-to-speech system. In Proc: IEEE International Conference on Acoustics, Speech and Signal Processing, pp. 4693–4696 (2008)

Zhou, J., Su, X., Ylianttila, M., Riekki, J.: Exploring pervasive service computing opportunities for pursuing successful ageing. The Gerontologist, pp 73–82 (2012)

Kujala, J.V.: A probabilistic approach to pronunciation by analogy. Comput. Speech Lang. 27(5), 1049–1067 (2013)

Delogu, C., Conte, S., Sementina, C.: Cognitive factors in the evaluation of synthetic speech. Speech Commun. 24(2), 153–168 (1998)

Mayo, C., Robert, C., Clark, A.J., King, S.: Weighting of acoustic cues to synthetic speech naturalness: a multidimensional scaling analysis. Speech Commun. 53(3), 311–326 (2011)

Prahallad, K., Kumar, E.N., Keri, V., Rajendran, S., Black, A.W.: The iiit-h indic speech databases. In: Thirteenth Annual Conference of the International Speech Communication Association (2012)

Viswanathan, M.: Measuring speech quality for text-to-speech systems: development and assessment of a modified mean opinion score (mos) scale. Comput. Speech Lang. 19(1), 55–83 (2005)

Madhavi, G., Mini, B., Balakrishnan, N., Raj, R.: Om: one tool for many (indian) languages. J. Zhejiang Univ. Sci. A 6(11), 1348–1353 (2005)

Sarungbam, J.K., Kumar, B., Choudhary, A.: Script identification and language detection of 12 indian languages using dwt and template matching of frequently occurring character (s). In Proc: 5th IEEE International Conference on Confluence The Next Generation Information Technology, pp. 669–674 (2014)

Hangarge, M., Dhandra, B. V.: Shape and morphological transformation based features for language identification in indian document images. In Proc: IEEE First International Conference on Emerging Trends in Engineering and Technology, pp. 1175–1180 (2008)

Reddy, M.V., Margaret, M.T., Hanumanthappa, M.: Phoneme-to-speech dictionary for indian languages. In Proc: IEEE International Conference on Soft-Computing and Networks Security, pp. 1–4 (2015)

Reddy, V.R., Maity, S., Rao, K.S.: Identification of indian languages using multi-level spectral and prosodic features. Int. J. Speech Technol. 16(4), 489–511 (2013)

Kishore, S.P., Kumar, R., Sangal, R.: A data driven synthesis approach for indian languages using syllable as basic unit. In Proc: International Conference on Natural Language Processing, pp. 311–316 (2002)

Kanth, B L., Keri, V., Prahallad. K.S.: Durational characteristics of indian phonemes for language discrimination. In Proc: Information Systems for Indian Languages, pp. 130–135 (2011)

Lavanya, P., Kishore, P., Madhavi, G.T.: A simple approach for building transliteration editors for indian languages. J. Zhejiang Univ. Sci. A 6(11), 1354–1361 (2005)

Patil, H., Patel, T.B., Shah, N.J., Sailor, H.B., Krishnan, R., Kasthuri, G.R., Nagarajan, T., Christina, L., Kumar, N., Raghavendra, V., Kishore, S.P., Prasanna, S. R.M., Adiga, N., Singh, S.R., Anand, K., Kumar, P., Singh, B.C., Binil Kumar, S.L., Bhadran, T.G., Sajini, T., Saha, A., Basu, T., Rao, K.S., Narendra, N.P., Sao, A.K., Kumar, R., Talukdar, P., Chandra, S., Acharyaa, P., Lata, S., Murthy, H. A.: A syllable-based framework for unit selection synthesis in 13 indian languages. In Proc: IEEE International Conference on Asian Spoken Language Research and Evaluation, pp. 1–8 (2013)

Murthy, H.A., Bellur, A., Viswanath, V., Narayanan, B., Susan, A., Kasthuri, G., Krishnan, R., Rao, K.S., Maity, S., Narendra, N.P., Reddy, R., Ghosh, K., Sulochana, K. G., Abhilash, E. L., Sajini, T., Sasikumar, M., Singh, B.C., Kumar, P., Vijayaditya, P., Raghavendra, E. V., and Prahallad, K.: Building unit selection speech synthesis in indian languages: An initiative by an indian consortium. In Proc: COCOSDA, pp. 1–7 (2010)

Bellur, A., Narayan, K.B., Krishnan, K.R., Murthy, H.: A data driven synthesis approach for indian languages using syllable as basic unit. In Proc: IEEE National Conference on Communications, pp. 1–5 (2011)

Christiansen, C., Pedersen, M.S., Dau, T.: Prediction of speech intelligibility based on an auditory preprocessing model. Speech Commun. 52(7), 678–692 (2010)

Ma, J., Loizou, P.: Snr loss: a new objective measure for predicting the intelligibility of noise-suppressed speech. Speech Commun. 53(3), 340–354 (2011)

Taal, C., Hendriks, R., Heusdens, R., Jensen, J.: An algorithm for intelligibility prediction of time-frequency weighted noisy speech. IEEE Trans. Audio Speech Lang. Process. 19(7), 2125–2136 (2011)

Kates, J.M., Arehart, K.H.: Coherence and the speech intelligibility index. J. Acoust. Soc. Am. 117(4), 2224–2237 (2005)

Huang, G., Er, M.J.: An adaptive control scheme for articulatory synthesis of plosive-vowel sequences. In Proc: 38th Annual Conference on IEEE Industrial Electronics Society, pp. 1465–1470 (2012)

Qinsheng, D., Jian, Z., Lirong, W., Lijuan, S.: Articulatory speech synthesis: a survey. In Proc: 14th IEEE International Conference on Computational Science and Engineering, pp. 539–542 (2011)

Black, A.W., Bunnell, H.T., Dou, Y., Muthukumar, P.K., Metze, F., Perry, D., Polzeh, T., Prahallad, K., Steidl, S., Vaughn, C.: Articulatory features for expressive speech synthesis. In Proc: IEEE International Conference on Acoustics, Speech and Signal Processing, pp. 4005–4008 (2012)

Yu, B.L., Zeng, S.C.: Acoustic-to-articulatory mapping codebook constraint for determining vocal-tract length for inverse speech problem and articulatory synthesis. In Proc: 5th IEEE International Conference on Signal Processing, pp. 827–830 (2020)

Aryal, S., Gutierrez-Osuna, R.: Accent conversion through cross-speaker articulatory synthesis. In Proc: IEEE International Conference on Acoustics, Speech and Signal Processing, pp. 7694–7698 (2014)

Aryal, S., utierrez-Osuna, R.: Articulatory inversion and synthesis: towards articulatory-based modification of speech. In Proc: IEEE International Conference on Acoustics, Speech and Signal Processing, pp. 7952–7956 (2013)

Badin, P., Abry, C.: Articulatory synthesis from x-rays and inversion for an adaptive speech robo. In Proc: Fourth International Conference on Spoken Language, pp. 1125–1128 (1996)

Aryal, S., Osuna, R.: Data driven articulatory synthesis with deep neural networks. Comput. Speech Lang. 36, 260–273 (2016)

Illa, A., Ghosh, P.K.: The impact of speaking rate on acoustic-to-articulatory inversion. Comput. Speech Lang. 59, 75–90 (2020)

Pape, D., Jesus, L., Birkholz, P.: Intervocalic fricative perception in European Portuguese: an articulatory synthesis study. Speech Commun. 74, 93–103 (2015)

Ngo, T., Akagi, M., Birkholz, P.: Effect of articulatory and acoustic features on the intelligibility of speech in noise: an articulatory synthesis study. Speech Commun. 117, 13–20 (2020)

Birkholz, P., Lucia, M., Xu, Y.Scherbaum, Rube, C.: Manipulation of the prosodic features of vocal tract length, nasality and articulatory precision using articulatory synthesis. Comput. Speech Lang. 41, 116–127 (2017)

Chen, C.P., Huang, Y.C., Wu, C.H., Lee, K.D.: Polyglot speech synthesis based on cross-lingual frame selection using auditory and articulatory features. IEEE/ACM Trans. Audio Speech Lang. Process. 22(10), 1558–1570 (2014)

Stevens, K.N.: Toward formant synthesis with articulatory controls. In Proc: IEEE Workshop on Speech Synthesis, pp. 67–72 (2002)

Ling, Z.H., Richmond, K., Yamagishi, J., Wang, R.H.: Integrating articulatory features into hmm-based parametric speech synthesis. IEEE Trans. Audio Speech Lang. Process. 17(6), 1171–1185 (2009)

Klatt, D.H.: Software for a cascade/parallel formant synthesizer. J. Acoust. Soc. Am. 67(3), 971–995 (1980)

Summerfield, C.D.: A multi-channel formant speech synthesis system. In Proc: Fourth IEEE Region 10 International Conference, pp. 490–493 (1989)

Khorinphan, C., Phansamdaeng, S., Saiyod, S.: Thai speech synthesis with emotional tone: Based on formant synthesis for home robot. In Proc: Third IEEE ICT International Student Project Conference, pp. 111–114 (2014)

Sousa, J., Araujo, F., Klautau, A.: Utterance copy for klatt’s speech synthesizer using genetic algorithm. In Proc: IEEE Workshop on Spoken Language Technology, pp. 89–94 (2014)

Trindade, J., Araujo, F., Klautau, A., Batista, P.: A genetic algorithm with look-ahead mechanism to estimate formant synthesizer input parameters. In Proc: IEEE Congress on Evolutionary Computation, pp. 3035–3042 (2013)

Chan, K., Hall, M.: The importance of vowel formant frequencies and proximity in vowel space to the perception of foreign accent. J. Phonet. 77, 100919 (2019)

Pellicani, A., Fontes, A., Santos, F., Pellicani, A., Aguiar-Ricz, L.: Fundamental frequency and formants before and after prolonged voice use in teachers. J. Voice 32(2), 177–184 (2018)

Barkana, B., Patel, A.: Analysis of vowel production in Mandarin/Hindi/American- accented English for accent recognition systems. Appl. Acoust. 16, 107203 (2020)

Akçay, M., Oğuz, K.: Speech emotion recognition: emotional models, databases, features, preprocessing methods, supporting modalities, and classifiers. Speech Commun. 116, 56–76 (2020)

Hansen, J.H., Chappell, D.T.: An auditory-based distortion measure with application to concatenative speech synthesis. IEEE Trans. On Speech Audio Process. 6(5), 489–495 (1998)

Panda, S.P., Nayak, A.K.: Vowel onset point based waveform concatenation technique for intelligible speech synthesis. In Proc: International Conference on Computing Methodologies and Communication (ICCMC 2017), IEEE, pp. 622–626 (2018)

Schwarz, D.: Corpus-based concatenative synthesis. IEEE Signal Process. Mag. 24(2), 92–104 (2007)

Conkie, A.: Robust unit selection system for speech synthesis. In Proc: 137th meeting of the Acoustical Society of America, pp. 978 (1999)

Black, A.W., Lenzo, K.A.: Optimal data selection for unit selection synthesis. In Proc: 4th ISCA Tutorial and Research Workshop (ITRW) on Speech Synthesis (2001)

Hunt, A.J., Black, A.W.: Unit selection in a concatenative speech synthesis system using a large speech database. In Proc: International Conference on Acoustics, Speech, and Signal Processing, ICASSP-96, pp. 373–376 (1996)

Sharma, P., Abrol, V., Nivedita Sao, A.K.: Reducing footprint of unit selection based text-to-speech system using compressed sensing and sparse representation. Comput. Speech Lang. 52, 191–208 (2018)

Nukaga, N., Kamoshida, R., Nagamatsu, K., Kitahara, Y.: Scalable implementation of unit selection based text-to-speech system for embedded solutions. In Proc: IEEE International Conference on Acoustic, Speech and Signal Processing, pp. 849–852 (2006)

Bellegarda, J.R.: Unit-centric feature mapping for inventory pruning in unit selection text-to-speech synthesis. IEEE Trans. Audio Speech Lang. Process. 16(1), 74–82 (2008)

Narendra, N.P., Rao, K.S.: Optimal weight tuning method for unit selection cost functions in syllable based text-to-speech synthesis. Appl. Soft Comput. 13, 773–781 (2013)

Zen, H., Tokuda, K., Black, A.W.: Statistical parametric speech synthesis. Speech Commun. 51(11), 1039–1064 (2009)

Black, A.W., Campbell, N.: Optimising selection of units from speech databases for concatenative synthesis (1995)

Xia, X.J., Ling, Z.H., Yang, C.Y., Dai, L.R.: Improved unit selection speech synthesis method utilizing subjective evaluation results on synthetic speech. In Proc: 8th IEEE International Symposium on Chinese Spoken Language Processing, pp. 160–164 (2012)

Bellegarda, J.R.: Globally optimal training of unit boundaries in unit selection text-to-speech synthesis. IEEE Trans. Audio Speech Lang. Process. 15(3), 957–965 (2008)

Epko, J., Talafova, R., Vrabec, J.: Indexing join costs for faster unit selection synthesis. In Proc: 15th IEEE International Conference on Systems, Signals and Image Processing, pp. 503–506 (2008)

Kishore, S.P., Black, A.W.: Unit size in unit selection speech synthesis. In Proc: INTERSPEECH, pp. 1–7 (2003)

Kishore, S. P., Black, A.W., Kumar, R., Sangal, R.: Experiments with unit selection speech databases for indian languages. In Proc: National seminar on Language Technology Tools, pp. 1–7 (2003)

Prahallad, K., Vadapalli, A., Elluru, N., Mantena, G., Pulugundla, B., Bhaskararao, P., Murthy, H.A., King, S., Karaiskos, V., Black, A.W.: The blizzard challenge 2013-indian language task. In Proc: Blizzard Challenge Workshop, pp. 1–7 (2013)

Black A., Tokuda, K.: The blizzard challenge 2005: Evaluating corpus-based speech synthesis on common databases. In Proc: Interspeech, pp. 1–7 (2005)

Charpentier, F.J., Stella, M.G.: Diphone synthesis using an overlap-add technique for speech waveforms concatenation. In Proc: IEEE International Conference on Acoustics, Speech, and Signal Processing, pp. 2015–2018 (1986)

Justin, T., Struc, V., Dobrisek, S., Vesnicer, B., Ipsic, I., Mihelic, F., 2015. Speaker de-identification using diphone recognition and speech synthesis. In Proc: IEEE International Conference and Workshops on Automatic Face and Gesture Recognition, pp. 1–7 (2015)

Mellahi, T., Hamdi, R.: Lpc-based formant enhancement method in kalman filtering for speech enhancement. AEU-Int. J. Electron. Commun. 69(2), 545–554 (2015)

Valbret, H., Moulines, E., Tubach, J.P.: Voice transformation using psola technique. In Proc: IEEE International Conference on Acoustics, Speech, and Signal Processing, pp. 145–148 (1992)

Dutoit, T., Leich, H.: Mbr-psola: text-to-speech synthesis based on an mbere-synthesis of the segments database. Speech Commun. 13(3), 435–440 (1993)

Hamon, C., Mouline, E., Charpentier, F.: A diphone synthesis system based on time-domain prosodic modifications of speech. In Proc: International Conference on Acoustics, Speech, and Signal Processing, pp. 238–241 (1989)

Katae, N., Kimura, S.: Natural prosody generation for domain specific text-to-speech systems. In Proc: Fourth International Conference on Spoken Language Processing, pp. 1852–1855 (1996)

Aust, H., Oerder, M., Seide, F., Steinbiss, V.: A spoken language inquiry system for automatic train timetable information. Philips J. Res. 49(4), 399–418 (1995)

Meng, H.M., Lee, S., Wai, C.: Intelligent speech for information systems: towards biliteracy and trilingualism. Interact. Comput. 14(4), 327–339 (2002)

Fries, G.: Hybrid time-and frequency-domain speech synthesis with extended glottal source generation. In Proc: IEEE International Conference on Acoustics, Speech, and Signal Processing, pp. 581–584 (1994)

Phung, T.N., Mai, C.L., Akagi, M.: A concatenative speech synthesis for monosyllabic languages with limited data. In Proc: IEEE Signal and Information Processing Association Annual Summit and Conference, pp. 1–10 (2012)

Narendra, N.P., Rao, K.S.: Syllable specific unit selection cost functions for text-to-speech synthesis. ACM Trans. Speech Lang. Process. (TSLP) 9(3), 5 (2012)

Reddy, V.R., Rao, K.S.: Two-stage intonation modeling using feed forward neural networks for syllable based text-to-speech synthesis. Comput. Speech Lang. 17(5), 1105–1126 (2013)

Xie, Y., Zhang, B., Zhang, J.: The training of the tone of mandarin two syllable words based on pitch projection synthesis speech. In Proc: 9th IEEE International Symposium on Chinese Spoken Language Processing, pp. 435–435 (2014)

Narendra, N.P., Rao, K.S., Ghosh, K., Vempada, R.R., Maity, S.: Development of syllable-based text to speech synthesis system in bengali. Int. J. Speech Technol. 14(1), 167–181 (2011)

Thomas, S., Rao, M.N., Murthy, H., Ramalingam, C.S.: Natural sounding tts based on syllable-like units. In Proc: 14th IEEE European Signal Processing Conference, pp. 1–5 (2006)

Venugopalakrishna, Y.R., Vinodh, M.V., Murthy, H., Ramalingam, C.S.: Methods for improving the quality of syllable based speech synthesis. In Proc: IEEE Spoken Language Technology Workshop, pp. 29–32 (2008)

Wu, C.H., Huang, Y.C., Lee, C.H., Guo, J.C.: Synthesis of spontaneous speech with syllable contraction using state-based context-dependent voice transformation. IEEE/ACM Trans. Audio Speech Lang. Process. 22(3), 585–595 (2014)

Raghavendra, E.V., Desai, S., Yegnanarayana, B., Black, A.W., Prahallad, K.: Global syllable set for building speech synthesis in indian languages. In Proc: IEEE Spoken Language Technology Workshop, pp. 49–52 (2008)

Latorre, J., Iwano, K., Furui, S.: Polyglot synthesis using a mixture of monolingual corpora. In Proc: ICASSP, pp. 1–4 (2005)

Black, A.W., Lenzo, K.A.: Multilingual text-to-speech synthesis. Proc Int. Conf. Acoust. Speech Signal Process. 3, iii-761 (2004)

Ramani, B., Actlin Jeeva, M.P., Vijayalakshmi, P., Nagarajan, T.: Voice conversion-based multilingual to polyglot speech synthesizer for Indian languages. In Proc: IEEE Region 10 Conference, pp. 1–4 (2013)

Latorre, J., Iwano, K., Furui, S.: New approach to the polyglot speech generation by means of an HMM-based speaker adaptable synthesizer. Speech Commun. 48, 1227–1242 (2006)

Chen, C.P., Huang, Y.C., Wu, C.H., Lee, K.D.: Cross-lingual frame selection method for polyglot speech synthesis. In Proc: IEEE International Conference on Acoustics, Speech and Signal Processing, pp. 4521–4524 (2012)

Solomi, V., Sherlin, M.S., Saranya, G., Anushiya, R., Vijayalakshmi, P., Nagarajan, T.: Performance comparison of KLD and PoG metrics for finding the acoustic similarity between phonemes for the development of a polyglot synthesizer. In Proc: IEEE Region 10 Conference, pp. 1–4 (2014)

Romsdorfer, H., Pfister, B.: Text analysis and language identification for polyglot text-to-speech synthesis. Speech Commun. 49, 697–724 (2007)

Solomi, V.S., Christina, S.L., Rachel, G.A., Ramani, B., Vijayalakshmi, P., Nagarajan, T.: Analysis on acoustic similarities between tamil and english phonemes using product of likelihood-Gaussians for an HMM-based mixed-language synthesizer. In Proc: IEEE International Conference on Asian Spoken Language Research and Evaluation, pp. 1–5 (2013)

Gibson, M., Byrne, W.: Unsupervised intralingual and cross-lingual speaker adaptation for hmm-based speech synthesis using two-pass decision tree construction. IEEE Trans. Audio Speech Lang. Process. 19(4), 895–904 (2011)

Lorenzo-Trueba, J., Barra-Chicote, R., San-Segundo, R., Ferreiros, J., Yamagishi, J., Montero, J.M.: Emotion transplantation through adaptation in hmm-based speech synthesis. Comput. Speech Lang. 34(1), 292–307 (2015)

Maeno, Y., Nose, T., Kobayashi, T., Koriyama, T., Ijima, Y., Nakajima, H., Mizuno, H., Yoshioka, O.: Prosodic variation enhancement using unsupervised context labeling for hmm-based expressive speech synthesis. Speech Commun. 57, 144–154 (2014)

Nose, T., Kobayashi, T.: An intuitive style control technique in hmm based expressive speech synthesis using subjective style intensity and multiple regression global variance model. Speech Commun. 55(2), 347–357 (2013)

Ekpenyong, M., Urua, E.A., Watts, O., King, S., Yamagishi, J.: Statistical parametric speech synthesis for ibibio. Speech Commun. 56, 243–251 (2014)

Romsdorfer, H.: Speech prosody control using weighted neural network ensembles. In Proc: IEEE International Workshop on Machine Learning for Signal Processing, pp. 1–6 (2009)

Koriyama, T., Nose, T., Kobayashi, T.: Statistical parametric speech synthesis based on gaussian process regression. IEEE J. Select. Topics Signal Process. 8(2), 173–183 (2014)

Ilyes, R., Ayed, Y. B.: Statistical parametric speech synthesis for Arabic language using ann. In Proc: IEEE International Conference on Advanced Technologies for Signal and Image Processing, pp. 452–457 (2014)

Al-Radhi, M., Abdo, O., Csapó, T., Abdou, S., Fashal, M.: A continuous vocoder for statistical parametric speech synthesis and its evaluation using an audio-visual phonetically annotated Arabic corpus. Comput. Speech Lang. 60, 101025 (2020)

Reddy, M., Rao, K.S.: Excitation modelling using epoch features for statistical parametric speech synthesis. Comput. Speech Lang. 60, 101029 (2020)

Nagaraj Adiga, N., Khonglah, B., Mahadeva Prasanna, S.R.: Improved voicing decision using glottal activity features for statistical parametric speech synthesis. Dig. Signal Process. 71, 131–143 (2017)

Tiomkin, S., Malah, D., Shechtman, S., Kons, Z.: A hybrid text-to-speech system that combines concatenative and statistical synthesis units. IEEE Trans. Audio Speech Lang. Process. 19(5), 1278–1288 (2011)

Tokuda, K., Yoshimura, T., Masuko, T., Kobayashi, T, Kitamura, T.: Speech parameter generation algorithms for HMM-based speech synthesis. In Proc: IEEE International Conference on Acoustics, Speech, and Signal Processing, ICASSP’00, pp. 1315–1318 (2000)

Tokuda, K., Nankaku, Y., Toda, T., Zen, H., Yamagishi, J., Oura, K.: Speech synthesis based on hidden Markov models. Proc. IEEE 101(5), 1234–1252 (2013)

Toda, S., Neubig, T., Sakti, G., Nakamura, S.: A postfilter to modify the modulation spectrum in hmm-based speech synthesis. In Proc: IEEE International Conference on Acoustics, Speech and Signal Processing, pp. 290–294 (2014)

Yang, C.Y., Ling, Z.H., Dai, L.R.: Unsupervised prosodic phrase boundary labeling of mandarin speech synthesis database using context-dependent hmm. In Proc: IEEE International Conference on Acoustics, Speech and Signal Processing, pp. 6875–6879 (2013)

Gu, H.Y., Lai, M.Y., Hong, W.S.: Speech synthesis using articulatory knowledge based hmm structure. In Proc: IEEE International Conference on Machine Learning and Cybernetics, pp. 371–376 (2014)

Bollepalli, B., Urbain, J., Raitio, T., Gustafson, J., Cakmak, H.: A comparative evaluation of vocoding techniques for hmm-based laughter synthesis. In Proc: IEEE International Conference on Acoustics, Speech and Signal Processing, pp. 255–259 (2014)

Kawahara, H.: Straight-tempo: A universal tool to manipulate linguistic and para-linguistic speech information. In Proc: IEEE International Conference on Systems, Man, and Cybernetics, pp. 1620–1625 (1997)

Saoudi, S., Boucher, J.M., Le, A.: Guyader. A new efficient algorithm to compute the lsp parameters for speech coding. Signal Process. 28(2), 201–212 (1992)

Yu, S.Z.: Hidden semi-markov models. Signal Process. 174(2), 215–243 (2010)

Cai, M.Q., Ling, Z.H., Dai, L.R.: Statistical parametric speech synthesis using a hidden trajectory model. Speech Commun. 72, 149–159 (2015)

Kawahara, H., Morise, M., Takahashi, T., Irino, T., Banno, H., Fujimura, O.: Group delay for acoustic event representation and its application for speech aperiodicity analysis. In Proc: EUSIPCO, pp. 2219–2223 (2007)

Ramani, B., Christina, S.L., Rachel, G.A., Solomi, V.S., Nandwana, M.K., Prakash, A., Shanmugam, S.A., Krishnan, R., Kishore, S., Samudravijaya, K. and Vijayalakshmi, P., 2013. A common attribute based unified HTS framework for speech synthesis in indian languages. In Proc: 8th ISCA Workshop on Speech Synthesis, pp. 311-316

Kang, S., Qian, X., Meng, H.: Multi-distribution deep belief network for speech synthesis. In Proc: IEEE International Conference on Acoustics, Speech and Signal Processing (ICASSP), pp. 8012–8016 (2013)

Zen, H., Senior, A., Schuster, M.: Statistical parametric speech synthesis using deep neural networks. In Proc: IEEE International Conference on Acoustics, Speech and Signal Processing (ICASSP), pp. 7962–7966 (2013)

Ronanki, S., Reddy, S., Bollepalli, B., King, S.: DNN-based Speech Synthesis for Indian Languages from ASCII text. arXiv preprint arXiv:1608.05374 (2016)

Hayashi, T., Yamamoto, R., Inoue, K., Yoshimura, T., Watanabe, S., Toda, T., Takeda, K., Zhang, Y., Tan, X.: ESPnet-TTS: Unified, reproducible, and integratable open source end-to-end text-to-speech toolkit. arXiv preprint arXiv:1910.10909 (2019)

Sotelo, J., Mehri, S., Kumar, K., Santos, J.F., Kastner, K., Courville, A., Bengio, Y.: Char2wav: End-to-end speech synthesis. In Proc: ICLR (2017)

Nicolson, A., Paliwal, K.: Deep learning for minimum mean-square error approaches to speech enhancement. Speech Commun. 111, 44–55 (2019)

Chang, Y.: Evaluation of TTS systems in intelligibility and comprehension tasks: a case study of HTS-2008 and multisyn synthesizers. Comput. Linguist. Chin. Lang. Process. 17(3), 109–128 (2012)

Benoît, C., Grice, M., Hazan, V.: The SUS test: a method for the assessment of text-to-speech synthesis intelligibility using Semantically Unpredictable Sentences. Speech Commun. 18, 381–392 (1996)

Benoît, C.: An intelligibility test using semantically unpredictable sentences: towards the quantification of linguistic complexity. Speech Commun. 9(4), 293–304 (1990)

Schinkel-Bielefeld, N.: Training listeners for multi-channel audio quality evaluation in MUSHRA with a special focus on loop setting. In Proc: Eighth International Conference on Quality of Multimedia Experience (QoMEX), pp. 1–6 (2016)

Kraft, S., Zölzer, U.: BeaqleJS: HTML5 and JavaScript based framework for the subjective evaluation of audio quality. In Proc: Linux Audio Conference, pp. 1–6 (2014)

Latorre, J., Iwano, K., Furui, S.: Combining Gaussian mixture model with global variance term to improve the quality of an HMM-based polyglot speech synthesizer. In Proc: IEEE International Conference on Acoustics, Speech and Signal Processing, pp. IV–1241 (2007)

Lu, H., Ling, Z.H., Dai, L.R., Wang, R.H.: Building HMM based unit selection speech synthesis system using synthetic speech naturalness evaluation score. In Proc: IEEE International Conference on Acoustics, Speech and Signal Processing, pp. 5352–5355 (2011)

Morton, H., Gunson, N., Marshall, D., McInnes, F., Ayres, A., Jack, M.: Usability assessment of text-to-speech synthesis for additional detail in an automated telephone banking system. Comput. Speech Lang. 25(2), 341–362 (2011)

Panda, S.P., Nayak, A.K.: Spectral smoothening based waveform concatenation technique for speech quality enhancement in text to speech systems. In Proc: 3rd International Conference on Advanced Computing and Intelligent Engineering, vol. 1. Springer, pp. 425–432 (2020)

Yang, S., Wu, Z., Xie, L.: On the training of DNN-based average voice model for speech synthesis. In Proc: Asia-Pacific Signal and Information Processing Association Annual Summit and Conference (APSIPA), IEEE, pp. 1–6 (2016)

http://festvox.org. Accessed 18 Mar 2020

http://dhvani.sourceforge.net. Accessed 18 Mar 2020

http://espeak.sourceforge.net. Accessed 18 Mar 2020

http://hts-engine.sourceforge.net. Accessed 18 Mar 2020

http://hts.sp.nitech.ac.jp. Accessed 18 Mar 2020

http://www.censusindia.gov.in/Census_Data_2001/Census_Data_Online/Language/Statement1.aspx. Accessed 18 Mar 2020

http://www.censusindia.gov.in/Census_Data_2001/Census_Data_Online/Language/Statement4.aspx. Accessed 18 Mar 2020

https://www.ibm.com/watson/services/text-to-speech. Accessed 18 Mar 2020

https://cloud.google.com/text-to-speech. Accessed 18 Mar 2020

https://aws.amazon.com/polly. Accessed 18 Mar 2020

https://azure.microsoft.com/en-in/services/cognitive-services/text-to-speech. Accessed 18 Mar 2020

http://sanskrit.jnu.ac.in/samvacaka/index.jsp. Accessed 18 Mar 2020

https://indiantts.com. Accessed 18 Mar 2020

https://medialabasia.in/index.php/research/projects/empowerment-of-disabled. Accessed 18 Mar 2020

https://www.naturalreaders.com. Accessed 18 Mar 2020

https://www.linguatec.de/en/text-to-speech/voice-reader-home-15. Accessed 18 Mar 2020

https://www.captivoice.com/capti-site/public/entry/education. Accessed 18 Mar 2020

https://censusindia.gov.in/Census_And_You/disabled_population.aspx. Accessed 10 Mar 2020

https://anoopkunchukuttan.github.io/indic_nlp_library/. Accessed 18 Mar 2020

https://github.com/NVIDIA/tacotron2. Accessed 18 Mar 2020

http://cvit.iiit.ac.in/research/projects/cvit-projects/text-to-speech-dataset-for-indian-languages#. Accessed 18 Mar 2020

Publisher's Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Cite this article

Panda, S.P., Nayak, A.K. & Rai, S.C. A survey on speech synthesis techniques in Indian languages. Multimedia Systems 26, 453–478 (2020). https://doi.org/10.1007/s00530-020-00659-4

DOI: https://doi.org/10.1007/s00530-020-00659-4