Abstract

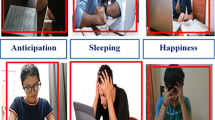

E-learning has transformed the delivery of education, supported by rapid advances in Internet technology. Compared with traditional face-to-face classroom education, however, e-learning lacks interpersonal and emotional interaction between students and teachers. Affect is a vital factor in learning that influences a person’s ability to solve problems, yet it has been largely ignored in existing e-learning systems. In this study, we propose a hybrid intelligence-aided approach to affect-sensitive e-learning. We have developed a system that incorporates affect recognition and intervention to improve the learning experience and help the learner become more engaged in the learning process. The system recognizes the learner’s affective states from multimodal information using hybrid intelligent approaches, including head pose estimation, eye gaze tracking, facial expression recognition, physiological signal processing and learning progress tracking. The multimodal information gathered is fused based on the proposed affect learning model. The system provides online interventions and adapts the online learning material to the learner’s current learning state based on pedagogical strategies. Experimental results show that interest and confusion are the most frequently occurring states when a learner interacts with a second-language learning system, and that those states are highly related to learning levels (easy versus difficult) and outcomes. Interventions are effective when a learner is disengaged or bored and have been shown to help learners become more engaged in learning.
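The recognize, fuse and intervene loop summarized above can be illustrated with a minimal sketch. The abstract does not specify the proposed affect learning model, so the code below substitutes a plain weighted average of per-modality state probabilities (decision-level fusion). The state labels, modality names, weights and the 0.5 threshold are all hypothetical choices made for illustration and are not taken from the paper.

```python
from typing import Dict

# Illustrative state labels (assumption): the abstract mentions interest,
# confusion and boredom but does not give the system's full label set.
STATES = ("interest", "confusion", "boredom")


def fuse(modality_scores: Dict[str, Dict[str, float]],
         weights: Dict[str, float]) -> Dict[str, float]:
    """Weighted average of per-modality state probabilities.

    This is a generic late-fusion stand-in, not the paper's model.
    """
    total = sum(weights[m] for m in modality_scores)
    fused = {state: 0.0 for state in STATES}
    for modality, scores in modality_scores.items():
        w = weights[modality] / total  # normalize weights to sum to 1
        for state in STATES:
            fused[state] += w * scores.get(state, 0.0)
    return fused


def choose_action(fused: Dict[str, float], threshold: float = 0.5) -> str:
    """Trigger an intervention when boredom dominates (hypothetical rule)."""
    top_state = max(fused, key=fused.get)
    if top_state == "boredom" and fused[top_state] >= threshold:
        return "intervene"
    return "continue"


if __name__ == "__main__":
    # Hypothetical classifier outputs for two of the modalities named above.
    scores = {
        "facial_expression": {"interest": 0.2, "confusion": 0.2, "boredom": 0.6},
        "eye_gaze": {"interest": 0.1, "confusion": 0.1, "boredom": 0.8},
    }
    weights = {"facial_expression": 1.0, "eye_gaze": 1.0}
    fused = fuse(scores, weights)
    print(fused, choose_action(fused))
```

Averaging at the decision level keeps each modality's classifier independent, so a missing sensor can simply be left out of `modality_scores`. A learned fusion model, as the abstract implies, would replace `fuse` without changing the surrounding loop.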

Acknowledgments

This work was supported by the National Key Technology Research and Development Program (No. 2013BAH72B01), research funds from the Ministry of Education and China Mobile (No. MCM20130601), research funds from the Humanities and Social Sciences Foundation of the Ministry of Education (No. 14YJAZH005), research funds of CCNU from the Colleges’ Basic Research and Operation of MOE (No. CCNU13B001), the Wuhan Chenguang Project (No. 2013070104010019), the Scientific Research Foundation for the Returned Overseas Chinese Scholars (No. (2013)693), the Young Foundation of Wenhua College (No. J0200540102) and the National Natural Science Foundation of China (No. 61272206).

About this article

Cite this article

Chen, J., Luo, N., Liu, Y. et al. A hybrid intelligence-aided approach to affect-sensitive e-learning. Computing 98, 215–233 (2016). https://doi.org/10.1007/s00607-014-0430-9

DOI: https://doi.org/10.1007/s00607-014-0430-9