Abstract

The query optimization phase within a database management system (DBMS) ostensibly finds the fastest query execution plan from a potentially large set of enumerated plans, all of which correctly compute the specified query. Occasionally the cost-based optimizer selects a slower plan, for a variety of reasons. We introduce the notion of empirical suboptimality of a query plan chosen by the DBMS, indicated by the existence of a query plan that performs more efficiently than the chosen plan, for the same query. From an engineering perspective, it is of critical importance to understand the prevalence of suboptimality and its causal factors. We examined the plans for thousands of queries run on four DBMSes, resulting in over a million query executions. We previously observed that the construct of empirical suboptimality prevalence positively correlated with the number of operators in the DBMS. An implication is that as operators are added to a DBMS, the prevalence of slower queries will grow. Through a novel experiment that examines the plans on the query/cardinality combinations, we present evidence for a previously unknown upper bound on the number of operators a DBMS may be able to support before performance suffers. We show that this upper bound may have already been reached.

Similar content being viewed by others

References

Albutiu, M.-C.: Scalable analytical query processing. PhD thesis, Technical University of Munich (2013)

Albutiu, M.-C., Kemper, A., Neumann, T.: Massively parallel sort-merge joins in main memory multi-core database systems. PVLDB 5(1), 1064–1075 (2012)

Babcock, B., Chaudhuri, S.: Towards a robust query optimizer: a principled and practical approach. In: Proceedings of the ACM SIGMOD International Conference on Management of Data, pp. 119–130. ACM, New York, NY, USA (2005)

Bayer, R., McCreight, E.: Organization and maintenance of large ordered indexes. Acta Inf. 1, 173–189 (1972)

Borovica-Gajic, R., Idreos, S., Ailamaki, A., Zukowski, M., Fraser, C.: Smooth scan: statistics-oblivious access paths. In: 2015 IEEE 31st International Conference on Data Engineering, pp. 315–326 (2015)

Bratbergsengen, K.: Hashing methods and relational algebra operations. In: Proceedings of the Very Large Database Conference, pp. 323–333 (1984)

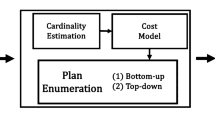

Chaudhuri, S.: An overview of query optimization in relational systems. In: Proceedings of the Seventeenth ACM SIGACT-SIGMOD-SIGART Symposium on Principles of Database Systems, pp. 34–43. ACM, New York, NY, USA (1998)

Comer, D.: The ubiquitous B-Tree. ACM Comput. Surv. 11, 121–137 (1979)

Currim, S., Snodgrass, R.T., Suh, Y.-K., Zhang, R.: DBMS metrology: measuring query time. ACM Trans. Database Syst. 42(1), 1–42 (2016)

Graefe, G.: Query evaluation techniques for large databases. ACM Comput. Surv. 25(2), 73–169 (1993)

Graefe, G.: New algorithms for join and grouping operations. Comput. Sci. Res. Dev. 27(1), 3–27 (2012)

Guo, R.B. , Daudjee, K.: Research challenges in deep reinforcement learning-based join query optimization. In: Proceedings of the Third International Workshop on Exploiting Artificial Intelligence Techniques for Data Management, aiDM’ 20. Association for Computing Machinery, New York, NY, USA (2020)

Harish, D., Darera, P.N., Haritsa, J.R.: On the production of anorexic plan diagrams. In: Proceedings of the 33rd International Conference on Very Large Data Bases, pp. 1081–1092. VLDB Endowment (2007)

Haritsa, J.R.: The Picasso database query optimizer visualizer. Proc. VLDB Endow. 3(1–2), 1517–1520 (2010)

Ibaraki, T., Kameda, T.: On the optimal nesting order for computing n-relatinal joins. ACM Trans. Database Syst. 9(3), 482–502 (1984)

ISO: ISO SQL:2008 international standard. ISO, International Organization for Standardization, Geneva, Switzerland (2008)

Kabra N, DeWitt DJ (1998) Efficient mid-query reoptimization of sub-optimal query execution plans. In: Proceedings of the 1998 ACM SIGMOD international conference on management of data, pp 106–117

Leis, V., Gubichev, A., Mirchev, A., Boncz, P., Kemper, A., Neumann, T.: How good are query optimizers, really? Proc. VLDB Endow. 9(3), 204–215 (2015)

Lohman G (2014) Is query optimization a “solved” problem? http://wp.sigmod.org/?p=1075

Marcus, R., Negi, P., Mao, M., Zhang, C., Alizadeh, M., Kraska, T., Papaemmanouil, O., Tatbul, N.: Neo: a learned query optimizer. Proc. VLDB Endow. 12, 1705–1718 (2019)

Melton, J.: Advanced SQL: 1999. Morgan Kaufmann, Burlington (2003)

Müller, I., Sanders, P., Lacurie, A., Lehner, W., Färber, F.: Cache-efficient aggregation: hashing is sorting. In: Proceedings of the 2015 ACM SIGMOD International Conference on Management of Data, pp. 1123–1136 (2015)

Ngo, H.Q., Porat, E., Ré, C., Rudra, A.: Worst-case optimal join algorithms. J. ACM 65(3), 1–40 (2018)

Reddy, N., Haritsa, J.R.: Analyzing plan diagrams of database query optimizers. In: Proceedings of the 31st International Conference on Very Large Data Bases, pp. 1228–1239. VLDB Endowment (2005)

Suh, Y., Snodgrass, R.T., Zhang, R.: AZDBLab: a laboratory information system for large-scale empirical DBMS studies. PVLDB 7(13), 1641–1644 (2014)

Winslett, M.: David DeWitt speaks out. SIGMOD Rec. 31(2), 50–62 (2002)

Acknowledgements

This research was supported in part by NSF Grants IIS-0639106, IIS-0415101, and EIA-0080123 and by the Basic Science Research Program through the National Research Foundation of Korea (NRF) funded by the Ministry of Education under Grants NRF-2018R1A6A1A03025109 and NRF-2021R1I1A3056669. We thank Rui Zhang for his help in initiating this research. We appreciate helpful discussions and insightful feedback from Melanie Brucks, Curtis Dyreson, Christian Jensen, David Maier, Thomas Matheson, Abhijit Saha, Arash Termehchy, and Marianne Winslett. Ricardo Carlos, Preetha Chatterjee, Pallavi Chilappagari, Jennifer Dempsey, David Gallup, Kevan Holdaway, Matthew Wong Johnson, Andrey Kvochko, Siou Lin, Adam Robertson, Lopamudra Sarangi, Linh Tran, Cheng Yi, and Man Zhang contributed to AZDBLAB and Eric Collins, Phil Kaslo, Tom Lowry, and John Luiten helped in constructing and maintaining our experimental instrument. Finally, we thank the reviewers for their perceptive comments and suggestions for improving the paper.

Author information

Authors and Affiliations

Corresponding author

Additional information

Publisher's Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Appendix A: Details on the experiments

Appendix A: Details on the experiments

Table 1 in Sect. 3.3 lists the run statistics of the four experiments used in this paper. In this appendix, we provide more detailed information on the experiments.

We first discuss the features shared between the first four data sets: “A” through “D.” As introduced in Sect. 2, the queries referenced tables ft_HT1, ft_HT2, ft_HT3, and ft_HT4. All four tables contain four columns, each of type integer. The specific values of the rows for all but the first column depend on the Presence of skewed data.

To generate the values for different values of skew, we start with a distribution without a tail: the uniform distribution: the values from 1 to 2 million (2M). We consider this to be a skew of 0 (no skew). At the other end of the spectrum is one in which all the values are identical, or a skew of 1.0.

We define skew as “the reciprocal of the number of distinct values,” so \(0 < { skew} \le 1\). For 2M distinct values, skew would be \(\frac{1}{2M}= 0.000005\), which is practically 0. For exactly one distinct value, skew would be 1.0. For two distinct values, skew would be 0.5. For ten distinct values, the skew would be 0.1.

We can generate the table of 2M rows by generating values sequentially from 1 to the number of distinct values. This creates a “span of values.” We repeat this for the second span if necessary, and on and on, until we have 2M values in all.

When varying the cardinality, we remove 10K values from the variable table and then copy those tuples to a new table to ensure that every page is as full as possible (that is, 100% load factor). This gives us a table of 1.99M tuples. (We then get a query plan for this table.) We repeat this removal process until the final cardinality reaches 10K.

The way we effect the 10K removal is as follows. The key idea is to remove individual spans until we’ve deleted 10K values. Since we don’t touch the remaining spans, the umber of distinct values does not change, and so the skew remains constant.

We use two values of skew: \(\frac{1}{2M}\) (termed tiny) and \(\frac{1}{10K}\) (termed small). For the former, we generate a single span of 2M values. To shorten the table, it makes no sense to remove this single span in its entirety. But we can remove 10K values from this single span. Note that this changes the skew to 1/1.99M, then eventually to 1/10K, which is still very close to zero (skew has changed from .0000005 to .0001). For the latter, we generate 2000 spans each of 10K tuples, and drop a span to reduce to 1.99M tuples, repeating.

This algorithm is used in the second to fourth columns, which for any row will have identical values. The first column holds a unique integer starting from 1 and going to 60K or 2M, for use in an optional primary key.

There was one version of the last three tables, for use with one DBMS , with cardinality 60K, and one version for the rest of the DBMSes, with cardinality 2M.

We generate 200 versions of ft_HT1, termed the variable table. For that DBMS , these version contain 300, 600, 900, 1200, \(\ldots \), 59,700, and 60,000 rows; for the other DBMSes, these versions contain 10K, 20K, 30K, \(\ldots \), 1990K, and 2M rows, as introduced in Sect. 2.

Data Set C is the simplest to describe: it specifies no primary key, has no duplicate rows, and has no skew (of course, for any of the four tables). Data Set A differs from Data Set C only in that there is skew. As explained above, we use two values of skew, tiny and small.

Data Set B is similar to Data Set A, adding the specification of the first column as the primary key. And Data Set D is similar to Data Set B, adding the specification that the other three columns should each be associated with a secondary index, only for each (one) column. We see the confirmatory experiment examined a much larger variation in data sets than the exploratory experiment.

Lastly, Data Set E comes from the Join Order Benchmark [18]. Data Set E specifies primary key, has no duplicate rows, has some skew, and adds a secondary index. We choose the title table in JOB as the variable table akin to that of Data Set A. That is because title is the only table that is referenced by all the 113 queries in JOB. We generate 100 versions of title; these versions contain 25283, \(\ldots \), 2528312 rows.

The following sets of queries were used in the four experiments.

- QSa::

-

A query set consisting of 100 queries generated over the four tables (on Data Set A)

- QSb::

-

A query set consisting of 900 queries additionally generated over the four tables (on Data Set A)

- QSc::

-

A query set consisting of the 390 queries drawn from QSa–QSb (on Data Set B)

- QSd::

-

A query set consisting of 110 new queries (on Data Set B)

- QSe::

-

A query set consisting of 100 queries without aggregates (on Data Set C)

- QSf::

-

A subquery query set consisting of 100 queries, each with a subquery (on Data Sets A, B, and D)

- QSg::

-

A subquery query set consisting of 100 queries, each with a subquery (on Data Set D)

- QSh::

-

A query set consisting of 100 queries drawn from QSc (on Data Set D)

- QSi::

-

A query set consisting of 30 queries without aggregates drawn from QSa (on Data Set A)

- QSj::

-

A query set consisting of 60 queries with aggregates drawn from QSa (on Data Set A)

- QSk::

-

A query set consisting of 30 queries drawn from QSa (on Data Set A)

- QSl::

-

A query set consisting of 30 queries drawn from QSf (on Data Set A)

- QSm::

-

A query set consisting of 30 queries drawn from QSc (on Data Set B)

- QSn::

-

A query set consisting of 60 queries (on Data Set D)

- QSo::

-

A query set consisting of 113 queries (on Data Set E) from the Join Order Benchmark [18].

Experiment 1 (termed Exploratory) used QSa and the first 100 queries from QSb, plus the first 100 (primary key) queries from QSc, for the four DBMSes, for a total of 1200 query instances.

Experiment 2 (Confirmatory) used QSb except the first 100 queries used in Experiment 1, along with QSc except the first 100 queries included in Experiment 1, QSd for primary key (for two runs, or 220 queries), QSe for no data skew, QSf for primary key and subquery, QSf for subquery, QSf and QSg and for primary key and secondary index and subquery, QSh for primary key and secondary index, all across the four DBMSes, for a total of 7640 query instances.

Experiment 3 (Confirmatory with Warm Cache) used QSi (for no primary key, no aggregates, no secondary index, some data skew, and no subquery), QSj for aggregates, QSk for no data skew, QSl for subquery, QSm for primary key, and QSn for primary key and secondary index, all across the four DBMSes, for a total of 960 query instances.

Experiment 4 (JOB) used QSo, for the four DBMSes, for a total of 452 query instances.

Rights and permissions

About this article

Cite this article

Snodgrass, R.T., Currim, S. & Suh, YK. Have query optimizers hit the wall?. The VLDB Journal 31, 181–200 (2022). https://doi.org/10.1007/s00778-021-00689-y

Received:

Revised:

Accepted:

Published:

Issue Date:

DOI: https://doi.org/10.1007/s00778-021-00689-y