Abstract

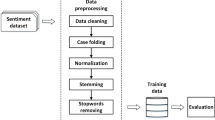

To improve the performance of Internet public sentiment analysis, a text sentiment analysis method that combines Latent Dirichlet Allocation (LDA) text representation with a convolutional neural network (CNN) is proposed. First, review texts are collected from the network and preprocessed. Then, the LDA topic model is used to train a latent semantic space representation (topic distribution) of the short texts, and a short-text feature vector representation is constructed from this topic distribution. Finally, a CNN with a gated recurrent unit (GRU) is used as the classifier. Given the input feature matrix, the GRU-CNN strengthens the relationships between words and between texts, so as to achieve highly accurate text classification. Simulation results show that this method can effectively improve the accuracy of text sentiment classification.
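The topic-distribution step can be illustrated with a small sketch. The abstract does not say how the LDA model is fitted, so the collapsed Gibbs sampler below is an assumption, as are the function name `lda_topic_features`, the hyperparameters `alpha` and `beta`, and the toy `reviews` corpus. It shows only how each short text might be mapped to a topic-distribution feature vector, not the GRU-CNN classifier.

```python
import random

def lda_topic_features(docs, num_topics=2, alpha=0.1, beta=0.01, iters=200, seed=0):
    """Return one topic-distribution vector per tokenised document.

    Hypothetical helper: fits LDA by collapsed Gibbs sampling (an assumed
    inference method; the source does not name one).
    """
    rng = random.Random(seed)
    vocab = sorted({w for doc in docs for w in doc})
    word_id = {w: i for i, w in enumerate(vocab)}
    vocab_size = len(vocab)

    # Count tables: document-topic, topic-word, and per-topic token totals.
    doc_topic = [[0] * num_topics for _ in docs]
    topic_word = [[0] * vocab_size for _ in range(num_topics)]
    topic_total = [0] * num_topics

    # Give every token a random initial topic and record it in the counts.
    assignments = []
    for d, doc in enumerate(docs):
        z_doc = []
        for w in doc:
            z = rng.randrange(num_topics)
            z_doc.append(z)
            doc_topic[d][z] += 1
            topic_word[z][word_id[w]] += 1
            topic_total[z] += 1
        assignments.append(z_doc)

    for _ in range(iters):
        for d, doc in enumerate(docs):
            for i, w in enumerate(doc):
                wid = word_id[w]
                z = assignments[d][i]
                # Remove the token's current assignment from the counts.
                doc_topic[d][z] -= 1
                topic_word[z][wid] -= 1
                topic_total[z] -= 1
                # Unnormalised conditional probability of each topic.
                weights = [
                    (doc_topic[d][k] + alpha)
                    * (topic_word[k][wid] + beta)
                    / (topic_total[k] + vocab_size * beta)
                    for k in range(num_topics)
                ]
                z = rng.choices(range(num_topics), weights=weights)[0]
                assignments[d][i] = z
                doc_topic[d][z] += 1
                topic_word[z][wid] += 1
                topic_total[z] += 1

    # Smoothed, normalised document-topic counts serve as feature vectors.
    features = []
    for d, doc in enumerate(docs):
        denom = len(doc) + num_topics * alpha
        features.append([(doc_topic[d][k] + alpha) / denom for k in range(num_topics)])
    return features

# Toy corpus (made up for illustration): four tokenised short reviews.
reviews = [
    "great phone great battery".split(),
    "battery life is great".split(),
    "terrible service slow delivery".split(),
    "slow delivery terrible packaging".split(),
]
features = lda_topic_features(reviews)
```

Each row of `features` is a probability vector over topics. In a pipeline like the one described, such rows would presumably be arranged into the feature matrix passed to the classifier, though the exact construction is not given in the abstract.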

Cite this article

Luo, Lx. Network text sentiment analysis method combining LDA text representation and GRU-CNN. Pers Ubiquit Comput 23, 405–412 (2019). https://doi.org/10.1007/s00779-018-1183-9

DOI: https://doi.org/10.1007/s00779-018-1183-9