Abstract

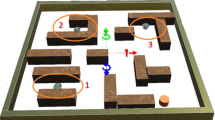

Reinforcement learning has been widely studied for robots, but learning time remains a concern. In reinforcement learning, sensor information is projected onto a state space. The robot learns the correspondence between each state and action in that space and determines the best one. As the state space grows with the number of sensors, the number of correspondences the robot must learn increases, so finding the best correspondence becomes time-consuming. In this study, we focus on how important each sensor is for the robot to perform a particular task. The relevant sensors differ from task to task, and a robot does not need all of its installed sensors to perform a given task. The state space should therefore consist only of the sensors that are essential to the task. With such a state space, a robot can learn correspondences faster than with a state space built from all installed sensors. We therefore propose a faster learning system in which the robot autonomously selects the sensors essential to a task and constructs a state space from only those sensors. We define the importance of a sensor for a task as the coefficient of correlation between the sensor's value and the reward in reinforcement learning. The robot determines the importance of each sensor from this correlation and reduces the state space accordingly, which lets it learn correspondences efficiently. We confirm the effectiveness of the proposed system through a simulation.
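To make the importance measure concrete, the following is a minimal sketch of correlation-based sensor selection, not the authors' implementation. It assumes a logged history of sensor readings and rewards, uses the absolute value of the Pearson correlation as the importance score, and keeps sensors whose score reaches a cutoff. The function names, the `threshold` value, and the uniform discretization used to count states are all hypothetical choices made for illustration; the abstract does not specify them.

```python
import numpy as np


def sensor_importance(sensor_log, reward_log):
    """Return |Pearson correlation| between each sensor column and the reward.

    sensor_log: array of shape (T, n_sensors), one row per time step.
    reward_log: array of shape (T,), reward observed at each time step.
    Taking the absolute value is an assumption of this sketch.
    """
    sensors = np.asarray(sensor_log, dtype=float)
    rewards = np.asarray(reward_log, dtype=float)
    s_centered = sensors - sensors.mean(axis=0)
    r_centered = rewards - rewards.mean()
    cov = s_centered.T @ r_centered
    denom = np.sqrt((s_centered ** 2).sum(axis=0) * (r_centered ** 2).sum())
    # A constant sensor (or constant reward) has no defined correlation;
    # this sketch treats it as importance 0.
    corr = np.divide(cov, denom, out=np.zeros_like(cov), where=denom > 0)
    return np.abs(corr)


def select_sensors(importance, threshold=0.3):
    """Indices of sensors whose importance reaches the (hypothetical) cutoff."""
    return [i for i, value in enumerate(importance) if value >= threshold]


def state_space_size(bins_per_sensor, selected):
    """Number of discrete states when only the selected sensors are used."""
    return int(np.prod([bins_per_sensor[i] for i in selected]))


# Synthetic example: only sensor 0 carries information about the reward.
rng = np.random.default_rng(0)
readings = rng.uniform(size=(500, 3))
rewards = readings[:, 0] + 0.05 * rng.normal(size=500)

importance = sensor_importance(readings, rewards)
selected = select_sensors(importance)
bins = [10, 10, 10]  # assumed discretization: 10 bins per sensor
print("importance:", importance)
print("selected sensors:", selected)
print("full state space:", state_space_size(bins, range(len(bins))))
print("reduced state space:", state_space_size(bins, selected))
```

Under these assumptions, the number of states is the product of the bin counts of the sensors that remain, so dropping a sensor divides the state count by that sensor's bin count. That is the sense in which sensor selection shrinks the set of state-action correspondences to be learned.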

Cite this article

Kishima, Y., Kurashige, K. Reduction of state space in reinforcement learning by sensor selection. Artif Life Robotics 18, 7–14 (2013). https://doi.org/10.1007/s10015-013-0092-2

DOI: https://doi.org/10.1007/s10015-013-0092-2