Abstract

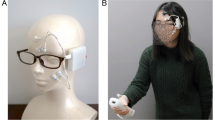

Because emotions strongly influence everyday processes such as perception, memory, and decision making, it has become necessary to consider user emotions in the field of human–computer interface (HCI). This is especially important for future HCI applications that are expected to work in concert with humans. In this paper, we introduce an approach for continuously estimating the emotions of video viewers over time from remote measurements captured with a red–green–blue (RGB) camera. Facial expressions and physiological responses such as heart rate and pupil diameter were measured by analyzing facial videos. In addition, to improve both the estimation accuracy of emotional states and the ease of measurement, we analyze the relationship between features obtained from brain waves and physiological signals acquired by non-contact measurement. For arousal, the Pearson's correlation coefficient (COR) between the subjective evaluation value and the continuous signal estimated from electroencephalograph (EEG) features, which is the conventional method, was 0.1391, whereas the COR between the subjective evaluation value and the signal estimated from the facial expression, pupil diameter, and heartbeat features obtained from the camera without contact was 0.1609. For valence, the COR between the subjective evaluation value and the signal estimated from EEG features was −0.0130, whereas the COR between the subjective evaluation value and the signal estimated from non-contact biometric features was 0.2879. In both cases, the proposed method improved the accuracy of continuous emotion estimation.
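As a minimal illustration of the evaluation metric named in the abstract, the sketch below computes Pearson's correlation coefficient between an estimated signal and a subjective rating signal. The function name `pearson_cor` and the sample values are hypothetical and chosen only for illustration; this is not the authors' implementation, and it assumes the two signals are already sampled at the same time points.

```python
import math


def pearson_cor(estimated, rated):
    """Pearson's correlation coefficient between two equal-length signals.

    `estimated` and `rated` are hypothetical names for an estimated
    arousal/valence trace and the matching subjective evaluation trace.
    """
    if len(estimated) != len(rated) or len(estimated) < 2:
        raise ValueError("signals must have equal length of at least 2")
    n = len(estimated)
    mean_x = sum(estimated) / n
    mean_y = sum(rated) / n
    # Sum of products of deviations (covariance up to a factor of 1/n).
    cov = sum((x - mean_x) * (y - mean_y) for x, y in zip(estimated, rated))
    # Sums of squared deviations (variances up to the same factor).
    var_x = sum((x - mean_x) ** 2 for x in estimated)
    var_y = sum((y - mean_y) ** 2 for y in rated)
    denom = math.sqrt(var_x * var_y)
    if denom == 0.0:
        return math.nan  # correlation is undefined for a constant signal
    return cov / denom


# Illustrative values only; these are not data from the study.
estimated_signal = [0.1, 0.3, 0.2, 0.5, 0.4]
subjective_rating = [0.0, 0.2, 0.3, 0.4, 0.6]
cor = pearson_cor(estimated_signal, subjective_rating)
```

A value near 1 indicates that the estimated signal rises and falls with the subjective rating, a value near 0 indicates little linear relationship, and a negative value indicates an inverse relationship.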

About this article

Cite this article

Nagasawa, T., Masui, K., Doi, H. et al. Continuous estimation of emotional change using multimodal responses from remotely measured biological information. Artif Life Robotics 27, 19–28 (2022). https://doi.org/10.1007/s10015-022-00734-1

DOI: https://doi.org/10.1007/s10015-022-00734-1