Abstract

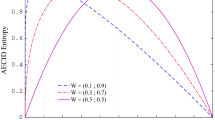

Most well-known classifiers predict balanced data sets well, but they often misclassify imbalanced ones. To overcome this problem, this research proposes a new impurity measure called minority entropy, which uses information from the minority class. It applies Shannon’s entropy within a local range of minority class instances on a selected numeric attribute. This range defines a subset of instances concentrated on the minority class, from which the decision tree is induced. A decision tree algorithm using minority entropy shows improvement in geometric mean and F-measure over C4.5, the distinct class-based splitting measure, asymmetric entropy, a top-down decision tree and the Hellinger distance decision tree on 24 imbalanced data sets from the UCI repository.
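The idea of computing Shannon's entropy only inside a local range of minority class instances can be illustrated with a minimal sketch. This is not the authors' exact formulation: it assumes the range is the closed interval between the smallest and largest minority class values on the chosen attribute, and the function names (`shannon_entropy`, `minority_range_subset`, `entropy_in_minority_range`) are hypothetical.

```python
import math
from collections import Counter


def shannon_entropy(labels):
    """Shannon entropy (in bits) of a sequence of class labels."""
    n = len(labels)
    if n == 0:
        return 0.0
    counts = Counter(labels).values()
    return -sum((c / n) * math.log2(c / n) for c in counts)


def minority_range_subset(values, labels, minority_label):
    """Keep only instances whose attribute value falls inside the
    minority range.

    Assumption (not taken from the paper): the range is the closed
    interval [min, max] of the minority class values on this attribute.
    """
    minority_values = [v for v, y in zip(values, labels) if y == minority_label]
    if not minority_values:
        return [], []
    low, high = min(minority_values), max(minority_values)
    kept = [(v, y) for v, y in zip(values, labels) if low <= v <= high]
    return [v for v, _ in kept], [y for _, y in kept]


def entropy_in_minority_range(values, labels, minority_label):
    """Shannon entropy computed only over instances in the minority range."""
    _, kept_labels = minority_range_subset(values, labels, minority_label)
    return shannon_entropy(kept_labels)


# Toy data: one numeric attribute, two minority instances out of eight.
values = [1, 2, 3, 4, 5, 6, 7, 8]
labels = ["maj", "maj", "min", "maj", "min", "maj", "maj", "maj"]

full = shannon_entropy(labels)
local = entropy_in_minority_range(values, labels, "min")
print(f"entropy over all instances:      {full:.4f}")
print(f"entropy inside minority range:   {local:.4f}")
```

In this toy example the minority range covers the values 3 to 5, so the majority instances outside that interval no longer dilute the impurity estimate. A split search restricted to this subset would then focus on separating the minority class from its nearby majority instances.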

Acknowledgments

We thank the Strategic Scholarships Fellowships Frontier Research Networks (specific for Southern region) for the Ph.D. Program Thai Doctoral Degree, from the Commission on Higher Education, Thailand, for its financial support.

About this article

Cite this article

Boonchuay, K., Sinapiromsaran, K. & Lursinsap, C. Decision tree induction based on minority entropy for the class imbalance problem. Pattern Anal Applic 20, 769–782 (2017). https://doi.org/10.1007/s10044-016-0533-3

DOI: https://doi.org/10.1007/s10044-016-0533-3