Abstract

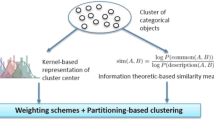

Clustering is to group similar data and find out hidden information about the characteristics of dataset for the further analysis. The concept of dissimilarity of objects is a decisive factor for good quality of results in clustering. When attributes of data are not just numerical but categorical and high dimensional, it is not simple to discriminate the dissimilarity of objects which have synonymous values or unimportant attributes. We suggest a method to quantify the level of difference between categorical values and to weigh the implicit influence of each attribute on constructing a particular cluster. Our method exploits distributional information of data correlated with each categorical value so that intrinsic relationship of values can be discovered. In addition, it measures significance of each attribute in constructing respective cluster dynamically. Experiments on real datasets show the propriety and effectiveness of the method, which improves the results considerably even with simple clustering algorithms. Our approach does not couple with a clustering algorithm tightly and can also be applied to various algorithms flexibly.

Similar content being viewed by others

Notes

An object is referred as an item in the whole dataset.

In this paper, the dissimilarity is considered as the opposite concept of similarity.

In representation of data vector, an attribute is considered as a dimension of space.

The distance between two objects \(x=(x_{1},x_{2},\ldots ,x_{n})\) and \(y=(y_{1},y_{2},\ldots ,y_{n})\) is defined with norm, \(r\), as \(\left(\sum _{i=1}^{n}\mid x_{i}-y_{i}\mid ^{r}\right)^{\frac{1}{r}}\), especially, 2-norm distance is known as Euclidean distance.

The Chi-square test is a statistical test procedure to understand if a relationship exists between two categorical variables.

Simple matching coefficient is defined as \(\frac{\text{ the} \text{ number} \text{ of} \text{ attributes} \text{ with} \text{ matching} \text{ values}}{\text{ the} \text{ number} \text{ of} \text{ total} \text{ attributes}}\).

Jaccard coefficient is defined as \(\frac{\text{ the} \text{ number} \text{ of} \text{ matching} \text{ presences}}{\text{ the} \text{ number} \text{ of} \text{ attributes} \text{ not} \text{ involved} \text{ in} \text{ null} \text{ matches}}\).

The values are the model numbers of the 2012 Mercedes \(\text{ Benz}^{(\mathrm TM )}\). CL550 and G550 are available at about $110,000 while E550 and ML550 are at about $60,000 in 2012 (http://www.mbusa.com/mercedes/).

The “Mushroom” dataset of the UCI machine learning repository (http://archive.ics.uci.edu/ml/index.html).

References

Ahmad A, Dey L (2007) A method to compute distance between two categorical values of same attribute in unsupervised learning for categorical dataset. Pattern Recognit Lett 28(1):110–118

Andritsos P, Tsaparas P, Miller RJ, Sevcik KC (2004) LIMBO: scalable clustering of categorical data. In: EDBT, LNCS, Springer, vol 2992, pp 123–146

Barbará D, Li Y, Couto J (2002) COOLCAT: an entropy-based algorithm for categorical clustering. In: Proceedings of the 11th CIKM. ACM Press, New York, pp 582–589

Beyer K, Goldstein J, Ramakrishnan R, Shaft U (1999) When is nearest neighbor meaningful. In: International conference on database theory, pp 217–235

Boriah S, Chandola V, Kumar V (2008) Similarity measures for categorical data: a comparative evaluation. In: SDM, SIAM, pp 243–254

Cost S, Salzberg S (1993) A weighted nearest neighbor algorithm for learning with symbolic features. Mach Learn 10:57–78

Ding CHQ, He X, Zha H, Simon HD (2002) Adaptive dimension reduction for clustering high dimensional data. In: ICDM, IEEE Computer Society, pp 147–154

Ganti V, Gehrke J, Ramakrishnan R (1999) Cactus-clustering categorical data using summaries. In: Proceedings of ACM SIGKDD, pp 73–83

Gomez JC, Boiy E, Moens MF (2012) Highly discriminative statistical features for email classification. Knowl Inf Syst 31(1):23–53

Guha S, Rastogi R, Shim K (2000) Rock: a robust clustering algorithm for categorical attributes. Inf Syst 25(5):345–366

Huang Z (1997) A fast clustering algorithm to cluster very large categorical data sets in data mining. In: In research issues on data mining and knowledge discovery, pp 1–8

Keßler C (2012) What is the difference? A cognitive dissimilarity measure for information retrieval result sets. Knowl Inf Syst 30(2):319–340

Kriegel HP, Kröger P, Zimek A (2009) Clustering high-dimensional data: a survey on subspace clustering, pattern-based clustering, and correlation clustering. ACM Trans Knowl Discov Data 3(1):1–58

Tan PN, Steinbach M, Kumar V (2005) Introduction to data mining. Addison-Wesley, Reading

Weber R, Schek HJ, Blott S (1998) A quantitative analysis and performance study for similarity-search methods in high-dimensional spaces. In: Proceedings of the 24th VLDB. Morgan Kaufmann, San Francisco, pp 194–205

Yu L, Liu H (2003) Feature selection for high-dimensional data: a fast correlation-based filter solution. In: ICML, AAAI Press

Zhang Y, Fu AWC., Cai CH, Heng PA (2000) Clustering categorical data. In: ICDE. IEEE Computer Society, San Diego, pp 305

Author information

Authors and Affiliations

Corresponding author

Rights and permissions

About this article

Cite this article

Lee, J., Lee, YJ. An effective dissimilarity measure for clustering of high-dimensional categorical data. Knowl Inf Syst 38, 743–757 (2014). https://doi.org/10.1007/s10115-012-0599-1

Received:

Revised:

Accepted:

Published:

Issue Date:

DOI: https://doi.org/10.1007/s10115-012-0599-1