Abstract

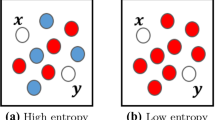

Nearest neighbor search is a core process in many data mining algorithms. Finding reliable closest matches of a test instance is still a challenging task, because the effectiveness of many general-purpose distance measures such as the \(\ell _p\)-norm decreases as the number of dimensions increases. Their performance also varies significantly across data distributions. This is mainly because they compute the distance between two instances solely from their geometric positions in the feature space; the data distribution has no influence on the measure. This paper presents a simple data-dependent general-purpose dissimilarity measure called ‘\(m_p\)-dissimilarity’. Rather than relying on geometric distance, it measures the dissimilarity between two instances as the probability mass in a region that encloses the two instances in every dimension. It deems two instances in a sparse region to be more similar than two instances with the same inter-point geometric distance in a dense region. Our empirical results in k-NN classification and content-based multimedia information retrieval tasks show that the proposed \(m_p\)-dissimilarity measure produces better task-specific performance than widely used general-purpose distance measures such as the \(\ell _p\)-norm and cosine distance across a wide range of moderate- to high-dimensional data sets with continuous-only, discrete-only, and mixed attributes.

Notes

We used dissimilarity so that it is consistent with other distance or dissimilarity measures.

We used sufficiently large b in order to discriminate instances well.

Available with WEKA software http://www.cs.waikato.ac.nz/ml/weka/.

Another approach to assigning ranks in the case of ties is to assign the average rank: if n tied values would occupy ranks \(r, r+1, \ldots , r+n-1\), each receives \(\frac{r+(r+1)+\cdots +(r+n-1)}{n} = r+\frac{n-1}{2}\).

We used the implementation based on range search, rather than the approximation using binning, to keep the formulation similar to that of \(\ell _p\) with rank transformation.
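The average-rank rule for ties mentioned in the notes can be illustrated with a short routine. This is a minimal sketch, not the code used for the experiments; `average_ranks` is a hypothetical helper. A block of n tied values that would occupy ranks r, ..., r+n-1 receives the rank r + (n-1)/2.

```python
def average_ranks(values):
    """Assign 1-based ranks in ascending order; tied values share the
    average of the ranks they would otherwise occupy."""
    order = sorted(range(len(values)), key=lambda j: values[j])
    ranks = [0.0] * len(values)
    start = 0
    while start < len(order):
        # Extend the block while the next sorted value ties with the first one.
        end = start
        while end + 1 < len(order) and values[order[end + 1]] == values[order[start]]:
            end += 1
        r = start + 1              # first rank occupied by the tied block
        n = end - start + 1        # number of tied values in the block
        avg = r + (n - 1) / 2      # equals (r + (r+1) + ... + (r+n-1)) / n
        for j in order[start:end + 1]:
            ranks[j] = avg
        start = end + 1
    return ranks

print(average_ranks([10, 20, 20, 20, 30]))  # [1.0, 3.0, 3.0, 3.0, 5.0]
```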

References

Aggarwal CC, Hinneburg A, Keim DA (2001) On the surprising behavior of distance metrics in high dimensional space. In: Proceedings of the 8th international conference on database theory. Springer, Berlin, pp. 420–434

Ariyaratne HB, Zhang D (2012) A novel automatic hierachical approach to music genre classification. In: Proceedings of the 2012 IEEE international conference on multimedia and expo workshops, IEEE Computer Society, Washington DC, USA, pp. 564–569

Aryal S, Ting KM, Haffari G, Washio T (2014) Mp-dissimilarity: a data dependent dissimilarity measure. In: Proceedings of the IEEE international conference on data mining. IEEE, pp. 707–712

Bache K, Lichman M (2013) UCI machine learning repository, http://archive.ics.uci.edu/ml. University of California, Irvine, School of Information and Computer Sciences

Bellet A, Habrard A, Sebban M (2013) A survey on metric learning for feature vectors and structured data, Technical Report, arXiv:1306.6709

Beyer KS, Goldstein J, Ramakrishnan R, Shaft U (1999) When Is “Nearest Neighbor” meaningful? In: Proceedings of the 7th international conference on database theory. Springer, London, pp. 217–235

Boriah S, Chandola V, Kumar V (2008) Similarity measures for categorical data: a comparative evaluation. In: Proceedings of the eighth SIAM international conference on data mining, pp. 243–254

Cardoso-Cachopo A (2007) Improving methods for single-label text categorization, PhD thesis, Instituto Superior Tecnico, Technical University of Lisbon, Lisbon, Portugal

Conover WJ, Iman RL (1981) Rank transformations as a bridge between parametric and nonparametric statistics. Am Stat 35(3):124–129

Deza MM, Deza E (2009) Encyclopedia of distances. Springer, Berlin

Fodor I (2002) A survey of dimension reduction techniques. Technical Report UCRL-ID-148494, Lawrence Livermore National Laboratory

François D, Wertz V, Verleysen M (2007) The concentration of fractional distances. IEEE Trans Knowl Data Eng 19(7):873–886

Hall M, Frank E, Holmes G, Pfahringer B, Reutemann P, Witten IH (2009) The weka data mining software: an update. SIGKDD Explor Newsl 11(1):10–18

Han E-H, Karypis G (2000) Centroid-based document classification: Analysis and experimental results. In: Proceedings of the 4th European conference on principles of data mining and knowledge discovery. Springer, London, pp. 424–431

Indyk P, Motwani R (1998) Approximate nearest neighbors: towards removing the curse of dimensionality. In: Proceedings of the thirtieth annual ACM symposium on theory of computing, STOC ’98. ACM, New York, pp. 604–613

Jolliffe I (2005) Principal component analysis. Wiley Online Library, Hoboken

Krumhansl CL (1978) Concerning the applicability of geometric models to similarity data: the interrelationship between similarity and spatial density. Psychol Rev 85(5):445–463

Kulis B (2013) Metric learning: a survey. Found Trends Mach Learn 5(4):287–364

Lan M, Tan CL, Su J, Lu Y (2009) Supervised and traditional term weighting methods for automatic text categorization. IEEE Trans Pattern Anal Mach Intell 31(4):721–735

Lin D (1998) An information-theoretic definition of similarity. In: Proceedings of the fifteenth international conference on machine learning. Morgan Kaufmann Publishers Inc., San Francisco, pp. 296–304

Lundell J, Ventura D (2007) A data-dependent distance measure for transductive instance-based learning. In: Proceedings of the IEEE international conference on systems, man and cybernetics, pp. 2825–2830

Mahalanobis PC (1936) On the generalized distance in statistics. Proc Natl Inst Sci India 2:49–55

Minka TP (2003) The ‘summation hack’ as an outlier model, http://research.microsoft.com/en-us/um/people/minka/papers/minka-summation.pdf. Microsoft Research

Radovanović M, Nanopoulos A, Ivanović M (2010) Hubs in space: popular nearest neighbors in high-dimensional data. J Mach Learn Res 11:2487–2531

Salton G, Buckley C (1988) Term-weighting approaches in automatic text retrieval. Inf Process Manage 24(5):513–523

Salton G, McGill MJ (1986) Introduction to modern information retrieval. McGraw-Hill Inc, New York

Schleif F-M, Tino P (2015) Indefinite proximity learning: a review. Neural Comput 27(10):2039–2096

Schneider P, Bunte K, Stiekema H, Hammer B, Villmann T, Biehl M (2010) Regularization in matrix relevance learning. IEEE Trans Neural Netw 21(5):831–840

Tanimoto TT (1958) Mathematical theory of classification and prediction, International Business Machines Corp

Tuytelaars T, Lampert C, Blaschko MB, Buntine W (2010) Unsupervised object discovery: a comparison. Int J Comput Vision 88(2):284–302

Tversky A (1977) Features of similarity. Psychol Rev 84(4):327–352

Wang F, Sun J (2015) Survey on distance metric learning and dimensionality reduction in data mining. Data Min Knowl Disc 29(2):534–564

Yang L (2006) Distance metric learning: a comprehensive survey, Technical report, Michigan State University

Zhou G-T, Ting KM, Liu FT, Yin Y (2012) Relevance feature mapping for content-based multimedia information retrieval. Pattern Recogn 45(4):1707–1720

Acknowledgements

A preliminary version of this paper was published in the Proceedings of the IEEE International Conference on Data Mining (ICDM) 2014 [3]. We would like to thank the anonymous reviewers for their useful comments. Kai Ming Ting is partially supported by the Air Force Office of Scientific Research (AFOSR), Asian Office of Aerospace Research and Development (AOARD) under Award Number FA2386-13-1-4043. Takashi Washio is partially supported by the AFOSR AOARD Award Number 15IOA008-154005 and JSPS KAKENHI Grant Number 2524003.

Appendices

Appendix 1: Probabilistic interpretation of \(m_p\)

The formulation of \(m_p(\mathbf{x}, \mathbf{y})\) (Eq. 8) has a probabilistic interpretation. The simplest form of data-dependent dissimilarity measure is to define an M-dimensional region \(R(\mathbf{x}, \mathbf{y})\) that encloses \(\mathbf{x}\) and \(\mathbf{y}\), and to estimate the probability that a point \(\mathbf{t}\) drawn at random from the data distribution \(\phi (\mathbf{x})\) falls in \(R(\mathbf{x}, \mathbf{y})\), i.e., \(P(\mathbf{t} \in R(\mathbf{x},\mathbf{y})|\phi (\mathbf{x}))\). Let \(R(\mathbf{x}, \mathbf{y})\) have extent \(R_i(\mathbf{x}, \mathbf{y})\) in dimension i. Assuming that the dimensions are independent, \(P(\mathbf{t} \in R(\mathbf{x},\mathbf{y})|\phi (\mathbf{x}))\) can be approximated as:

\[ P(\mathbf{t} \in R(\mathbf{x},\mathbf{y})|\phi (\mathbf{x})) \approx \prod _{i=1}^M P(t_i\in R_i(\mathbf{x},\mathbf{y})|\phi _i(\mathbf{x})) \tag{14} \]

where \(P(t_i\in R_i(\mathbf{x},\mathbf{y})|\phi _i(\mathbf{x}))\) is the probability of \(t_i\) falling in \(R_i(\mathbf{x},\mathbf{y})\) for dimension i.

The approximation in Eq. 14 is sensitive to outliers: a single dimension with near-zero probability drives the whole product towards zero. An approximation that is tolerant to outliers can be obtained by replacing the product with a summation [23]. The sum-based approximation relates to the probability of \(\mathbf{t}\) in Eq. 14 under the following outlier model. Consider a data generation process in which, to sample \(t_i\), a coin that lands heads with probability \((1-\epsilon )\) is flipped. If the coin lands heads, \(t_i\) is drawn from the data distribution in dimension i, \(\phi _i(\mathbf{x})\), where the probability of sampling \(t_i\) is \(P_i(t_i|\phi _i(\mathbf{x}))\); otherwise, it is drawn from a uniform distribution with probability 1/A, where A is a constant.

Lemma 1 [23]

Under the data generation process described above, the probability of a data point \(P'(\cdot )\) can be approximated as

\[ P'(\mathbf{t}) \approx C_1 + C_2 \sum _{i=1}^M P_i(t_i|\phi _i(\mathbf{x})) \]

where \(C_1\) and \(C_2\) are constants.

Proof

Under the outlier model, the probability of generating the value of the \(i^{th}\) dimension, \(t_i\), is

\[ P'(t_i) = (1-\epsilon )\, P_i(t_i|\phi _i(\mathbf{x})) + \frac{\epsilon }{A} \]

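We assume that each dimension is generated independently; hence

\[ P'(\mathbf{t}) = \prod _{i=1}^M \left[ (1-\epsilon )\, P_i(t_i|\phi _i(\mathbf{x})) + \frac{\epsilon }{A} \right] = \left( \frac{\epsilon }{A}\right) ^M + \left( \frac{\epsilon }{A}\right) ^{M-1} (1-\epsilon ) \sum _{i=1}^M P_i(t_i|\phi _i(\mathbf{x})) + \cdots \]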

When the probability of generating \(t_i\) from the uniform distribution (the outlier component) is high, i.e., \(\epsilon \) is close to 1, the higher-order terms, which contain factors \((1-\epsilon )^2\) or higher powers, are negligible and only the first two terms matter. Setting \(C_1 := (\epsilon /A)^M\) and \(C_2 := (\epsilon /A)^{M-1} (1-\epsilon )\), the lemma follows. \(\square \)
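The two-term truncation in the proof can be checked numerically. The sketch below is illustrative only: it assumes A = 1 and uses randomly drawn, hypothetical per-dimension values for \(P_i(t_i|\phi _i(\mathbf{x}))\). It compares the exact product with \(C_1 + C_2 \sum _i P_i\); the relative error should shrink as \(\epsilon \) approaches 1.

```python
import math
import random

def exact_and_approx(p_vals, eps, A=1.0):
    """Exact probability under the outlier model (product over dimensions)
    and the two-term approximation C1 + C2 * sum_i P_i from Lemma 1."""
    M = len(p_vals)
    exact = math.prod((1 - eps) * p + eps / A for p in p_vals)
    C1 = (eps / A) ** M
    C2 = (eps / A) ** (M - 1) * (1 - eps)
    return exact, C1 + C2 * sum(p_vals)

random.seed(0)
p_vals = [random.random() for _ in range(10)]   # hypothetical P_i values, M = 10
for eps in (0.5, 0.9, 0.99):
    exact, approx = exact_and_approx(p_vals, eps)
    print(f"eps={eps}: relative error {abs(exact - approx) / exact:.1e}")
```

Because every dropped term is non-negative, the approximation never exceeds the exact value; it only loses accuracy as \(1-\epsilon \) grows.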

In addition to Minka's approximation [23], we propose to further reduce the chance of \(t_i\) being drawn from the outlier model by sampling from \(\phi _i(\mathbf{x})^p\), \(p>1\), when the coin lands heads in the data generation process described above. The probability of sampling \(t_i\) from \(\phi _i(\mathbf{x})^p\) is \(\frac{P(t_i|\phi _i(\mathbf{x}))^p}{Z_{i,p}}\), where \(P(\cdot )^p\) is the probability of a random event occurring in p successive trials and \(Z_{i,p}\) is the normalization constant that ensures the total probability sums to 1 in the \(i^{th}\) dimension.
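To see what sampling from \(\phi _i(\mathbf{x})^p\) does to a distribution, the sketch below uses a hypothetical discrete stand-in for \(\phi _i\) over four values, raises each probability to the power p and renormalizes by the constant \(Z_{i,p}\). As p grows, mass moves towards high-probability values and away from the tail.

```python
def powered(dist, p):
    """Distribution proportional to dist**p, i.e. P(t)^p / Z_p."""
    z = sum(q ** p for q in dist)          # normalization constant Z_p
    return [q ** p / z for q in dist]

phi = [0.5, 0.3, 0.15, 0.05]               # hypothetical discrete phi_i over four values
for p in (1, 2, 4):
    print(p, [round(q, 3) for q in powered(phi, p)])
```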

Lemma 2

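Under the data generation process described above, in which \(t_i\) is sampled from \(\phi _i(\mathbf{x})^p\), the probability of a data point \(P''(\cdot )\) can be approximated as

\[ P''(\mathbf{t}) \approx C_1 + C_2 \sum _{i=1}^M \frac{P_i(t_i|\phi _i(\mathbf{x}))^p}{Z_{i,p}} \]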

where \(C_1\), \(C_2\), and \(\{Z_{i,p}\}_{i=1}^M\) are constants.

Proof

This follows from Lemma 1 by drawing \(t_i\) from \(\phi _i(\mathbf{x})^p\), \(p>1\), when the coin lands heads, which replaces \(P_i(t_i|\phi _i(\mathbf{x}))\) in Lemma 1 with \(P_i(t_i|\phi _i(\mathbf{x}))^p/Z_{i,p}\). \(\square \)

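As a result of Lemma 2 (i.e., under the outlier-tolerant model), \(P(\mathbf{t} \in R(\mathbf{x},\mathbf{y}))\) can be approximated as:

\[ P(\mathbf{t} \in R(\mathbf{x},\mathbf{y})) \approx C_1 + C_2 \sum _{i=1}^M \frac{P_i(t_i \in R_i(\mathbf{x},\mathbf{y}))^p}{Z_{i,p}} \tag{16} \]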

Note that \(P(\mathbf{t} \in R(\mathbf{x},\mathbf{y}))\) is a data-dependent dissimilarity measure for \(\mathbf{x}\) and \(\mathbf{y}\). All the constants on the right-hand side of Eq. 16 are independent of \(\mathbf{x}\) and \(\mathbf{y}\) and act only as scaling factors of the dissimilarity measure. In particular, to find the nearest neighbor of \(\mathbf{x}\) among a collection of data instances, the only important term in the measure is \(\sum _{i=1}^M P_i(t_i \in R_i(\mathbf{x},\mathbf{y}))^p\); the constants can be ignored because they do not change the ranking of data points. Hence, ignoring the constants in Eq. 16, \(m_p(\mathbf{x}, \mathbf{y})\) can be expressed as the following rescaled version:

\[ m_p(\mathbf{x},\mathbf{y}) = \left( \frac{1}{M} \sum _{i=1}^M P_i(t_i \in R_i(\mathbf{x},\mathbf{y}))^p \right) ^{\frac{1}{p}} \tag{17} \]

where the outer power \(\frac{1}{p}\) is a monotone rescaling and \(\frac{1}{M}\) is a constant; neither changes the ranking of data points.

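In practice, \(P_i\left( t_i\in R_i(\mathbf{x}, \mathbf{y})\right) \) can be estimated from D as the fraction of instances whose i-th value lies between \(x_i\) and \(y_i\):

\[ P_i\left( t_i\in R_i(\mathbf{x}, \mathbf{y})\right) \approx \frac{|\{\mathbf{z}\in D : \min (x_i,y_i)\le z_i\le \max (x_i,y_i)\}|}{|D|} \tag{18} \]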

Hence, Eqs. 17 and 18 lead to \(m_p\) defined in Eq. 8.
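Eqs. 17 and 18 translate into a short routine. The sketch below is an illustration under stated assumptions, not the authors' implementation: it assumes numeric attributes only, takes \(R_i(\mathbf{x},\mathbf{y}) = [\min (x_i,y_i), \max (x_i,y_i)]\), and counts reference points by binary search on sorted columns (a range search, in line with the note on implementation). The class name `MpDissimilarity` is hypothetical. The usage example contrasts two pairs with the same geometric gap of 0.2, one in a dense region and one in a sparse region.

```python
import bisect

class MpDissimilarity:
    """Sketch of m_p: per dimension, the mass between x_i and y_i is the fraction
    of reference points whose i-th value lies in [min(x_i, y_i), max(x_i, y_i)];
    the masses are combined with a power mean of order p (Eq. 17)."""

    def __init__(self, data, p=2.0):
        self.n = len(data)
        self.m = len(data[0])
        self.p = p
        # One sorted column per dimension gives O(log n) range counting.
        self.columns = [sorted(row[i] for row in data) for i in range(self.m)]

    def _mass(self, i, a, b):
        """Estimate of P_i(t_i in R_i(x, y)) as in Eq. 18."""
        lo, hi = min(a, b), max(a, b)
        col = self.columns[i]
        return (bisect.bisect_right(col, hi) - bisect.bisect_left(col, lo)) / self.n

    def __call__(self, x, y):
        total = sum(self._mass(i, x[i], y[i]) ** self.p for i in range(self.m))
        return (total / self.m) ** (1.0 / self.p)

# One-dimensional reference data: dense on [0, 0.5], sparse beyond it.
D = [[v] for v in (0.0, 0.1, 0.2, 0.3, 0.4, 0.5, 2.0, 3.0)]
mp = MpDissimilarity(D, p=2.0)
dense = mp([0.1], [0.3])    # 3 of 8 reference values fall in [0.1, 0.3]
sparse = mp([2.0], [2.2])   # 1 of 8 reference values falls in [2.0, 2.2]
print(round(dense, 3), round(sparse, 3))   # 0.375 0.125
```

The pair in the sparse region receives the smaller dissimilarity, matching the behavior described in the abstract.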

Appendix 2: Analysis of concentration and hubness

To examine the concentration and hubness of the three dissimilarity measures \(m_2\), \(\ell _2\) and \(d_{cos}\) under different data distributions as the number of dimensions increases, we used synthetic data sets with uniform (each dimension uniformly distributed in [0, 1]) and normal (each dimension normally distributed with zero mean and unit variance) distributions, with \(M=10\) and \(M=200\). Feature vectors were then normalized to the unit range in each dimension.

2.1 Concentration

The relative contrast between the nearest and farthest neighbors is computed for all \(N=1000\) instances in each data set using \(m_2\), \(\ell _2\) and \(d_{cos}\). Figure 5 shows the relative contrast of each instance under the uniform and normal distributions with \(M=10\) and \(M=200\).
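Relative contrast is not restated in this appendix. The sketch below assumes a common definition from the concentration literature, \((d_{max} - d_{min})/d_{min}\) computed over all other instances, and uses \(\ell _2\) only; `relative_contrast` and `l2` are hypothetical helpers, and any other measure (such as \(m_2\) or \(d_{cos}\)) can be passed as `dist`. It reproduces the setup of uniform data with \(N=1000\) for \(M=10\) and \(M=200\), not the paper's exact numbers.

```python
import numpy as np

def relative_contrast(X, dist):
    """Relative contrast of each instance: (d_max - d_min) / d_min over all
    other instances. `dist(x, Y)` returns distances from x to every row of Y.
    Assumes no exact duplicates, so that d_min > 0."""
    rc = np.empty(X.shape[0])
    for j in range(X.shape[0]):
        d = np.delete(dist(X[j], X), j)      # drop the self-distance
        rc[j] = (d.max() - d.min()) / d.min()
    return rc

def l2(x, Y):
    """Euclidean distance from x to each row of Y."""
    return np.sqrt(((Y - x) ** 2).sum(axis=1))

rng = np.random.default_rng(0)
for M in (10, 200):
    X = rng.uniform(0.0, 1.0, size=(1000, M))   # uniform synthetic data, N = 1000
    print(M, float(np.median(relative_contrast(X, l2))))
```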

The relative contrast of all three measures decreased substantially (note that the y-axes in Fig. 5 have different scales) when the number of dimensions was increased from \(M=10\) to \(M=200\) in both distributions. Interestingly, \(m_2\) has the lowest relative contrast in both distributions with \(M=10\) and \(M=200\), and \(d_{cos}\) has the highest in all cases. The relative contrasts of \(\ell _2\) and \(m_2\) are almost the same, except under the normal distribution with \(M=200\), where the relative contrast of \(\ell _2\) is slightly higher than that of \(m_2\) for many instances.

This suggests that \(m_2\) is more concentrated than \(\ell _2\) and \(d_{cos}\). We observed the same pattern in real-world data sets.

2.2 Hubness

To examine the hubness phenomenon, we computed the 5-occurrence of each instance \(\mathbf{x}\in D\), i.e., \(O_5(\mathbf{x}) = |\{\mathbf{y}: \mathbf{x}\in N_5(\mathbf{y})\}|\), where \(N_5(\mathbf{y})\) is the set of 5-NN of \(\mathbf{y}\). Figure 6 plots the \(O_5\) distribution for each measure (\(m_2\), \(\ell _2\) and \(d_{cos}\)) in all four synthetic data sets.
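The definition of \(O_k\) amounts to counting how often each instance appears in the other instances' k-NN lists. The sketch below assumes Euclidean distance (\(\ell _2\)) and a hypothetical helper `k_occurrence`; substituting \(m_2\) or \(d_{cos}\) only requires replacing the distance matrix. The demo mimics the normal, \(M=200\) setting, and its counts are not expected to match the figures reported in this appendix.

```python
import numpy as np

def k_occurrence(X, k=5):
    """O_k(x) = |{y : x in N_k(y)}| under Euclidean distance. Each point is
    excluded from its own neighbor list; ties are broken by index (stable sort)."""
    sq = (X ** 2).sum(axis=1)
    d2 = sq[:, None] + sq[None, :] - 2.0 * X @ X.T   # squared pairwise distances
    np.fill_diagonal(d2, np.inf)                      # a point is not its own neighbor
    knn = np.argsort(d2, axis=1, kind="stable")[:, :k]  # row j holds N_k(y_j)
    return np.bincount(knn.ravel(), minlength=X.shape[0])

rng = np.random.default_rng(0)
X = rng.normal(size=(1000, 200))
X = (X - X.min(axis=0)) / (X.max(axis=0) - X.min(axis=0))   # unit range per dimension
o5 = k_occurrence(X)
print("instances with O_5 = 0:", int((o5 == 0).sum()), "max O_5:", int(o5.max()))
```

Since every instance contributes exactly k neighbors, the \(O_k\) values always sum to \(N \cdot k\); a skewed distribution therefore means a few hubs absorb occurrences that many "anti-hubs" never receive.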

The \(O_5\) distributions of all three measures become more skewed when the number of dimensions is increased from \(M=10\) to \(M=200\) in both distributions. Interestingly, the \(O_5\) distributions of \(m_2\) under the uniform and normal distributions are nearly identical for both \(M=10\) and \(M=200\), whereas those of \(\ell _2\) and \(d_{cos}\) under the normal distribution are more skewed than under the uniform distribution for both \(M=10\) and \(M=200\). The \(O_5\) distributions of \(m_2\) and \(\ell _2\) under the uniform distribution are similar for both \(M=10\) and \(M=200\) because \(m_2\) is approximately proportional to \(\ell _2\) under the uniform distribution (also reflected in Fig. 2a). Under the normal distribution with \(M=200\), the \(O_5\) distribution of \(m_2\) is less skewed than those of \(\ell _2\) and \(d_{cos}\): there are 361 and 348 (out of 1000) instances with \(O_5=0\) (i.e., instances that do not occur in the 5-NN set of any other instance) for \(\ell _2\) and \(d_{cos}\), respectively, but only 161 for \(m_2\). Similarly, the most popular nearest neighbors under \(\ell _2\) and \(d_{cos}\) have \(O_5=146\) and 152, respectively, whereas the most popular one under \(m_2\) has \(O_5=69\).
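The proportionality under the uniform distribution can be seen directly: when reference data are uniform on [0, 1], the expected fraction of points in \([\min (x_i,y_i), \max (x_i,y_i)]\) is \(|x_i - y_i|\), so \(m_2(\mathbf{x},\mathbf{y}) \approx \ell _2(\mathbf{x},\mathbf{y})/\sqrt{M}\). The sketch below checks this numerically under the same per-dimension mass estimate assumed for Eq. 18; the function `m_p` and the sample sizes are hypothetical choices for illustration.

```python
import numpy as np

def m_p(x, y, cols, p=2.0):
    """m_p with per-dimension mass = fraction of reference values in
    [min(x_i, y_i), max(x_i, y_i)]; `cols` holds each dimension sorted (M x n)."""
    lo, hi = np.minimum(x, y), np.maximum(x, y)
    n = cols.shape[1]
    counts = np.array([np.searchsorted(c, h, side="right") - np.searchsorted(c, l, side="left")
                       for c, l, h in zip(cols, lo, hi)])
    return np.mean((counts / n) ** p) ** (1.0 / p)

rng = np.random.default_rng(0)
M = 20
D = rng.uniform(size=(5000, M))          # reference data, uniform on [0, 1]^M
cols = np.sort(D, axis=0).T              # one sorted column per dimension
pairs = rng.uniform(size=(200, 2, M))    # hypothetical query pairs
m2 = np.array([m_p(a, b, cols) for a, b in pairs])
l2 = np.array([np.linalg.norm(a - b) for a, b in pairs])
print("max |m_2 - l_2/sqrt(M)|:", np.abs(m2 - l2 / np.sqrt(M)).max())
print("correlation:", np.corrcoef(m2, l2)[0, 1])
```

Under a non-uniform distribution the per-dimension masses no longer track \(|x_i - y_i|\), which is where the two measures start to rank neighbors differently.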

We observed similar behavior in many real-world data sets, where the \(O_5\) distribution of \(m_2\) is less skewed than those of \(\ell _2\) and \(d_{cos}\).

Appendix 3: Standard error

Table 10 shows the standard error of classification accuracies (in %) of k-NN classification (\(k=5\)) over a tenfold cross-validation (average classification accuracy is presented in Table 4 in Sect. 4.1).

Table 11 shows the standard error of precision at top 10 retrieved results (P@10) over N queries in content-based multimedia information retrieval (average P@10 is presented in Table 6 in Sect. 4.2).
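For reference, P@10 and its standard error over queries can be computed as below. This is a sketch under assumptions not stated in the text: binary relevance judgments per retrieved item, and standard error taken as the sample standard deviation (with an \(n-1\) denominator) divided by \(\sqrt{n}\). The helper names and the three queries are hypothetical. The same standard-error computation applies to per-fold accuracies such as those summarized in Table 10.

```python
import math

def precision_at_k(relevant_in_rank_order, k=10):
    """P@k: fraction of the top-k retrieved items that are relevant.
    Lists shorter than k are treated as padded with non-relevant items."""
    return sum(relevant_in_rank_order[:k]) / k

def mean_and_standard_error(values):
    """Sample mean and standard error s / sqrt(n), where s uses the n - 1 denominator."""
    n = len(values)
    mean = sum(values) / n
    s = math.sqrt(sum((v - mean) ** 2 for v in values) / (n - 1))
    return mean, s / math.sqrt(n)

# Hypothetical relevance judgments for three queries (True = relevant).
queries = [
    [True] * 6 + [False] * 4,
    [True] * 8 + [False] * 4,
    [True] * 10,
]
p_at_10 = [precision_at_k(q) for q in queries]   # 0.6, 0.8, 1.0
mean, se = mean_and_standard_error(p_at_10)
print(round(mean, 3), round(se, 3))              # 0.8 0.115
```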

Appendix 4: Comparison with geometric distance measures after dimensionality reduction

Table 12 reports the average 5-NN classification accuracies over a tenfold cross-validation of \(d_{cos}, \ell _{0.5}\) and \(\ell _2\) before and after dimensionality reduction through PCA, together with those of \(m_{0.5}\) and \(m_2\) in the original space, on 16 of the 22 data sets with continuous-only attributes. PCA reduced the number of dimensions by projecting the data onto the lower-dimensional space spanned by the principal components that capture 95% of the variance in the data. The principal components were computed by eigendecomposition of the correlation matrix of the training data so that the projection is robust to scale differences among the original dimensions. PCA did not complete within 24 h on the remaining six data sets, which have \(M>5000\) (M in parentheses): New3s (26,832), Ohscal (11,465), Arcene (10,000), Wap (8460), R52 (7369) and NG20 (5489).
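The PCA procedure described above can be sketched with NumPy. This is a minimal illustration, not the code used for the experiments; `fit_correlation_pca` is a hypothetical helper and the synthetic data are made up. It standardizes each dimension with training statistics, eigendecomposes the correlation matrix with `numpy.linalg.eigh`, and keeps the fewest components whose cumulative share of the eigenvalue sum reaches 95%. Note that the dense \(M \times M\) eigendecomposition costs \(O(M^3)\) time and \(O(M^2)\) memory, which grows quickly for the largest data sets listed.

```python
import numpy as np

def fit_correlation_pca(X_train, var_kept=0.95):
    """Fit PCA on the correlation matrix of X_train and return a projection
    function keeping the fewest components that explain var_kept of the variance."""
    mu = X_train.mean(axis=0)
    sd = X_train.std(axis=0)
    sd[sd == 0] = 1.0                               # avoid dividing by zero on constant columns
    Z = (X_train - mu) / sd                         # standardized training data
    corr = Z.T @ Z / Z.shape[0]                     # M x M correlation matrix
    eigvals, eigvecs = np.linalg.eigh(corr)         # eigh returns ascending eigenvalues
    order = np.argsort(eigvals)[::-1]
    eigvals, eigvecs = eigvals[order], eigvecs[:, order]
    explained = np.cumsum(eigvals) / eigvals.sum()
    q = int(np.searchsorted(explained, var_kept)) + 1   # components needed
    W = eigvecs[:, :q]
    # Test data are standardized with the training mean and std before projection.
    return lambda X: ((X - mu) / sd) @ W

rng = np.random.default_rng(0)
latent = rng.normal(size=(400, 3))                  # three hidden factors
X_train = latent @ rng.normal(size=(3, 8)) + 0.01 * rng.normal(size=(400, 8))
project = fit_correlation_pca(X_train)
Z_train = project(X_train)
print(Z_train.shape)                                # at most 3 columns expected here
```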

About this article

Cite this article

Aryal, S., Ting, K.M., Washio, T. et al. Data-dependent dissimilarity measure: an effective alternative to geometric distance measures. Knowl Inf Syst 53, 479–506 (2017). https://doi.org/10.1007/s10115-017-1046-0

DOI: https://doi.org/10.1007/s10115-017-1046-0