Abstract

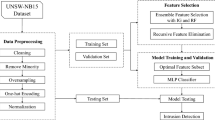

This paper presents a novel wrapper feature selection algorithm for classification problems: the hybrid genetic algorithm (GA)- and extreme learning machine (ELM)-based feature selection algorithm (HGEFS). It uses a GA to wrap an ELM and search for optimal subsets in the huge feature space; a set of these subsets is then selected to form an ensemble that improves the final prediction accuracy. To prevent the GA from becoming trapped in a local optimum, we propose a novel and efficient mechanism, designed specifically for feature selection problems, that maintains the GA’s diversity. To measure each subset’s quality fairly and efficiently, we adopt a modified ELM called the error-minimized extreme learning machine (EM-ELM), which automatically determines an appropriate network architecture for each feature subset. Moreover, EM-ELM has good generalization ability and an extremely fast learning speed, which allows us to perform the wrapper feature selection process in an affordable time. In other words, we simultaneously optimize the feature subset and the classifier’s parameters. After the GA search finishes, to further improve prediction accuracy and obtain a stable result, we select a set of EM-ELMs from the resulting population to form the final ensemble according to a specific ranking and selection strategy. To verify the performance of HGEFS, we empirically compare it with other feature selection methods on benchmark datasets. The results show that HGEFS is a useful method for feature selection problems and always outperforms the other algorithms in the comparison.
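The overall idea of a GA wrapping an ELM and then voting over several selected subsets can be illustrated with a minimal sketch. It is not the HGEFS implementation, and several simplifications are assumptions made here: a basic ELM with a fixed number of hidden nodes stands in for EM-ELM, tournament selection with uniform crossover and bit-flip mutation stands in for the paper's GA operators and diversity mechanism, and the top-ranked individuals with a plain majority vote stand in for the paper's ranking and selection strategy. All function and parameter names (`train_elm`, `ga_elm_select`, `ensemble_predict`, `n_hidden`, and so on) are hypothetical.

```python
import numpy as np

def train_elm(X, y, n_hidden, n_classes, rng):
    """Basic ELM (assumption: fixed hidden size, not EM-ELM)."""
    W = rng.normal(size=(X.shape[1], n_hidden))   # random input weights
    b = rng.normal(size=n_hidden)                 # random biases
    H = np.tanh(X @ W + b)                        # hidden-layer output
    T = np.eye(n_classes)[y]                      # one-hot targets
    beta = np.linalg.pinv(H) @ T                  # least-squares output weights
    return W, b, beta

def predict_elm(model, X):
    W, b, beta = model
    return np.argmax(np.tanh(X @ W + b) @ beta, axis=1)

def fitness(mask, Xtr, ytr, Xva, yva, n_classes, rng, n_hidden=20):
    """Validation accuracy of an ELM trained on the features in `mask`."""
    if not mask.any():                            # empty subset is useless
        return 0.0, None
    model = train_elm(Xtr[:, mask], ytr, n_hidden, n_classes, rng)
    acc = float(np.mean(predict_elm(model, Xva[:, mask]) == yva))
    return acc, model

def ga_elm_select(Xtr, ytr, Xva, yva, n_classes, pop_size=20, n_gen=15,
                  p_mut=0.05, ensemble_size=5, seed=0):
    """Evolve boolean feature masks; return the top (mask, model) pairs."""
    rng = np.random.default_rng(seed)
    d = Xtr.shape[1]
    pop = rng.random((pop_size, d)) < 0.5         # random initial masks

    def evaluate(population):
        return [fitness(m, Xtr, ytr, Xva, yva, n_classes, rng)
                for m in population]

    def tournament(accs):
        i, j = rng.integers(pop_size, size=2)
        return pop[i] if accs[i] >= accs[j] else pop[j]

    for _ in range(n_gen):
        accs = np.array([s[0] for s in evaluate(pop)])
        children = []
        for _ in range(pop_size):
            p1, p2 = tournament(accs), tournament(accs)
            child = np.where(rng.random(d) < 0.5, p1, p2)  # uniform crossover
            child ^= rng.random(d) < p_mut                 # bit-flip mutation
            children.append(child)
        children[0] = pop[np.argmax(accs)].copy()          # elitism
        pop = np.array(children)

    scored = evaluate(pop)
    order = np.argsort([-s[0] for s in scored])[:ensemble_size]
    return [(pop[k], scored[k][1]) for k in order if scored[k][1] is not None]

def ensemble_predict(members, X, n_classes):
    """Majority vote over ensemble members, each using its own feature mask."""
    votes = np.zeros((X.shape[0], n_classes), dtype=int)
    rows = np.arange(X.shape[0])
    for mask, model in members:
        votes[rows, predict_elm(model, X[:, mask])] += 1
    return votes.argmax(axis=1)
```

In this sketch each individual is a boolean mask over the features and its fitness is the validation accuracy of an ELM trained on the masked features, so the classifier is retrained for every candidate subset. That retraining cost is what makes a fast learner attractive in a wrapper setting.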

Cite this article

Xue, X., Yao, M. & Wu, Z. A novel ensemble-based wrapper method for feature selection using extreme learning machine and genetic algorithm. Knowl Inf Syst 57, 389–412 (2018). https://doi.org/10.1007/s10115-017-1131-4

DOI: https://doi.org/10.1007/s10115-017-1131-4