Abstract

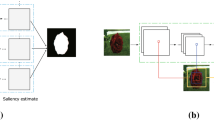

At present, You Only Look Once (YOLO) is the fastest real-time object detection system based on a unified deep neural network. During training, YOLO divides the input image into an \(S \times S\) grid, and only the grid cell that contains the center of an object is responsible for detecting that object. However, the cell containing the object's center is not necessarily the best choice for detecting it. In this paper, inspired by the visual saliency mechanism, we introduce a saliency map into YOLO to develop the YOLO3-SM method, in which the saliency map selects the grid cell containing the most salient part of the object to detect that object. Experimental results on two data sets show that the prediction boxes of YOLO3-SM obtain larger IoU values, which demonstrates that, compared with YOLO3, YOLO3-SM selects cells that are better suited to detecting the object. In addition, YOLO3-SM achieves a higher mAP than the other three state-of-the-art object detection methods on the two data sets, which shows that introducing the saliency map into YOLO can improve detection performance.
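To make the difference between the two assignment rules concrete, the sketch below contrasts YOLO's center-based rule with a saliency-based alternative, and includes the IoU measure used in the evaluation. It is a minimal illustration under stated assumptions, not the authors' implementation: the abstract does not say how the saliency map is computed or how "the most salient part" is located, so this sketch assumes a precomputed per-pixel saliency map and picks the cell containing the saliency peak inside the ground-truth box. The function names `center_cell`, `salient_cell`, and `iou` are hypothetical.

```python
import math
from typing import List, Tuple

# Boxes are (x_min, y_min, x_max, y_max) in pixel coordinates.
Box = Tuple[float, float, float, float]


def center_cell(box: Box, img_w: int, img_h: int, S: int) -> Tuple[int, int]:
    """YOLO-style rule: the grid cell containing the box center is responsible."""
    cx = (box[0] + box[2]) / 2
    cy = (box[1] + box[3]) / 2
    col = min(int(cx / img_w * S), S - 1)
    row = min(int(cy / img_h * S), S - 1)
    return row, col


def salient_cell(box: Box, saliency: List[List[float]], S: int) -> Tuple[int, int]:
    """Hypothetical saliency rule (an assumption, not the paper's exact method):
    return the grid cell containing the most salient pixel inside the box."""
    img_h, img_w = len(saliency), len(saliency[0])
    # Clip the box to the image and convert it to pixel index ranges.
    x0, y0 = max(0, int(box[0])), max(0, int(box[1]))
    x1 = min(img_w, int(math.ceil(box[2])))
    y1 = min(img_h, int(math.ceil(box[3])))
    best = None  # (saliency value, x, y) of the strongest pixel seen so far
    for y in range(y0, y1):
        for x in range(x0, x1):
            if best is None or saliency[y][x] > best[0]:
                best = (saliency[y][x], x, y)
    if best is None:  # empty box after clipping: fall back to the center rule
        return center_cell(box, img_w, img_h, S)
    _, px, py = best
    # Map the peak pixel to its (row, col) grid cell.
    return min(py * S // img_h, S - 1), min(px * S // img_w, S - 1)


def iou(a: Box, b: Box) -> float:
    """Intersection over union of two axis-aligned boxes."""
    ix = max(0.0, min(a[2], b[2]) - max(a[0], b[0]))
    iy = max(0.0, min(a[3], b[3]) - max(a[1], b[1]))
    inter = ix * iy
    union = (a[2] - a[0]) * (a[3] - a[1]) + (b[2] - b[0]) * (b[3] - b[1]) - inter
    return inter / union if union > 0 else 0.0


if __name__ == "__main__":
    # 8x8 image, 4x4 grid; the object's saliency peak lies off-center.
    sal = [[0.0] * 8 for _ in range(8)]
    sal[3][1] = 1.0
    box = (0, 0, 8, 4)
    print(center_cell(box, 8, 8, 4))  # (1, 2)
    print(salient_cell(box, sal, 4))  # (1, 0)
```

In this toy example the two rules assign the same object to different cells, which is the situation the abstract targets: when an object's most salient region is not at its geometric center, the saliency rule hands detection to a different cell.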

Acknowledgements

This work is supported by the National Key Research and Development Program of China (2018YFC0809001) and the Natural Science Foundation of China (61572393, 61877049, 11671317, 12001428).

Cite this article

Hu, Jy., Shi, CJ.R. & Zhang, Js. Saliency-based YOLO for single target detection. Knowl Inf Syst 63, 717–732 (2021). https://doi.org/10.1007/s10115-020-01538-0

DOI: https://doi.org/10.1007/s10115-020-01538-0