Abstract

Despite growing awareness of the mobility issues surrounding auditory interfaces used by visually impaired people, designers still face challenges when creating sounds for such interfaces. This paper presents a new hybrid auditory feedback approach that converts frequently used speech instructions into non-speech sounds (i.e., spearcons), based on how often users have travelled a route and how often a sound has been repeated. Using a within-subject design, twelve blind participants carried out a task with a mobility assistant application in an indoor environment. The results show that the hybrid auditory feedback approach is more effective than non-speech-only feedback and more pleasant than repetitive speech-only feedback. In addition, it can substantially improve user experience. These findings may help researchers and practitioners adopt hybrid auditory feedback, rather than speech-only or non-speech-only feedback, when designing or creating accessibility and assistive products and systems.
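The abstract describes the switching policy only at a high level. The following is a minimal sketch under stated assumptions, not the authors' implementation: the class name `HybridFeedbackSelector`, the two thresholds, and the rule that *either* a familiar route *or* a frequently repeated instruction triggers the spearcon are all hypothetical choices made for illustration.

```python
from collections import Counter
from dataclasses import dataclass, field


@dataclass
class HybridFeedbackSelector:
    """Hypothetical hybrid policy: play an instruction as full speech until the
    user is familiar with it, then switch to its spearcon (time-compressed speech)."""

    repetition_threshold: int = 3  # assumed value, not taken from the paper
    route_threshold: int = 2       # assumed value, not taken from the paper
    heard: Counter = field(default_factory=Counter)             # plays per instruction
    routes_travelled: Counter = field(default_factory=Counter)  # trips per route

    def record_trip(self, route_id: str) -> None:
        """Count one completed trip along a route."""
        self.routes_travelled[route_id] += 1

    def choose(self, route_id: str, instruction: str) -> str:
        """Return "speech" or "spearcon" for this instruction, then log the play."""
        # Assumption: either kind of familiarity is enough to switch to non-speech.
        familiar_route = self.routes_travelled[route_id] >= self.route_threshold
        familiar_sound = self.heard[instruction] >= self.repetition_threshold
        self.heard[instruction] += 1
        return "spearcon" if familiar_route or familiar_sound else "speech"


if __name__ == "__main__":
    selector = HybridFeedbackSelector()
    # A new user on an unfamiliar route hears "Turn left" four times.
    print([selector.choose("lobby-to-lab", "Turn left") for _ in range(4)])
```

With the assumed thresholds, the first three plays of "Turn left" are spoken in full and the fourth is replaced by its spearcon. Keeping the two counters separate makes it easy to test route familiarity and sound repetition independently, or to swap the `or` for an `and` if a stricter rule is preferred.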


References

Fallah, N.: AudioNav: a mixed reality navigation system for individuals who are visually impaired. ACM SIGACCESS Access. Comput. 96, 24–27 (2010)

Ran, L., Helal, S., Moore, S.: Drishti: an integrated indoor/outdoor blind navigation system and service. In: Proceedings of the 2nd IEEE Annual Conference on Pervasive Computing and Communications, pp. 23–30 (2004)

Loomis, J.M., Marston, J.R., Golledge, R.G., Klatzky, R.L.: Personal guidance system for people with visual impairment: a comparison of spatial displays for route guidance. J. Vis. Impair. Blindness 99(4), 219 (2005)

Sánchez, J., Oyarzún, C.: Mobile audio assistance in bus transportation for the blind. Int. J. Disabil. Hum. Dev. 10(4), 365–371 (2011)

World Health Organization: Factsheet visual impairment and blindness (2009) Accessible at: http://www.who.int/medacentre/factsheets/fs282/en/

Lutz, R.J.: Prototyping and evaluation of landcons: auditory objects that support wayfinding for blind travelers. ACM SIGACCESS Access. Comput. 86, 8–11 (2006)

Strachan, S., Eslambolchilar, P., Murray-Smith, R., Hughes, S., O’Modhrain, S.: GpsTunes: controlling navigation via audio feedback. In: Proceedings of the 7th International Conference on Human Computer Interaction with Mobile Devices and Services, pp. 275–278 (2005)

Gaver, W.W.: Auditory icons: using sound in computer interfaces. Hum.-Comput. Interact. 2(2), 167–177 (1986)

Blattner, M.M., Sumikawa, D.A., Greenberg, R.M.: Earcons and icons: their structure and common design principles. Hum.-Comput. Interact. 4(1), 11–44 (1989)

Brewster, S.A., Wright, P.C., Edwards, A.D.N.: Experimentally derived guidelines for the creation of earcons. In: Adjunct Proceedings of People and Computers (Huddersfield). British Computer Society, pp. 155–159 (1995)

Walker, B.N., Nance, A., Lindsay, J.: Spearcons: Speech-based earcons improve navigation performance in auditory menus. In: Proceedings of the International Conference on Auditory Display, pp. 63–68 (2006)

Palladino, D.K., Walker, B.N.: Learning rates for auditory menus enhanced with spearcons versus earcons. In: Proceedings of the 13th International Conference on Auditory Display, pp. 274–279 (2007)

Dingler, T., Lindsay, J., Walker, B.N.: Learnability of sound cues for environmental features: Auditory icons, earcons, spearcons, and speech. In: Proceedings of the 14th International Conference on Auditory Display, pp. 1–6 (2008)

Walker, B.N., Lindsay, J.: Navigation performance with a virtual auditory display: effects of beacon sound, capture radius, and practice. J. Hum. Factors Ergonomics Soc. 48(2), 265–278 (2006)

Tran, T.V., Letowski, T., Abouchacra, K.S.: Evaluation of acoustic beacon characteristics for navigation tasks. Ergonomics 43(6), 807–827 (2000)

Holland, S., Morse, D.R., Gedenryd, H.: AudioGPS: spatial audio navigation with a minimal attention interface. Pers. Ubiquit. Comput. 6(4), 253–259 (2002)

Ghiani, G., Leporini, B., Paternò, F.: Supporting orientation for blind people using museum guides. In: Extended Abstracts on Human Factors in Computing Systems, pp. 3417–3422 (2008)

Kainulainen, A., Turunen, M., Hakulinen, J., Melto, A.: Soundmarks in spoken route guidance. In: Proceedings of the 13th International Conference of Auditory Display, pp. 107–111 (2007)

Jones, M., Jones, S., Bradley, G., Warren, N., Bainbridge, D., Holmes, G.: ONTRACK: dynamically adapting music playback to support navigation. Pers. Ubiquit. Comput. 12(7), 513–525 (2008)

Hansen, K.F., Bresin. R.: Use of soundscapes for providing information about distance left in train journeys. In: Proceedings of the 9th Sound and Music Computing Conference, pp. 79–84 (2012)

Vazquez-Alvarez, Y., Oakley, I., Brewster, S.A.: Urban sound gardens: supporting overlapping audio landmarks in exploratory environments. In: Proceedings of Multimodal Location Based Techniques for Extreme Navigation Workshop, Pervasive (2010)

Stahl, C.: The roaring navigator: a group guide for the zoo with shared auditory landmark display. In: Proceedings of the 9th International Conference on Human Computer Interaction with Mobile Devices and Services, pp. 383–386 (2007)

McGookin, D.K.: Understanding and improving the identification of concurrently presented earcons. University of Glasgow (2004)

Loomis, J.M., Klatzky, R.L., Golledge, R.G.: Navigating without vision: basic and applied research. Optom. Vis. Sci. 78, 282–289 (2001)

Loomis, J.M., Golledge, R.G., Klatzky, R.L.: GPS-based navigation systems for the blind. In: Fundamentals of Wearable Computers and Augmented Reality, pp. 429–446 (2001)

Strothotte, T., Fritz, S., Michel, R., Raab, A., Petrie, H., Johnson, V., Reichert, L., Schalt, A.: Development of dialogue systems for a mobility aid for blind people: initial design and usability testing. In: Proceedings of the 2nd Annual ACM Conference on Assistive Technologies, pp. 139–144 (1996)

Helal, A., Moore, S.E., Ramachandran, B.: Drishti: an integrated navigation system for visually impaired and disabled. In: Proceedings of the 5th International Symposium on Wearable Computers, pp. 149–156 (2001)

Wilson, J., Walker, B. N., Lindsay, J., Cambias, C., Dellaert, F.: Swan: system for wearable audio navigation. In: Proceedings of the 11th International Symposium on Wearable Computers, pp. 91–98 (2007)

Hussain, I., Chen, L., Mirza, H. T., Majid, A., Chen, G.: Hybrid auditory feedback: A new method for mobility assistance of the visually impaired. In: Proceedings of the 14th International ACM SIGACCESS Conference on Computers and Accessibility, pp. 255–256 (2012)

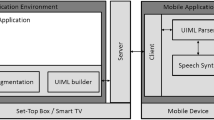

Chen, L., Hussain, I., Chen, R., Huang, W., Chen, G.: BlueView: a perception assistant system for the visually impaired. In: Adjunct Proceedings of the ACM Conference on Pervasive and Ubiquitous Computing, pp. 143–146 (2013)

Lazar, J., Feng, J.H., Hochheiser, H.: Research methods in human-computer interaction. Wiley, London (2010)

Acknowledgments

The authors would like to thank the reviewers for their valuable comments and suggestions on earlier versions of the paper. We are grateful to the participants for their time and involvement in the study. This work was funded by the Natural Science Foundation of China (Nos. 60703040, 61332017) and the Science and Technology Department of Zhejiang Province (Nos. 2007C13019, 2011C13042).


Cite this article

Hussain, I., Chen, L., Mirza, H.T. et al. Right mix of speech and non-speech: hybrid auditory feedback in mobility assistance of the visually impaired. Univ Access Inf Soc 14, 527–536 (2015). https://doi.org/10.1007/s10209-014-0350-7


DOI: https://doi.org/10.1007/s10209-014-0350-7