Abstract

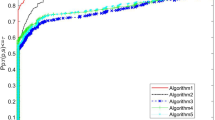

In this paper, we consider an unconstrained optimization problem and propose a new family of modified BFGS methods to solve it. As is well known, the classic BFGS method is not always globally convergent for nonconvex functions. To overcome this difficulty, we introduce a new modified weak-Wolfe–Powell line search technique. Under this new technique, we prove the global convergence of both the new family of modified BFGS methods and the classic BFGS method for nonconvex functions. Furthermore, all members of this family have an error order of at least \(o(\Vert s \Vert ^{5})\). Numerical experiments on 77 standard unconstrained problems indicate that the algorithms developed in this paper are promising and more effective than some similar algorithms.
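For orientation, the following is a minimal sketch, assuming NumPy, of the baseline the abstract starts from: the classic BFGS update combined with the *standard* weak Wolfe–Powell (WWP) conditions. It does not implement the paper's modified WWP line search or its new family of updates, whose formulas are not reproduced here. The function names (`weak_wolfe_powell_step`, `bfgs_wwp`), the bracketing/bisection strategy, and the parameter values (`delta=1e-4`, `sigma=0.9`) are illustrative assumptions. As the abstract points out, this classic combination carries no global convergence guarantee for nonconvex functions, which is the gap the modified line search is meant to close.

```python
import numpy as np


def weak_wolfe_powell_step(f, grad, x, d, delta=1e-4, sigma=0.9, max_iter=60):
    """Illustrative step-size search for the standard weak Wolfe-Powell conditions
        f(x + a d) <= f(x) + delta * a * g^T d      (sufficient decrease)
        grad(x + a d)^T d >= sigma * g^T d          (curvature)
    with 0 < delta < sigma < 1, via bracketing with bisection/doubling."""
    fx, gd = f(x), grad(x) @ d
    lo, hi, a = 0.0, np.inf, 1.0
    for _ in range(max_iter):
        x_new = x + a * d
        if f(x_new) > fx + delta * a * gd:
            hi = a  # step too long: sufficient decrease fails, shrink the bracket
        elif grad(x_new) @ d < sigma * gd:
            lo = a  # step too short: curvature condition fails, move right
        else:
            return a
        a = 0.5 * (lo + hi) if np.isfinite(hi) else 2.0 * lo
    return a  # fallback after max_iter trials; conditions may not hold


def bfgs_wwp(f, grad, x0, tol=1e-6, max_iter=500):
    """Classic BFGS (Hessian-approximation form) with the standard WWP search above."""
    x = np.asarray(x0, dtype=float)
    B = np.eye(x.size)  # B_0 = I
    g = grad(x)
    for _ in range(max_iter):
        if np.linalg.norm(g) <= tol:
            break
        d = -np.linalg.solve(B, g)  # quasi-Newton direction: B_k d_k = -g_k
        a = weak_wolfe_powell_step(f, grad, x, d)
        s = a * d
        x_new = x + s
        g_new = grad(x_new)
        y = g_new - g
        sy = s @ y
        # The WWP curvature condition gives s^T y >= a (sigma - 1) g^T d > 0,
        # which keeps B positive definite; skip the update if it ever fails.
        if sy > 0:
            Bs = B @ s
            B = B - np.outer(Bs, Bs) / (s @ Bs) + np.outer(y, y) / sy
        x, g = x_new, g_new
    return x


# Usage on the (nonconvex) Rosenbrock function, whose minimizer is (1, 1).
def rosenbrock(x):
    return 100.0 * (x[1] - x[0] ** 2) ** 2 + (1.0 - x[0]) ** 2


def rosenbrock_grad(x):
    return np.array([-400.0 * x[0] * (x[1] - x[0] ** 2) - 2.0 * (1.0 - x[0]),
                     200.0 * (x[1] - x[0] ** 2)])


x_star = bfgs_wwp(rosenbrock, rosenbrock_grad, [-1.2, 1.0])
```

Doubling the trial step when only the curvature condition fails, and bisecting once an upper bound is known, is one common way to find a WWP step; the paper's modified conditions would replace the two tests inside `weak_wolfe_powell_step`.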

Acknowledgements

The authors would like to thank the anonymous reviewers for their useful suggestions and valuable comments, which greatly helped to improve the quality of this paper.

Ethics declarations

Conflict of interest

The authors declare that they have no conflict of interest.

About this article

Cite this article

Bojari, S., Eslahchi, M.R. Global convergence of a family of modified BFGS methods under a modified weak-Wolfe–Powell line search for nonconvex functions. 4OR-Q J Oper Res 18, 219–244 (2020). https://doi.org/10.1007/s10288-019-00412-2

DOI: https://doi.org/10.1007/s10288-019-00412-2