Abstract

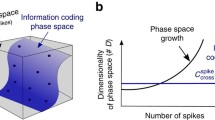

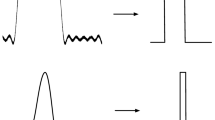

In this paper, we compare existing methods for quantifying the coding capacity of a spike train and review recent developments in the application of information theory to neural coding. We present novel methods for characterising single-unit activity from the perspective of a downstream neurone, and propose a simple yet universally applicable framework for characterising the order of complexity of neural coding by single units. We establish four orders of complexity in the capacity for neural coding. First-order coding, quantified by firing rates, is conveyed by frequencies and is thus entirely described by first-moment processes. Second-order coding, represented by the variability of interspike intervals, is quantified by the log interval entropy. Third-order coding is the result of spike motifs that associate adjacent interspike intervals beyond chance levels; it is described by the joint interval histogram and is measured by the mutual information between adjacent log intervals. Finally, nonstationarities in activity represent fourth-order coding, which arises from the effects of a known or unknown stimulus.

References

Abeles M (1982) Role of the cortical neuron: integrator or coincidence detector? Israel J Med Sci 18:83–92

Abeles M (1991) Corticonics. Cambridge University Press, Cambridge

Abeles M, Gerstein G (1988) Detecting spatiotemporal firing patterns among simultaneously recorded single neurons. J Neurophysiol 60:909–924

Abeles M, Bergman H, Margalit E, Vaadia E (1993) Spatiotemporal firing patterns in the frontal cortex of behaving monkeys. J Neurophysiol 70:1629–1638

Adrian E (1926) The impulses produced by sensory nerve endings: Part I. J Physiol 61:151–171

Aertsen A, Arndt M (1993) Response synchronization in the visual cortex. Curr Opin Neurobiol 3:586–594

Aitchison J, Brown J (1963) The lognormal distribution. Cambridge University Press, Cambridge

Armstrong W, Stern J (1997) Electrophysiological and morphological characteristics of neurons in perinuclear zone of supraoptic nucleus. J Neurophysiol 78:2427–2437

Awiszus F (1988) Continuous functions determined by spike trains of a neuron subject to stimulation. Biol Cybernet 58:321–327

Awiszus F, Feistner H, Schafer S (1991) On a method to detect long-latency excitations and inhibitions of single hand muscle motoneurones in man. Exp Brain Res 86:440–446

Baker S, Gerstein G (2000) Improvements to the sensitivity of gravitational clustering for multiple neuron recordings. Neural Comput 12:2597–2620

Baker S, Lemon R (2000) Precise spatiotemporal repeating patterns in monkey primary and supplementary motor areas occur at chance levels. J Neurophysiol 84:1770–1780

Bell A, Sejnowski T (1995) An information maximisation approach to blind separation and blind deconvolution. Neural Comput 7:1129–1159

Berry M, Warland D, Meister M (1997) The structure and precision of retinal spike trains. Proc Natl Acad Sci USA 94:5411–5416

Bessou P, Laporte Y, Pages B (1968) A method of analysing the responses of spindle primary endings to fusimotor stimulation. J Physiol 196:37–45

Bhumbra G, Dyball R (2004) Measuring spike coding in the supraoptic nucleus. J Physiol 555:281–296

Bhumbra G, Inyushkin A, Dyball R (2004) Assessment of spike activity in the supraoptic nucleus. J Neuroendocrinol 16:390–397

Bhumbra G, Inyushkin A, Saeb-Parsy K, Hon A, Dyball R (2005) Rhythmic changes in spike coding in the rat suprachiasmatic nucleus. J Physiol 563(1):291–307

Blakey W (1949) University Mathematics. Blackie and Son Limited, London

Borst A, Theunissen A (1999) Information theory and neural coding. Nat Neurosci 2:947–957

Brenner N, Bialek W, de Ruyter van Steveninck R (2000a) Adaptive rescaling maximizes information transmission. Neuron 26:695–702

Brenner N, Strong S, Koberle R, Bialek W, de Ruyter van Steveninck R (2000b) Synergy in a neural code. Neural Comput 12:1531–1552

Burns B, Webb A (1976) The spontaneous activity of neurones in the cat’s cerebral cortex. Proc R Soc Lond B 194:211–223

Chacron M, Longtin A, Maler L (2001) Negative interspike interval correlations increase the neuronal capacity for encoding time-dependent stimuli. J Neurosci 21:5328–5343

Cover T, Thomas J (1991) Elements of information theory. Wiley, New York

Cutler D, Haraura M, Reed H, Shen S, Sheward W, Morrison C, Marston H, Harmar A, Piggins H (2003) The mouse VPAC2 receptor confers suprachiasmatic nuclei cellular rhythmicity and responsiveness to vasoactive intestinal polypeptide in vitro. Eur J Neurosci 17:197–204

Dan Y, Alonso J, Usrey W, Reid R (1998) Coding of visual information by precisely correlated spikes in the lateral geniculate nucleus. Nat Neurosci 1:501–507

Dayhoff J, Gerstein G (1983) Favored patterns in spike trains. 2. Applications. J Neurophysiol 49:1349–1363

Dear S, Simmons J, Fritz J (1993) A possible neuronal basis for representation of acoustic scenes in the auditory cortex of the big brown bat. Nature 364:620–623

DeCharms R, Merzenich M (1995) Primary cortical representation of sounds by the coordination of action potential timing. Nature 381:610–613

Deco G, Schurmann B (1998) The coding of information by spiking neurons: an analytical study. Netw Comput Neural Syst 9:303–317

Dyball R, Leng G (1986) Regulation of the milk ejection reflex in the rat. J Physiol 380:239–256

Ellaway P (1978) Cumulative sum technique and its application to the analysis of peristimulus time histogram. Electroencephalogr Clin Neurophysiol 45:302–304

Fairhall A, Lewen G, Bialek W, de Ruyter Van Steveninck R (2001) Efficiency and ambiguity in an adaptive neural code. Nature 412:787–792

Fatt P, Katz B (1952) Spontaneous subthreshold activity at motor nerve endings. J Physiol 117:109–128

Fee M, Kleinfeld D (1994) Neuronal responses in rat vibrissa cortex during behaviour. Soc Neurosci Abstr

Ferster D, Spruston N (1995) Cracking the neuronal code. Science 270:756–757

Gerstein G, Kiang N-S (1960) An approach to the quantitative analysis of electrophysiological data from single neurons. Biophys J 1:15–28

Gerstein G, Mandelbrot B (1964) Random walk models for the spike activity of a single neuron. Biophys J 4:41–68

Gray C (1999) The temporal correlation hypothesis of visual feature integration: still alive and well. Neuron 24:31–47

Gross A, Clark V (1975) Survival distributions: reliability applications in the biomedical sciences. Wiley, New York

Haken H (1996) Principles of brain functioning: a synergetic approach to brain activity, behaviour and cognition. Springer, Berlin Heidelberg New York

Harris J, Stocker H (1998) Handbook of mathematics and computational science. Springer, Berlin Heidelberg New York

Hastie T, Tibshirani R, Friedman J (2001) The elements of statistical learning. Springer, Berlin Heidelberg New York

Hodgkin A, Huxley A (1952) A quantitative description of membrane current and its application to conduction and excitation in the nerve. J Physiol 117:500–544

Jaynes E (1957) Information theory and statistical mechanics. Phys Rev 106:171–190

Jaynes E (2003) Probability theory: the logic of science. Cambridge University Press, Cambridge

Jeffreys H (1939) Theory of probability. Clarendon Press, Oxford

Karbowiak A (1969) Theory of Communication. Oliver and Boyd, Edinburgh

Klemm W, Sherry C (1981) Serial ordering in spike trains: what’s it “trying to tell us”? Int J Neurosci 14:15–33

Knierim J, van Essen D (1992) Neuronal responses to static textures in area V1 of the alert Macaque monkey. J Neurophysiol 67:961–980

Knudsen E, Konishi M (1979) Mechanisms of sound localization in the barn owl (Tyto alba). J Comp Physiol 133:13–21

Kozachenko L, Leonenko L (1987) Sample estimate of the entropy of a random vector. Problems Information Transmission 23:95–101

Land M, Collett T (1974) Chasing behavior of houseflies (Fannia canicularis): a description and analysis. J Comp Physiol 89:331–357

Lapicque L (1907) Recherches quantitatives sur l’excitation electrique des nerfs traitee comme une polarization. J Gen Physiol Pathol 9:620–635

Lee T-W (1998) Independent component analysis. Kluwer, Dordrecht

Leng G, Brown C, Bull P, Brown D, Scullion S, Currie J, Blackburn-Munro R, Feng J, Onaka T, Verbalis J, Russell J, Ludwig M (2001) Responses of magnocellular neurons to osmotic stimulation involves coactivation of excitatory and inhibitory input: an experimental and theoretical analysis. J Neurosci 21(17):6967–6977

Leonard T, Hsu J (1999) Bayesian methods. Cambridge University Press, Cambridge

MacKay D (2003) Information theory, inference, and learning algorithms. Cambridge University Press, Cambridge

Mackay D, McCulloch W (1952) The limiting information capacity of a neuronal link. Bull Math Biophys 14:127–135

MacLeod K, Baecker A, Laurent G (1998) Who reads temporal information contained across synchronized and oscillatory spike trains? Nature 395:693–698

Madras N (2002) Lectures on Monte Carlo methods. American Mathematical Society, Rhode Island

Mann N, Schafer R, Singpurwalla N (1974) Methods for statistical analysis of reliability and life data. Wiley, New York

Matthews P (1996) Relationship of firing intervals of human motor units to the trajectory of post-spike after-hyperpolarization and synaptic noise. J Physiol 492:597–628

Mel B (1993) Synaptic integration in an excitable dendritic tree. J Neurophysiol 70:1086–1101

Nakahama H, Nishioka S, Otsuka T, Aikawa S (1966) Statistical dependency between interspike intervals of spontaneous activity in thalamic lemniscal neurons. J Neurophysiol 29:921–934

Nirenberg S, Latham P (2003) Decoding neuronal spike trains: how important are correlations. Proc Natl Acad Sci USA 100:7348–7353

O’Keefe J, Recce M (1993) Phase relationship between hippocampal place units and the EEG theta rhythm. Hippocampus 3:317–330

Oram M, Wiener M, Lestienne R, Richmond BJ (1999) Stochastic nature of precisely timed spike patterns in visual system neuronal responses. J Neurophysiol 81:3021–3033

Papoulis A, Pillai S (2002) Probability, random variables, and stochastic processes. McGraw-Hill, New York

Perkel D, Bullock T (1968) Neural coding: a report based on a Neuroscience Research Progress work session. Neurosci Res Prog Bull 6:3

Perkel D, Gerstein G, Moore G (1967) Neuronal spike trains and stochastic point processes: I. the single spike train. Biophys J 7:391–418

Pincus S (1991) Approximate entropy as a measure of system complexity. Proc Natl Acad Sci USA 88:2297–2301

Poggio G, Viernstein L (1964) Time series analysis of impulse sequences of thalamic somatic sensory neurons. J Neurophysiol 27:517–545

Poirazi P, Mel B (2001) Impact of active dendrites and structural plasticity on the memory capacity of neural tissue. Neuron 29:779–796

Poulain D, Wakerley J (1982) Electrophysiology of hypothalamic magnocellular neurones secreting oxytocin and vasopressin. Neuroscience 7:773–808

Press W, Teukolsky S, Vetterling W, Flannery B (2002) Numerical recipes in C++: the art of scientific computing. Cambridge University Press, Cambridge

Rao R, Olshausen B, Lewicki M (2002) Probabilistic models of the brain. The Massachusetts Institute of Technology Press, Cambridge

Reeke G, Coop A (2004) Estimating the temporal interval entropy of neuronal discharge. Neural Comput 16:941–970

Reid R, Soodak R, Shapley R (1991) Directional selectivity and spatiotemporal structure of receptive fields of simple cells in cat striate cortex. J Neurophysiol 66:505–529

Reinagel P, Reid R (2000) Temporal coding of visual information in the thalamus. J Neurosci 20:5392–5400

Rieke F, Warland D, de Ruyter van Steveninck R, Bialek W (1999) Spikes: exploring the neural code. The Massachusetts Institute of Technology Press, Cambridge

Roberts S, Everson R (2001) Independent component analysis: principles and practice. Cambridge University Press, Cambridge

Rodieck R, Kiang N-S, Gerstein G (1962) Some quantitative methods for the study of spontaneous activity of single neurons. Biophys J 2:351–367

Rose G, Heiligenberg W (1985) Temporal hyperacuity in the electrical sense of fish. Nature 318:178–180

de Ruyter van Steveninck R, Lewen G, Strong S, Koberle R, Bialek W (1997) Reproducibility and variability in neural spike trains. Science 275:1805–1808

Sabatier N, Brown C, Ludwig M, Leng G (2004) Phasic spike patterning in rat supraoptic neurones in vivo and in vitro. J Physiol 558:161–180

Saeb-Parsy K, Dyball R (2003) Defined cell groups in the rat suprachiasmatic nucleus have different day/night rhythms of single-unit activity in vivo. J Biol Rhythm 18:26–42

Salinas E, Sejnowski T (2001) Correlated neuronal activity and the flow of neural information. Nat Rev Neurosci 2:539–550

Shadlen M, Movshon J (1999) Synchrony unbound: a critical evaluation of the temporal binding hypothesis. Neuron 24:67–77

Shadlen M, Newsome W (1994) Noise, neural codes and cortical organization. Curr Opin Neurobiol 4:569–579

Shannon C, Weaver W (1949) The mathematical theory of communication. University of Illinois Press, Urbana

Sherry C, Klemm W (1981) Entropy as an index of the informational state of neurons. Int J Neurosci 15:171–178

Sherry C, Klemm W (1984) What is the meaningful measure of neuronal spike train activity? J Neurosci Methods 10:205–213

Simmons J (1979) Perception of echo phase information in bat sonar. Science 204:1336–1338

Simmons J (1989) A view of the world through the bat’s ear: the formation of acoustic images in echolocation. Cognition 33:155–199

Simmons J, Ferragamo M, Moss C, Stevenson S, Altes R (1990) Discrimination of jittered sonar echoes by the echolocating bat, Eptesicus fuscus: the shape of target images in echolocation. J Comp Physiol 167:589–616

Sivia D (1996) Data analysis: a Bayesian tutorial. Oxford University Press Inc., New York

Softky W (1995) Simple codes versus efficient codes. Curr Opin Neurobiol 5:s239–s247

Softky W, Koch C (1993) The highly irregular firing of cortical cells is consistent with temporal integration of random EPSPs. J Neurosci 13:334–350

Stein R (1965) A theoretical analysis of neuronal variability. Biophys J 5:173–194

Stopfer M, Bhagavan S, Smith B, Laurent G (1997) Impaired odour discrimination on desynchronization of odour-encoding neural assemblies. Nature 390:70–74

Strong S, Koberle R, de Ruyter van Steveninck R, Bialek W (1998) Entropy and information in neural spike trains. Phys Rev Lett 80:197–200

Tasker J, Dudek F (1991) Electrophysiological properties of neurones in the region of the paraventricular nucleus in slices of the rat hypothalamus. J Physiol 434:271–293

Tiesinga P, Jose J, Sejnowski T (2000) Comparison of current-driven and conductance-driven neocortical model neurons with Hodgkin-Huxley voltage-gated channels. Phys Rev E 62:8413–8419

Tuckwell H (1988a) Introduction to theoretical neurobiology: volume 1-linear cable theory and dendritic structure. Cambridge University Press, Cambridge

Tuckwell H (1988b) Introduction to theoretical neurobiology: volume 2-nonlinear and stochastic theories. Cambridge University Press, Cambridge

Turker K, Cheng H (1994) Motor-unit firing frequency can be used for the estimation of synaptic potentials in human motoneurones. J Neurosci Methods 53:225–234

Turker K, Powers R (1999) Effects of large excitatory and inhibitory inputs on motoneuron discharge rate and probability. J Neurophysiol 82:829–840

Turker K, Yang J, Scutter S (1997) Tendon tap induces a single long lasting excitatory reflex in the motoneurons of human soleus muscle. Exp Brain Res 115:169–173

Usher M, Stemmler M, Koch C (1994) Network amplification of local fluctuations causes high spike rate variability, fractal firing patterns and oscillatory field potentials. Neural Comput 6:795–836

Usrey W, Reid R (1999) Synchronous activity in the visual system. Annu Rev Physiol 61:435–456

Victor J (2002) Binless strategies for estimation of information from neural data. Phys Rev E 66:051903_1–051903_15

Wadsworth H (1990) Handbook of statistical methods for engineers and scientists. McGraw-Hill, New York

Waelti P, Dickinson A, Schultz W (2001) Dopamine responses comply with basic assumptions of formal learning theory. Nature 412:43–48

Wehr M, Laurent G (1999) Relationship between afferent and central temporal patterns in the locust olfactory system. J Neurosci 19:381–390

Wetmore D, Baker S (2004) Post-spike distance-to-threshold trajectories of neurones in monkey motor cortex. J Physiol 555:831–850

Wilson M, McNaughton B (1993) Dynamics of the hippocampal code for space. Science 261:1055–1058

Yang G, Chen T (1978) On statistical methods in neural spike train analysis. Math Biosci 38:1–34

Acknowledgements

This work was supported by the Medical Research Council (U.K.), Engineering and Physical Sciences Research Council (U.K.), Merck, Sharp, and Dohme (U.K.), and the James Baird Fund. We are extremely grateful to G. Leng from the University of Edinburgh for his assistance in the preparation of this manuscript.

Additional information

Communicated by Gareth Leng

Appendix

The entropy of a gamma probability density function

Consider a gamma probability density function \({\mathcal{G}}(\alpha, \beta)\) of the time interval w, using α and β as the shape and scale parameters, respectively.

\[f_w(w|I) = \frac{w^{\alpha-1} e^{-w/\beta}}{\beta^{\alpha}\,\Gamma(\alpha)}\]

where the gamma function Γ(α) is given by a definite integral expressed with respect to z over its support set \({\mathcal{S}}_Z\), defined over the interval [0, ∞).

\[\Gamma(\alpha) = \int_{{\mathcal{S}}_Z} z^{\alpha-1} e^{-z}\,dz\]

It is possible to estimate α and β from the first two moments (Wadsworth 1990), but precise values can only be obtained using computational algorithms such as maximum likelihood estimation (Mann et al. 1974; Gross and Clark 1975). By substituting the gamma probability density function for \(f_w(w|I)\) into Eq. 8, the differential entropy s[w] can be expressed in ‘nats’.
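The moment-based estimate mentioned above can be sketched as follows. This is a minimal illustration, not the authors' implementation: it assumes the scale parameterisation used in this appendix, for which E(w) = αβ and Var(w) = αβ², and the function name `gamma_moment_estimates` and the example intervals are hypothetical.

```python
import statistics

def gamma_moment_estimates(intervals):
    """Estimate (alpha, beta) of a gamma density from the first two moments.

    Assumes E(w) = alpha * beta and Var(w) = alpha * beta**2, so that
    alpha = mean**2 / variance and beta = variance / mean.
    """
    mean = statistics.fmean(intervals)
    variance = statistics.pvariance(intervals, mu=mean)
    if mean <= 0 or variance <= 0:
        raise ValueError("intervals must be positive and not all identical")
    return mean * mean / variance, variance / mean

# Hypothetical interspike intervals in seconds (illustrative values only).
example_intervals = [0.10, 0.20, 0.30, 0.40, 0.50]
alpha_hat, beta_hat = gamma_moment_estimates(example_intervals)
print(alpha_hat, beta_hat)
```

Such estimates are only a starting point; they could, for instance, seed an iterative maximum likelihood fit.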

For a gamma probability density function \({\mathcal{G}}(\alpha, \beta)\), the expectation E(w) is given by αβ (Papoulis and Pillai 2002).

The expectation term \(E(\log_e w)\) can be expressed with respect to \({\mathcal{G}}(\alpha, \beta)\).

To expand the integral, it is useful to change the variable from w to z, where z = w/β, thus w = βz and dw = β dz, and \({\mathcal{S}}_Z\) is defined over the interval [0, ∞).

The first integral expression can be substituted with the gamma function expressed in Eq. 53.

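The remaining integral expression corresponds to the derivative of the gamma function with respect to α, where:

\[\Gamma'(\alpha) = \frac{d\,\Gamma(\alpha)}{d\alpha} = \int_{{\mathcal{S}}_Z} z^{\alpha-1} e^{-z} \log_e z\,dz\]

Equation 64 can thus be expressed with respect to the derivative term:

\[E(\log_e w) = \log_e \beta + \frac{\Gamma'(\alpha)}{\Gamma(\alpha)} = \log_e \beta + \Psi(\alpha)\]

where the psi function Ψ(α) is the derivative of the log gamma function:

\[\Psi(\alpha) = \frac{d \log_e \Gamma(\alpha)}{d\alpha} = \frac{\Gamma'(\alpha)}{\Gamma(\alpha)}\]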

The expression for \(E(\log_e w)\) in Eq. 67 can thus be substituted into Eq. 57.
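As a numerical sanity check of where this substitution leads, the sketch below compares the standard closed form for the entropy of a gamma density, s[w] = α + log β + log Γ(α) + (1 − α)Ψ(α), with a direct midpoint-rule evaluation of −∫ f log f dw. The closed form is the textbook result that follows from E(w) = αβ and E(log w) = log β + Ψ(α); it is stated here as an assumption rather than quoted from the paper's numbered equations. The function names, the finite-difference step `h`, the integration limit and the step count are all illustrative choices.

```python
import math

def psi(alpha, h=1e-4):
    """Psi function approximated as a central-difference derivative of log Gamma."""
    return (math.lgamma(alpha + h) - math.lgamma(alpha - h)) / (2.0 * h)

def gamma_entropy_closed(alpha, beta):
    """Closed-form differential entropy of a gamma density, in nats."""
    return alpha + math.log(beta) + math.lgamma(alpha) + (1.0 - alpha) * psi(alpha)

def gamma_entropy_numeric(alpha, beta, n=100000):
    """Midpoint-rule estimate of -integral f(w) log f(w) dw (assumes alpha >= 1)."""
    upper = beta * (alpha + 60.0)  # the density is negligible beyond this point
    step = upper / n
    total = 0.0
    for i in range(n):
        w = (i + 0.5) * step
        log_f = ((alpha - 1.0) * math.log(w) - w / beta
                 - alpha * math.log(beta) - math.lgamma(alpha))
        total -= math.exp(log_f) * log_f * step
    return total

closed = gamma_entropy_closed(3.0, 0.5)
numeric = gamma_entropy_numeric(3.0, 0.5)
print(closed, numeric)
```

For α = 1 the density is exponential and the closed form reduces to 1 + log β, which gives a quick independent check.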

The log interval entropy of a gamma process

First, the probability density function \(f_x(x|I)\) of the logarithmic intervals x is expressed with respect to the probability density function \(f_w(w|I)\) of the linear intervals w, where \(w = e^x = dw/dx\).

The probability density function \({\mathcal{G}}(\alpha, \beta)\) can be used to represent \(f_w(w|I)\) for a gamma process.

The entropy s[x] can be expressed by substitution into Eq. 23.

For a gamma probability density function \({\mathcal{G}}(\alpha, \beta)\), the expectation E(w) is given by αβ (Papoulis and Pillai 2002).

To express the expectation term \(E(\log_e w)\), it is first useful to introduce a new variable y, where \(y = x - \log_e \beta\). Since dy/dx = 1, the probability density function \(f_y(y|I)\) is identical to \(f_x(x|I)\) as shown in Eq. 76, and may be expressed with respect to y by the substitution \(x = y + \log_e \beta\).

Since the probability density function \(f_y(y|I)\) is independent of β, and identical to \(f_x(x|I)\) except shifted negatively by \(\log_e \beta\), the entropy of \(f_x(x|I)\) is also independent of β. This can be proved by expressing the expectation E(y) as the integral of the product of \(f_y(y|I)\) and y over the support set \({\mathcal{S}}_Y\), which is defined over the interval (−∞, ∞).

Equation 66 can be expressed with respect to y, where \(z = e^y\) and \(dz = e^y\,dy\).

Equation 90 expresses the integral term of Eq. 88 as the derivative of the gamma function. It can be divided by the gamma function to express the psi function Ψ(α) as defined in Eq. 69.

Since x is \(y + \log_e \beta\), its expectation E(x) can be expressed with respect to E(y).

The expression for E(x) can thus be substituted into Eq. 79.
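The β-independence argued above can be checked numerically. The sketch below assumes the log interval density \(f_x(x|I) = e^{\alpha y - e^{y}}/\Gamma(\alpha)\) with \(y = x - \log_e \beta\), which follows from the change of variable \(w = e^x\), and compares a midpoint-rule entropy with the closed form s[x] = α + log Γ(α) − αΨ(α). That closed form is obtained here by subtracting E(log w) from the gamma entropy; it is an assumption of this sketch, not a quotation of the paper's numbered result. Function names, integration limits and step counts are illustrative.

```python
import math

def psi(alpha, h=1e-4):
    """Psi function approximated as a central-difference derivative of log Gamma."""
    return (math.lgamma(alpha + h) - math.lgamma(alpha - h)) / (2.0 * h)

def log_interval_entropy_closed(alpha):
    """Closed form s[x] = alpha + log Gamma(alpha) - alpha * Psi(alpha), in nats."""
    return alpha + math.lgamma(alpha) - alpha * psi(alpha)

def log_interval_entropy_numeric(alpha, beta, lo=-40.0, hi=6.0, n=100000):
    """Midpoint-rule estimate of -integral f_x log f_x dx for a gamma process."""
    shift = math.log(beta)
    step = (hi - lo) / n
    total = 0.0
    for i in range(n):
        x = lo + shift + (i + 0.5) * step  # integrate over x, window centred by beta
        y = x - shift                      # y = x - log(beta)
        log_f = alpha * y - math.exp(y) - math.lgamma(alpha)
        total -= math.exp(log_f) * log_f * step
    return total

closed = log_interval_entropy_closed(2.0)
numeric_small_beta = log_interval_entropy_numeric(2.0, 0.01)
numeric_large_beta = log_interval_entropy_numeric(2.0, 5.0)
print(closed, numeric_small_beta, numeric_large_beta)
```

Changing β only translates the density along the x axis, so both numerical values agree with the single closed-form value.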

Approximation of the log interval entropy of a gamma process

If α is a positive integer, the gamma function Γ(α) can be expressed by the factorial (α−1)!. Using Stirling’s formula (Harris and Stocker 1998), Γ(α+1) can be approximated for large values of α.

Since Γ(α+1) equals αΓ(α) (Press et al. 2002), it is possible to approximate \(\log_e \Gamma(\alpha)\).
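A short sketch of this step, assuming the usual leading-order form of Stirling's formula, log Γ(α+1) ≈ α log α − α + ½ log(2πα). Subtracting log α then gives log Γ(α) ≈ (α − ½) log α − α + ½ log 2π. The function name is hypothetical, and the exact values come from the standard library's `math.lgamma`.

```python
import math

def log_gamma_stirling(alpha):
    """Leading-order Stirling approximation of log Gamma(alpha)."""
    return (alpha - 0.5) * math.log(alpha) - alpha + 0.5 * math.log(2.0 * math.pi)

# Compare with the library value; the gap shrinks roughly as 1 / (12 * alpha).
for alpha in (2, 10, 50):
    exact = math.lgamma(alpha)
    approx = log_gamma_stirling(alpha)
    print(alpha, exact, approx, exact - approx)
```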

The function Ψ(α) can be expressed with respect to Euler’s constant γ by summation.

where γ is Euler’s constant, which can itself be expressed as the limit of an infinite summation.

Although the summation of reciprocals is divergent (Blakey 1949), subtracting the logarithmic term makes the expression converge to a finite value (≈ 0.5772). If α is large, the same expression evaluated with α as the upper limit of the summation provides an approximation for γ.
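The convergence described here can be illustrated with the standard limit definition of Euler's constant, γ = lim (Σ 1/k − log n) with the sum running from k = 1 to n. The sketch below is an illustration under that assumption; the function name is hypothetical and the reference value of γ is the usual double-precision constant.

```python
import math

EULER_GAMMA = 0.5772156649015329  # reference value of Euler's constant

def euler_gamma_estimate(n):
    """Partial harmonic sum minus log(n); tends to Euler's constant as n grows."""
    return math.fsum(1.0 / k for k in range(1, n + 1)) - math.log(n)

# The estimate approaches gamma from above, with an error of roughly 1 / (2 * n).
for n in (10, 100, 1000):
    estimate = euler_gamma_estimate(n)
    print(n, estimate, estimate - EULER_GAMMA)
```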

The approximation of γ can be substituted into Eq. 103.

Equations 102 and 109 can thus be used to approximate s[x] expressed in Eq. 28.
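To see how well a large-α approximation of this kind can perform, the sketch below compares the exact log interval entropy, taken here as s[x] = α + log Γ(α) − αΨ(α), with the compact form ½ log(2πe/α). That compact form results from combining Stirling's formula with the standard asymptotic Ψ(α) ≈ log α − 1/(2α). Both expressions are assumptions of this sketch, and the paper's own approximation, built from the harmonic-sum estimate of γ, may differ in its lower-order terms. Function names and the finite-difference step are illustrative.

```python
import math

def psi(alpha, h=1e-4):
    """Psi function approximated as a central-difference derivative of log Gamma."""
    return (math.lgamma(alpha + h) - math.lgamma(alpha - h)) / (2.0 * h)

def log_interval_entropy(alpha):
    """Exact s[x] = alpha + log Gamma(alpha) - alpha * Psi(alpha), in nats."""
    return alpha + math.lgamma(alpha) - alpha * psi(alpha)

def log_interval_entropy_large_alpha(alpha):
    """Large-alpha approximation (1/2) * log(2 * pi * e / alpha), in nats."""
    return 0.5 * math.log(2.0 * math.pi * math.e / alpha)

# The gap between the two shrinks roughly as 1 / (6 * alpha).
for alpha in (5.0, 20.0, 50.0):
    exact = log_interval_entropy(alpha)
    approx = log_interval_entropy_large_alpha(alpha)
    print(alpha, exact, approx, exact - approx)
```

The approximate form is the entropy of a normal density with variance 1/α, which matches the intuition that log intervals of a regular (large α) gamma process are nearly Gaussian.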

Cite this article

Bhumbra, G.S., Dyball, R.E.J. Spike coding from the perspective of a neurone. Cogn Process 6, 157–176 (2005). https://doi.org/10.1007/s10339-005-0006-x
