Abstract

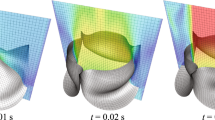

We analyze the behavior of a multigrid algorithm for variational inequalities of the second kind with a Moreau-regularized nondifferentiable term. First, we prove a theorem summarizing the properties of the Moreau regularization of a convex, proper, and lower semicontinuous functional; these properties are used throughout the rest of the paper. We prove that the solution of the regularized problem converges to the solution of the original problem as the regularization parameter approaches zero. To illustrate how the Moreau regularization of a convex and lower semicontinuous functional can be written explicitly, we construct it for two problems with a scalar unknown taken from the literature and for a contact problem with Tresca friction. These functionals have an integral form, and we prove some propositions giving general conditions under which functionals of this type are lower semicontinuous, proper, and convex. To solve the regularized problem, which is a variational inequality of the first kind, we use a standard multigrid method for two-sided obstacle problems. The numerical experiments show high accuracy and very good convergence of the method, even for values of the regularization parameter close to zero. In view of these results, we think that the proposed method can be an alternative to the existing multigrid methods for variational inequalities of the second kind.
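To make the regularization and the convergence claim concrete, the following sketch solves a one-dimensional model problem: a finite-difference discretization of \(-u''=f\) on \((0,1)\) with homogeneous Dirichlet conditions, lower and upper obstacles, and a friction-type term \(g\int_0^1|u|\,dx\) (approximated by the lumped sum \(\sum_k h\,g|u_k|\)) replaced by its Moreau regularization, which for \(g|\cdot|\) is a Huber function. The model problem, the data, and all function names are hypothetical choices made for illustration, not taken from the paper. The solver is only a one-level projected Gauss–Seidel iteration, the kind of smoother used inside multigrid methods for obstacle problems; the multigrid method analyzed in the paper is not reproduced here. Passing `lam = 0` solves the unregularized problem, so the two solutions can be compared.

```python
import math


def node_minimizer(c, a, h, g, lam):
    """Unconstrained minimizer of q(t) = 0.5*a*t**2 - c*t + h*phi(t).

    phi(t) = g*|t| when lam == 0; for lam > 0, phi is its Moreau
    regularization (Huber function): t**2/(2*lam) if |t| <= lam*g,
    g*|t| - lam*g**2/2 otherwise.
    """
    if lam == 0.0:
        # Nondifferentiable case: soft thresholding.
        return math.copysign(max(abs(c) - h * g, 0.0), c) / a
    # Branch |t| <= lam*g, where phi'(t) = t/lam.
    t = c / (a + h / lam)
    if abs(t) <= lam * g:
        return t
    # Branch |t| > lam*g, where phi'(t) = g*sign(t) and sign(t) = sign(c).
    return (c - math.copysign(h * g, c)) / a


def projected_gauss_seidel(n, f, g, lam, lower, upper, tol=1e-12, max_sweeps=50000):
    """Minimize 0.5*u^T A u - b^T u + sum_k h*phi(u_k) subject to lower <= u <= upper,
    with A = (1/h)*tridiag(-1, 2, -1) and b_k = h*f(x_k), by exact coordinate
    minimization followed by projection onto the obstacles."""
    h = 1.0 / (n + 1)
    a = 2.0 / h  # diagonal entry of A
    x = [(k + 1) * h for k in range(n)]
    lo = [lower(xk) for xk in x]
    hi = [upper(xk) for xk in x]
    u = [min(max(0.0, l), m) for l, m in zip(lo, hi)]  # feasible initial guess
    for sweep in range(1, max_sweeps + 1):
        change = 0.0
        for k in range(n):
            left = u[k - 1] if k > 0 else 0.0
            right = u[k + 1] if k < n - 1 else 0.0
            c = h * f(x[k]) + (left + right) / h
            # A convex function of one variable restricted to an interval is
            # minimized by projecting its unconstrained minimizer onto it.
            t = min(max(node_minimizer(c, a, h, g, lam), lo[k]), hi[k])
            change = max(change, abs(t - u[k]))
            u[k] = t
        if change < tol:
            return u, sweep
    return u, max_sweeps


if __name__ == "__main__":
    n, g = 19, 1.0
    load = lambda x: 8.0 * math.sin(2.0 * math.pi * x)  # hypothetical data
    lower = lambda x: -0.05
    upper = lambda x: 0.05
    u0, _ = projected_gauss_seidel(n, load, g, 0.0, lower, upper)
    for lam in (1e-2, 1e-4, 1e-6):
        u_lam, sweeps = projected_gauss_seidel(n, load, g, lam, lower, upper)
        err = max(abs(p - q) for p, q in zip(u_lam, u0))
        print(f"lam={lam:.0e}  sweeps={sweeps}  max|u_lam - u_0| = {err:.2e}")
```

For this model problem the printed differences should shrink as `lam` decreases, since \(0\le g|t|-\phi_\lambda(t)\le\lambda g^2/2\) and the discrete energy is strongly convex. The number of sweeps grows as the mesh is refined, which is the weakness of plain Gauss–Seidel that multigrid methods are designed to remove.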

Acknowledgments

The author acknowledges the partial support of the network GDRI ECO Math for this paper.

Additional information

Communicated by: Stefan Volkwein

Appendix. Discrete approach of calculating the Moreau regularization

If the argument of φ is a scalar function, we consider the functional

\[
\varphi(v(x_1),\ldots,v(x_{n_p}))=\sum_{k=1}^{n_p}e_k\,\phi(v(x_k)),\tag{A.1}
\]

where \(e_k\), \(k=1,\ldots,n_p\), are nonnegative real constants and \(\phi\colon\mathbf{R}\to\overline{\mathbf{R}}\). Similarly to Proposition 4.1, we have the following result.

Proposition A.1

If \(\phi\colon\mathbf{R}\to\overline{\mathbf{R}}\) is a lower semicontinuous, proper, and convex function, then \(\varphi\colon\mathbf{R}^{n_p}\to\overline{\mathbf{R}}\) defined in (A.1) is a lower semicontinuous, proper, and convex functional.

Proof

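Clearly, since ϕ is proper and convex, φ is also a proper and convex functional. Now, let \(\{v_n\}_n\subset\mathbf{R}^{n_p}\), \(v_n=(v^n(x_1),\ldots,v^n(x_{n_p}))\), \(v=(v(x_1),\ldots,v(x_{n_p}))\in\mathbf{R}^{n_p}\), and \(\psi\in\mathbf{R}\) be such that \(v_n\to v\) in \(\mathbf{R}^{n_p}\) as \(n\to\infty\) and \(\varphi(v_n)\le\psi\) for all n. Then \(v^n(x_k)\to v(x_k)\) as \(n\to\infty\) for all \(k=1,\ldots,n_p\), and, since \(e_k\ge 0\), \(k=1,\ldots,n_p\), and ϕ is lower semicontinuous, we have

\[
\varphi(v)=\sum_{k=1}^{n_p}e_k\,\phi(v(x_k))\le\sum_{k=1}^{n_p}e_k\liminf_{n\to\infty}\phi(v^n(x_k))\le\liminf_{n\to\infty}\sum_{k=1}^{n_p}e_k\,\phi(v^n(x_k))=\liminf_{n\to\infty}\varphi(v_n)\le\psi,
\]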

i.e., φ is lower semicontinuous. □
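For a concrete picture of the discrete functional and its regularization, the sketch below assumes that (A.1) is the weighted sum \(\varphi(v)=\sum_{k=1}^{n_p}e_k\,\phi(v(x_k))\) with \(e_k>0\), takes the illustrative choice \(\phi(t)=g|t|\), and equips \(\mathbf{R}^{n_p}\) with the weighted norm \(\|w\|_e^2=\sum_k e_k w_k^2\), a discrete analogue of the \(L^2(\Omega)\) norm. These choices and all function names are assumptions made here. Under this norm the infimum defining \(\varphi_\lambda\) splits node by node, so \(\varphi_\lambda(u)=\sum_k e_k\,\phi_\lambda(u_k)\), and the derivative of \(\varphi_\lambda\) at u in a direction z is \(\sum_k e_k\,\phi'_\lambda(u_k)\,z_k\), with \(\phi'_\lambda(t)=(t-\mathrm{prox}_{\lambda\phi}(t))/\lambda\).

```python
import math


def prox_abs(t, g, lam):
    """Proximal point of lam*g*|.| at t (soft thresholding)."""
    return math.copysign(max(abs(t) - lam * g, 0.0), t)


def huber(t, g, lam):
    """Moreau regularization of phi(t) = g*|t| with parameter lam."""
    if abs(t) <= lam * g:
        return t * t / (2.0 * lam)
    return g * abs(t) - lam * g * g / 2.0


def huber_derivative(t, g, lam):
    """phi'_lam(t) = (t - prox(t))/lam, i.e. t/lam clipped to [-g, g]."""
    return (t - prox_abs(t, g, lam)) / lam


def envelope_objective(w, u, e, g, lam):
    """phi(w) + ||u - w||_e^2/(2*lam): the function minimized over w in the definition."""
    return sum(ek * (g * abs(wk) + (uk - wk) ** 2 / (2.0 * lam))
               for ek, wk, uk in zip(e, w, u))


def discrete_phi_lambda(u, e, g, lam):
    """Regularized discrete functional: sum_k e_k * phi_lam(u_k)."""
    return sum(ek * huber(uk, g, lam) for ek, uk in zip(e, u))


def discrete_phi_lambda_derivative(u, z, e, g, lam):
    """Derivative of discrete_phi_lambda at u in the direction z."""
    return sum(ek * huber_derivative(uk, g, lam) * zk for ek, uk, zk in zip(e, u, z))


if __name__ == "__main__":
    g, lam = 1.0, 0.1
    e = [0.25, 0.5, 0.25]  # hypothetical positive weights
    u = [0.03, -0.7, 0.2]
    p = [prox_abs(uk, g, lam) for uk in u]
    # The infimum is attained at the componentwise prox, so both numbers agree.
    print(discrete_phi_lambda(u, e, g, lam), envelope_objective(p, u, e, g, lam))
```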

Also, reasoning as in the case of \(L^2(\Omega)\), we get

and, clearly, the value of a subgradient \(\partial\varphi_{(u(x_1),\ldots,u(x_{n_p}))}\in\partial\varphi(u(x_1),\ldots,u(x_{n_p}))\) at a point \((z_1,\ldots,z_{n_p})\in\mathbf{R}^{n_p}\) is given by

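Similarly, if the argument of φ is a vector-valued function, we consider \(\varphi\colon(\mathbf{R}^d)^{n_p}\to\overline{\mathbf{R}}\) defined by

\[
\varphi(\boldsymbol{u}(x_1),\ldots,\boldsymbol{u}(x_{n_p}))=\sum_{k=1}^{n_p}e_k\,\phi(\boldsymbol{u}(x_k)),\tag{A.2}
\]

with nonnegative constants \(e_k\) and \(\phi\colon\mathbf{R}^d\to\overline{\mathbf{R}}\).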

Remark A.1

As in the scalar case above, one can prove that if \(\phi\colon\mathbf{R}^d\to\overline{\mathbf{R}}\) is a proper, lower semicontinuous, and convex functional that is continuous on its effective domain \(D(\phi)\), then φ defined in (A.2) is proper, lower semicontinuous, and convex.
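In the vectorial case, a typical friction-type choice is \(\phi(\boldsymbol{v})=g|\boldsymbol{v}|\) on \(\mathbf{R}^d\) (in Tresca's model the friction term has the form \(g|\boldsymbol{u}_\tau|\), with \(\boldsymbol{u}_\tau\) the tangential displacement). This ϕ is finite and continuous everywhere, so it satisfies the hypothesis of Remark A.1. The sketch below, with hypothetical function names, computes its proximal point (block soft thresholding), its Moreau regularization, which equals \(|\boldsymbol{u}|^2/(2\lambda)\) if \(|\boldsymbol{u}|\le\lambda g\) and \(g|\boldsymbol{u}|-\lambda g^2/2\) otherwise, and the gradient \((\boldsymbol{u}-\mathrm{prox}_{\lambda\phi}(\boldsymbol{u}))/\lambda\). It illustrates this particular choice of ϕ and is not meant to reproduce the formulas of Example 3.

```python
import math


def norm(v):
    """Euclidean norm on R^d."""
    return math.sqrt(sum(c * c for c in v))


def prox_norm(u, g, lam):
    """Proximal point of lam*g*|.| on R^d: shrink u toward 0 by lam*g."""
    r = norm(u)
    if r <= lam * g:
        return [0.0] * len(u)
    scale = 1.0 - lam * g / r
    return [scale * c for c in u]


def envelope_norm(u, g, lam):
    """Moreau regularization of phi(v) = g*|v| evaluated at u."""
    r = norm(u)
    if r <= lam * g:
        return r * r / (2.0 * lam)
    return g * r - lam * g * g / 2.0


def envelope_norm_gradient(u, g, lam):
    """Gradient (u - prox(u))/lam: u/lam inside the ball |u| <= lam*g, g*u/|u| outside."""
    p = prox_norm(u, g, lam)
    return [(c - q) / lam for c, q in zip(u, p)]


if __name__ == "__main__":
    g, lam = 0.5, 0.2
    for u in ([0.03, -0.04], [3.0, 4.0]):  # inside and outside the ball |u| <= lam*g
        print(u, envelope_norm(u, g, lam), envelope_norm_gradient(u, g, lam))
```

The gradient never has length larger than g, which is why the regularized friction term behaves like a bounded, Lipschitz-continuous nonlinearity with constant \(1/\lambda\).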

Also, we have

and the value of a subgradient \(\partial \varphi _{(\boldsymbol {u}(x_{1}),\ldots ,\boldsymbol {u}(x_{n_{p}}))}\in \partial \varphi (\boldsymbol {u}(x_{1}),\ldots ,\boldsymbol {u}(x_{n_{p}}))\) at a point \((\boldsymbol {z}_{1},\ldots ,\boldsymbol {z}_{n_{p}})\in (\mathbf {R}^{d})^{n_{p}}\) is written as

For completeness, we now write the functionals \(\varphi_\lambda\) and \(\varphi'_\lambda\) for the three examples of Section 4 when φ is given as in (A.1) or (A.2).

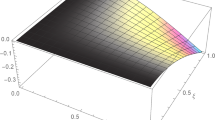

If φ in Example 1 is written as in (A.1), then

where

for \(k=1,\ldots,n_p\). Also,

In the case of Example 2, \(\varphi'_\lambda(u)\) has the same form as in the previous example, with

for \(k=1,\ldots,n_p\), and

Finally, if φ in Example 3 is written as in (A.1), i.e.,

then

where

for \(k=1,\ldots,n_p\), and

Cite this article

Badea, L. On the convergence of a multigrid method for Moreau-regularized variational inequalities of the second kind. Adv Comput Math 45, 2807–2832 (2019). https://doi.org/10.1007/s10444-019-09709-6

DOI: https://doi.org/10.1007/s10444-019-09709-6

Keywords

- Domain decomposition methods

- Multigrid methods

- Variational inequalities of the second kind

- Moreau regularization

- Nonlinear obstacle problems